Invariant central difference filter method for autonomous mobile robot visual SLAM

A technique for robot vision and autonomous movement, applied in the field of information processing for aerospace systems. It addresses the problems of low computing efficiency and high computing complexity, and achieves high computing efficiency, improved computing accuracy, and fast computing speed.

AI Technical Summary

Problems solved by technology

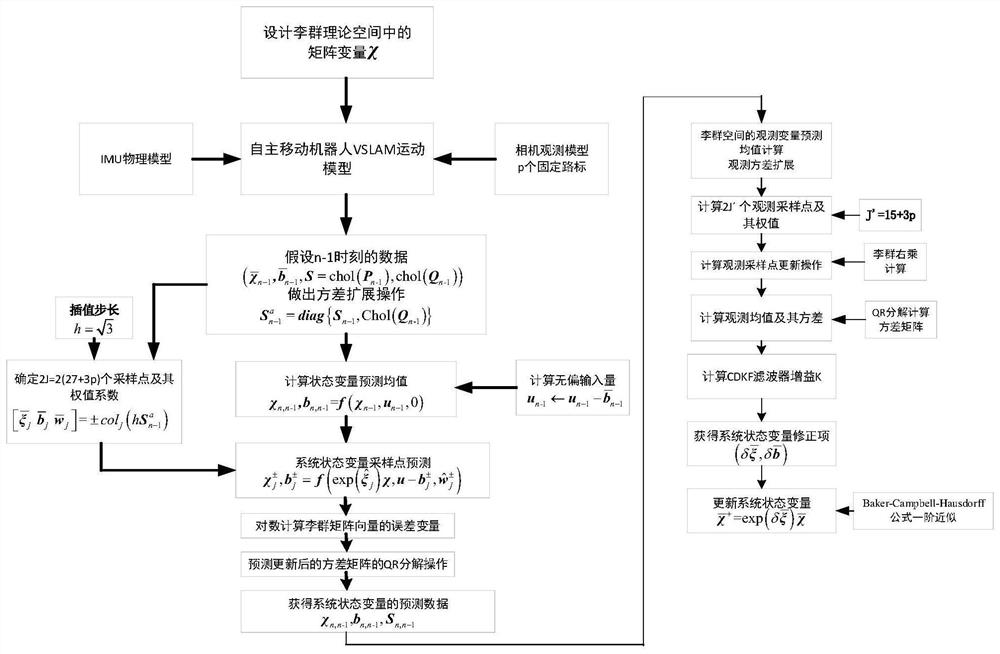

Method used

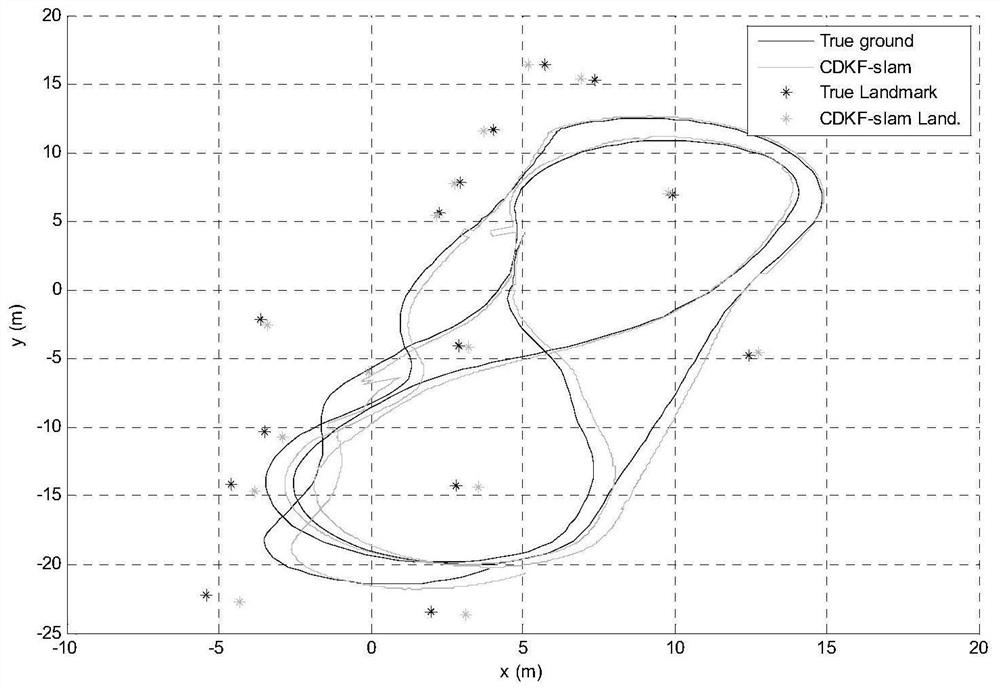

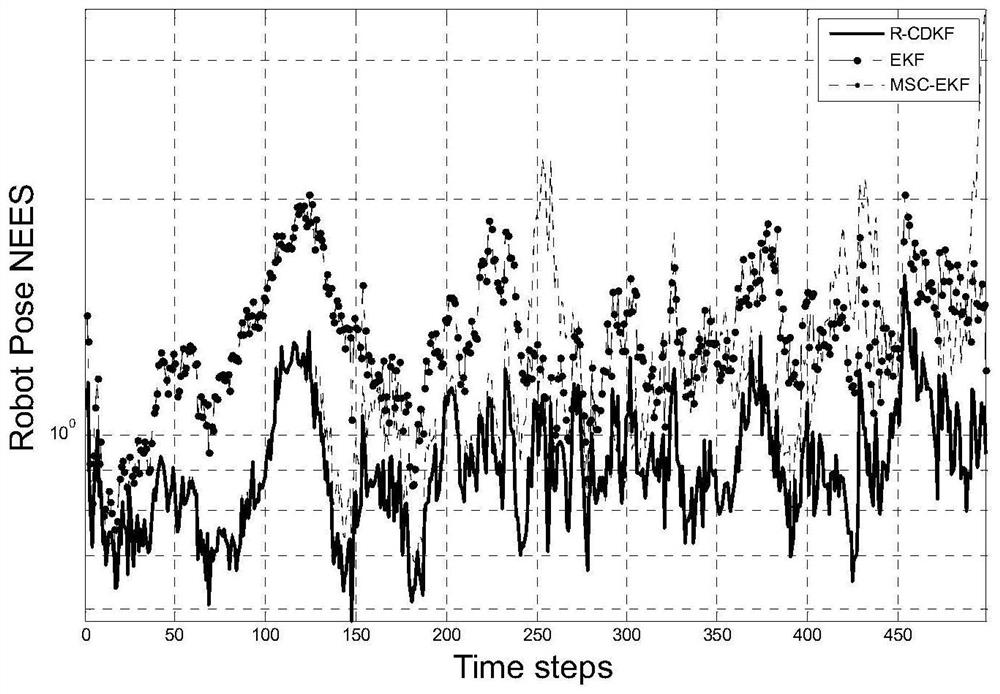

Examples

Specific Embodiment

[0119] Specific embodiment: consider the VSLAM system of an autonomous mobile robot. The robot is equipped with a monocular camera and an inertial measurement unit (IMU). The IMU provides the 3-dimensional attitude, velocity, and position vector information of the robot system, and the monocular camera observes p fixed landmark positions, yielding p observation equations. The VSLAM system model of the autonomous mobile robot can therefore be expressed as:

[0120]

[0121] Here the VSLAM system state is a Lie group matrix variable composed of the 3-dimensional attitude, velocity, and position vectors; together with the remaining 3-dimensional gyroscope and accelerometer bias vectors, it forms a mixed state vector. The 3-dimensional gyroscope and accelerometer outputs ω and a in the motion model equation form the control input variable u = [ω^T, a^T]^T. The system noise is noise vector w_n is the Gaussia...
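The mixed state described in [0121] can be illustrated with a minimal sketch. The source text does not show the matrix layout, so the 5x5 embedding below (attitude R, velocity v, and position p packed into one matrix, a form commonly used in the invariant filtering literature) is an assumption, as are the 6-dimensional bias ordering and all function names (`pack_state`, `inverse_state`, `control_input`, `rot_z`), which are hypothetical.

```python
import numpy as np

def rot_z(theta):
    """Rotation about the z-axis, used only to build an example attitude."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def pack_state(R, v, p):
    """Pack attitude R (3x3), velocity v (3,), position p (3,) into a 5x5 matrix.

    Assumed layout: [[R, v, p], [0, 1, 0], [0, 0, 1]].
    """
    chi = np.eye(5)
    chi[:3, :3] = R
    chi[:3, 3] = v
    chi[:3, 4] = p
    return chi

def inverse_state(chi):
    """Closed-form group inverse for the assumed 5x5 layout."""
    R, v, p = chi[:3, :3], chi[:3, 3], chi[:3, 4]
    return pack_state(R.T, -R.T @ v, -R.T @ p)

def control_input(omega, a):
    """Stack gyroscope and accelerometer outputs: u = [omega^T, a^T]^T."""
    return np.concatenate([omega, a])

# Lie group part of the state (attitude, velocity, position).
chi = pack_state(rot_z(0.3), np.array([1.0, 0.0, 0.0]), np.array([0.0, 2.0, 0.5]))
# Vector part of the state: gyroscope and accelerometer biases (assumed order).
bias = np.zeros(6)
# The mixed state is the pair (matrix variable, bias vector).
mixed_state = (chi, bias)
# Control input built from example IMU readings.
u = control_input(np.array([0.0, 0.0, 0.1]), np.array([0.0, 0.0, 9.81]))
```

Keeping the Lie group part and the bias part as a pair, rather than flattening both into one vector, mirrors the "mixed state" wording: the matrix part composes by matrix multiplication, while the biases add like ordinary vectors.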
