PointNet-based recognition method for complex scenes

A recognition method for complex scenes, applied in the field of optimizing PointNet's recognition of complex scenes. It addresses problems such as the inability to resolve the fine details of objects.


Embodiment Construction

[0017] The present invention is further described below with reference to the drawings and embodiments.

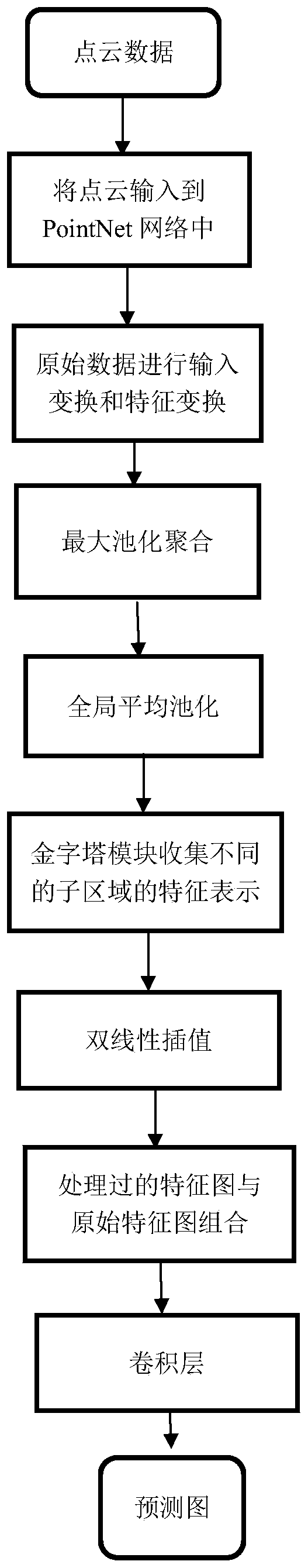

[0018] As shown in Figure 1, the present invention implements a method for processing 3D recognition tasks in three-dimensional space, including object classification, part segmentation and semantic segmentation. The specific implementation steps are as follows:

[0019] S1. Input data: a point cloud of n points, represented as an n×3 array of three-dimensional (x, y, z) coordinates, is used as input.
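As an illustration of the S1 input format, the sketch below validates raw coordinates and packs them into an n×3 point cloud (one (x, y, z) row per point). It is a minimal, dependency-free sketch; the function name `to_point_cloud` and the tuple-based representation are assumptions for illustration, not part of the patent, and a practical pipeline would more likely use an array library.

```python
from typing import List, Sequence, Tuple

# One point = (x, y, z); a point cloud is a list of n such rows, i.e. an n x 3 array.
Point = Tuple[float, float, float]


def to_point_cloud(coords: Sequence[Sequence[float]]) -> List[Point]:
    """Hypothetical helper: validate raw coordinates and return an n x 3 point cloud."""
    cloud: List[Point] = []
    for i, row in enumerate(coords):
        if len(row) != 3:
            raise ValueError(f"point {i} has {len(row)} coordinates, expected 3 (x, y, z)")
        x, y, z = (float(v) for v in row)
        cloud.append((x, y, z))
    if not cloud:
        raise ValueError("point cloud must contain at least one point")
    return cloud


raw = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
cloud = to_point_cloud(raw)
print(len(cloud), len(cloud[0]))  # n points, 3 coordinates each
```

The rows carry no meaningful order: the same scene could be listed in any permutation, which is why the description below refers to the point cloud as unordered.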

[0020] S2. A mini-network (T-Net) predicts an effective transformation matrix, and this transformation is applied directly to the coordinates of the input points. This alters the input by adjusting the point cloud (an unordered set of point vectors) in space and rotating it to an orientation more conducive to segmentation. Specifically, the input point cloud data first undergoes an affine transformation by T-Net, that is, the original data passes through a 3D space transformation matrix...
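The core of S2, applying a predicted 3×3 matrix T to every input point so that the n×3 array X becomes X' = X·T, can be sketched as follows. This is a minimal sketch under stated assumptions: the learned T-Net is replaced by a hypothetical stand-in, `hypothetical_tnet`, that always returns a fixed 90° rotation about the z axis, and the row-vector convention p' = p·T is assumed. A real T-Net would regress the nine matrix entries from the whole point cloud.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Matrix3 = List[List[float]]


def hypothetical_tnet(cloud: Sequence[Point]) -> Matrix3:
    """Stand-in for the learned T-Net (hypothetical, ignores its input).

    Returns T = R^T for a 90-degree rotation R about the z axis, so that
    multiplying a row vector p by T rotates it: p' = p @ T.
    """
    theta = math.pi / 2
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s, 0.0],
            [-s, c, 0.0],
            [0.0, 0.0, 1.0]]


def apply_transform(cloud: Sequence[Point], t: Matrix3) -> List[Point]:
    """Apply the 3x3 matrix to each point's coordinates: X' = X @ T (n x 3 result)."""
    return [
        tuple(sum(p[k] * t[k][j] for k in range(3)) for j in range(3))
        for p in cloud
    ]


cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0)]
t = hypothetical_tnet(cloud)
aligned = apply_transform(cloud, t)
print([tuple(round(v, 6) for v in p) for p in aligned])
```

Because the same matrix multiplies every row independently, the transform does not depend on the order in which the points are listed, which suits the unordered point cloud described in S2.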
