Pedestrian motion mode recognition method based on deep hybrid model

A motion mode recognition and hybrid model technology, applied in the fields of character and pattern recognition, biological neural network models, and instruments, that reduces the amount of calculation, improves recognition accuracy, and offers high reliability.


Embodiment

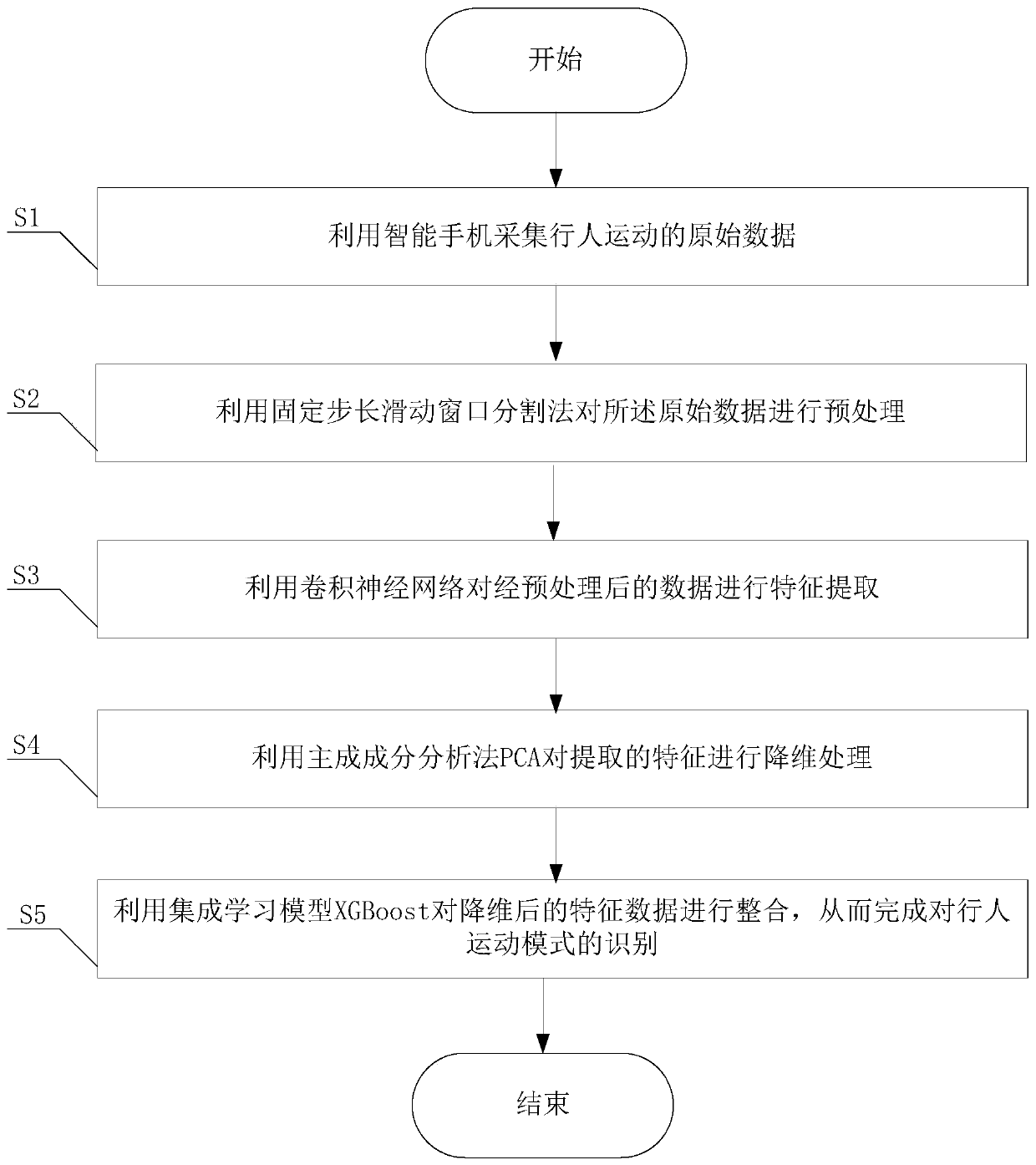

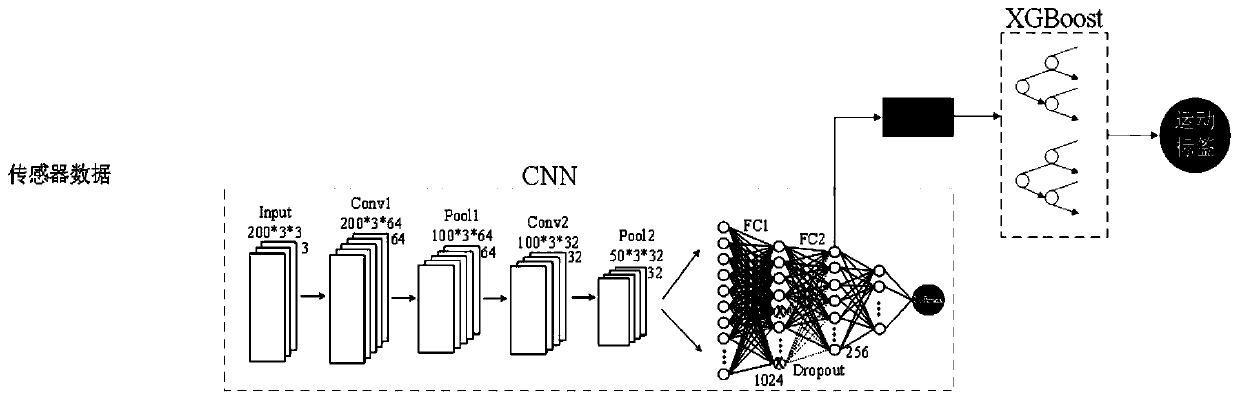

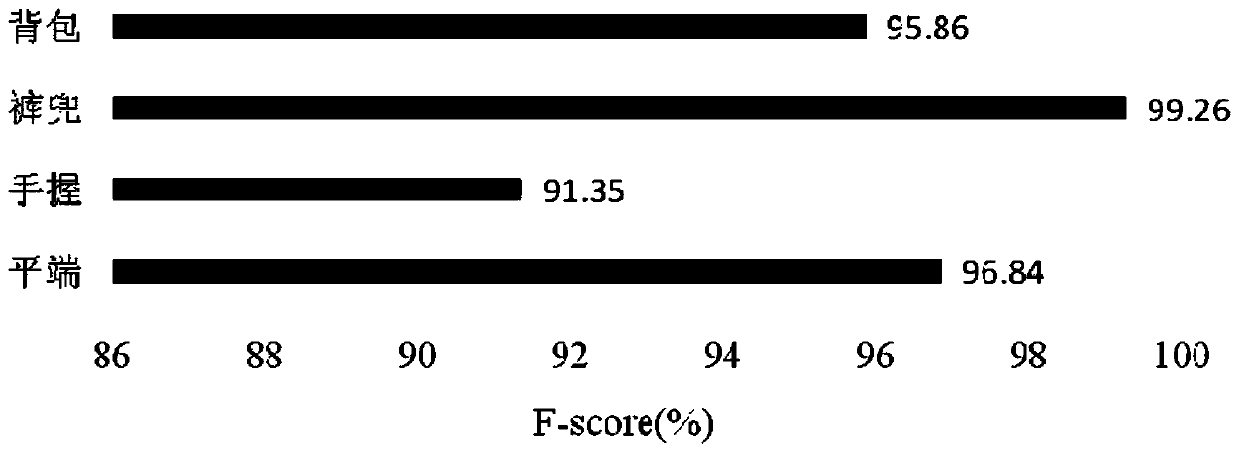

[0058] As shown in Figure 1, the present invention provides a pedestrian motion mode recognition method based on a deep hybrid model. As shown in Figure 2, the network is a hybrid model that combines a convolutional neural network (CNN) with XGBoost. The CNN serves as a trainable feature extractor that automatically obtains features from the input, and the XGBoost learning model serves as the top-level recognizer of the network and generates the results, which effectively guarantees high reliability of feature extraction and classification. Based on the acceleration sensor, gyroscope, and magnetometer built into a smartphone, the present invention adopts the CNN deep learning framework to mine rich, high-quality features from the input information, and then applies principal component analysis (PCA) to the extracted features. The features are reduced i...
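The data flow described in this paragraph (sensor windows, convolutional feature extraction, then PCA) can be illustrated with a minimal NumPy sketch. It is not the patented implementation: the window shape (9 channels, assuming 3 axes each for the accelerometer, gyroscope, and magnetometer, over 128 samples), the single untrained convolution layer with random kernels, the filter count, and the number of retained components are all hypothetical choices made for illustration. The sketch also assumes that PCA is used here for dimensionality reduction, which the truncated text suggests but does not fully state.

```python
import numpy as np


def conv1d_features(windows, kernels):
    """Stand-in for the CNN feature extractor (assumption: one untrained layer).

    Applies a valid 1-D convolution per filter, then ReLU and global max
    pooling, giving one feature per filter for each window.
    windows: (n_windows, n_channels, length)
    kernels: (n_filters, n_channels, width)
    """
    n, channels, length = windows.shape
    n_filters, k_channels, width = kernels.shape
    assert channels == k_channels, "kernel channels must match sensor channels"
    out_len = length - width + 1
    feats = np.empty((n, n_filters))
    for f in range(n_filters):
        resp = np.zeros((n, out_len))
        for t in range(width):
            # Accumulate the contribution of kernel tap t over all channels.
            resp += np.einsum(
                "ncl,c->nl", windows[:, :, t:t + out_len], kernels[f, :, t]
            )
        feats[:, f] = np.maximum(resp, 0.0).max(axis=1)
    return feats


def pca_reduce(features, n_components):
    """Project features onto their top principal components via SVD."""
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]
    explained = (s[:n_components] ** 2).sum() / (s ** 2).sum()
    return centered @ components.T, components, mean, explained


# Synthetic data in place of real smartphone recordings (hypothetical shapes).
rng = np.random.default_rng(0)
windows = rng.normal(size=(200, 9, 128))   # 200 windows, 9 channels, 128 samples
kernels = rng.normal(size=(32, 9, 5))      # 32 random filters of width 5

features = conv1d_features(windows, kernels)
reduced, components, mean, explained = pca_reduce(features, n_components=8)
print(features.shape, reduced.shape)
```

In a full pipeline, the reduced feature matrix would be passed to the top-level recognizer, for example an `xgboost.XGBClassifier` trained on labeled motion modes, and the convolution kernels would be learned rather than drawn at random.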

