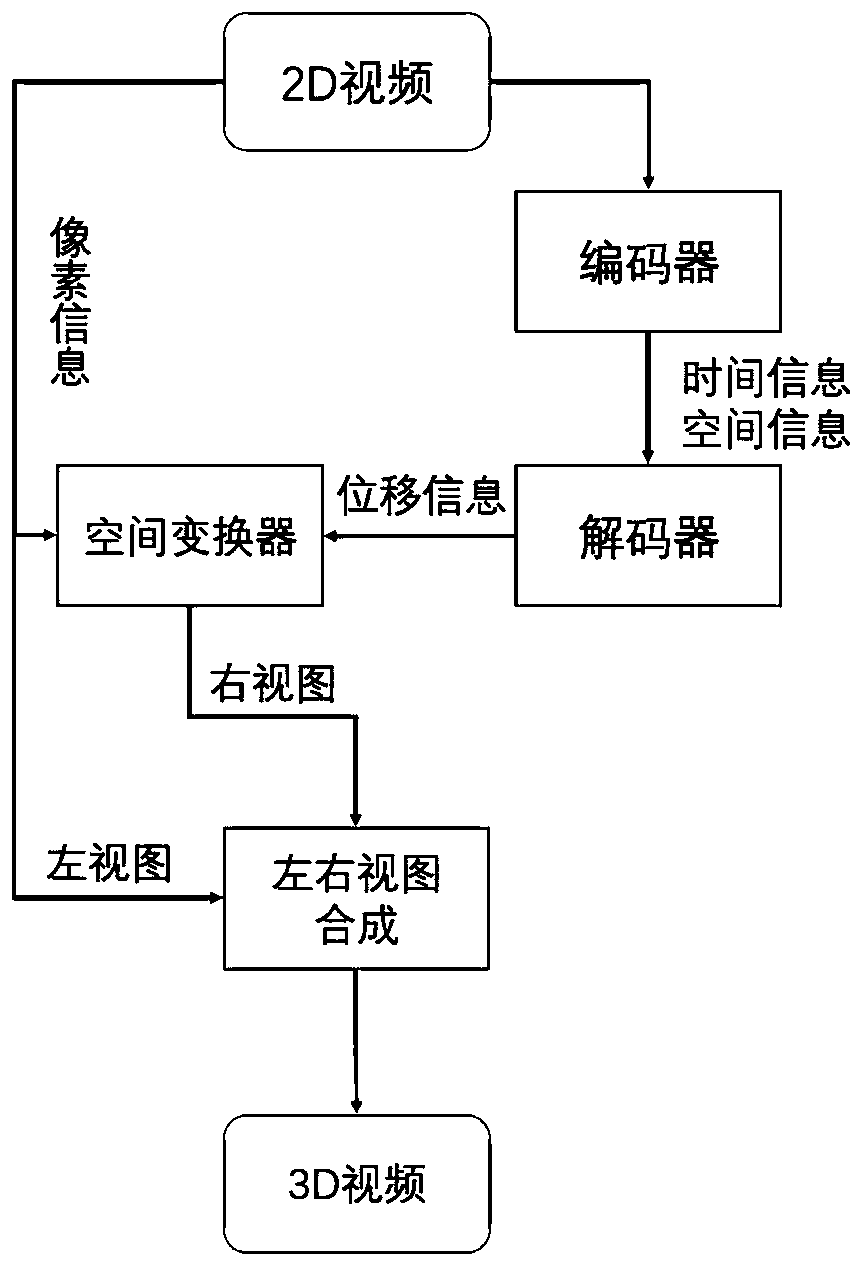

Fully automatic 2D-to-3D video conversion method based on spatiotemporal information modeling

A fully automatic conversion method in the field of 2D-to-3D video conversion, which reduces the amount of computation, improves conversion efficiency, and improves conversion quality.

Embodiment Construction

[0026] A fully automatic method for converting 2D video to 3D video based on spatio-temporal information modeling, comprising the following steps:

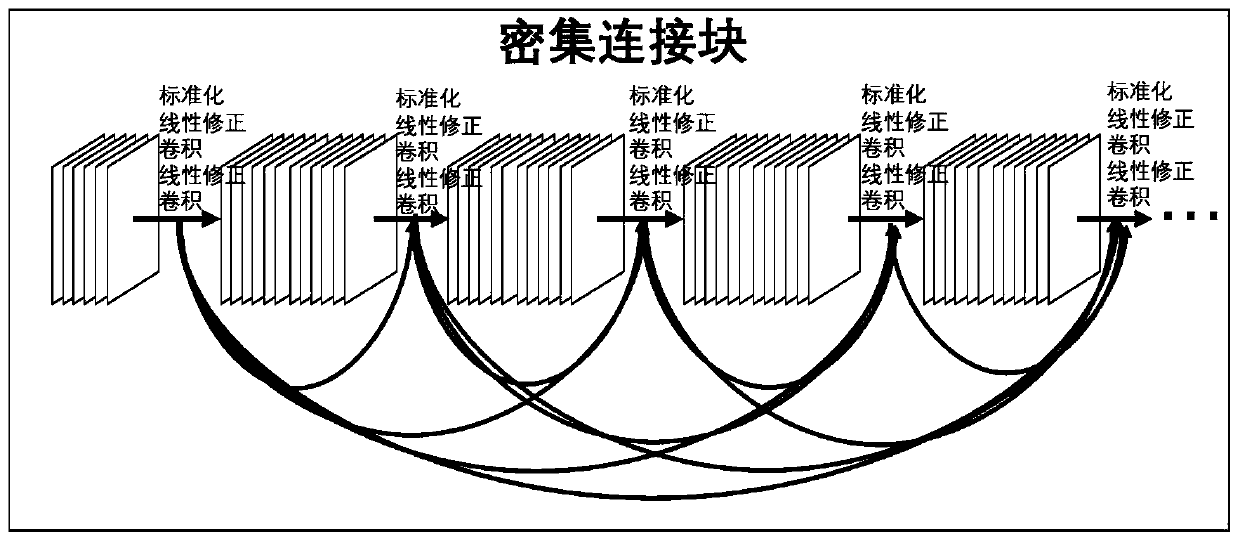

[0027] Step 1: Use the encoder network to extract temporal information $f_t$ and spatial information $f_s$ from multiple video frames. The encoder network is a densely connected neural network in which the 2D convolutions are replaced with 3D convolutions;
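The source does not give kernel sizes, padding, strides, or channel counts for the 3D convolutions. The sketch below therefore assumes a single-channel clip, a single kernel, stride 1 and no padding, and computes cross-correlation as deep-learning frameworks do; the function name `conv3d_valid` is hypothetical. It only illustrates why a 3D kernel, which also slides along the time axis, can respond to changes between frames (temporal information), whereas a 2D convolution applied frame by frame cannot.

```python
from typing import List

Clip = List[List[List[float]]]  # indexed as clip[t][y][x]: frames x rows x columns


def conv3d_valid(clip: Clip, kernel: Clip) -> Clip:
    """Single-channel 3D cross-correlation with stride 1 and no padding."""
    T, H, W = len(clip), len(clip[0]), len(clip[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out_t, out_h, out_w = T - kt + 1, H - kh + 1, W - kw + 1
    out = [[[0.0] * out_w for _ in range(out_h)] for _ in range(out_t)]
    for t in range(out_t):
        for y in range(out_h):
            for x in range(out_w):
                acc = 0.0
                # The kernel spans kt consecutive frames, so each output
                # value mixes pixels from several time steps.
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += kernel[dt][dy][dx] * clip[t + dt][y + dy][x + dx]
                out[t][y][x] = acc
    return out


if __name__ == "__main__":
    # Three 2x2 frames whose brightness changes over time.
    clip = [[[0.0, 0.0], [0.0, 0.0]],
            [[1.0, 1.0], [1.0, 1.0]],
            [[3.0, 3.0], [3.0, 3.0]]]
    # A 2x1x1 kernel computing frame[t+1] - frame[t]: a purely temporal filter.
    diff_kernel = [[[-1.0]], [[1.0]]]
    print(conv3d_valid(clip, diff_kernel))
    # [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
```

A 1×1 spatial, 2-frame kernel has no 2D counterpart: with kt = 1 the same loop reduces to an ordinary per-frame 2D convolution and cannot see the change in brightness.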

[0028] 1.1 Densely connected neural network

[0029] Suppose the input is an image $X_0$ that passes through an $L$-layer neural network. In a densely connected neural network, the input of the $j$-th layer depends not only on the output of layer $j-1$ but on the outputs of all previous layers:

[0030] $$X_j = H_j([X_0, X_1, \ldots, X_{j-1}])$$

[0031] where $X_j$ is the output of the $j$-th layer of the neural network, $[X_0, X_1, \ldots, X_{j-1}]$ denotes the concatenation of the outputs of all preceding layers, and $H_j(\cdot)$ is the nonlinear transformation of the $j$-th layer.
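The equation $X_j = H_j([X_0, \ldots, X_{j-1}])$ can be sketched as the loop below. This is a minimal illustration under stated assumptions, not the patent's implementation: each feature map is reduced to one value per channel (spatial and temporal dimensions omitted), the concatenation is along the channel axis, and `toy_layer` is a hypothetical stand-in for $H_j$ that emits a fixed number of channels $k$ (called the "growth rate" in the DenseNet literature, a term the source does not use). The point it shows is that the input width of layer $j$ grows as $k_0 + (j-1)k$, where $k_0$ is the channel count of $X_0$.

```python
from typing import Callable, List

Features = List[float]  # one value per channel; spatial/temporal dims omitted


def dense_block(x0: Features,
                layers: List[Callable[[Features], Features]]) -> List[Features]:
    """Return [X_0, X_1, ..., X_L] with X_j = H_j([X_0, ..., X_{j-1}])."""
    outputs = [x0]
    for h_j in layers:
        # [X_0, ..., X_{j-1}]: channel-wise concatenation of ALL earlier outputs,
        # not just X_{j-1} as in a plain feed-forward network.
        concatenated = [c for x in outputs for c in x]
        outputs.append(h_j(concatenated))
    return outputs


def toy_layer(growth: int, seen_widths: List[int]) -> Callable[[Features], Features]:
    """Hypothetical stand-in for H_j: records its input width and emits
    `growth` channels, each equal to the ReLU of the summed input."""
    def h(inp: Features) -> Features:
        seen_widths.append(len(inp))
        return [max(0.0, sum(inp))] * growth
    return h


if __name__ == "__main__":
    widths: List[int] = []
    layers = [toy_layer(2, widths) for _ in range(4)]  # L = 4 layers, k = 2 (assumed)
    outs = dense_block([1.0, 0.0, 0.0], layers)        # X_0 has k0 = 3 channels
    print(widths)    # [3, 5, 7, 9], i.e. k0 + (j - 1) * k
    print(outs[-1])  # [27.0, 27.0]
```

In the encoder described above, each $H_j$ would contain 3D convolutions, so the concatenated maps carry both spatial and temporal features.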

[0032] A densely connected neural n...
