A Lip Recognition Method and Device Based on Dual Discriminator Generative Adversarial Network

A lip recognition technique based on a dual-discriminator generative adversarial network, applied in the fields of biological neural network models, character and pattern recognition, and instruments, with the effects of improving conversion quality, reducing the angle range, and improving accuracy.

Embodiment Construction

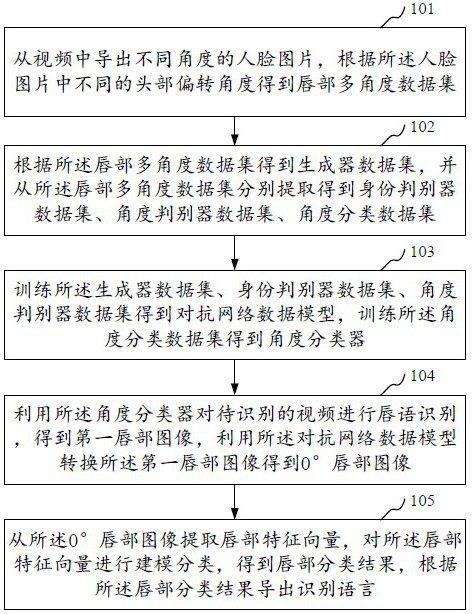

[0059] In one embodiment, as shown in Figure 1, a lip recognition method based on a dual-discriminator generative adversarial network is provided, including the following steps:

[0060] Step 101, extracting face images at different angles from a video, and obtaining a multi-angle lip data set according to the different head deflection angles in the face images;

[0061] Step 102, obtaining a generator data set from the multi-angle lip data set, and extracting an identity discriminator data set, an angle discriminator data set, and an angle classification data set from the multi-angle lip data set;

[0062] Step 103, training on the generator data set, the identity discriminator data set, and the angle discriminator data set to obtain an adversarial network data model, and training on the angle classification data set to obtain an angle classifier;

[0063] Step 104, using the angle classifier to perform lip recognition on the video to be recognized to obtain a first lip im...
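The data preparation in Steps 101 and 102 and the two-discriminator objective in Step 103 can be illustrated with a minimal Python sketch. Everything specific here is an assumption for illustration and does not come from the patent text: the angle bin edges, the `LipSample` fields, the pairing of a deflected lip image with a near-frontal image of the same speaker and frame (which presumes synchronized multi-view recordings), the contents of each derived data set, and the weighted log loss in `generator_loss`. Images are represented by placeholder strings rather than pixel data.

```python
import math
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical bin edges in degrees; the source does not state the angle ranges.
ANGLE_BINS = [(0, 15), (15, 45), (45, 90)]


@dataclass(frozen=True)
class LipSample:
    speaker_id: str  # speaker identity (hypothetical field)
    frame_idx: int   # frame index within the video (hypothetical field)
    yaw_deg: float   # estimated head deflection angle
    image: str       # placeholder for the cropped lip image, e.g. a file path


def angle_bin(yaw_deg):
    """Map a head deflection angle to a bin index, ignoring its sign."""
    yaw = abs(yaw_deg)
    for idx, (lo, hi) in enumerate(ANGLE_BINS):
        if lo <= yaw < hi:
            return idx
    return len(ANGLE_BINS) - 1  # clamp angles at or beyond the last edge


def build_multi_angle_dataset(samples):
    """Step 101 (sketch): group lip crops by head deflection angle bin."""
    buckets = defaultdict(list)
    for s in samples:
        buckets[angle_bin(s.yaw_deg)].append(s)
    return dict(buckets)


def derive_datasets(buckets):
    """Step 102 (sketch): derive the four data sets. Their contents are assumed."""
    # Index near-frontal samples (bin 0) by speaker and frame for pairing.
    frontal = {(s.speaker_id, s.frame_idx): s for s in buckets.get(0, [])}
    generator, identity, angle_disc, angle_cls = [], [], [], []
    for bin_idx, group in buckets.items():
        for s in group:
            angle_cls.append((s.image, bin_idx))        # image -> angle class
            angle_disc.append((s.image, bin_idx == 0))  # image -> is near-frontal
            identity.append((s.image, s.speaker_id))    # image -> speaker
            target = frontal.get((s.speaker_id, s.frame_idx))
            if bin_idx != 0 and target is not None:
                generator.append((s.image, target.image))  # deflected -> frontal
    return generator, identity, angle_disc, angle_cls


def generator_loss(p_identity, p_angle, w_identity=1.0, w_angle=1.0):
    """Step 103 (sketch): generator loss against two discriminators.

    p_identity and p_angle are the probabilities, in (0, 1], that the identity
    and angle discriminators accept a generated image. The weights are
    hypothetical hyperparameters.
    """
    return -(w_identity * math.log(p_identity) + w_angle * math.log(p_angle))
```

In this sketch the generator is penalized whenever either discriminator rejects its output, which is one common way to combine two adversarial signals; the patent's actual loss formulation is not given in the text above.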
