Convolutional neural network weight compression method and device based on ARM architecture FPGA hardware system

A convolutional neural network and ARM architecture technology, applied in the field of neural network acceleration. It addresses problems such as poor implementation results, failure to take hardware characteristics into account, and an increased burden on the computing system, and it achieves the effects of improving computing efficiency, optimizing storage, and reducing the number of multiplications.

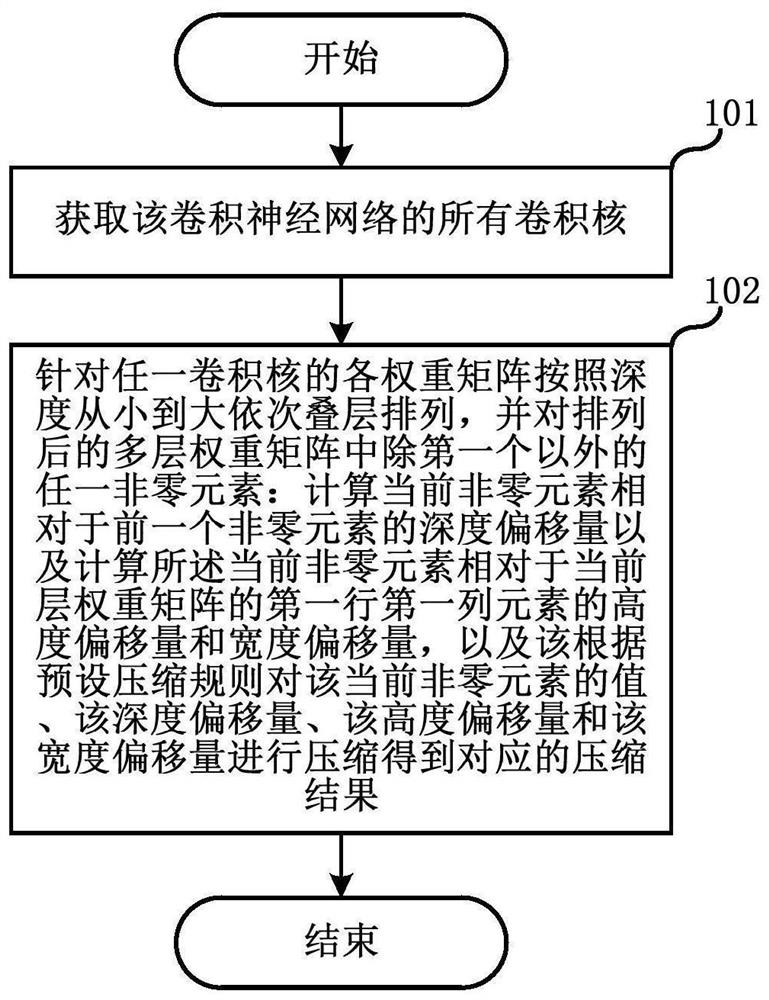

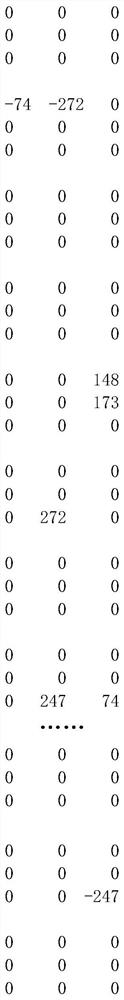

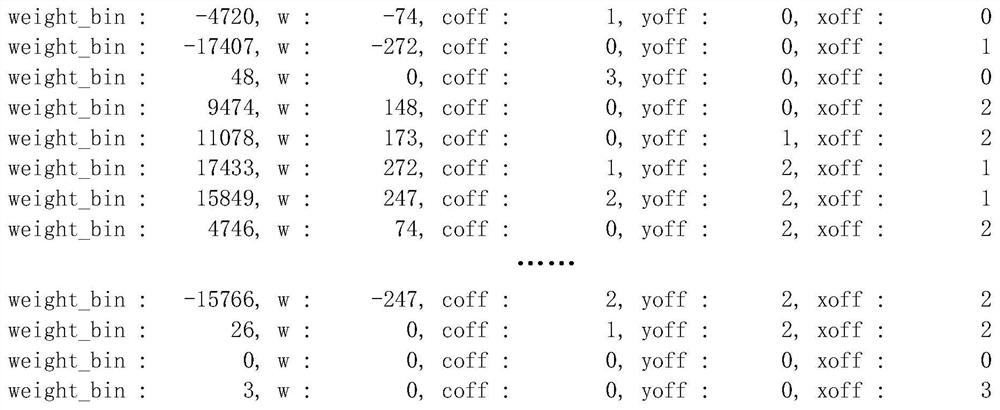

Embodiment Construction

[0040] In the following description, many technical details are set out so that readers can better understand the application. However, those skilled in the art will understand that the technical solutions claimed in this application can be realized even without these technical details, and with various changes and modifications based on the following embodiments.

[0041] Explanation of some concepts:

[0042] Sparse Matrix: A matrix in which the number of non-zero elements is much smaller than the total number of elements, and in which the non-zero elements are irregularly distributed. A matrix is generally called sparse when the ratio of the number of non-zero elements to the total number of elements is less than or equal to 0.05.
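
The 0.05 criterion in [0042] can be illustrated with a minimal Python sketch. The function names `nonzero_ratio` and `is_sparse` and the sample `weights` matrix are hypothetical and chosen only for illustration; they do not come from the application.

```python
def nonzero_ratio(matrix):
    """Return (number of non-zero elements) / (total number of elements)."""
    total = sum(len(row) for row in matrix)
    if total == 0:
        raise ValueError("matrix has no elements")
    nonzero = sum(1 for row in matrix for value in row if value != 0)
    return nonzero / total


def is_sparse(matrix, threshold=0.05):
    """Apply the criterion from [0042]: ratio of non-zeros <= threshold."""
    return nonzero_ratio(matrix) <= threshold


# Hypothetical 10 x 10 weight matrix with 4 non-zero entries (ratio 4/100).
weights = [[0.0] * 10 for _ in range(10)]
weights[0][3] = 0.7
weights[2][8] = -1.2
weights[5][5] = 0.4
weights[9][1] = 2.0

print(nonzero_ratio(weights))  # ratio of non-zero elements
print(is_sparse(weights))      # whether the 0.05 criterion is met
```

This checks only the ratio; the "irregular distribution" part of the definition is not tested here.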

[0043] Huffman Encoding: A greedy entropy-coding (weight-based coding) algorithm for lossless data compression.
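
As a generic illustration of the concept in [0043], the sketch below builds a Huffman code table by greedily merging the two least frequent entries. It is a textbook construction, not the procedure of this application, which the excerpt does not describe. Applying it to a list of quantized weight values is an assumption made for the example, and the names `huffman_code`, `encode`, `decode`, and `quantized` are hypothetical.

```python
import heapq
from collections import Counter


def huffman_code(symbols):
    """Build a {symbol: bitstring} table from symbol frequencies."""
    freq = Counter(symbols)
    if not freq:
        return {}
    if len(freq) == 1:
        # A single distinct symbol still needs a one-bit code.
        return {next(iter(freq)): "0"}
    # Heap entries: (weight, unique tie-breaker, partial code table).
    heap = [(w, i, {s: ""}) for i, (s, w) in enumerate(freq.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Greedy step: merge the two lowest-weight subtrees.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]


def encode(symbols, table):
    """Concatenate the code of each symbol into one bitstring."""
    return "".join(table[s] for s in symbols)


def decode(bits, table):
    """Recover the symbols; valid because Huffman codes are prefix-free."""
    inverse = {code: sym for sym, code in table.items()}
    out, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return out


# Hypothetical quantized weights: zeros dominate, as in a sparse layer.
quantized = [0, 0, 0, 0, 0, 0, 1, 1, -1, 2]
table = huffman_code(quantized)
bits = encode(quantized, table)
print(table)
print(len(bits), "bits for", len(quantized), "symbols")
print(decode(bits, table) == quantized)  # lossless round trip
```

Frequent values receive shorter codes, so a weight set dominated by one value (such as zero) compresses well, and decoding recovers the input exactly.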

[0044] In order to make the purpose, ...
