Method for measuring robot repeated positioning accuracy based on a plane fitted with line structured light

A technique for measuring robot repeated positioning accuracy with line structured light, applied in the field of computer vision. It addresses problems such as noise contamination and achieves non-contact measurement, good anti-interference performance, and fast measurement speed.

Embodiment Construction

[0049] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

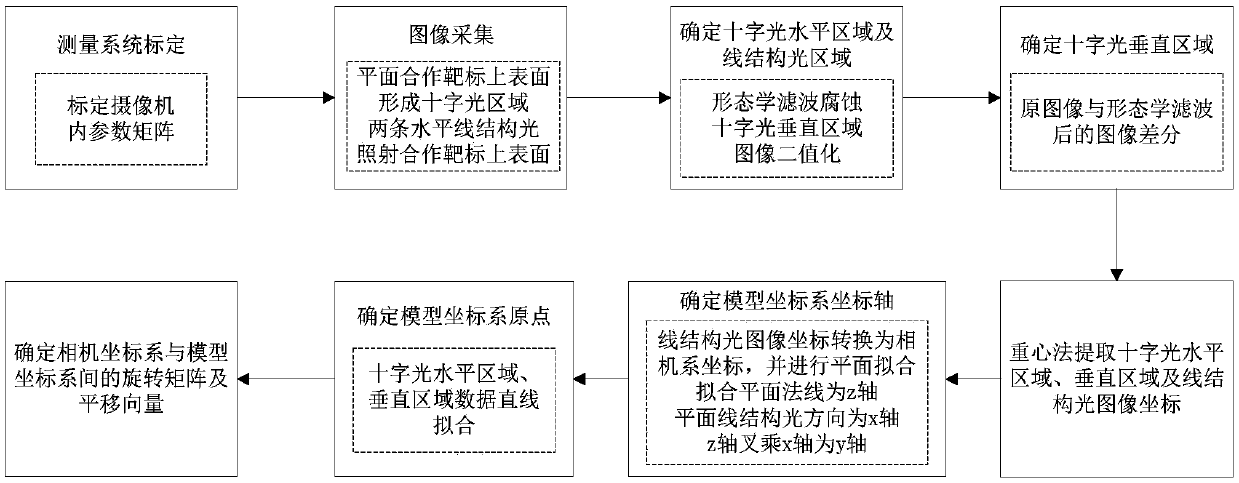

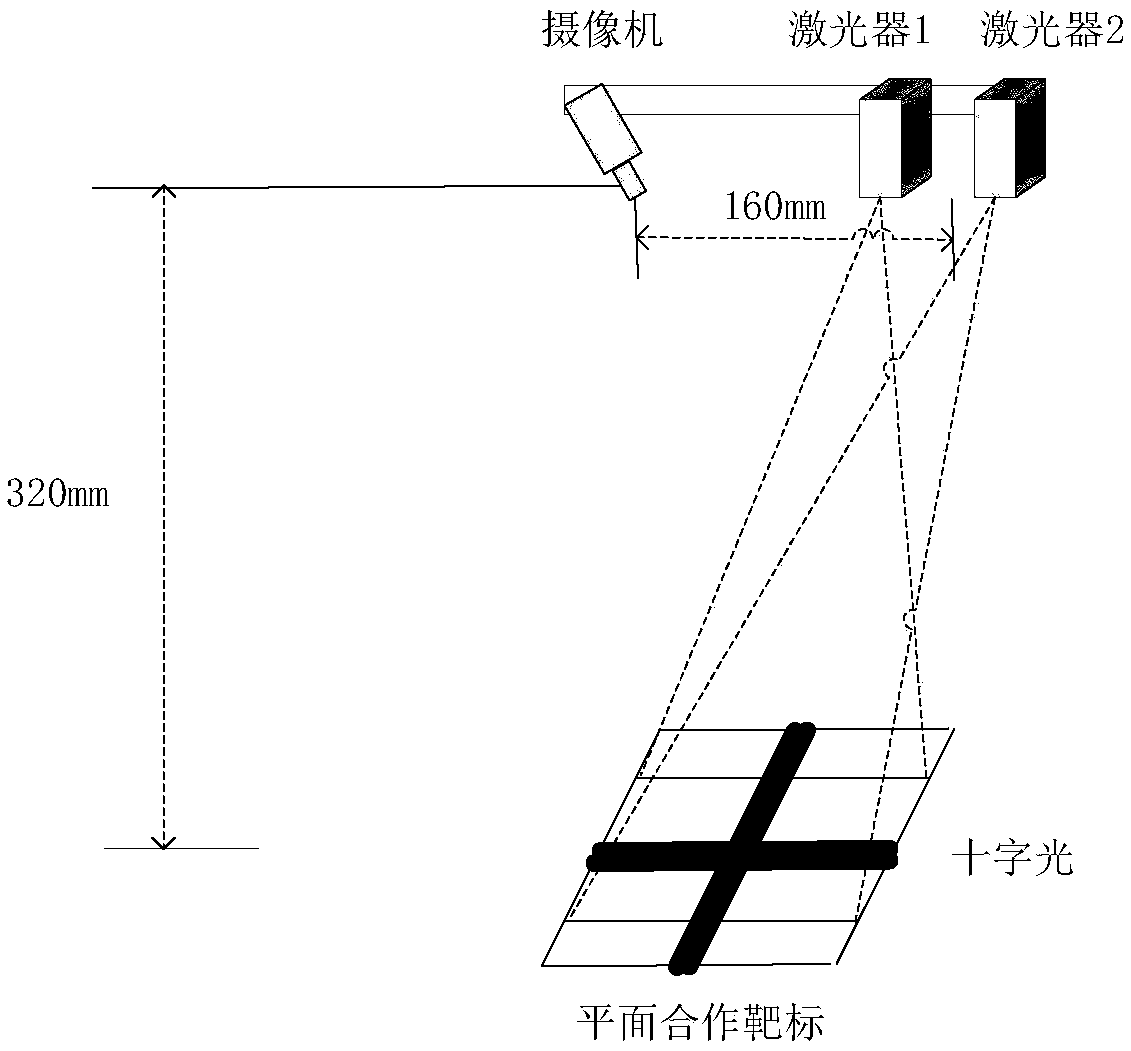

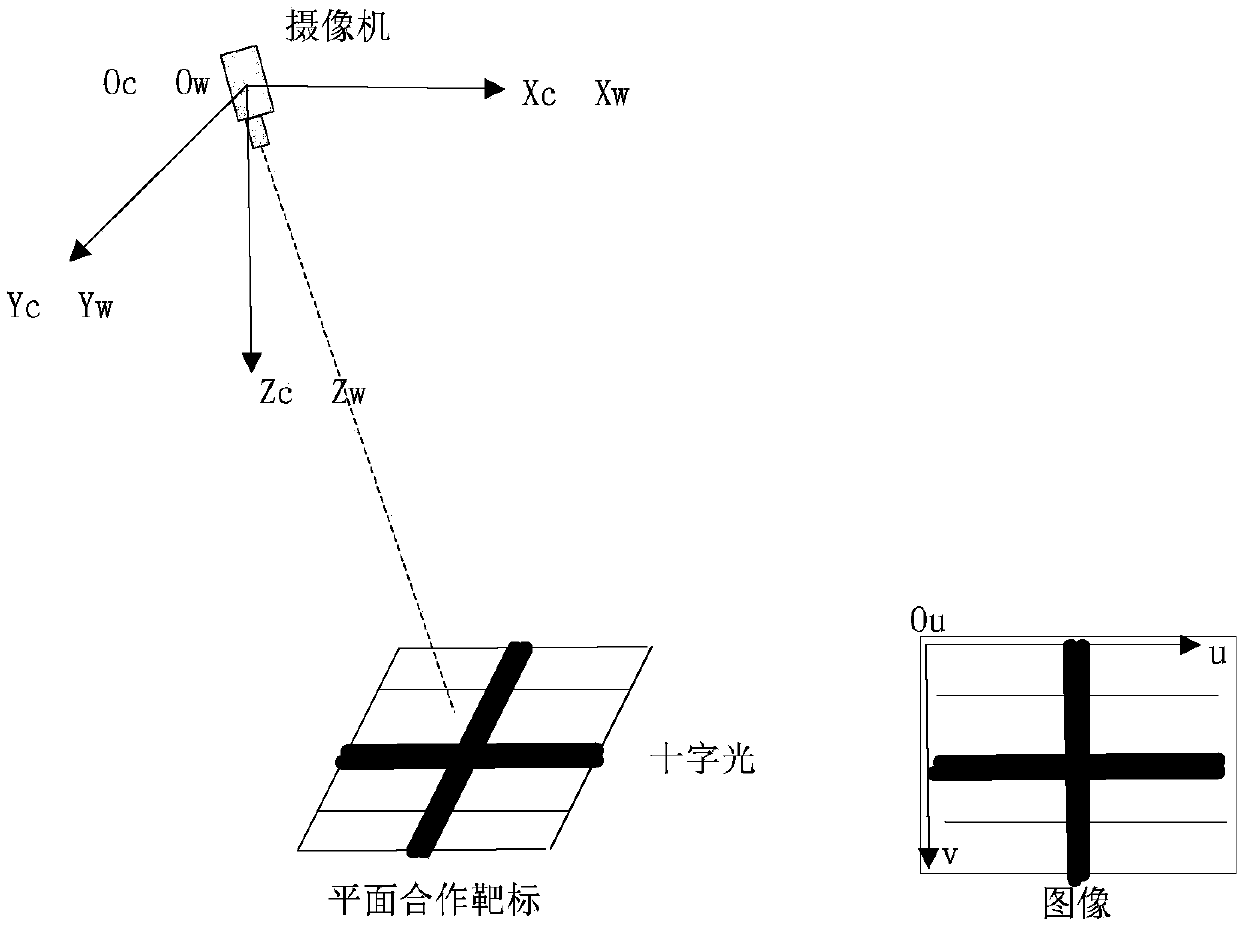

[0050] As shown in Figure 4, the method of the present invention for measuring robot repeated positioning accuracy based on a plane fitted with line structured light uses a planar cooperative target and line structured light to fit the plane equation of the cooperative target, then determines the coordinate axes and origin of the model coordinate system, and finally obtains the rotation matrix and translation vector between the camera coordinate system and the model coordinate system. Specifically, two horizontal lines of structured light are projected onto the upper surface of the planar cooperative target, and their image coordinates are extracted; according to the geometric relationship between the camera coordinate system and the image coordinate system, the line structured light image coordinates are converted into camera syst...
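The pipeline outlined in this paragraph (fit the target plane, define a model coordinate system on it, recover the rotation matrix and translation vector relative to the camera) can be illustrated with a minimal sketch. Because the paragraph is truncated here, several details are assumptions rather than the patented procedure: the two stripes are taken to be already expressed as 3D points in the camera frame, the plane is fitted by ordinary least squares in the form z = a*x + b*y + c, the origin is placed at the centroid of the stripe points, and the x-axis follows the first stripe. The function names `fit_plane` and `model_frame` are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(a):
    n = math.sqrt(dot(a, a))
    return tuple(x / n for x in a)

def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c to camera-frame points."""
    sxx = sum(p[0] * p[0] for p in points)
    sxy = sum(p[0] * p[1] for p in points)
    syy = sum(p[1] * p[1] for p in points)
    sx = sum(p[0] for p in points)
    sy = sum(p[1] for p in points)
    sxz = sum(p[0] * p[2] for p in points)
    syz = sum(p[1] * p[2] for p in points)
    sz = sum(p[2] for p in points)
    # Normal equations of the least-squares problem, solved by Cramer's rule.
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(points))]]
    rhs = [sxz, syz, sz]
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate point set: cannot fit z = a*x + b*y + c")
    sol = []
    for k in range(3):
        mk = [row[:] for row in m]
        for i in range(3):
            mk[i][k] = rhs[i]
        sol.append(det3(mk) / d)
    return tuple(sol)

def model_frame(stripe1, stripe2):
    """Return (R, t) with p_camera = R * p_model + t.

    Assumed conventions (not taken from the source): z-axis is the plane
    normal pointing back toward the camera, x-axis follows stripe1 within
    the plane, origin is the centroid of all stripe points.
    """
    pts = list(stripe1) + list(stripe2)
    a, b, _ = fit_plane(pts)
    z_axis = unit((a, b, -1.0))
    origin = tuple(sum(p[i] for p in pts) / len(pts) for i in range(3))
    d = tuple(q - p for p, q in zip(stripe1[0], stripe1[-1]))
    k = dot(d, z_axis)
    # Remove any out-of-plane component so the x-axis lies in the fitted plane.
    x_axis = unit(tuple(di - k * zi for di, zi in zip(d, z_axis)))
    y_axis = cross(z_axis, x_axis)
    # Columns of R are the model axes expressed in the camera frame.
    R = [[x_axis[i], y_axis[i], z_axis[i]] for i in range(3)]
    return R, origin
```

Calling `model_frame(stripe1, stripe2)` once per robot return to the same commanded pose would give one (R, t) pair per repetition; how those poses are then reduced to a repeatability figure is not covered by the visible part of the description, so it is left out of this sketch.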
