Compressed video quality enhancement method based on attention mechanism and time dependence

A technology relating to video quality and temporal dependence, applied in fields such as digital video signal modification, electrical components, and image communication. It can solve problems such as the inability to obtain and the increased difficulty of network training, and it improves objective quality evaluation metrics and enhances visual quality.


Embodiment Construction

[0037] The embodiments of the present invention will be described in detail below, but the protection scope of the present invention is not limited to the examples.

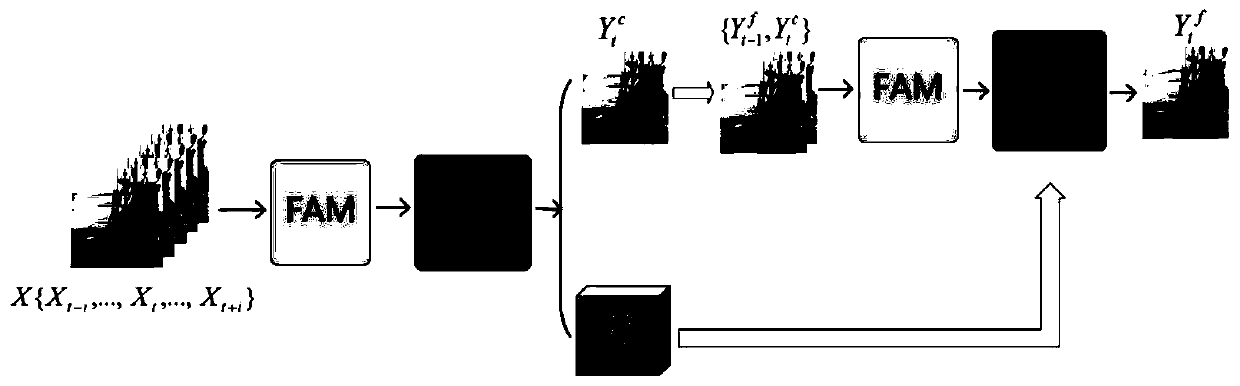

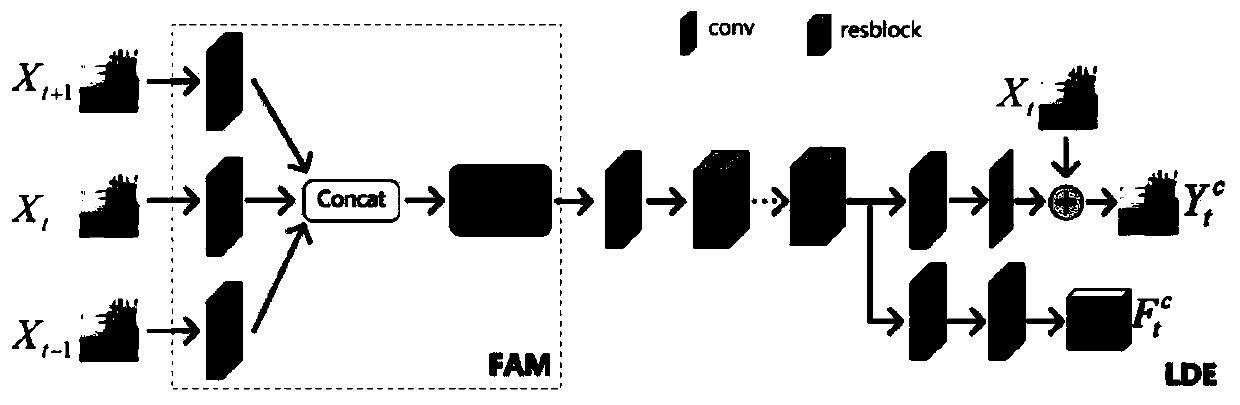

[0038] The network structure shown in Figure 1 was trained on 63 video sequences with resolutions ranging from 176x144 to 1920x1080.

[0039] The specific process is as follows:

[0040] (1) During training, 5 consecutive frames are used as the network input, 13 sets of inputs form one batch, and each frame is cropped into 64x64 patches to ease training. Because each frame to be enhanced uses the two preceding frames and the two following frames, for the first two and last two frames of each video a copy of that frame is used in place of each missing neighboring frame;
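The input preparation in step (1) can be sketched as follows. This is a minimal illustration, not the patent's implementation, and it rests on several assumptions: each video is a NumPy array of shape (N, H, W) holding single-channel frames; "a copy of that frame" is read as a copy of the frame being enhanced; the 64x64 crop is taken at a random position, identical across the 5 frames of a window; and all samples of a batch come from one video. The helper names `frame_window`, `random_patch`, and `make_batch` are hypothetical.

```python
import numpy as np

RADIUS = 2      # two previous + two following frames -> 5-frame input
PATCH = 64      # patch side length used during training
BATCH = 13      # number of input groups per batch


def frame_window(video, t, radius=RADIUS):
    """Stack the 2*radius+1 frames centred on frame t.

    Assumption: neighbours that fall outside the sequence (first/last
    two frames) are replaced by a copy of frame t itself.
    """
    n = len(video)
    frames = []
    for offset in range(-radius, radius + 1):
        idx = t + offset
        frames.append(video[idx] if 0 <= idx < n else video[t])
    return np.stack(frames, axis=0)  # shape (2*radius+1, H, W)


def random_patch(window, size=PATCH, rng=None):
    """Crop the same size x size region (random position, an assumption) from every frame."""
    rng = np.random.default_rng() if rng is None else rng
    _, h, w = window.shape
    y = int(rng.integers(0, h - size + 1))
    x = int(rng.integers(0, w - size + 1))
    return window[:, y:y + size, x:x + size]


def make_batch(video, batch_size=BATCH, rng=None):
    """Sample batch_size windows from one video and crop each to a patch."""
    rng = np.random.default_rng() if rng is None else rng
    centres = rng.integers(0, len(video), size=batch_size)
    patches = [random_patch(frame_window(video, int(t)), rng=rng) for t in centres]
    return np.stack(patches, axis=0)  # shape (batch_size, 5, PATCH, PATCH)
```

Cropping the same region from all five frames keeps the temporal neighbors spatially aligned with the frame being enhanced, which is what a network exploiting time dependence would need; the smallest training resolution (176x144) is still large enough for a 64x64 crop.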

[0041] (2) During testing, 16 video sequences different from those in the training set are used as the test set. When testing the objective quality of each video, first calculate the PSNR value between each frame in the video and the uncompressed orig...
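The per-frame PSNR measurement described in step (2) can be sketched as below. The excerpt is truncated, so this covers only the stated step (PSNR of each frame against its uncompressed reference); it assumes 8-bit frames (peak value 255) stored as NumPy arrays of shape (N, H, W), and the helper names `psnr` and `per_frame_psnr` are hypothetical. How the per-frame values are then combined is not stated here and is not modeled.

```python
import numpy as np


def psnr(frame, reference, peak=255.0):
    """PSNR in dB between one frame and its uncompressed reference.

    Assumption: 8-bit samples, so the peak value defaults to 255.
    """
    diff = frame.astype(np.float64) - reference.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical frames
    return float(10.0 * np.log10(peak ** 2 / mse))


def per_frame_psnr(video, reference_video, peak=255.0):
    """One PSNR value per frame; both inputs have shape (N, H, W)."""
    if len(video) != len(reference_video):
        raise ValueError("videos must have the same number of frames")
    return [psnr(f, r, peak) for f, r in zip(video, reference_video)]
```

For 8-bit frames, a uniform error of 1 on every pixel gives an MSE of 1 and therefore 10 log10(255²) ≈ 48.13 dB. Converting to float64 before subtracting avoids wrap-around on unsigned 8-bit data.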
