Real-time audio-driven lip synchronization control method for virtual characters

A real-time audio-driven technique for virtual characters, applied in the field of virtual character gesture control. It addresses the problem that the phoneme sequence corresponding to the speech cannot be obtained synchronously, and achieves the effects of saving communication bandwidth, improving user experience, and reducing system complexity.

Detailed Description of the Embodiments

[0034] Embodiments of the present invention will be described below with reference to the drawings, but it should be realized that the invention is not limited to the described embodiments and that various modifications of the invention are possible without departing from the basic idea. The scope of the invention is therefore to be determined only by the appended claims.

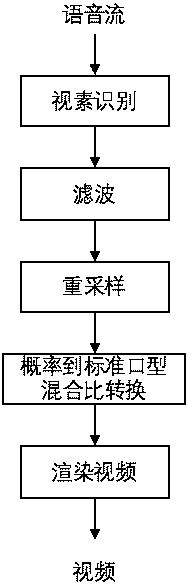

[0035] As shown in Figure 1, the real-time audio-driven virtual character lip synchronization control method provided by the present invention includes the following steps:

[0036] a step of identifying viseme probabilities from a real-time speech stream;

[0037] a step of filtering the viseme probabilities;

[0038] a step of resampling the viseme probabilities so that their sampling rate matches the rendering frame rate of the virtual character;

[0039] a step of converting the viseme probabilities into a standard lip configuration and performing lip rendering.
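The last three steps above can be illustrated with a minimal Python sketch. The visible text does not specify the filter, the resampling scheme, or how probabilities map to a lip configuration, so everything here is an assumption for illustration: exponential smoothing stands in for the filter, linear interpolation for the rate conversion, and a probability-weighted blend of per-viseme lip poses for the conversion. The names `smooth`, `resample`, `blend`, `alpha`, and `poses` are hypothetical, and the recognition step that produces the viseme probabilities is not shown.

```python
from typing import List, Sequence

Frame = List[float]  # one probability per viseme at a single time step


def smooth(frames: Sequence[Sequence[float]], alpha: float = 0.5) -> List[Frame]:
    """Assumed filter: exponential moving average over time, per viseme."""
    out: List[Frame] = []
    for frame in frames:
        if not out:
            out.append(list(frame))
        else:
            prev = out[-1]
            out.append([alpha * x + (1 - alpha) * p for x, p in zip(frame, prev)])
    return out


def resample(frames: Sequence[Sequence[float]], src_rate: int, dst_rate: int) -> List[Frame]:
    """Assumed rate conversion: linear interpolation from src_rate to dst_rate (Hz)."""
    if not frames:
        return []
    last = len(frames) - 1
    n_out = last * dst_rate // src_rate + 1  # output frames covering the same duration
    out: List[Frame] = []
    for i in range(n_out):
        t = i * src_rate / dst_rate  # fractional position in source frames
        lo = min(int(t), last)
        hi = min(lo + 1, last)
        w = t - lo
        out.append([(1 - w) * a + w * b for a, b in zip(frames[lo], frames[hi])])
    return out


def blend(probs: Sequence[float], poses: Sequence[Sequence[float]]) -> List[float]:
    """Assumed conversion: probability-weighted average of per-viseme lip poses.

    poses[v] is a hypothetical vector of lip parameters for viseme v.
    """
    n_params = len(poses[0])
    total = sum(probs)
    if total == 0:
        return [0.0] * n_params
    return [
        sum(p * pose[k] for p, pose in zip(probs, poses)) / total
        for k in range(n_params)
    ]


if __name__ == "__main__":
    # Two visemes recognized at 100 Hz, rendered at 50 frames per second.
    probabilities = [[1.0, 0.0], [0.6, 0.4], [0.0, 1.0]]
    poses = [[0.0, 1.0], [1.0, 0.0]]  # hypothetical lip parameters per viseme
    for frame in resample(smooth(probabilities), src_rate=100, dst_rate=50):
        print(blend(frame, poses))
```

Filtering before resampling keeps the smoothing independent of the rendering frame rate, which is one plausible reason for the order in which the steps are listed.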

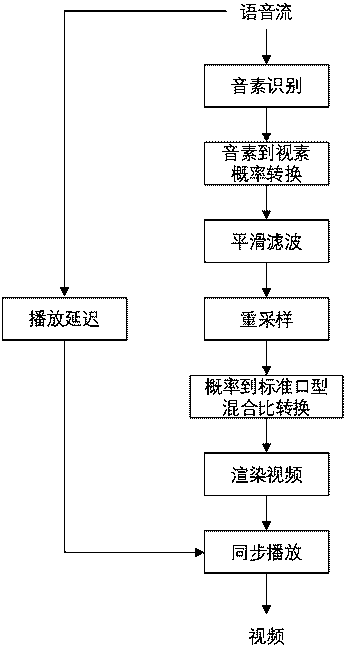

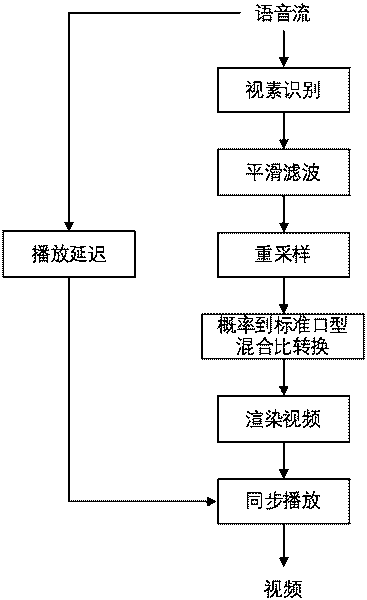

[0040] As shown in Figure 2, ...
