A 3D Object Fusion Feature Representation Method Based on Multimodal Feature Fusion

A feature fusion technology, applied to neural learning methods, character and pattern recognition, biological neural network models, and related fields, to achieve excellent multimodal information fusion.

Embodiment Construction

[0022] The present invention is further described below with reference to the accompanying drawings.

[0023] A 3D object fusion feature representation method based on multimodal feature fusion comprises the following steps:

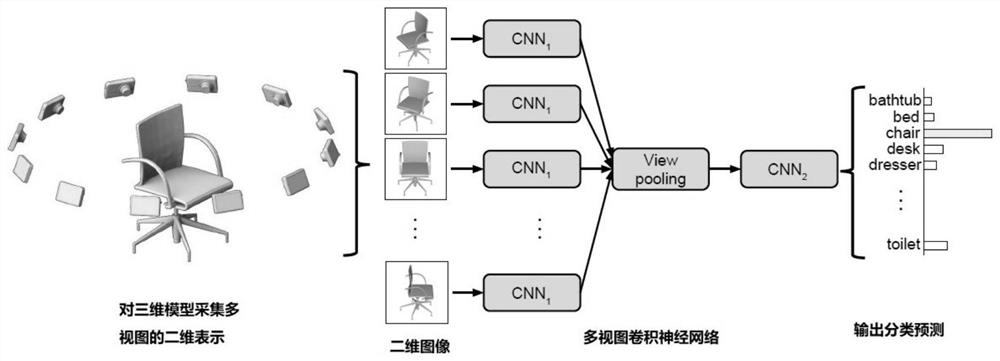

[0024] Step (1): process the multi-view three-dimensional information through a multi-view neural network.

[0025] As shown in Figure 1, multiple independent CNNs that do not share weights take the multi-view information as input. Max-pooling then merges the outputs of the CNNs into a single output, and a discriminator (that is, a nonlinear classifier based on fully connected layers) is added to classify the model.

[0026] First, convert the 3D model data into multi-view data. Specifically, place 12 cameras evenly around the 3D model (that is, at intervals of 30 degrees) on the middle horizontal plane of the 3D model, and take the resulting group of 12 pictures as the multi-view representation of the 3D model. Then use...
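The max-pooling step that merges the per-view CNN outputs can be illustrated with a minimal sketch. It assumes that each CNN branch emits a feature vector of the same length and that pooling is an element-wise maximum across views; the function name `view_max_pool` and the sample numbers are hypothetical, and the CNN branches and the fully connected classifier are deliberately left out.

```python
from typing import List, Sequence


def view_max_pool(view_features: Sequence[Sequence[float]]) -> List[float]:
    """Fuse per-view feature vectors into one vector by element-wise max."""
    if not view_features:
        raise ValueError("at least one view is required")
    dim = len(view_features[0])
    if any(len(f) != dim for f in view_features):
        raise ValueError("all views must produce features of the same length")
    # zip(*...) groups the i-th component of every view together,
    # so each output component is the maximum over all views.
    return [max(components) for components in zip(*view_features)]


# Hypothetical outputs of three independent CNN branches (assumed values,
# standing in for real network activations).
features = [
    [0.1, 0.9, 0.3],
    [0.7, 0.2, 0.4],
    [0.5, 0.6, 0.8],
]
fused = view_max_pool(features)
print(fused)  # [0.7, 0.9, 0.8]
```

Because the maximum is taken per component, the fused vector has the same length as each per-view vector and does not depend on the order of the views.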
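The camera placement described above can be sketched as follows. This is only an illustration under stated assumptions: the 3D model is centred at the origin, the middle horizontal plane is the plane z = `height`, and the cameras sit on a circle of radius `radius`. The function name `camera_positions` and its parameters are hypothetical, and rendering the 12 pictures is not shown.

```python
import math
from typing import List, Tuple


def camera_positions(
    num_views: int = 12, radius: float = 1.0, height: float = 0.0
) -> List[Tuple[float, float, float]]:
    """Return camera positions evenly spaced on a horizontal circle.

    Assumes the object is centred at the origin and z is the vertical axis.
    """
    step_deg = 360.0 / num_views  # 30 degrees for 12 views
    positions = []
    for i in range(num_views):
        angle = math.radians(i * step_deg)
        positions.append(
            (radius * math.cos(angle), radius * math.sin(angle), height)
        )
    return positions


cams = camera_positions()
print(len(cams))  # 12
```

Each camera would then be pointed at the origin to render one of the 12 views.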
