Hyperspectral image classification method based on deep feature cross-fusion

The invention applies cross-fusion technology to the field of hyperspectral image classification, specifically classification based on deep feature cross-fusion. It addresses problems such as the failure to consider the correlation among deep features and the loss of spatial features, and it enhances the representational ability of the features.

Embodiment Construction

[0033] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

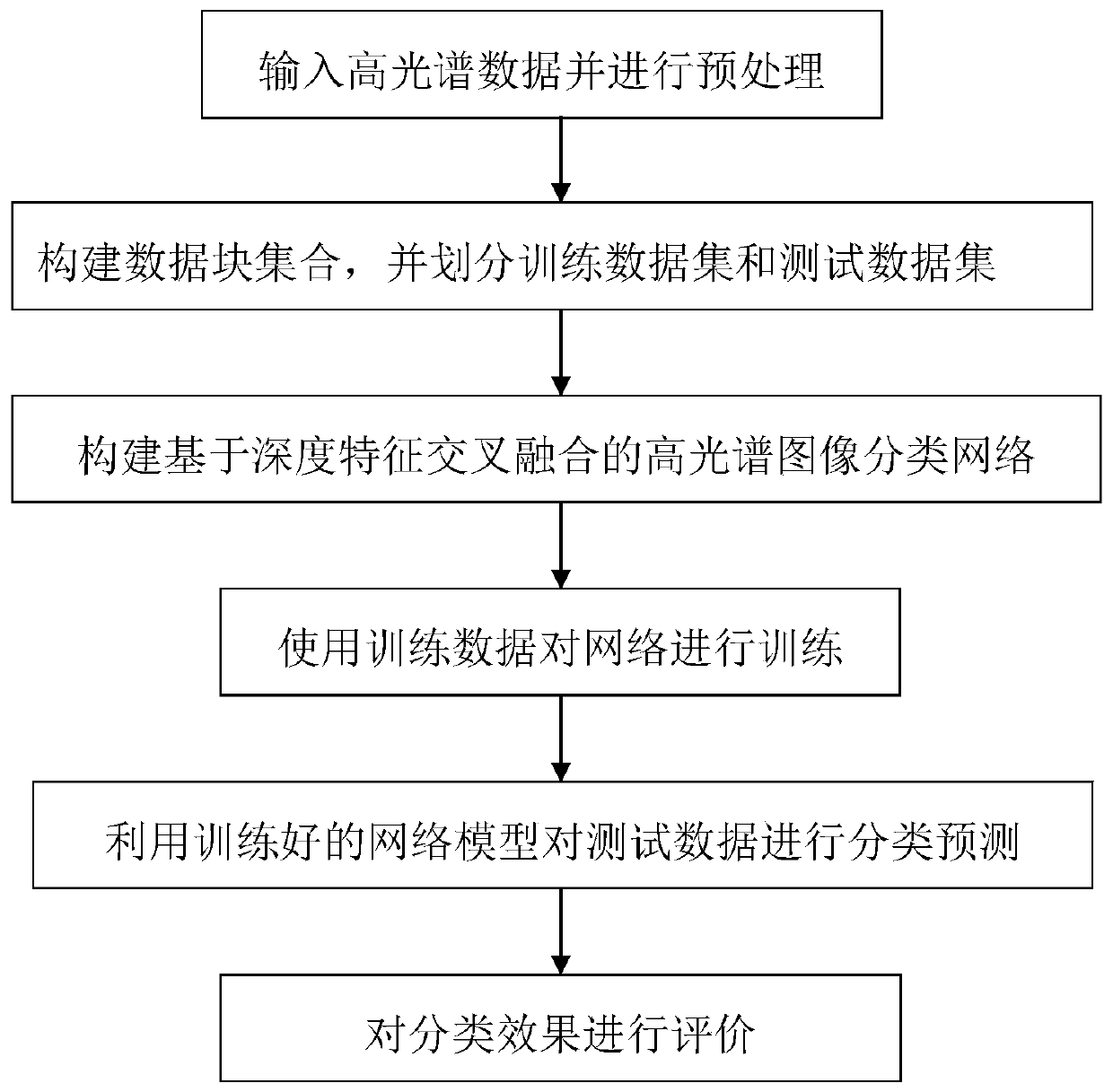

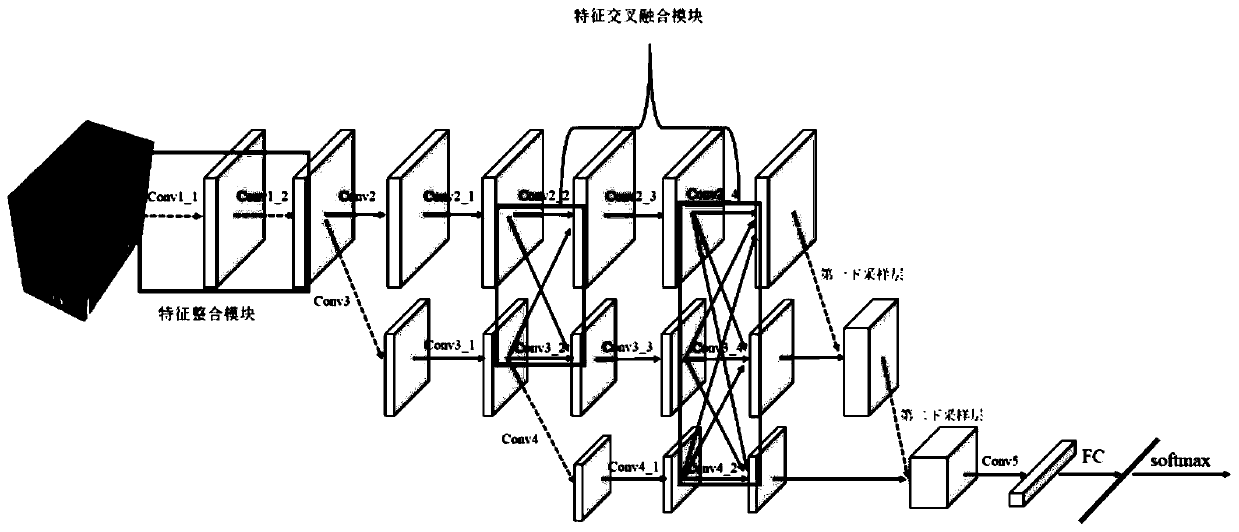

[0034] Referring to Figure 1, the implementation flow chart of the hyperspectral image classification method based on deep feature cross-fusion, the implementation steps of the present invention are described in detail below:

[0035] Step 1, input hyperspectral data and preprocess it.

[0036] First, input the hyperspectral data and read it to obtain the hyperspectral image and its corresponding class labels. The hyperspectral image is a three-dimensional data cube of size h×w×b, and the corresponding labels form a two-dimensional array of size h×w, where h is the height of the hyperspectral image, w is its width, and b is the number of spectral bands.
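The shape relationship described in [0036] can be illustrated with a minimal Python sketch. The function name `check_hyperspectral_inputs` and the synthetic random data are hypothetical and stand in for a real dataset; the source does not specify a file format or a loading routine, so none is assumed here.

```python
import numpy as np

def check_hyperspectral_inputs(cube, labels):
    """Check that `cube` is h x w x b and `labels` is h x w; return (h, w, b).

    Hypothetical helper for illustration only; it is not part of the patent text.
    """
    cube = np.asarray(cube)
    labels = np.asarray(labels)
    if cube.ndim != 3:
        raise ValueError(f"expected a 3-D cube (h, w, b), got shape {cube.shape}")
    if labels.ndim != 2:
        raise ValueError(f"expected a 2-D label map (h, w), got shape {labels.shape}")
    h, w, b = cube.shape
    if labels.shape != (h, w):
        raise ValueError(
            f"label map shape {labels.shape} does not match image size {(h, w)}"
        )
    return h, w, b

# Synthetic stand-in data (assumption): a 4 x 5 image with 6 spectral bands
# and an integer class label for every pixel.
rng = np.random.default_rng(0)
cube = rng.random((4, 5, 6))
labels = rng.integers(0, 3, size=(4, 5))

print(check_hyperspectral_inputs(cube, labels))  # (4, 5, 6)
```

Validating the two shapes up front makes a mismatch between the image and its label map visible before any later step indexes pixels by position.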

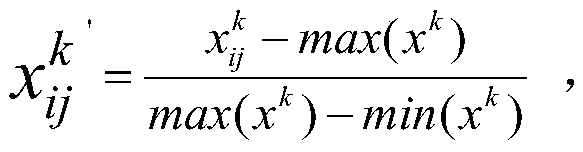

[0037] Each spectral dimension of the hyperspectral data is then normalized:

[0038] ...
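The normalization formula itself is not reproduced in this excerpt. As an illustration of what per-band normalization can look like, the sketch below assumes min-max scaling of each spectral band to the range [0, 1]. This choice is an assumption and may differ from the formula in the patent; the function name `normalize_bands_minmax` and the `eps` guard are likewise hypothetical.

```python
import numpy as np

def normalize_bands_minmax(cube, eps=1e-12):
    """Scale each spectral band of an h x w x b cube to [0, 1].

    Assumption: min-max scaling per band. The source elides its formula,
    so this is one common choice, not necessarily the patent's.
    """
    cube = np.asarray(cube, dtype=np.float64)
    # Reduce over the two spatial axes so each band gets its own min and max.
    band_min = cube.min(axis=(0, 1), keepdims=True)
    band_max = cube.max(axis=(0, 1), keepdims=True)
    # Guard against division by zero for bands with a constant value.
    band_range = np.maximum(band_max - band_min, eps)
    return (cube - band_min) / band_range

# Synthetic stand-in data (assumption): 4 x 5 pixels, 6 bands, arbitrary scale.
rng = np.random.default_rng(0)
raw_cube = rng.random((4, 5, 6)) * 1000.0
norm_cube = normalize_bands_minmax(raw_cube)

print(norm_cube.shape)  # (4, 5, 6)
```

Computing the statistics over the spatial axes only, with `keepdims=True`, keeps the bands independent of one another while letting broadcasting apply the per-band values to every pixel.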
