Item identification method with efficient sample labeling

An item recognition method with efficient sample labeling, applied in the field of item recognition. It addresses problems such as low image-processing and recognition efficiency, a large proportion of background area in the images, and slow model training. Its effects are saving sample-labeling time and improving detection speed.

Examples

Embodiment 1

[0052] The specific process steps of the item identification method with efficient sample labeling according to this embodiment are as follows:

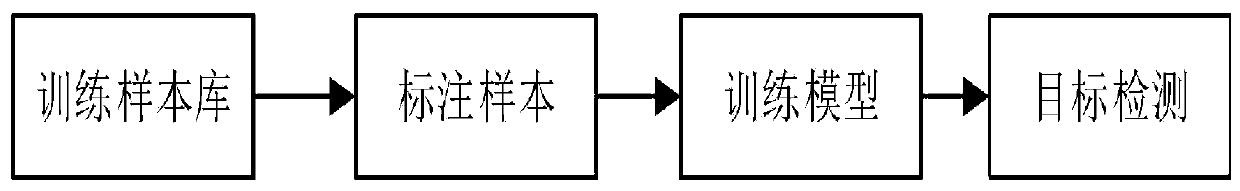

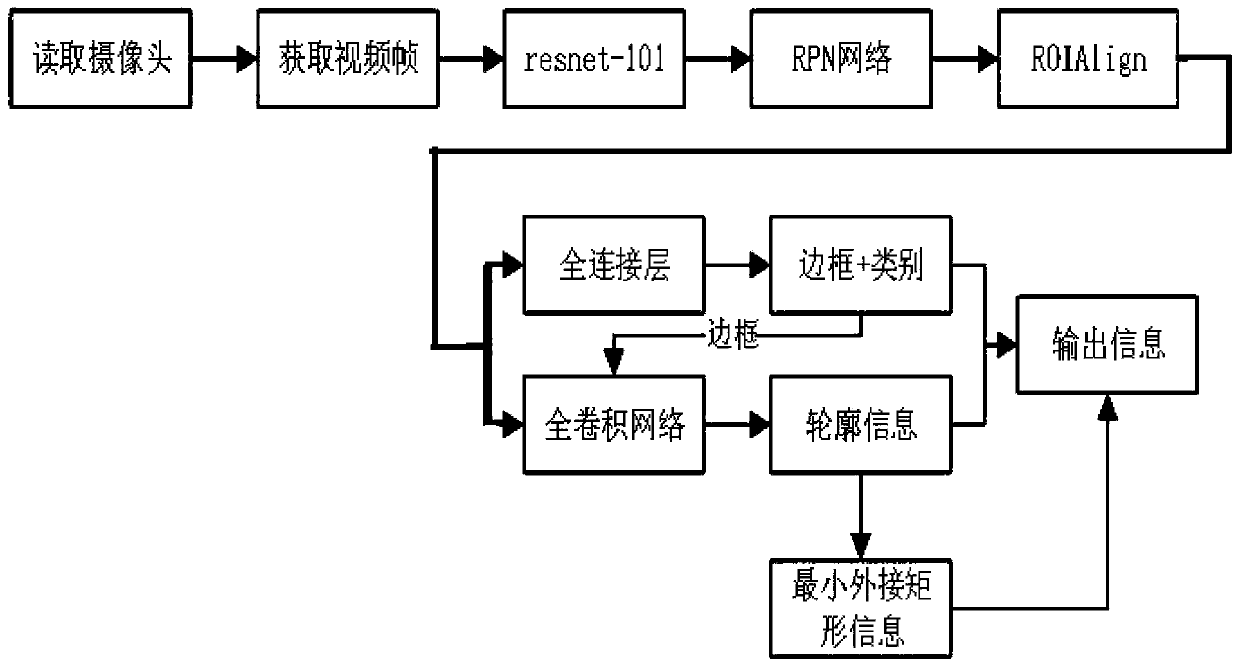

[0053] S1. Item subject detection and category prediction

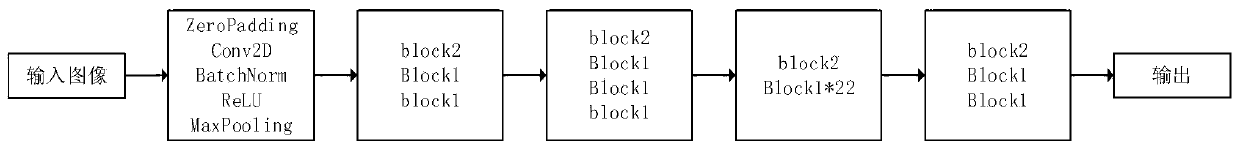

[0054] An item detection algorithm is used to train an item detection model; the model then locates the user's region of interest in the video image and predicts its category. The item detection algorithm is an improved Mask R-CNN algorithm, and the specific process is as follows:
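The text does not publish the modifications made to Mask R-CNN. As a hedged illustration of the final step only, the sketch below post-processes generic detector output (boxes, integer class labels and confidence scores, roughly the fields torchvision's Mask R-CNN returns per image at inference) into named detections and treats the most confident one as the region of interest. The function names, the 0.5 score threshold and the "highest score wins" rule are assumptions, not the patent's method.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    box: Box
    category: str
    score: float


def postprocess(
    boxes: Sequence[Box],
    labels: Sequence[int],
    scores: Sequence[float],
    class_names: Sequence[str],
    score_threshold: float = 0.5,  # assumed threshold, not from the source
) -> List[Detection]:
    """Keep detections at or above the threshold and map label indices to names."""
    kept = [
        Detection(box=tuple(box), category=class_names[label], score=score)
        for box, label, score in zip(boxes, labels, scores)
        if score >= score_threshold
    ]
    # Highest-confidence detections first.
    kept.sort(key=lambda d: d.score, reverse=True)
    return kept


def region_of_interest(detections: List[Detection]) -> Optional[Detection]:
    """Hypothetical rule: treat the most confident detection as the region of interest."""
    return detections[0] if detections else None
```

For example, with class names `["background", "cup", "mouse"]`, a cup scored 0.92 and a mouse scored 0.31, only the cup survives the threshold and becomes the region of interest.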

[0055] S11. Training sample library

[0056] As required, static pictures of the corresponding categories are prepared as training samples to form a training data set. The training data set covers 16 item types: knives, cups, remote controls, shoulder bags, mobile phones, scissors, laptops, mice, backpacks, keys, wallets, glasses, umbrellas, fans, puppies, and kittens. The training data set mainly includes 3 parts, namel...
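A detector trained on these 16 categories needs a fixed mapping from category names to integer labels. The sketch below builds one, assuming index 0 is reserved for background as in torchvision's detection models, where `num_classes` counts the background class. The ordering of the categories and the names `ITEM_CATEGORIES`, `build_label_map` and `NUM_CLASSES` are illustrative assumptions.

```python
from typing import Dict, List

# The 16 categories listed in [0056]; the order (and hence each index) is an assumption.
ITEM_CATEGORIES: List[str] = [
    "knife", "cup", "remote control", "shoulder bag", "mobile phone",
    "scissors", "laptop", "mouse", "backpack", "keys", "wallet",
    "glasses", "umbrella", "fan", "puppy", "kitten",
]


def build_label_map(categories: List[str]) -> Dict[str, int]:
    """Map category names to integer labels starting at 1; 0 stays reserved for background."""
    if len(set(categories)) != len(categories):
        raise ValueError("duplicate category names")
    return {name: idx for idx, name in enumerate(categories, start=1)}


LABEL_MAP = build_label_map(ITEM_CATEGORIES)
NUM_CLASSES = len(LABEL_MAP) + 1  # 16 item classes plus background
```

Keeping the list in one place means the label map used for annotation and the class count used to configure the model cannot drift apart.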

Embodiment 2

[0083] The item identification method with efficient sample labeling of Embodiment 1 can be used for item search. During item search, images of targets along the planned path are acquired, and the acquired video frames are processed with the method to predict the target category. The real distance between the target and the camera is obtained from the depth map, and the detected target category is checked against the category to be found; after verification, the user is told the specific location of the target by voice broadcast. Item search covers two situations: searching for general items and searching for subcategories. This step uses a robot as an application example to explain the item search process.
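A minimal sketch of the distance-and-verification step, assuming the depth map comes from a calibrated binocular camera (as in Embodiment 3), where depth follows the standard stereo relation Z = f·B/d (focal length in pixels times baseline, divided by disparity). Taking the median of valid depths inside the detection box, to suppress holes and background pixels, is an assumption, as are the function names and the wording of the spoken message.

```python
import statistics
from typing import Optional, Sequence, Tuple


def disparity_to_depth(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Pinhole stereo relation Z = f * B / d, returning depth in metres."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def target_distance(
    depth_map: Sequence[Sequence[float]], box: Tuple[int, int, int, int]
) -> Optional[float]:
    """Median of valid (positive) depths inside box (x1, y1, x2, y2); x2 and y2 are exclusive."""
    x1, y1, x2, y2 = box
    values = [
        depth_map[y][x]
        for y in range(y1, y2)
        for x in range(x1, x2)
        if depth_map[y][x] > 0  # 0 marks pixels with no depth estimate
    ]
    return statistics.median(values) if values else None


def verify_and_report(detected: str, wanted: str, distance_m: Optional[float]) -> Optional[str]:
    """Return a message for voice broadcast only if the category matches and depth is known."""
    if detected != wanted or distance_m is None:
        return None
    return f"Found the {wanted} about {distance_m:.1f} metres away."
```

With a depth map whose box region holds 1.2, 1.4, 1.3 and one invalid zero, `target_distance` returns 1.3, and `verify_and_report("cup", "cup", 1.3)` produces the sentence to be spoken.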

[0084] S21. Search for general items

[0085] Large-category item search refers to searching for a certain type of item in the...

Embodiment 3

[0120] The main body of the robot of Embodiment 2 includes a binocular camera, a controller, a voice interaction module, a drive unit, and a power supply. The binocular camera is mounted on the robot head, is used for video image acquisition, and is connected for signal transmission to the controller inside the robot body; the controller is electrically connected to the power supply. The voice interaction module is arranged on the surface of the robot body and is connected for signal transmission to the controller; it is used for voice interaction between the user and the robot and for adding subclass samples. A drive unit is provided at the lower part of the robot; it uses an existing crawler or wheel drive structure and is electrically connected to the controller.
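To make the division of labour in [0120] concrete, here is a hypothetical sketch of how a controller could coordinate the camera, voice interaction module and drive unit during a search. The interfaces (`capture`, `speak`, `step`), the `detect` callback and the loop itself are illustrative assumptions rather than the patent's controller design; the power supply is not modelled, and the in-memory fakes at the end stand in for real hardware.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol


class Camera(Protocol):
    def capture(self) -> object: ...


class VoiceModule(Protocol):
    def speak(self, text: str) -> None: ...


class DriveUnit(Protocol):
    def step(self) -> None: ...


@dataclass
class Controller:
    camera: Camera
    voice: VoiceModule
    drive: DriveUnit
    detect: Callable[[object], List[str]]  # hypothetical: frame -> detected category names

    def search(self, wanted: str, max_steps: int) -> bool:
        """Capture, detect, and move along the path until the wanted item is seen."""
        for _ in range(max_steps):
            frame = self.camera.capture()
            if wanted in self.detect(frame):
                self.voice.speak(f"Found the {wanted}.")
                return True
            self.drive.step()  # advance one step along the planned path
        self.voice.speak(f"Could not find the {wanted}.")
        return False


# In-memory fakes standing in for the hardware modules.
class FakeCamera:
    def __init__(self, frames: List[List[str]]) -> None:
        self._frames = iter(frames)

    def capture(self) -> object:
        return next(self._frames)


class ListVoice:
    def __init__(self) -> None:
        self.said: List[str] = []

    def speak(self, text: str) -> None:
        self.said.append(text)


class CountingDrive:
    def __init__(self) -> None:
        self.steps = 0

    def step(self) -> None:
        self.steps += 1
```

Using structural interfaces keeps the search logic independent of whether the drive unit is a crawler or wheel structure, which matches the text's note that either existing design may be used.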

[0121] The controller involved in this embodiment...
