Adversarial sample detection method and device, and computer-readable storage medium

An adversarial sample detection technology in the field of data processing. It addresses the problem that adversarial samples cannot be detected accurately, and improves detection accuracy.


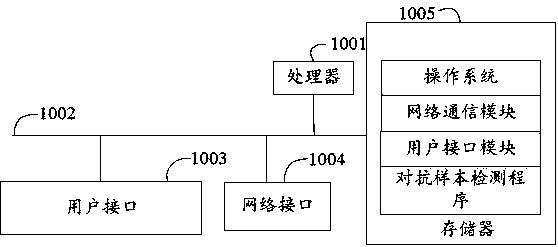

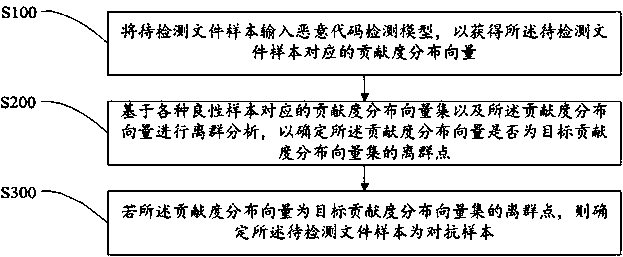


Examples

Example 1

[0106] Based on the first embodiment, a second embodiment of the adversarial sample detection method of the present invention is proposed. In this embodiment, step S100 includes:

[0107] Step S110: input the file sample to be detected into the malicious code detection model, and obtain a plurality of feature maps corresponding to the file sample through the target channel of the target convolution layer in the model;

[0108] Step S120: determine the contribution vector corresponding to the file sample to be detected based on the feature maps;

[0109] Step S130: determine the contribution distribution vector based on the contribution vector and the file structure of the file sample to be detected.

[0110] In this embodiment, the file sample to be detected is input into the malicious code detection model for model training, and multiple feature maps corresponding to the file sample to be detected are obtained through the tar...
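To make step S110 concrete, the sketch below shows what "one feature map per channel of a convolution layer" means for a byte sequence. It assumes a hypothetical byte-level 1-D convolution (the source does not describe the model's architecture); the function name `conv1d_feature_maps`, the byte scaling, and the `stride` parameter are illustrative only. The useful property is that position `i` of every map covers bytes `[i*stride, i*stride + width)`, which is what later lets per-position contributions be traced back to bytes.

```python
def conv1d_feature_maps(data, kernels, stride):
    """Hypothetical stand-in for the target convolution layer.

    data:    raw file bytes (bytes or list of ints 0..255)
    kernels: one list of weights per channel (all the same width)
    stride:  step between consecutive windows
    Returns one feature map (list of floats) per channel.
    """
    x = [b / 255.0 for b in data]  # scale bytes to [0, 1] (assumed preprocessing)
    maps = []
    for kernel in kernels:
        width = len(kernel)
        fmap = []
        # Position i of this map covers bytes [i*stride, i*stride + width).
        for start in range(0, len(x) - width + 1, stride):
            window = x[start:start + width]
            fmap.append(sum(w * v for w, v in zip(kernel, window)))
        maps.append(fmap)
    return maps


maps = conv1d_feature_maps(bytes(range(16)), [[1, 1, 1, 1], [1, 0, 0, 0]], stride=4)
```

A real detector would use learned kernels (and typically a byte embedding) and read the maps from the trained network, for example with a framework hook, rather than recomputing the convolution by hand.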

Example 2

[0114] Based on the second embodiment, a third embodiment of the adversarial sample detection method of the present invention is proposed. In this embodiment, step S120 includes:

[0115] Step S121: determine the weight corresponding to each feature map based on the output result of the classifier in the malicious code detection model;

[0116] Step S122: compute a weighted average of the feature maps using these weights, and apply a noise filtering operation to the weighted average result to obtain the contribution vector.

[0117] In this embodiment, after the plurality of feature maps corresponding to the file sample to be detected are obtained, the output result of the classifier in the malicious code detection model is obtained and the weight corresponding to each feature map is calculated from it; the feature maps are then weighted and averaged based on these weights to obtain the weighted average result. Among ...
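Steps S121 and S122 resemble class-activation mapping. The sketch below is a minimal illustration under two assumptions that the source does not state: each feature map's weight is the mean gradient of the classifier's target-class score with respect to that map (Grad-CAM style), and the "noise filtering" is a ReLU that discards negative evidence. The function name `contribution_vector` and the `gradients` input are hypothetical; in practice the gradients would come from backpropagation through the detection model.

```python
def contribution_vector(feature_maps, gradients):
    """Grad-CAM-style sketch of steps S121-S122 (assumed, not the patented formula).

    feature_maps: list of channels, each a list of activations (same length)
    gradients:    d(target-class score)/d(activation), same shape as feature_maps
    """
    # S121: one weight per feature map = mean gradient over its positions.
    weights = [sum(g) / len(g) for g in gradients]
    n_maps = len(feature_maps)
    length = len(feature_maps[0])
    # S122: weighted average across feature maps at each position.
    averaged = [
        sum(w * fmap[i] for w, fmap in zip(weights, feature_maps)) / n_maps
        for i in range(length)
    ]
    # S122: noise filtering, assumed here to be ReLU (negative values -> 0).
    return [max(v, 0.0) for v in averaged]


vec = contribution_vector([[1, 2], [3, 4]], [[2, 0], [0, 0]])  # weights are [1.0, 0.0]
```

Averaging rather than summing over channels only rescales the vector by a constant, so it does not change which positions contribute most.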

Example 4

[0135] Based on the fourth embodiment, a fifth embodiment of the adversarial sample detection method of the present invention is proposed. In this embodiment, step S132 includes:

[0136] Step S501: divide the file sample to be detected into multiple file blocks based on the position of the file header and the position of each section in the file structure;

[0137] Step S502: divide each file block according to a preset rule into a preset number of sub-file blocks;

[0138] Step S503: determine the contribution degree distribution vector based on the contribution degree corresponding to each byte and on the sub-file blocks.

[0139] In this embodiment, once the contribution corresponding to each byte in the file sample to be detected is obtained, the position of the file header and the positions of each section in the file structure are obtained, and based on the position of the file header and the positi...
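Steps S501 to S503 can be sketched as follows, assuming per-byte contributions are already available and the header and section offsets have been parsed from the file (for a PE file, from its header and section table). The "preset rule" for S502 is assumed here to be a near-equal contiguous split, and the S503 aggregation is assumed to be a plain sum per sub-file block; the names `split_evenly`, `distribution_vector`, `header_end`, and `sections` are hypothetical.

```python
def split_evenly(seq, parts):
    """Split seq into `parts` contiguous chunks whose sizes differ by at most 1."""
    q, r = divmod(len(seq), parts)
    chunks, start = [], 0
    for k in range(parts):
        size = q + (1 if k < r else 0)
        chunks.append(seq[start:start + size])
        start += size
    return chunks


def distribution_vector(byte_contrib, header_end, sections, n_sub):
    """byte_contrib: contribution of each byte in the file
    header_end:   offset where the file header ends (header assumed at offset 0)
    sections:     list of (start, end) byte offsets, one per section
    n_sub:        preset number of sub-file blocks per file block
    """
    # S501: file blocks = the header plus one block per section.
    blocks = [(0, header_end)] + list(sections)
    vector = []
    for start, end in blocks:
        # S502: split each file block into n_sub sub-file blocks.
        for chunk in split_evenly(byte_contrib[start:end], n_sub):
            # S503: aggregate byte contributions per sub-file block (assumed: sum).
            vector.append(sum(chunk))
    return vector


vec = distribution_vector(list(range(10)), 4, [(4, 7), (7, 10)], n_sub=2)
# vec == [1, 5, 9, 6, 15, 9]
```

Because every file block yields exactly `n_sub` entries, the vector length depends only on the number of blocks, which keeps the layout comparable across files with the same section count.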
