Global-local temporal representation method for video-based pedestrian re-identification

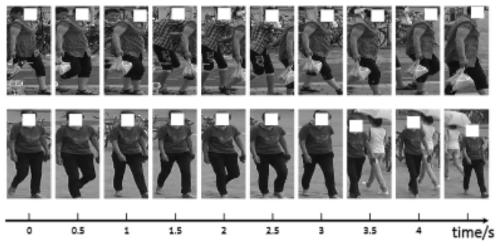

A re-identification and video technology in the field of artificial intelligence that addresses the complexity of training on long video sequences.


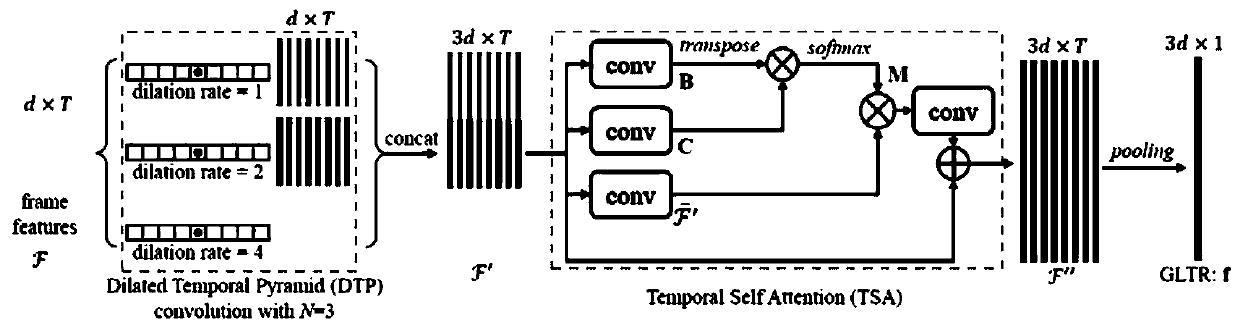


Embodiment Construction

[0057] The following description and drawings illustrate specific embodiments of the invention sufficiently to enable those skilled in the art to practice them.

[0058] 1 Basic introduction

[0059] We test our method on a newly proposed large-scale video dataset for person ReID (LS-VID) and four widely used video ReID datasets: PRID, iLIDS-VID, MARS, and DukeMTMC-VideoReID. Experimental results show that GLTR consistently outperforms competing methods on these datasets. It achieves 87.02% rank-1 accuracy on the MARS dataset without re-ranking, 2% better than the recent PBR method, which uses additional body-part cues for video feature learning. It also achieves 94.48% and 96.29% rank-1 accuracy on PRID and DukeMTMC-VideoReID, respectively, exceeding the current state of the art.
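The first-ranked (rank-1) accuracy used in these comparisons is the fraction of query sequences whose closest gallery sequence has the correct identity. The following is a minimal sketch, assuming a precomputed query-by-gallery distance matrix. The function name `rank1_accuracy` is hypothetical, and the sketch deliberately omits the junk and same-camera filtering that standard MARS and DukeMTMC-VideoReID evaluation scripts apply.

```python
from typing import Sequence


def rank1_accuracy(dist: Sequence[Sequence[float]],
                   query_ids: Sequence[int],
                   gallery_ids: Sequence[int]) -> float:
    """Fraction of queries whose nearest gallery item shares their identity.

    Hypothetical helper: no same-camera or junk filtering is applied here.
    """
    hits = 0
    for q, row in enumerate(dist):
        # Index of the gallery item with the smallest distance to query q.
        best = min(range(len(row)), key=row.__getitem__)
        if gallery_ids[best] == query_ids[q]:
            hits += 1
    return hits / len(dist)


# Toy example: query 0 matches correctly, query 1 does not.
dist = [[0.1, 0.9, 0.5],
        [0.8, 0.2, 0.3]]
print(rank1_accuracy(dist, query_ids=[1, 2], gallery_ids=[1, 3, 2]))  # 0.5
```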

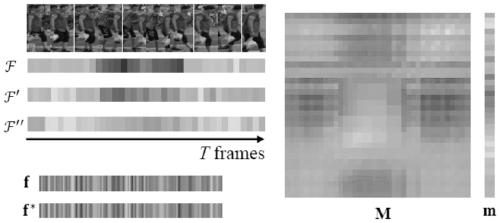

[0060] The GLTR representation is extracted from a sequence of frame features by simple DTP and TSA models. Although computationally simple and efficient, this solution outperforms ...
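The flow from frame features through DTP and TSA to a single sequence descriptor can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the patented implementation. DTP is assumed here to be a dilated temporal pyramid convolution (parallel dilated temporal convolutions whose outputs are concatenated), and TSA a temporal self-attention over frames. The shared kernel taps (0.25, 0.5, 0.25), the dilation rates (1, 2, 4), the parameter-free attention, and the final temporal average pooling are all hypothetical choices. In a trained model the convolutions and attention projections would be learned.

```python
import math
from typing import List, Sequence

Seq = List[List[float]]  # T frames, each a D-dimensional feature vector


def dilated_temporal_conv(x: Seq, taps: Sequence[float], dilation: int) -> Seq:
    """Kernel-size-3 temporal convolution with the given dilation.

    Hypothetical simplification: the same three scalar taps are shared by all
    channels; zero padding keeps the output length equal to T.
    """
    T, D = len(x), len(x[0])
    out = []
    for t in range(T):
        row = [0.0] * D
        for k, w in zip((-1, 0, 1), taps):
            s = t + k * dilation
            if 0 <= s < T:  # positions outside the sequence act as zeros
                for d in range(D):
                    row[d] += w * x[s][d]
        out.append(row)
    return out


def dtp(x: Seq, taps: Sequence[float], dilations=(1, 2, 4)) -> Seq:
    """Assumed DTP: parallel branches with growing dilation, concatenated
    along the feature axis (output dim = D * len(dilations))."""
    branches = [dilated_temporal_conv(x, taps, r) for r in dilations]
    return [[v for b in branches for v in b[t]] for t in range(len(x))]


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    e = [math.exp(s - m) for s in scores]
    total = sum(e)
    return [v / total for v in e]


def tsa(f: Seq) -> Seq:
    """Assumed TSA: scaled dot-product attention across frames with no
    learned projections, plus a residual connection."""
    T, D = len(f), len(f[0])
    scale = 1.0 / math.sqrt(D)
    out = []
    for i in range(T):
        attn = softmax([scale * sum(a * b for a, b in zip(f[i], f[j]))
                        for j in range(T)])
        ctx = [sum(attn[j] * f[j][d] for j in range(T)) for d in range(D)]
        out.append([f[i][d] + ctx[d] for d in range(D)])
    return out


def gltr(frames: Seq, taps=(0.25, 0.5, 0.25), dilations=(1, 2, 4)) -> List[float]:
    """Frame features -> DTP (local cues) -> TSA (global cues) -> mean over time."""
    h = tsa(dtp(frames, taps, dilations))
    T = len(h)
    return [sum(h[t][d] for t in range(T)) / T for d in range(len(h[0]))]


frames = [[float(t), 1.0] for t in range(8)]  # 8 frames of toy 2-D features
print(len(gltr(frames)))  # 6 = 2 feature dims x 3 dilation branches
```

Running DTP before TSA lets the attention step weigh frames whose features already summarize short-, medium-, and long-range neighborhoods. The temporal average at the end collapses a variable-length sequence into one fixed-size vector.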
