Intelligent goods pickup identification method based on deep vision

A recognition method based on deep vision technology, applied in the fields of neural learning methods, character and pattern recognition, and instruments, that addresses the high cost of RFID tags, high labor costs, and the low intelligence of smart containers.


Detailed Description of the Embodiments

[0066] The specific embodiments of the present invention will be further described below with reference to the accompanying drawings.

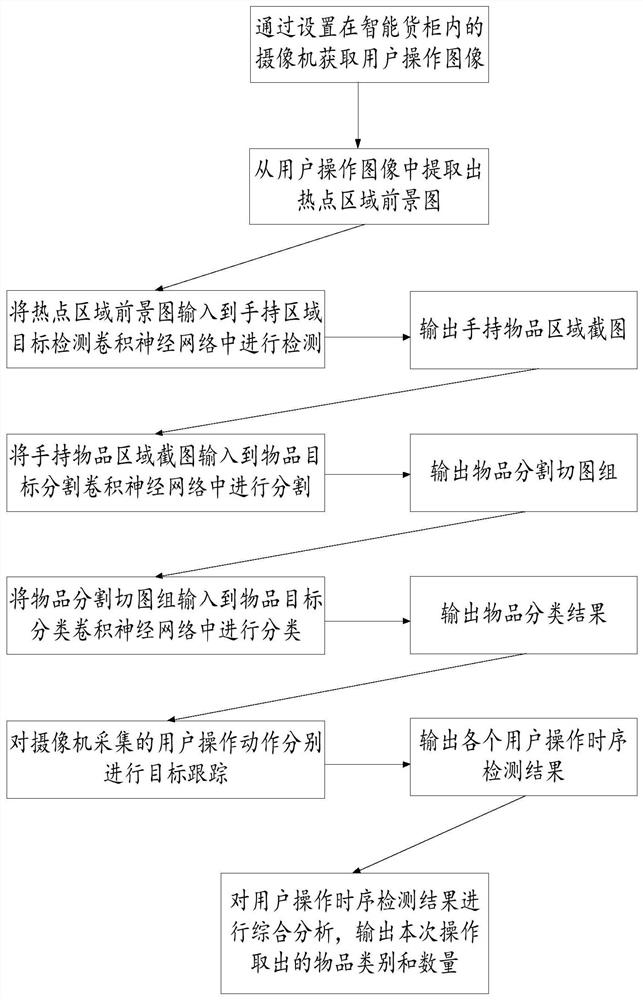

[0067] The present application discloses a deep vision-based intelligent pickup identification method. As shown in the flow chart of Figure 1, the identification method includes the following steps:

[0068] Step 1: Capture the user operation image with the camera installed in the smart container, as shown in Figure 2.

[0069] Step 2: Extract the foreground image of the hotspot area from the user operation image using the frame difference method.

[0070] Step 201: Load the user operation image, and acquire the frames immediately preceding and following the current user operation image.
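The frame-acquisition part of Step 201 can be pictured as a three-frame sliding window over the camera stream. This is a minimal sketch, assuming frames arrive one at a time in capture order; `frame_triples` is a hypothetical helper name, not something disclosed in the patent.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def frame_triples(stream: Iterable[T]) -> Iterator[Tuple[T, T, T]]:
    """Yield (previous, current, next) frames from a stream in capture order.

    Hypothetical helper: the first and last frames are never the "current"
    frame, because one of their neighbours is missing.
    """
    window: Deque[T] = deque(maxlen=3)  # oldest frame drops out automatically
    for frame in stream:
        window.append(frame)
        if len(window) == 3:
            prev_frame, current, next_frame = window
            yield prev_frame, current, next_frame
```

Because the current frame sits in the middle of the window, each triple becomes available only once the following frame has arrived, so any processing built on it lags the camera by one frame.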

[0071] Step 202: Convert the current user operation image, the previous frame image, and the next frame image to grayscale, and obtain the two-way grayscale differences between the current user operation image, the...
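Step 202 is truncated in this excerpt, so the sketch below completes it with a common three-frame difference formulation: binarize each of the two grayscale differences against a threshold, keep only pixels that changed in both directions, and copy those pixels from the current image as the foreground. The BT.601 grayscale weights, the threshold of 25, the logical AND, the RGB channel order, and the names `to_gray`, `three_frame_difference`, and `extract_foreground` are all assumptions for illustration, not details taken from the patent.

```python
import numpy as np

# Assumption: frames are H x W x 3 uint8 arrays in RGB channel order.
# (OpenCV delivers BGR; reverse the channels with frame[..., ::-1] first.)
BT601_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an RGB image to 8-bit grayscale using ITU-R BT.601 luma weights."""
    gray = rgb[..., :3].astype(np.float64) @ BT601_WEIGHTS
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)


def three_frame_difference(prev_rgb: np.ndarray, cur_rgb: np.ndarray,
                           next_rgb: np.ndarray, threshold: int = 25) -> np.ndarray:
    """Return a boolean H x W mask of pixels that changed in both directions.

    Assumption: the two-way differences are binarized with a fixed threshold
    and combined with a logical AND (a common three-frame difference scheme).
    """
    # int16 avoids uint8 wrap-around when subtracting.
    g_prev, g_cur, g_next = (to_gray(f).astype(np.int16)
                             for f in (prev_rgb, cur_rgb, next_rgb))
    diff_back = np.abs(g_cur - g_prev)  # current frame vs previous frame
    diff_fwd = np.abs(g_next - g_cur)   # next frame vs current frame
    return (diff_back > threshold) & (diff_fwd > threshold)


def extract_foreground(cur_rgb: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Keep the current image's pixels where the mask is set; zero elsewhere."""
    foreground = np.zeros_like(cur_rgb)
    foreground[mask] = cur_rgb[mask]
    return foreground
```

Requiring a change in both directions suppresses the "ghost" that a plain two-frame difference leaves at the position an object has just vacated: the backward difference fires at the old and current positions, the forward difference at the current and next positions, and only the current position survives the AND.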

