Entity-aware distantly supervised relation extraction method based on the PCNN model

A relation extraction and distant supervision technology, applied to neural learning methods, biological neural network models, instruments, and similar fields. It addresses problems in existing approaches, such as ignoring semantic information and not further exploring the different contributions of the three segments in PCNN.


Embodiment Construction

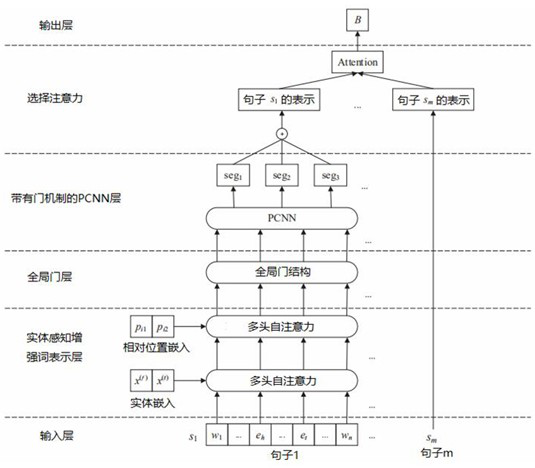

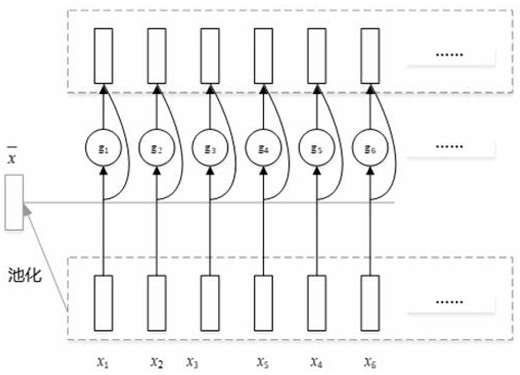

[0073] The distantly supervised relation extraction task can be stated briefly as follows: given a bag B = {s_1, s_2, ..., s_m} in which every sentence contains the same entity pair (head entity e_f and tail entity e_t), the goal of relation extraction is to predict the relation y between the two entities. Under this definition, the present invention performs distantly supervised relation extraction with EA-GPCNN, a novel gated piecewise convolutional neural network with entity-aware enhancement, as shown in Figure 1.
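The bag definition above can be sketched as a small data structure. This is only an illustration, not the patent's implementation: the class names `Sentence` and `Bag`, the helper `split_into_segments`, the example sentence, and the relation label are all hypothetical, and each entity is assumed to be a single token. The segment boundaries (each entity closes the segment it ends) are one common convention for the three PCNN segments, chosen here as an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Sentence:
    tokens: List[str]
    head_pos: int  # token index of the head entity (single-token entity assumed)
    tail_pos: int  # token index of the tail entity (single-token entity assumed)


@dataclass
class Bag:
    head: str                  # head entity shared by every sentence in the bag
    tail: str                  # tail entity shared by every sentence in the bag
    sentences: List[Sentence]  # s_1 ... s_m
    relation: str              # bag-level label y to be predicted


def split_into_segments(sentence: Sentence) -> Tuple[List[str], List[str], List[str]]:
    """Split a sentence into three segments around the two entity positions.

    Assumed convention: segment 1 ends at the first entity, segment 2 ends
    at the second entity, segment 3 is the remainder.
    """
    first, second = sorted((sentence.head_pos, sentence.tail_pos))
    tokens = sentence.tokens
    return tokens[: first + 1], tokens[first + 1 : second + 1], tokens[second + 1 :]


# Hypothetical one-sentence bag for illustration.
bag = Bag(
    head="Obama",
    tail="Hawaii",
    sentences=[Sentence("Obama was born in Hawaii .".split(), head_pos=0, tail_pos=4)],
    relation="place_of_birth",
)
segments = split_into_segments(bag.sentences[0])
```

In a PCNN, pooling is applied separately to the convolution outputs that fall in each of these three segments, which is why the split is defined by the entity positions rather than by fixed offsets.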

[0074] Specifically, the method can be summarized as follows:

[0075] S1. For each sentence in a given bag, the input layer uses Google's pre-trained word2vec word vectors to map every word in the sentence to a low-dimensional word embedding vector, yielding the input sequence;

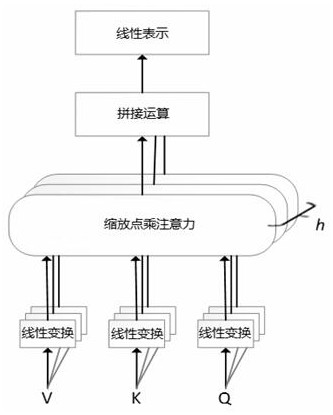
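Step S1 amounts to a table lookup from words to vectors. The sketch below uses a tiny hand-written table in place of the real pre-trained vectors; `TOY_VECTORS`, `EMBEDDING_DIM`, and `embed_sentence` are hypothetical names, the vector values are made up, and mapping unknown words to a zero vector is an assumption (the source does not say how out-of-vocabulary words are handled).

```python
from typing import Dict, List

EMBEDDING_DIM = 4  # toy size for illustration; real pre-trained vectors are much larger

# Hypothetical stand-in for a pre-trained word2vec table (values are made up).
TOY_VECTORS: Dict[str, List[float]] = {
    "obama": [0.1, 0.3, -0.2, 0.5],
    "born": [0.4, -0.1, 0.0, 0.2],
    "hawaii": [-0.3, 0.2, 0.6, 0.1],
}


def embed_sentence(
    tokens: List[str], vectors: Dict[str, List[float]], dim: int
) -> List[List[float]]:
    """Map each token to its embedding vector, producing the input sequence.

    Assumption: tokens are lower-cased before lookup and unknown tokens
    receive an all-zero vector.
    """
    unknown = [0.0] * dim
    return [list(vectors.get(token.lower(), unknown)) for token in tokens]


input_sequence = embed_sentence(
    "Obama was born in Hawaii".split(), TOY_VECTORS, EMBEDDING_DIM
)
```

The result has one vector per word, so a sentence of n words becomes an n-by-d sequence that the later layers consume.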

[0076] S2. The entity-aware enhanced word representation layer uses a multi-head self-attention mechanism to fuse word embeddings with head and tail entity embeddings and r...
