Point cloud collision detection method applied to robot grabbing scene

A collision detection and robotics technology in the field of robot vision that addresses problems such as unstable grasping.

Examples

Embodiment 1

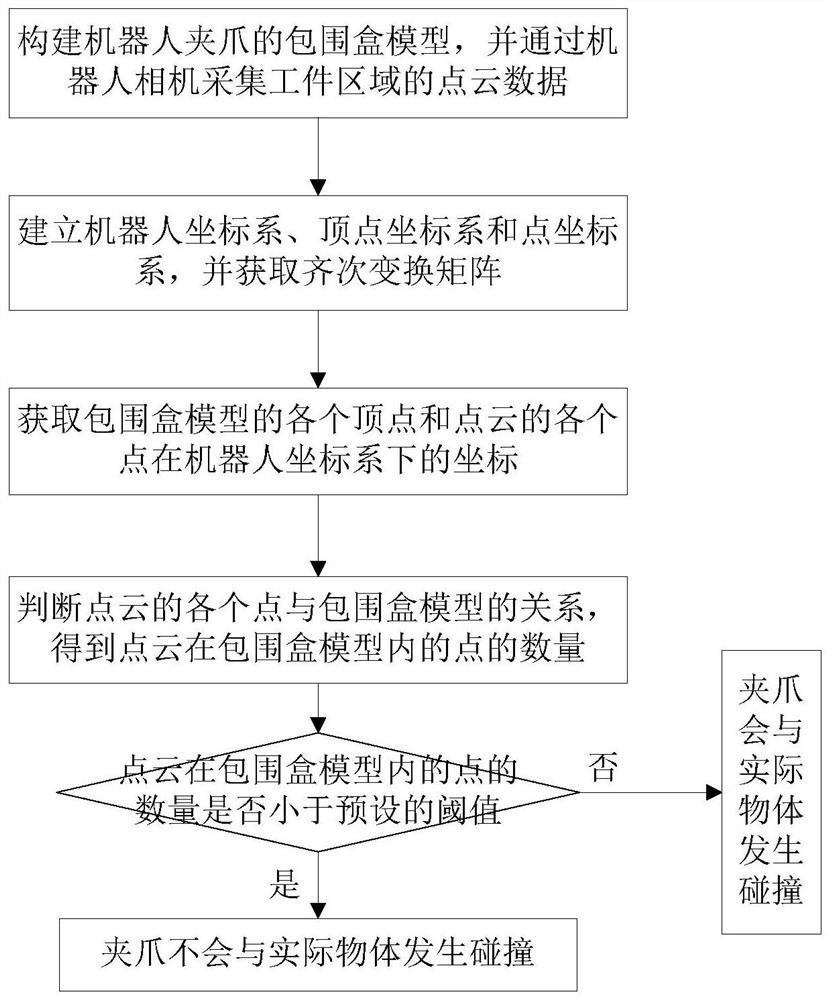

[0071] As shown in Figure 1, a point cloud collision detection method for a robot grasping scene includes the following steps:

[0072] S1: Construct a bounding box model of the robot gripper, and collect point cloud data of the workpiece area with the robot's camera;

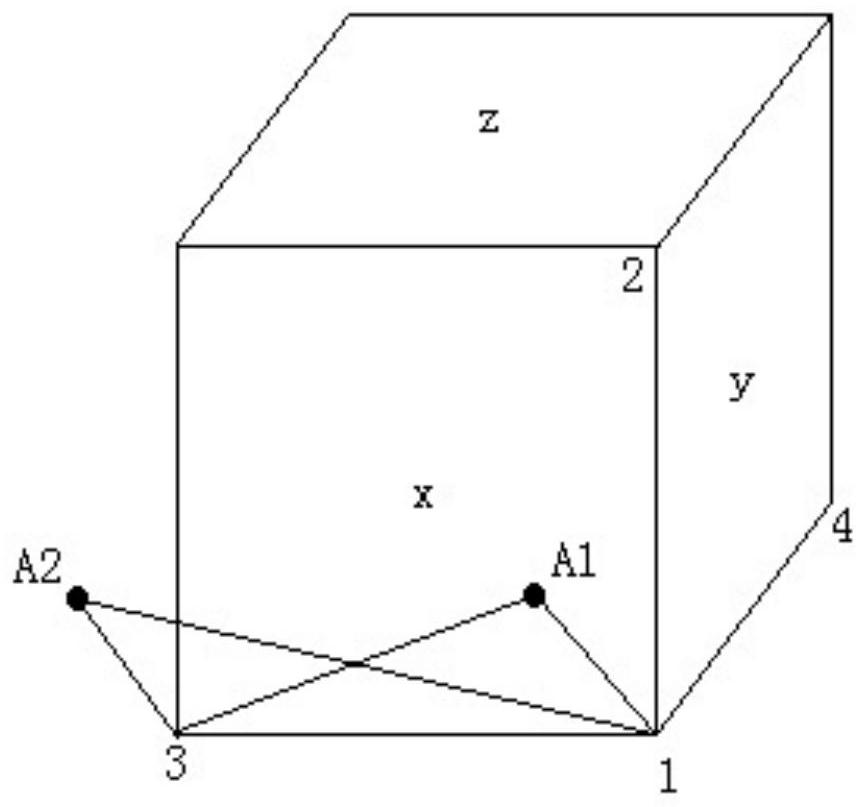

[0073] S2: Establish a robot coordinate system, a vertex coordinate system for each vertex of the bounding box model, and a point coordinate system for each point of the point cloud; then obtain the homogeneous transformation matrix of each vertex coordinate system and each point coordinate system relative to the robot coordinate system;

[0074] S3: From these homogeneous transformation matrices, obtain the coordinates of each vertex of the bounding box model and each point of the point cloud in the robot coordinate system;

[0075] S4: Based on the coordinates of each vertex of the bounding box model and each point of the point cloud in the robot coordinate system, judge the relationship between eac...
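Steps S2 to S4 can be illustrated with a minimal sketch. Because the source is truncated at S4, the relationship being judged is not stated; the sketch assumes it is whether each point lies inside the gripper's bounding box (a rectangular cuboid), which is a common collision criterion. It further assumes that the box is described by a hypothetical pose `T_robot_box` plus half-extents `half`, that each point coordinate system is a pure translation in the camera frame, and that the camera-to-robot transform `T_robot_camera` is already known (for example from hand-eye calibration). All function names are illustrative, and this is not presented as the patent's exact procedure.

```python
import math

# 4x4 homogeneous transformation matrices are stored as row-major nested lists.

def matmul4(a, b):
    """Compose two homogeneous transforms: returns a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def make_transform(rz_deg, t):
    """Hypothetical helper: rotation about z by rz_deg degrees, then translation t."""
    c, s = math.cos(math.radians(rz_deg)), math.sin(math.radians(rz_deg))
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]

def origin_of(T):
    """S3: a frame's origin in the parent frame is the translation column of T."""
    return (T[0][3], T[1][3], T[2][3])

def box_vertex_frames(T_robot_box, half):
    """S2 (assumed form): one frame per box corner, expressed in the robot frame.
    Bit a of index i selects +half[a] (bit set) or -half[a] (bit clear) on axis a,
    so vertices 1, 2 and 4 are the neighbours of vertex 0 along x, y and z."""
    frames = []
    for i in range(8):
        offset = [half[a] if (i >> a) & 1 else -half[a] for a in range(3)]
        frames.append(matmul4(T_robot_box, make_transform(0.0, offset)))
    return frames

def inside_box(p, v, eps=1e-9):
    """S4 (assumed relationship): True if point p lies inside or on the cuboid
    whose robot-frame vertices v follow the ordering of box_vertex_frames."""
    d = [p[k] - v[0][k] for k in range(3)]
    for j in (1, 2, 4):  # edges from vertex 0 along the box's x, y and z axes
        e = [v[j][k] - v[0][k] for k in range(3)]
        proj = sum(d[k] * e[k] for k in range(3))
        if proj < -eps or proj > sum(x * x for x in e) + eps:
            return False
    return True

def collides(T_robot_box, half, T_robot_camera, cloud_in_camera):
    """True if any camera-frame point falls inside the gripper's bounding box."""
    vertices = [origin_of(T) for T in box_vertex_frames(T_robot_box, half)]  # S2 + S3
    for p_cam in cloud_in_camera:
        # S2: point frame in the robot frame = camera pose composed with point offset.
        T_robot_point = matmul4(T_robot_camera, make_transform(0.0, p_cam))
        if inside_box(origin_of(T_robot_point), vertices):  # S3 + S4
            return True
    return False

if __name__ == "__main__":
    T_box = make_transform(30.0, (0.5, 0.0, 0.2))  # hypothetical gripper box pose
    T_cam = make_transform(0.0, (0.5, 0.0, 1.0))   # hypothetical camera pose
    half = (0.05, 0.02, 0.08)
    print(collides(T_box, half, T_cam, [(0.0, 0.0, -0.8)]))  # point at box centre
    print(collides(T_box, half, T_cam, [(0.3, 0.0, -0.8)]))  # point well outside
```

The projection test in `inside_box` is exact only because the box edges are mutually orthogonal; a point is inside when its offset from vertex 0, projected onto each edge, falls between 0 and the squared edge length. In practice a candidate grasp would be rejected whenever `collides` returns `True`.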

© 2025 PatSnap. All rights reserved.