652 results about "Image feed-back" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

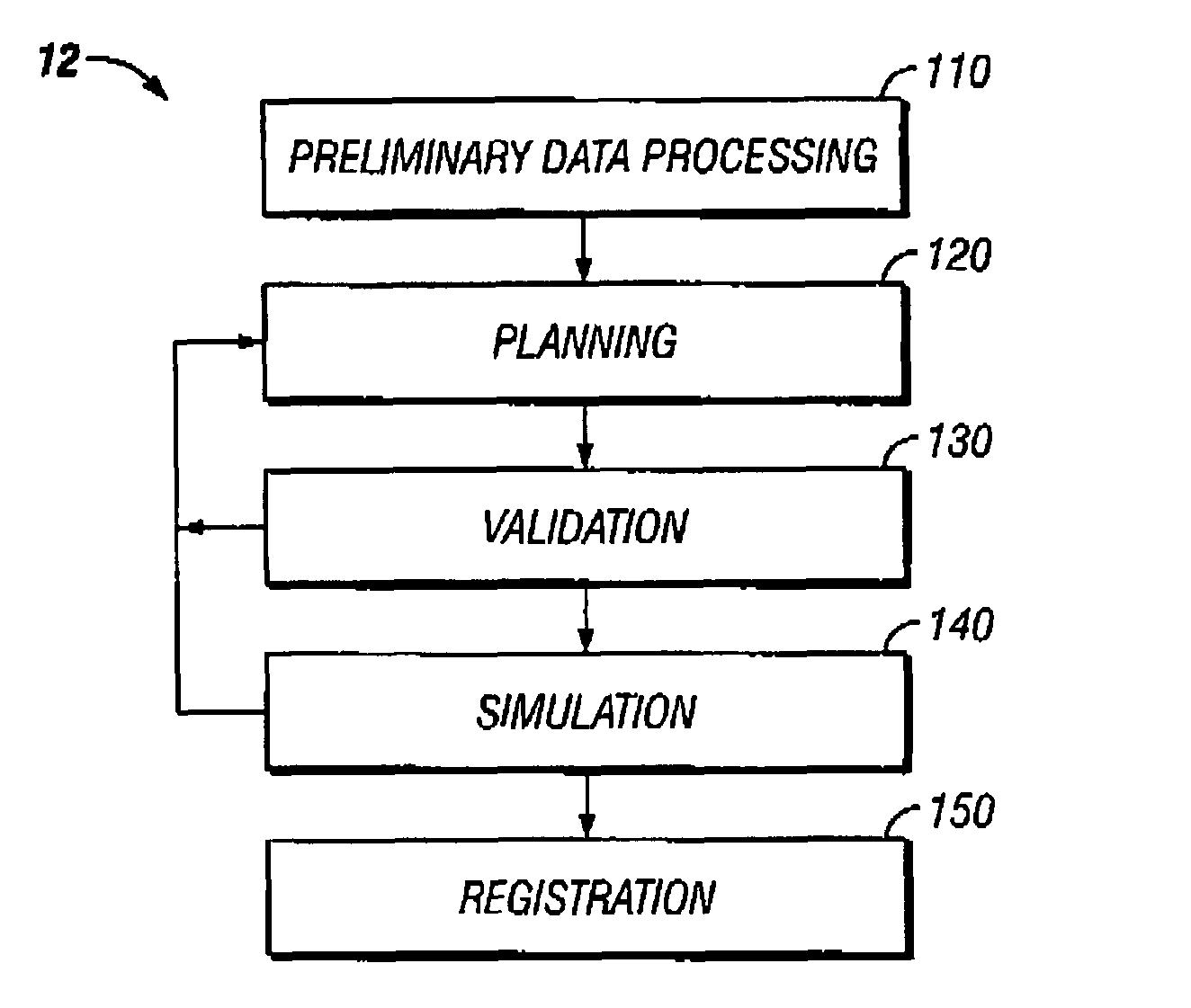

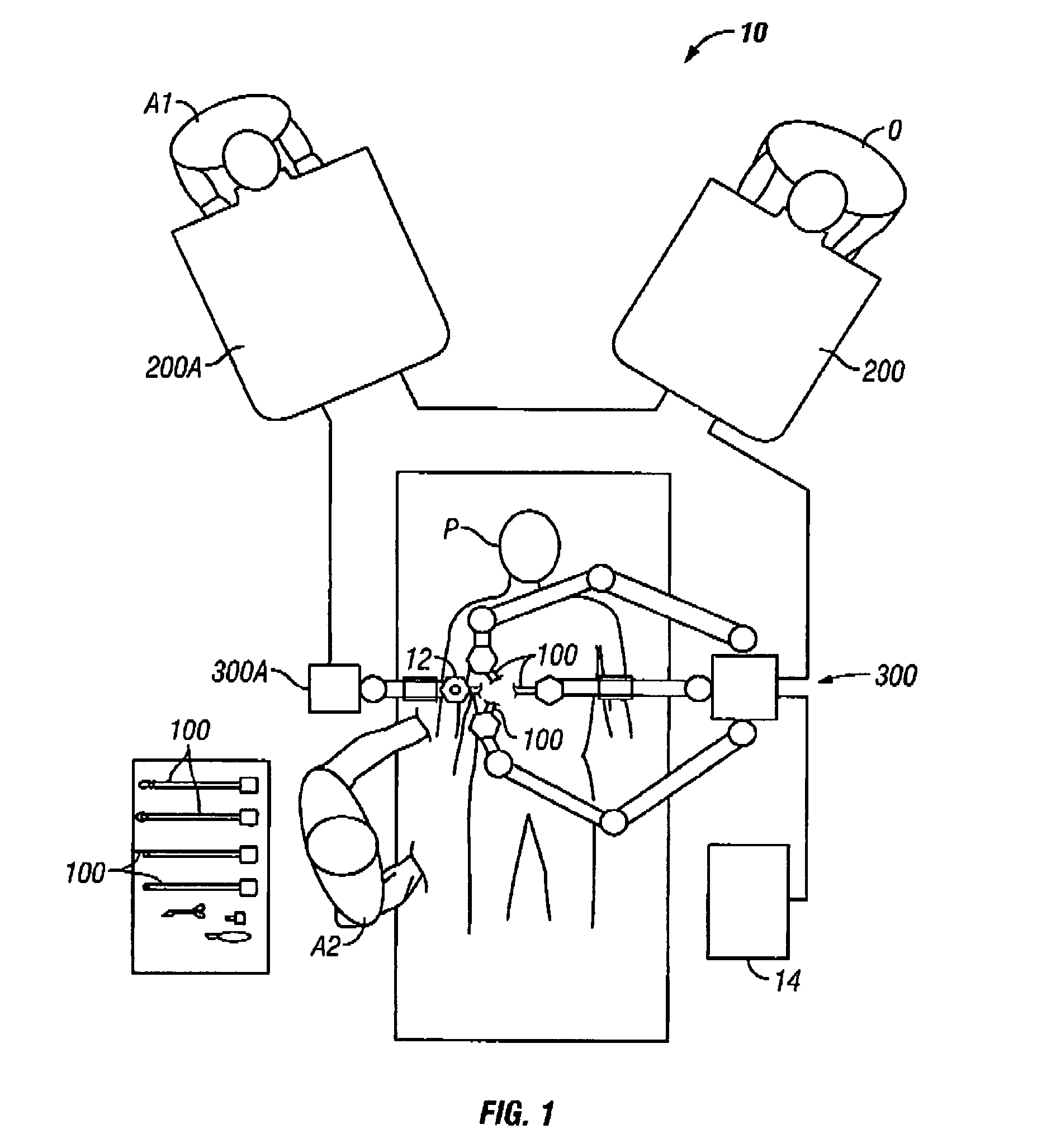

Methods and apparatus for surgical planning

Active | US7607440B2 | Easy to plan | Precise positioning | Programme control | Programme-controlled manipulator | Surgical site | Engineering

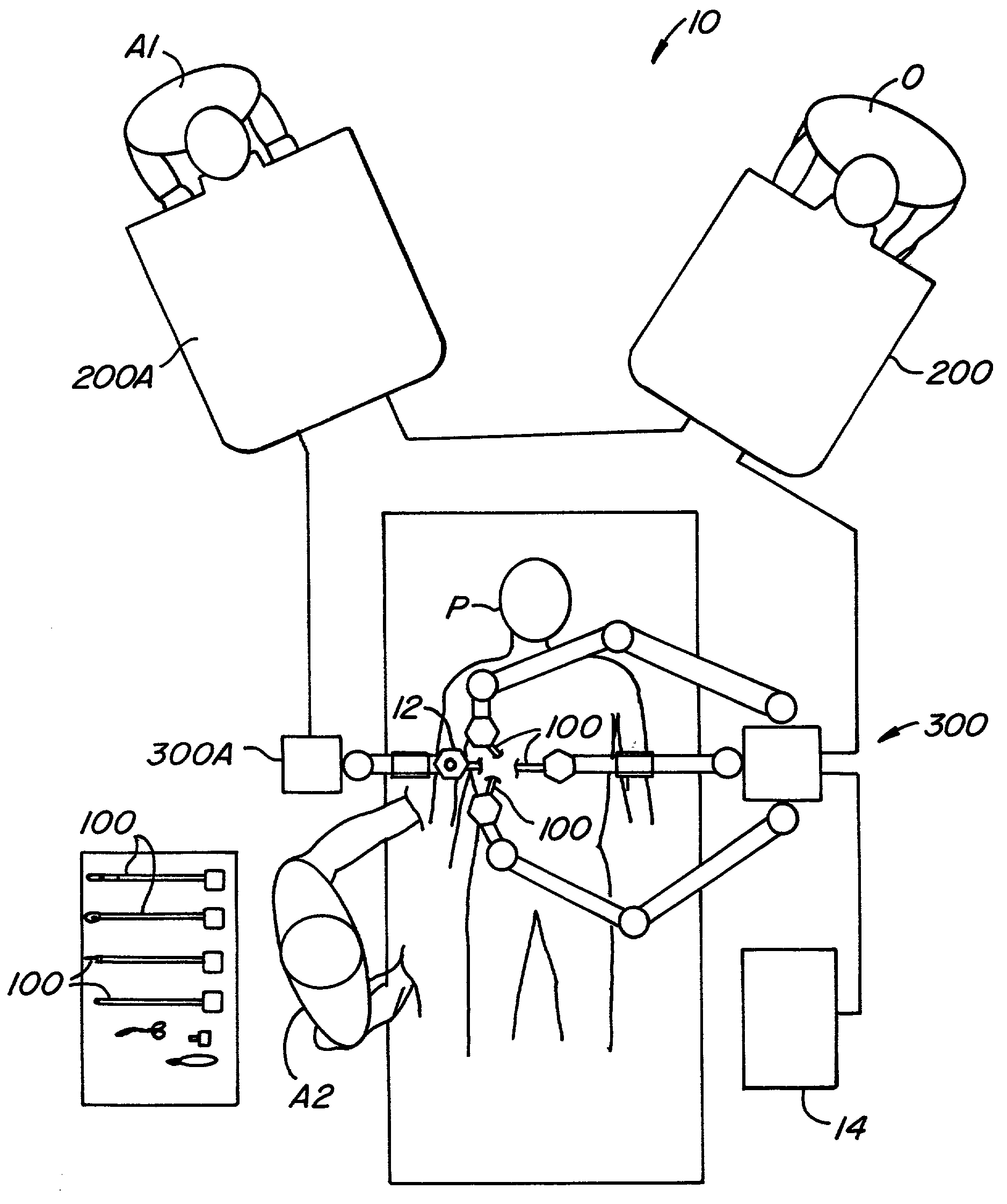

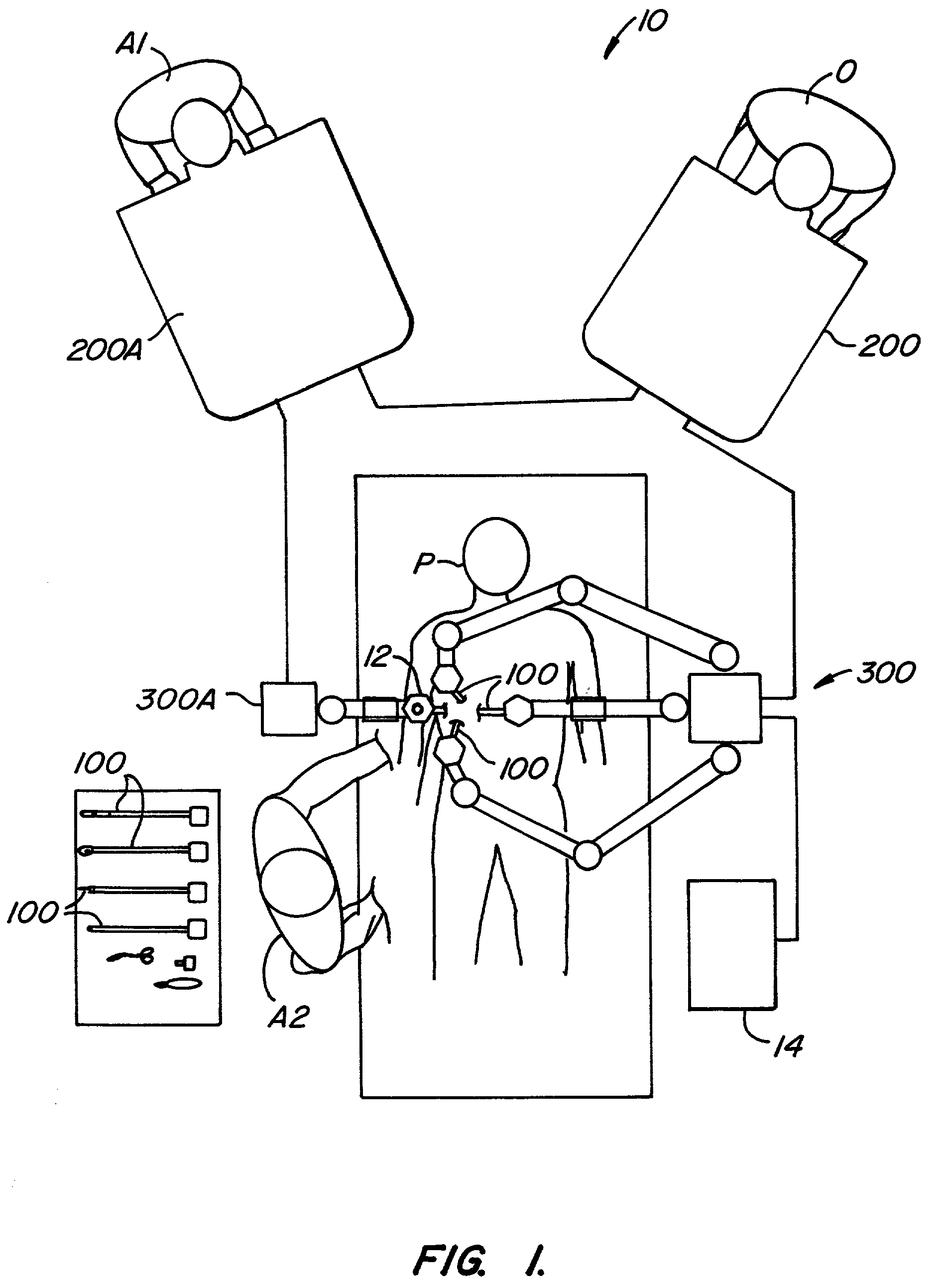

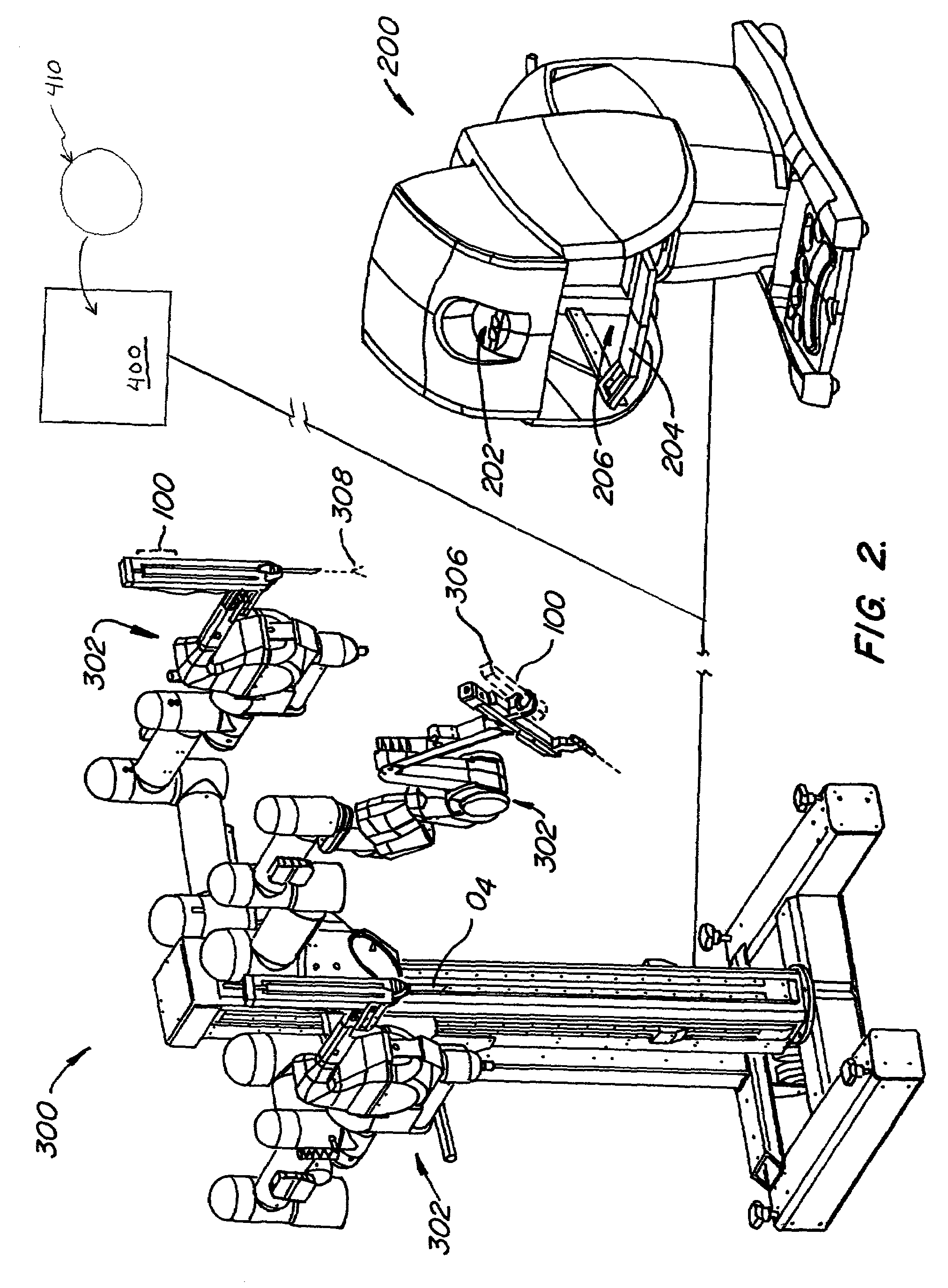

Methods and apparatus for enhancing surgical planning provide enhanced planning of entry port placement and / or robot position for laparoscopic, robotic, and other minimally invasive surgery. Various embodiments may be used in robotic surgery systems to identify advantageous entry ports for multiple robotic surgical tools into a patient to access a surgical site. Generally, data such as imaging data is processed and used to create a model of a surgical site, which can then be used to select advantageous entry port sites for two or more surgical tools based on multiple criteria. Advantageous robot positioning may also be determined, based on the entry port locations and other factors. Validation and simulation may then be provided to ensure feasibility of the selected port placements and / or robot positions. Such methods, apparatus, and systems may also be used in non-surgical contexts, such as for robotic port placement in munitions diffusion or hazardous waste handling.

Owner:INTUITIVE SURGICAL OPERATIONS INC +1

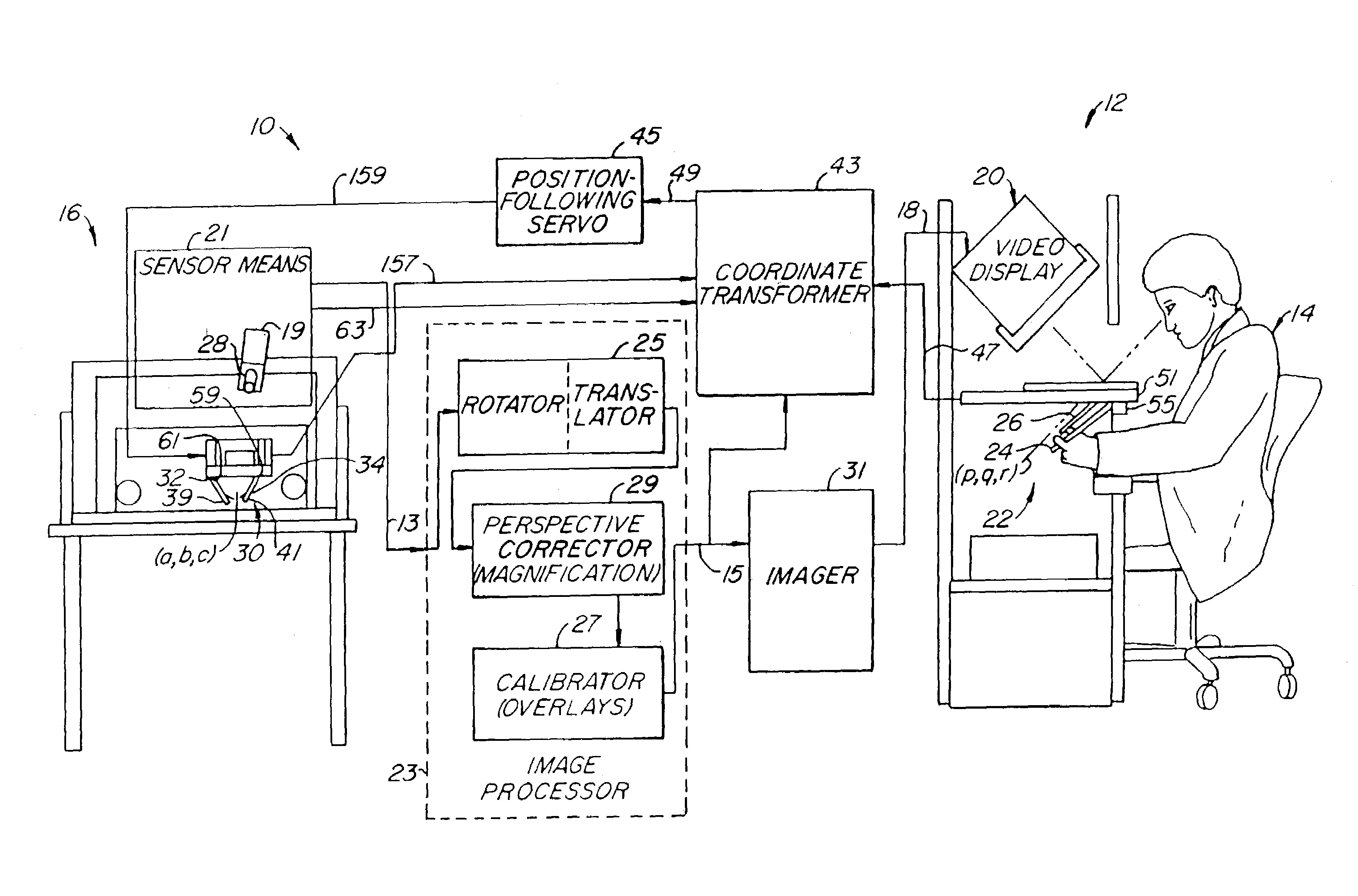

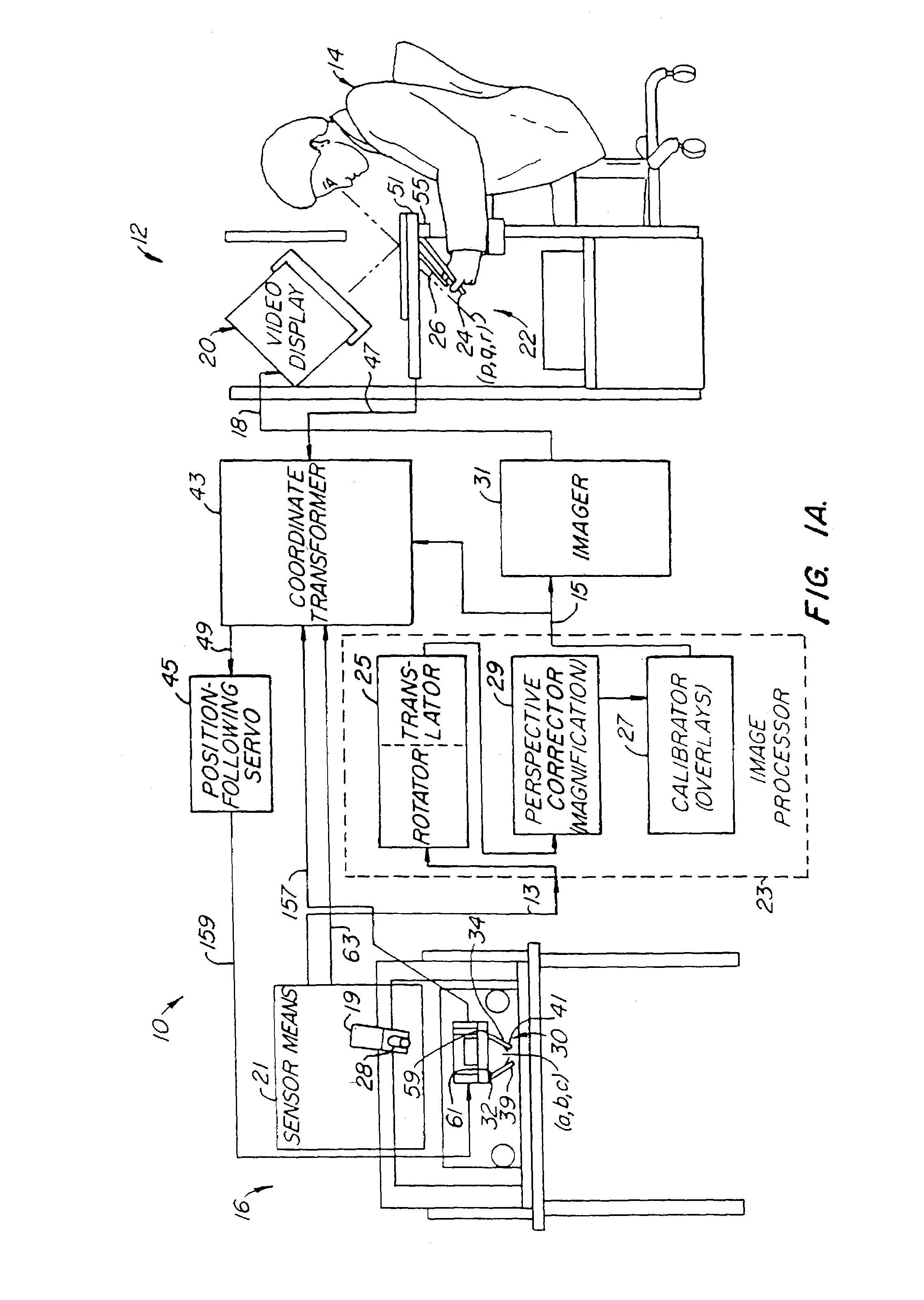

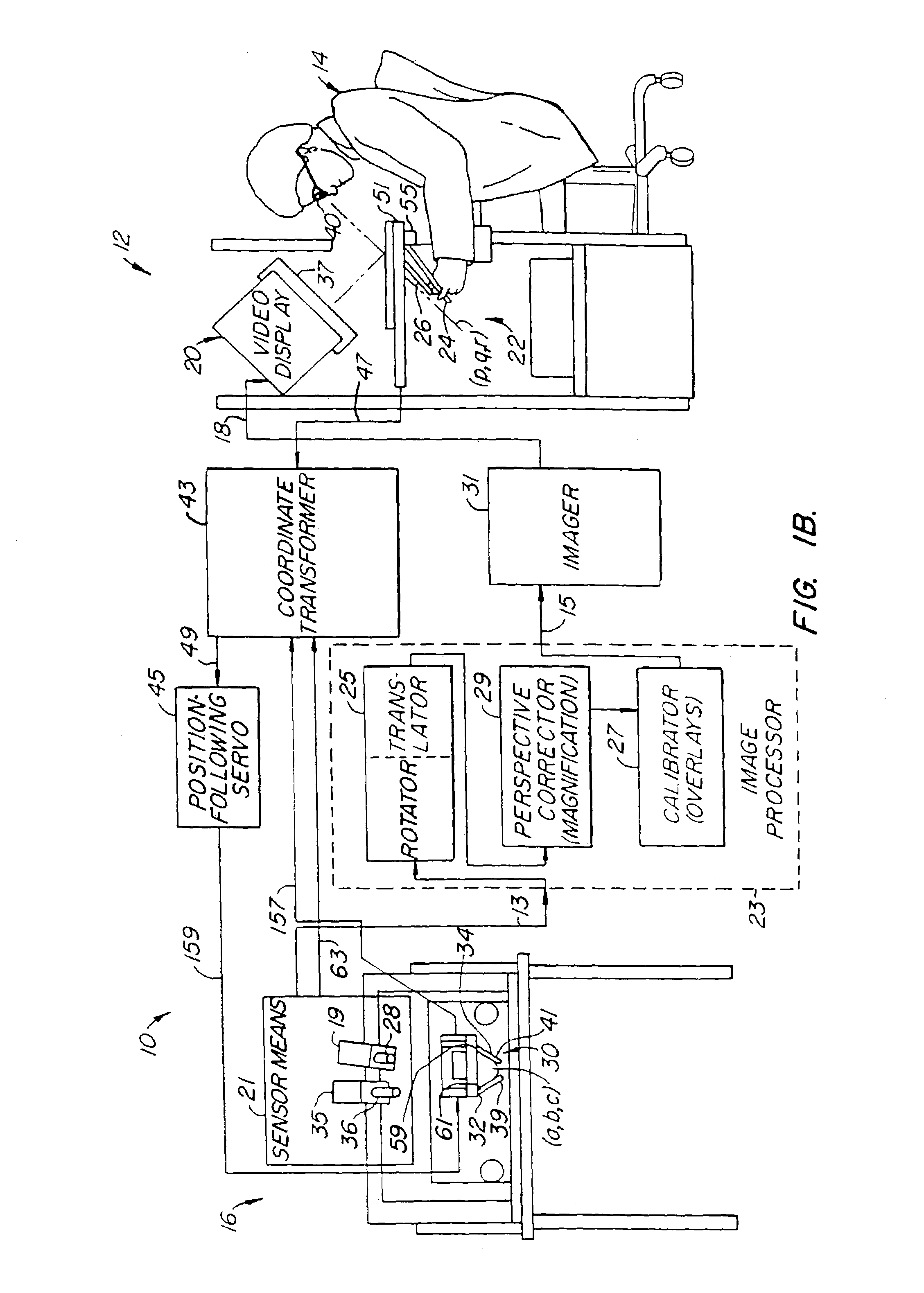

Method and apparatus for transforming coordinate systems in a telemanipulation system

In a telemanipulation system for manipulating objects located in a workspace at a remote worksite by an operator from an operator's station, such as in a remote surgical system, the remote worksite having a manipulator with an end effector for manipulating an object at the workspace, such as a body cavity, a controller including a hand control at the control operator's station for remote control of the manipulator, an image capture device, such as a camera, and image output device for reproducing a viewable real-time image, the improvement wherein a position sensor associated with the image capture device senses position relative to the end effector and a processor transforms the viewable real-time image into a perspective image with correlated manipulation of the end effector by the hand controller such that the operator can manipulate the end effector and the manipulator as if viewing the workspace in true presence. Image transformation according to the invention includes translation, rotation and perspective correction.

Owner:SRI INTERNATIONAL

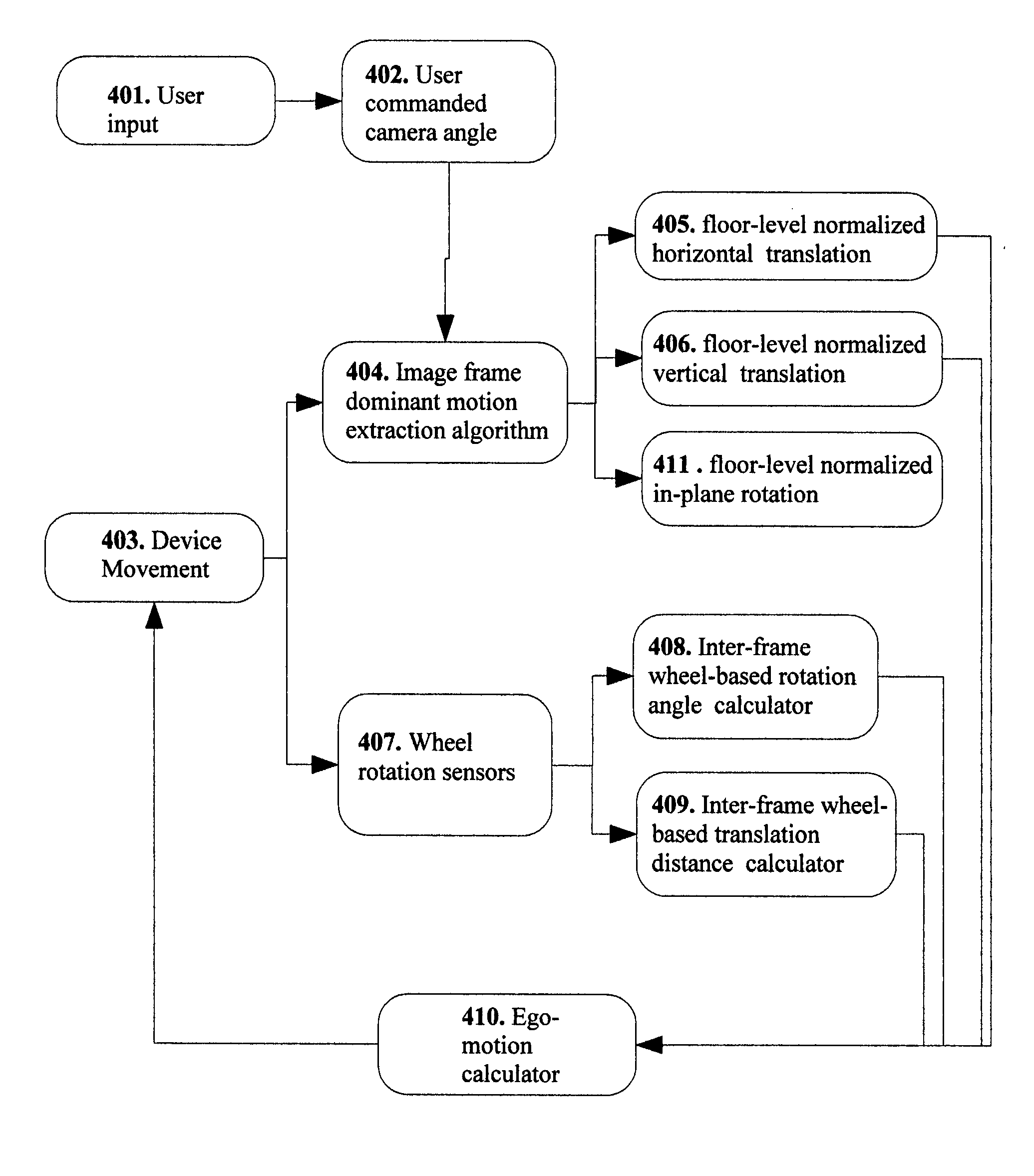

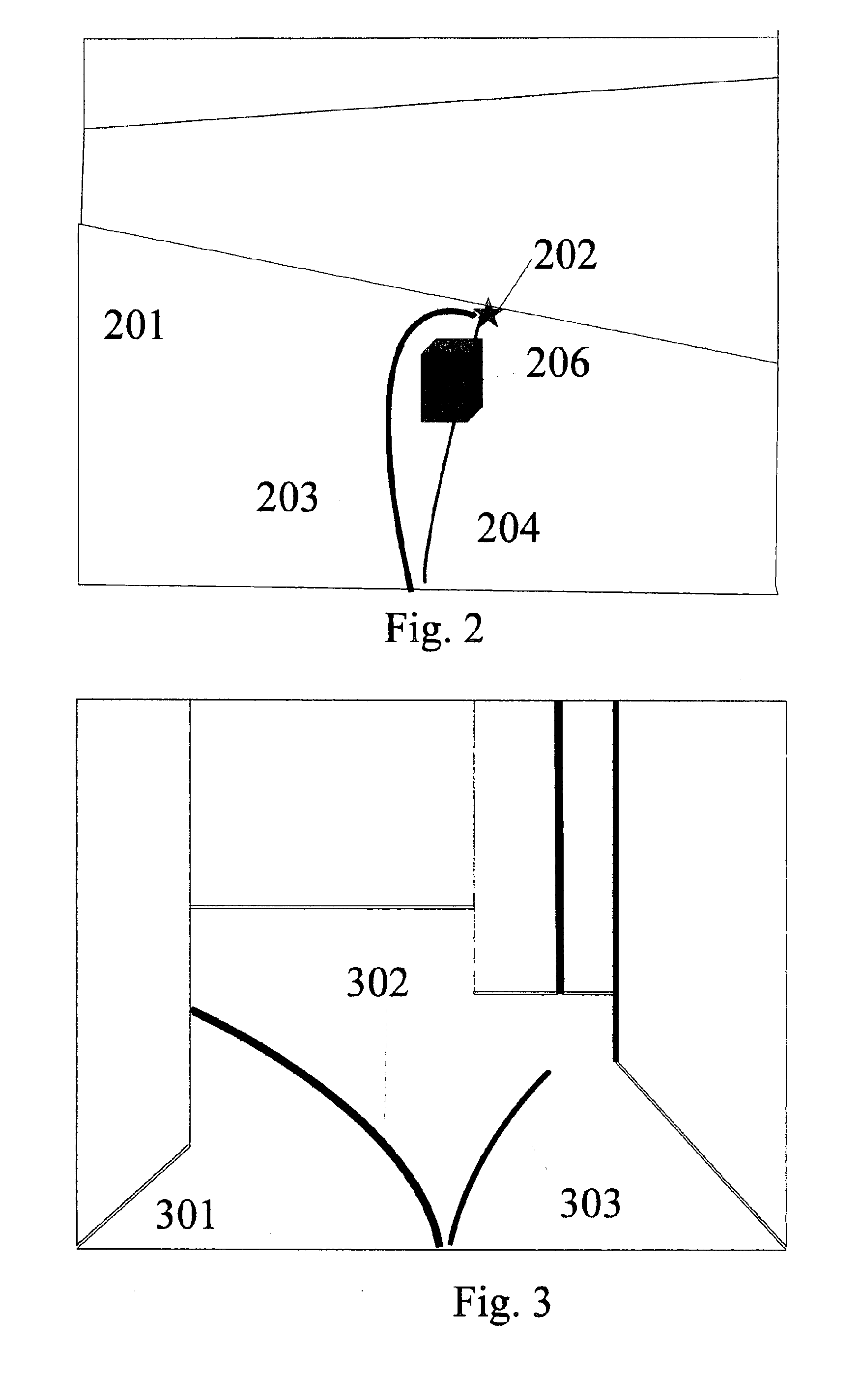

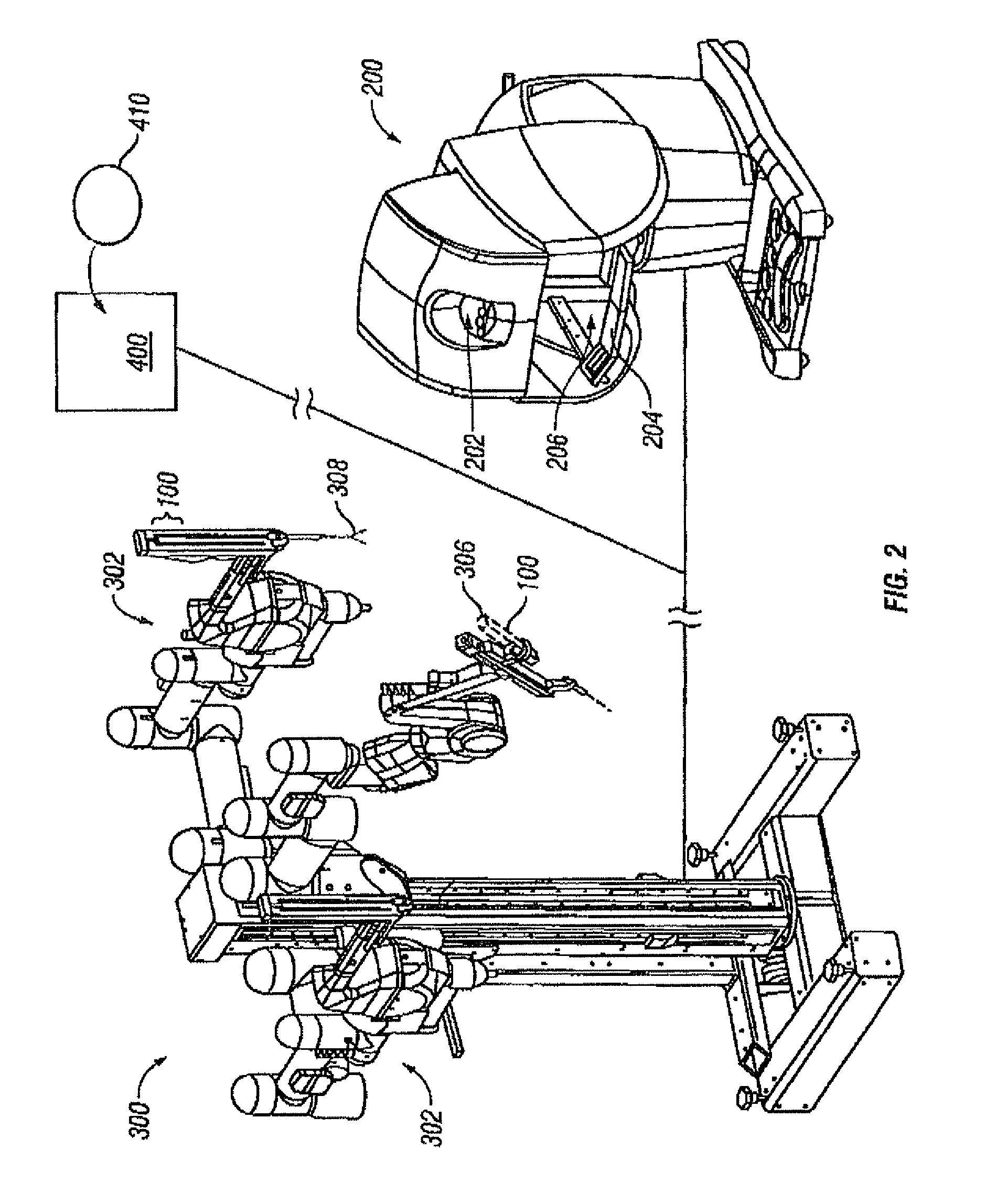

Method and apparatus for path planning, selection, and visualization

Inactive | US20100241289A1 | Suffer from effect | Keep moving | Programme control | Programme-controlled manipulator | Graphics | Three-dimensional space

New and improved methods and apparatus for robotic path planning, selection, and visualization are described. A path spline visually represents the current trajectory of the robot through a three-dimensional space such as a room. By altering a graphical representation of the trajectory (the path spline), an operator can visualize the path the robot will take and is freed from real-time control of the robot. Control of the robot is accomplished by periodically updating the path spline such that the newly updated spline represents the new desired path for the robot. Also, a sensor that may be located on the robot senses the presence of boundaries (obstacles) in the current environment and generates a path that circumnavigates the boundaries while still maintaining motion in the general direction selected by the operator. The mathematical form of the path that circumnavigates the boundaries may be a spline.

Owner:SANDBERG ROY

Methods and apparatus for surgical planning

Active | US8170716B2 | Easy to plan | Precise positioning | Programme control | Programme-controlled manipulator | Surgical site | Engineering

Methods and apparatus for enhancing surgical planning provide enhanced planning of entry port placement and / or robot position for laparoscopic, robotic, and other minimally invasive surgery. Various embodiments may be used in robotic surgery systems to identify advantageous entry ports for multiple robotic surgical tools into a patient to access a surgical site. Generally, data such as imaging data is processed and used to create a model of a surgical site, which can then be used to select advantageous entry port sites for two or more surgical tools based on multiple criteria. Advantageous robot positioning may also be determined, based on the entry port locations and other factors. Validation and simulation may then be provided to ensure feasibility of the selected port placements and / or robot positions. Such methods, apparatus and systems may also be used in non-surgical contexts, such as for robotic port placement in munitions diffusion or hazardous waste handling.

Owner:INTUITIVE SURGICAL OPERATIONS INC +1

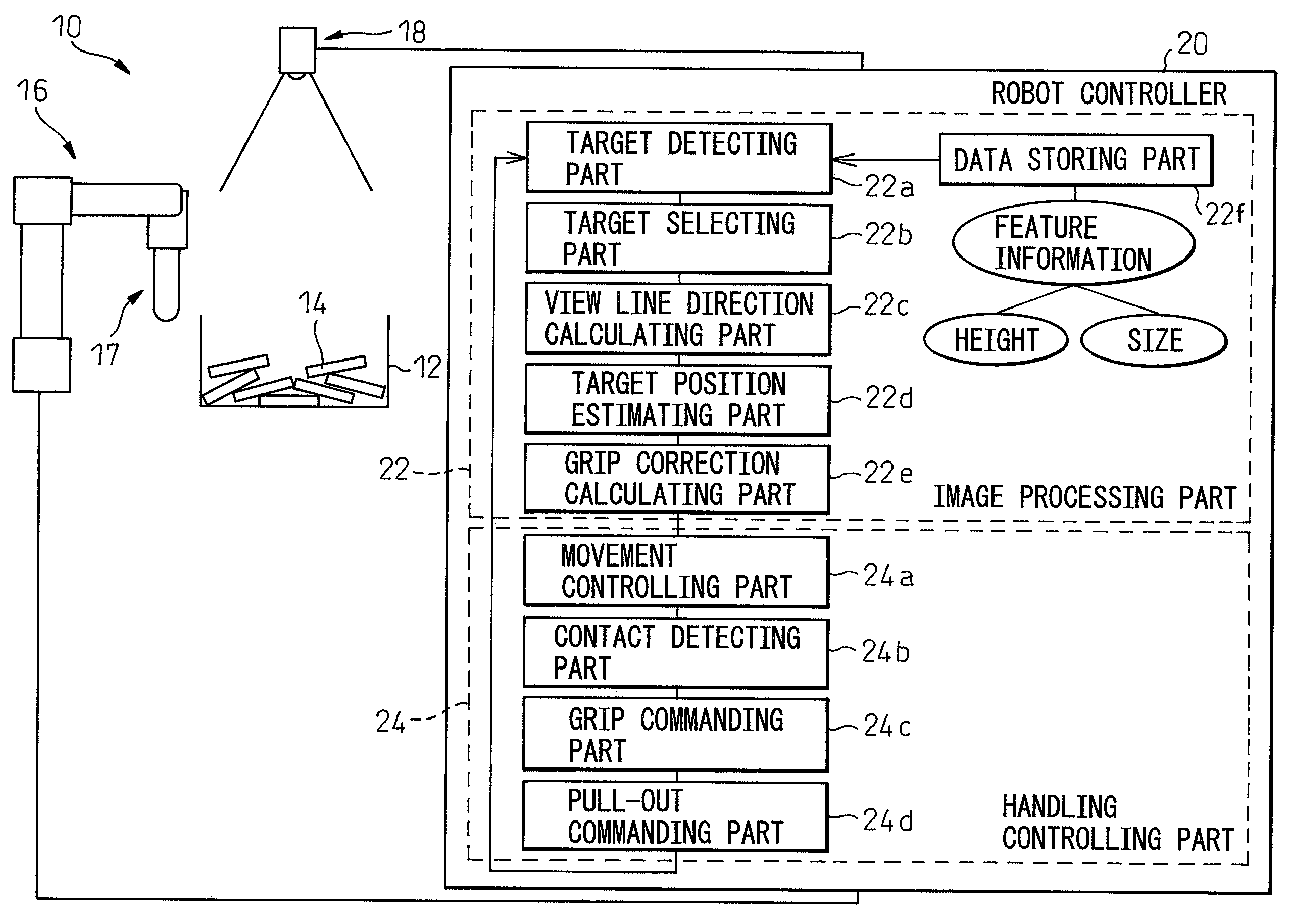

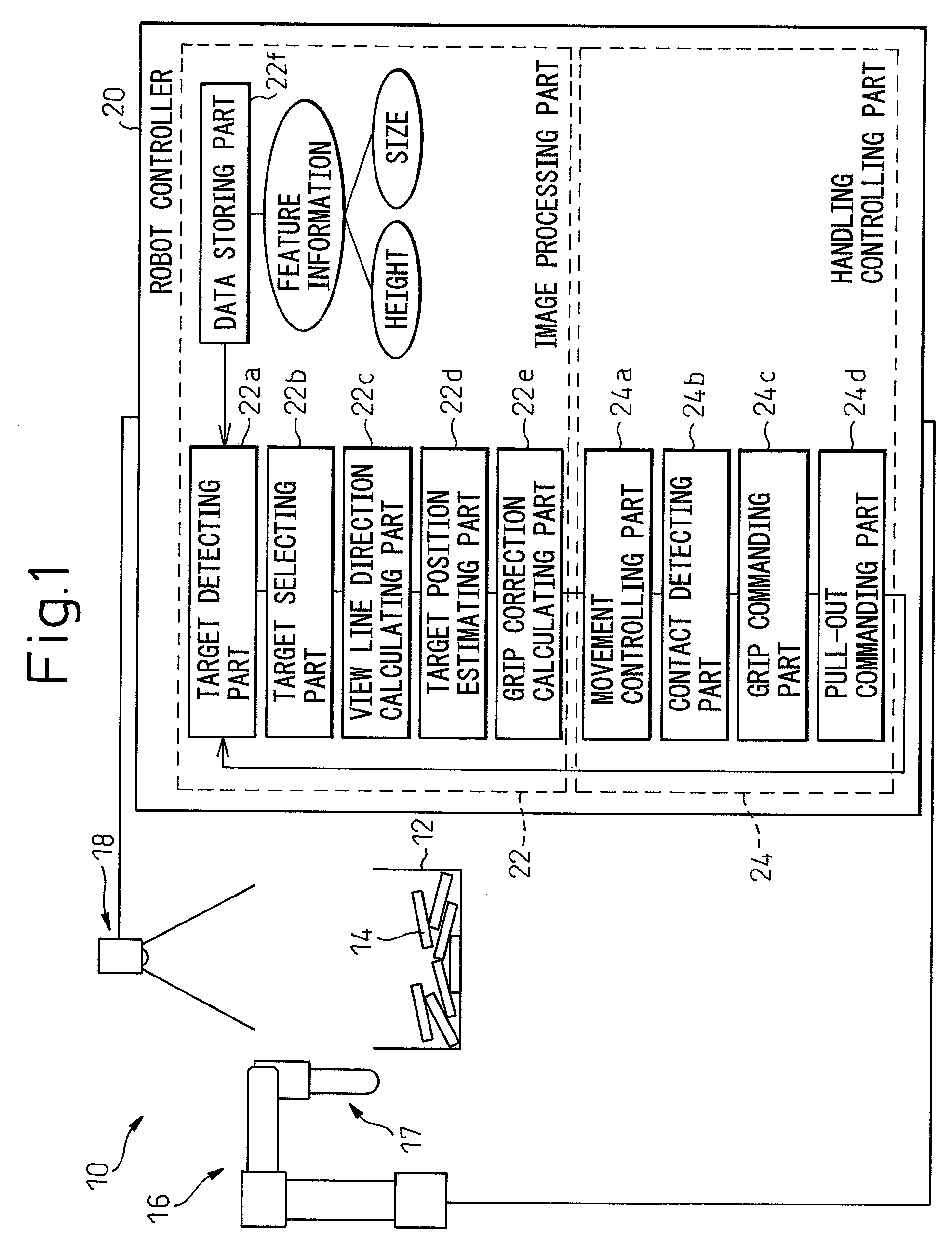

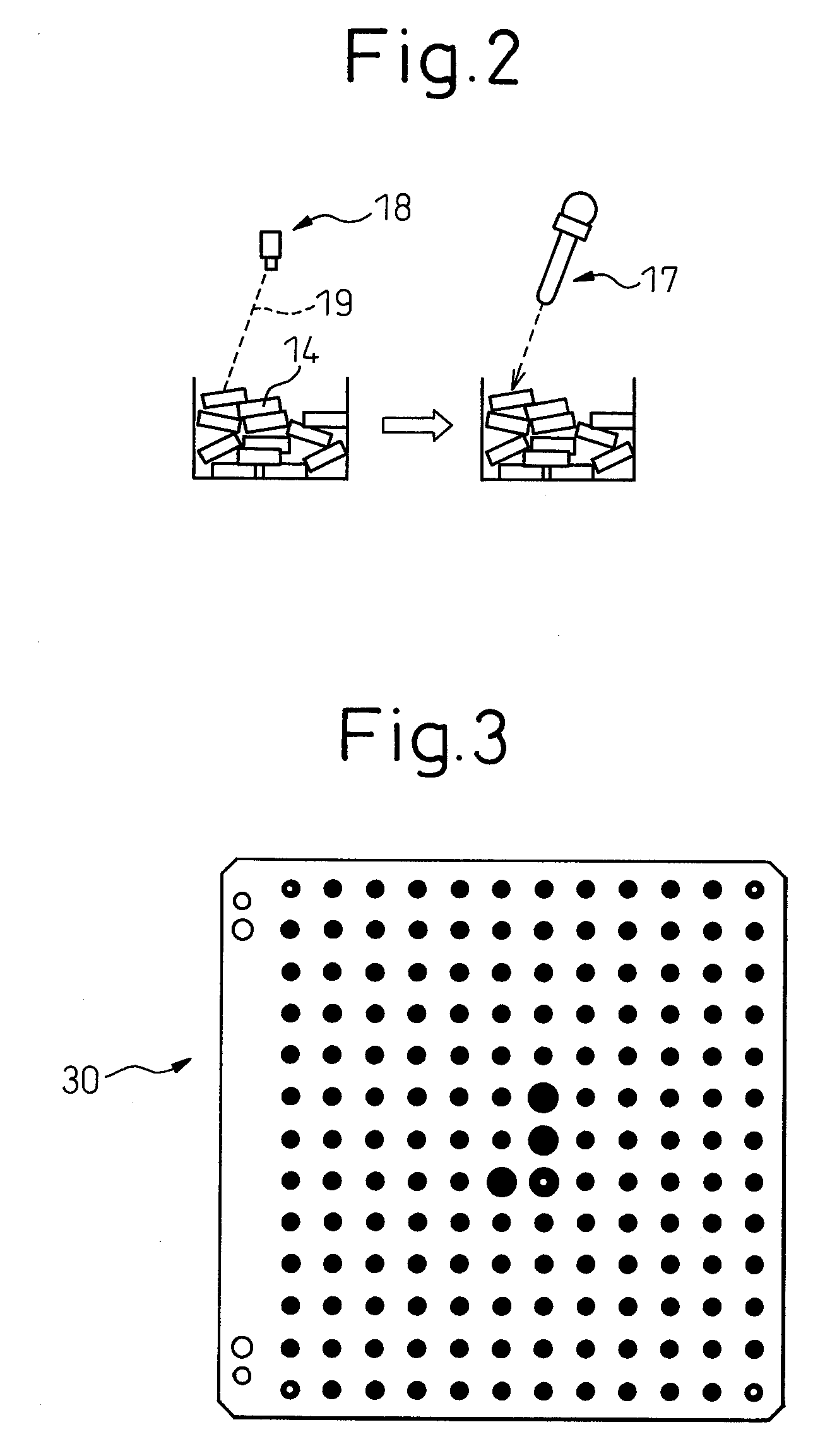

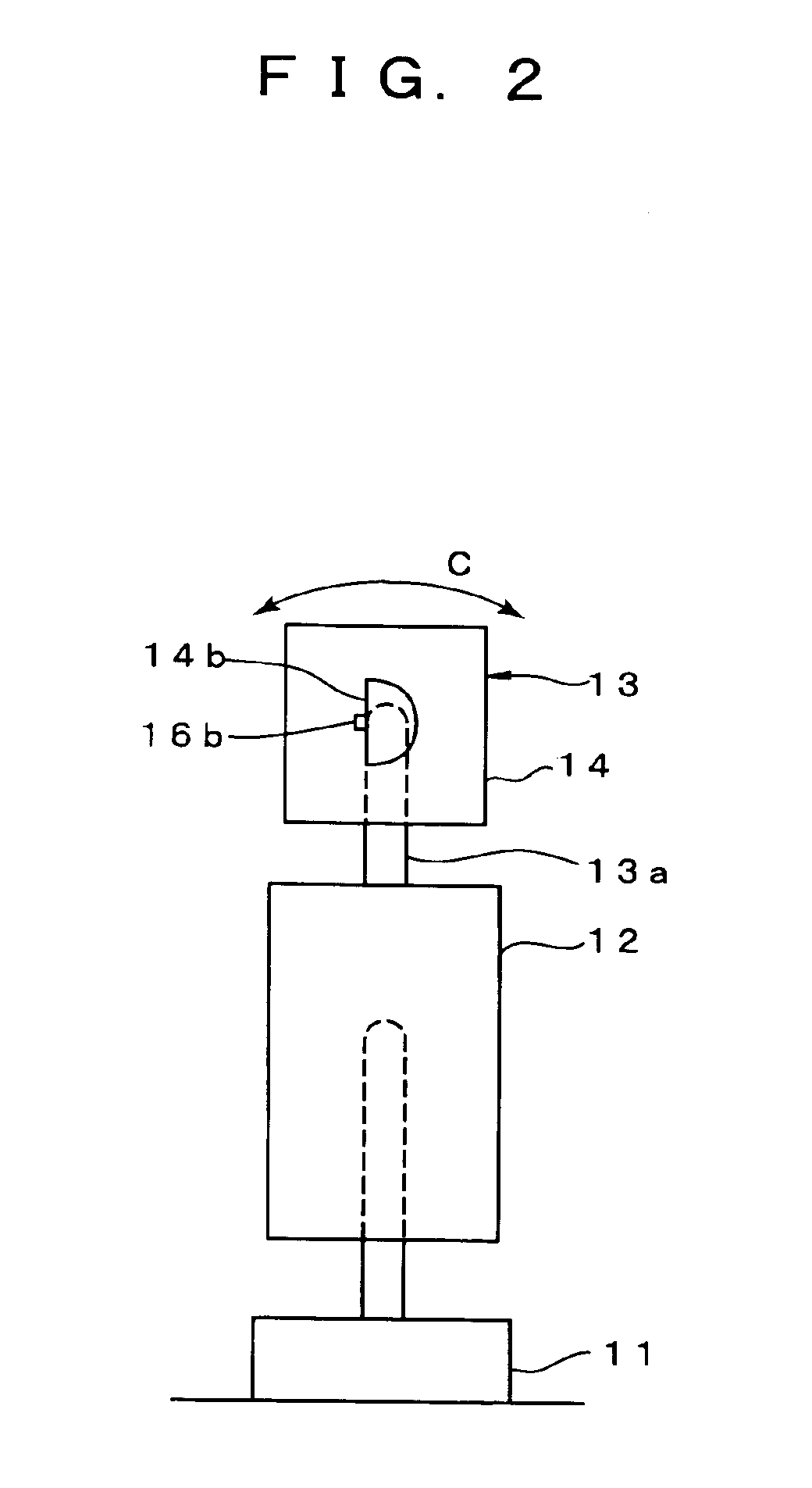

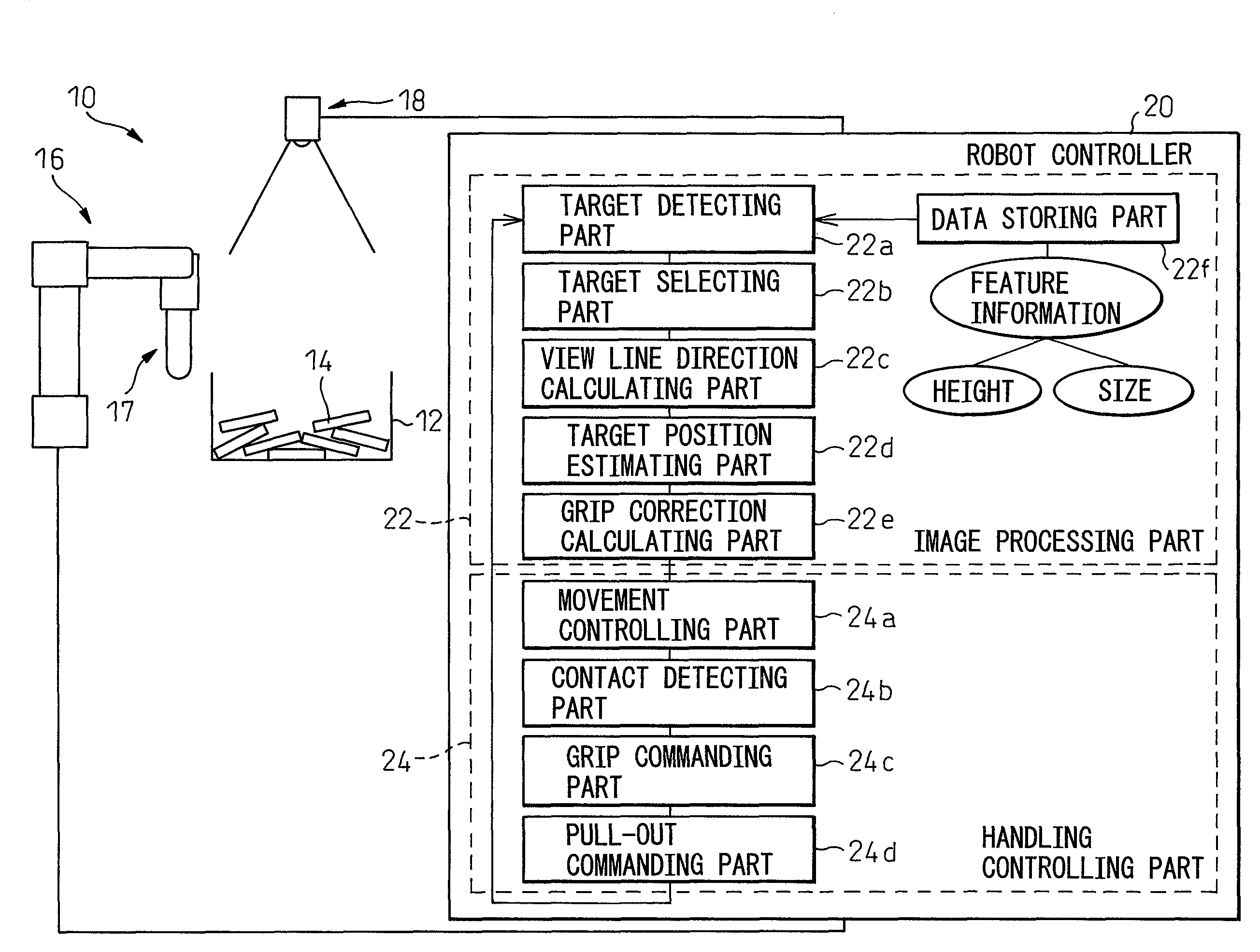

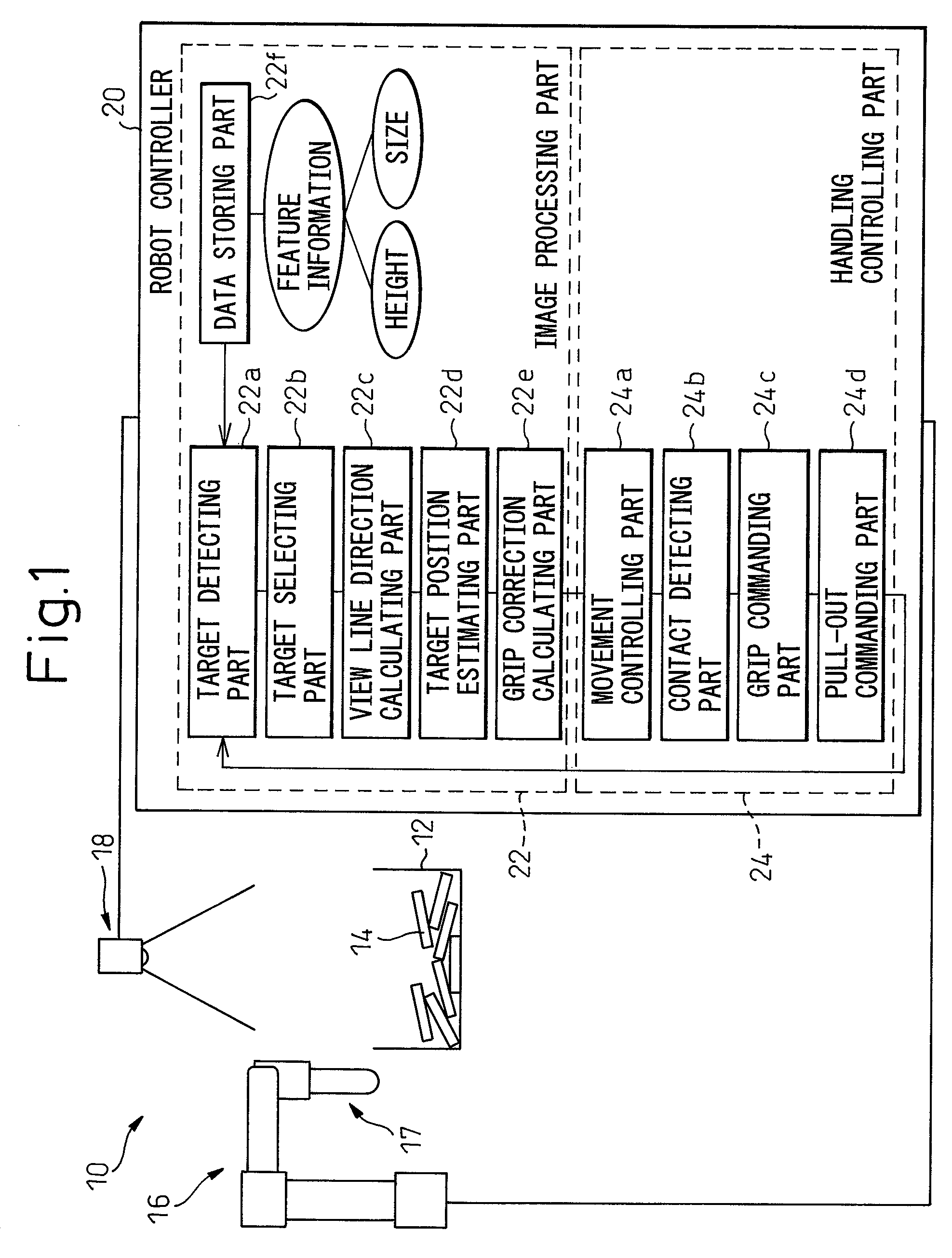

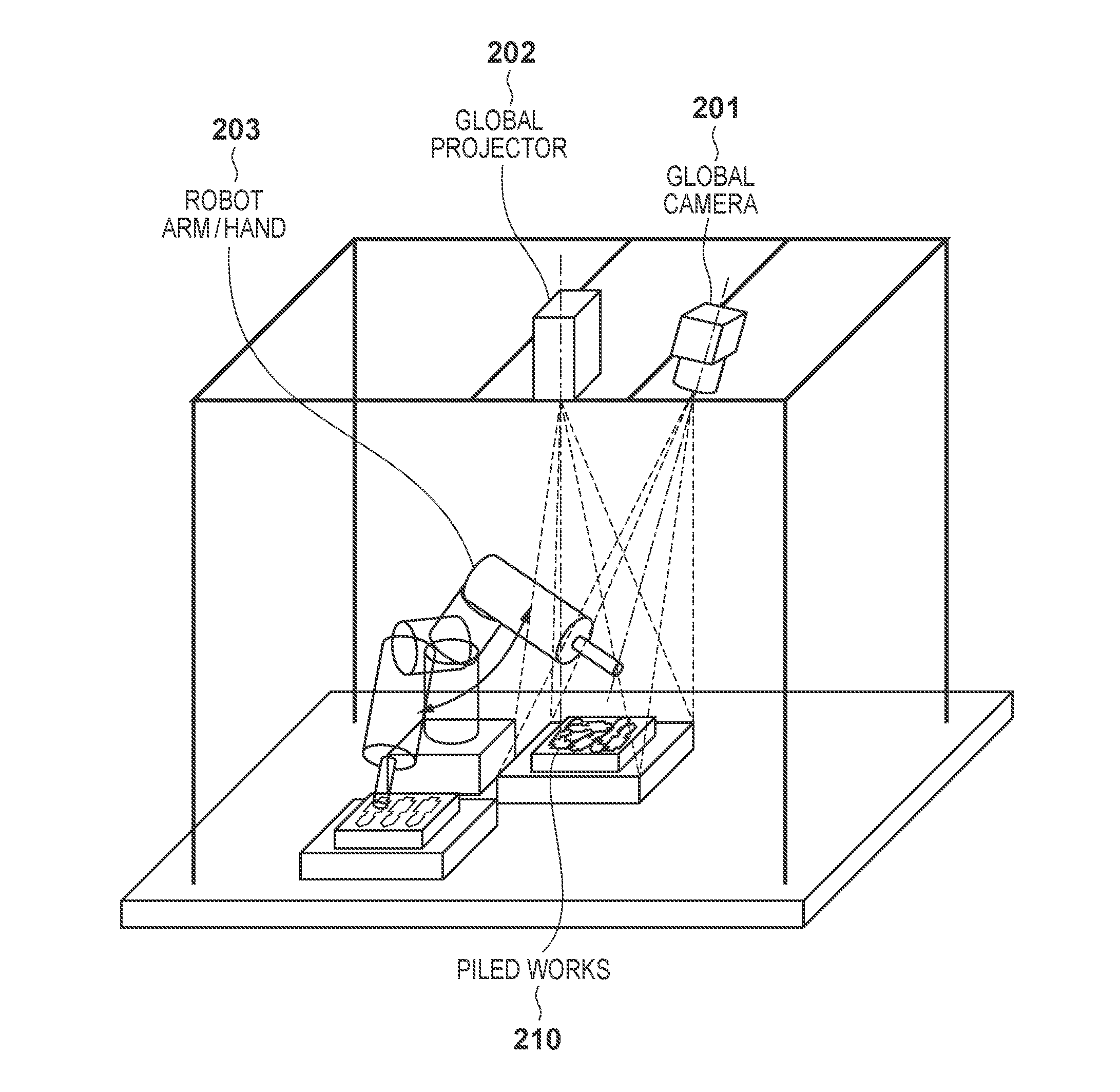

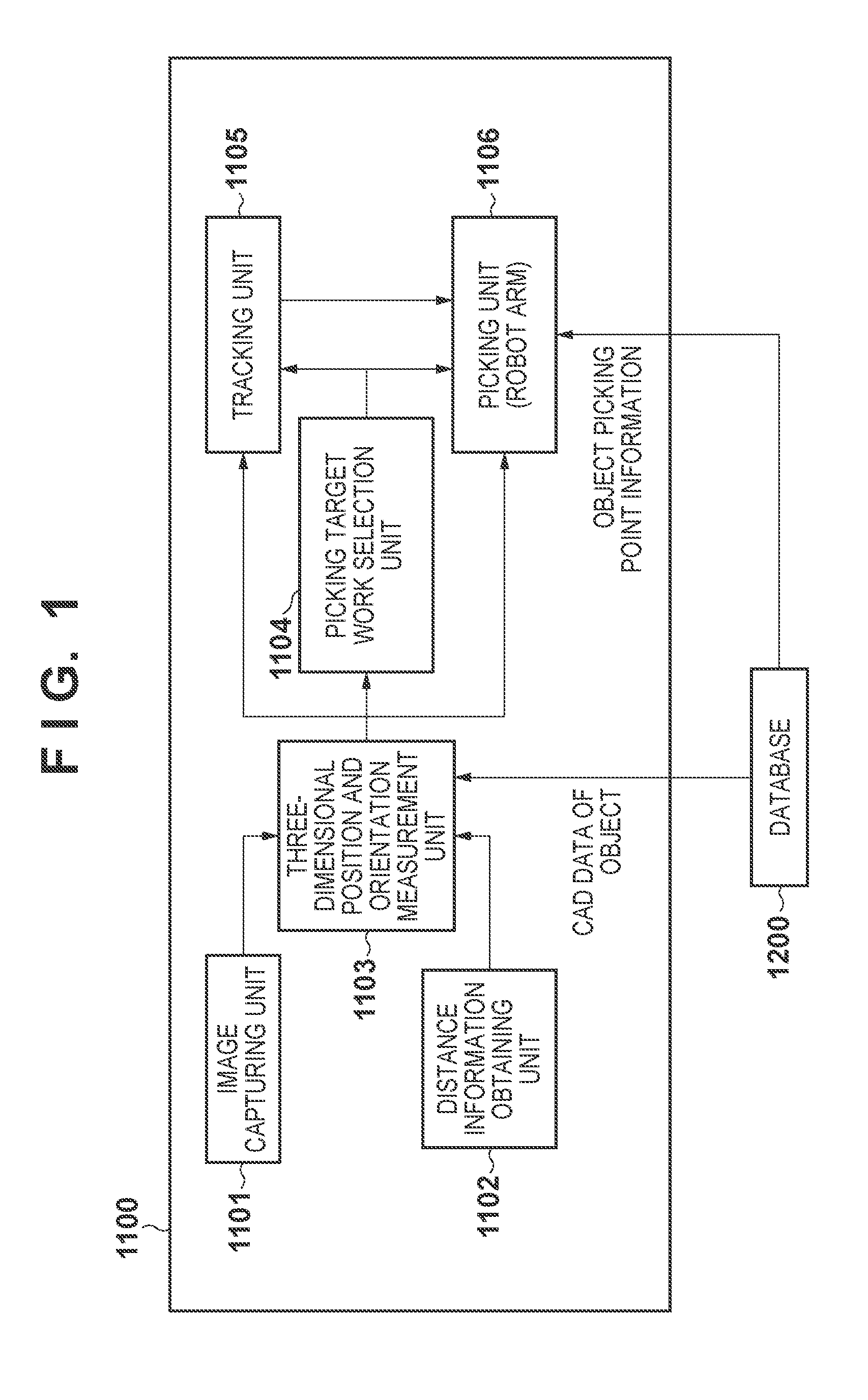

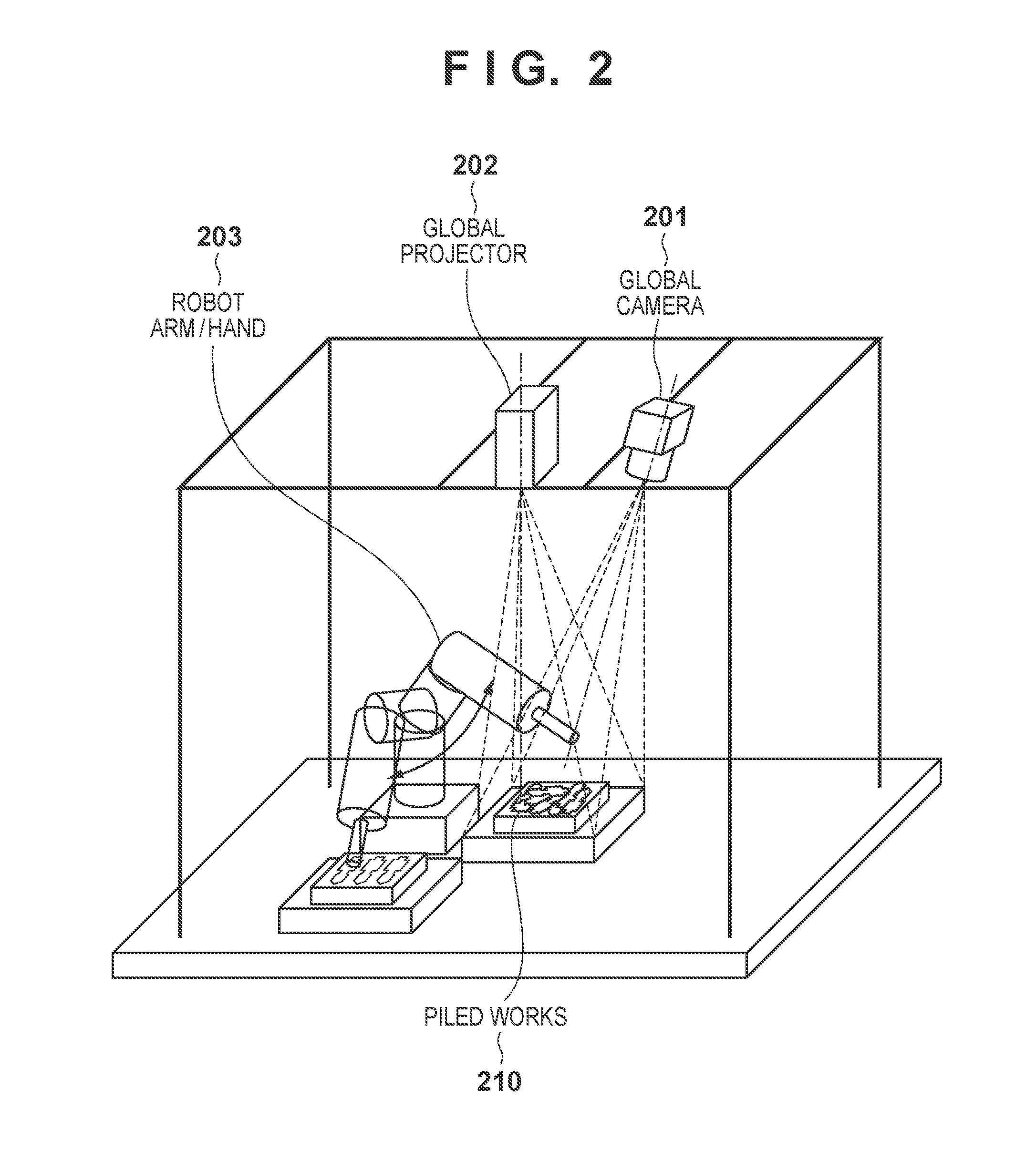

Object picking device

Active | US20100004778A1 | Speedily and accurately picking an object | Programme control | Programme-controlled manipulator | Imaging processing | Object based

An object picking device, which is inexpensive and capable of speedily and accurately picking one object at a time from a random pile state. A target detecting part of an image processing part processes an image captured by a camera and detects objects. A target selecting part selects an object among the detected objects based on a certain rule. A view line direction calculating part calculates the direction of a view line extending to the selected object. A target position estimating part estimates the position including the height of the selected object based on size information of the object in the image. Then, a grip correction calculating part calculates an amount of correction of the movement of a robot so as to grip the object by using the robot.
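The abstract does not give formulas for the view line or the size-based height estimate. As a minimal sketch, assuming a pinhole camera with known intrinsics and a known physical object size, the two steps could look like this. The function names and parameters (`fx`, `fy`, `cx`, `cy`, `real_size`) are hypothetical and do not come from the patent.

```python
import math


def view_ray(u, v, fx, fy, cx, cy):
    """Unit direction (camera frame) of the view line through pixel (u, v)."""
    x = (u - cx) / fx
    y = (v - cy) / fy
    norm = math.sqrt(x * x + y * y + 1.0)
    return (x / norm, y / norm, 1.0 / norm)


def estimate_position(u, v, size_px, real_size, fx, fy, cx, cy):
    """Estimate the 3D position of an object from its apparent size.

    Pinhole assumption (not from the patent): an object of physical size
    real_size that spans size_px pixels lies at depth fx * real_size / size_px.
    """
    if size_px <= 0:
        raise ValueError("size_px must be positive")
    depth = fx * real_size / size_px
    dx, dy, dz = view_ray(u, v, fx, fy, cx, cy)
    scale = depth / dz  # distance along the view line that reaches this depth
    return (dx * scale, dy * scale, dz * scale)
```

A robot controller could compare such an estimate with the taught grip position to obtain a correction amount; that step is omitted here because the abstract does not describe it.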

Owner:FANUC LTD

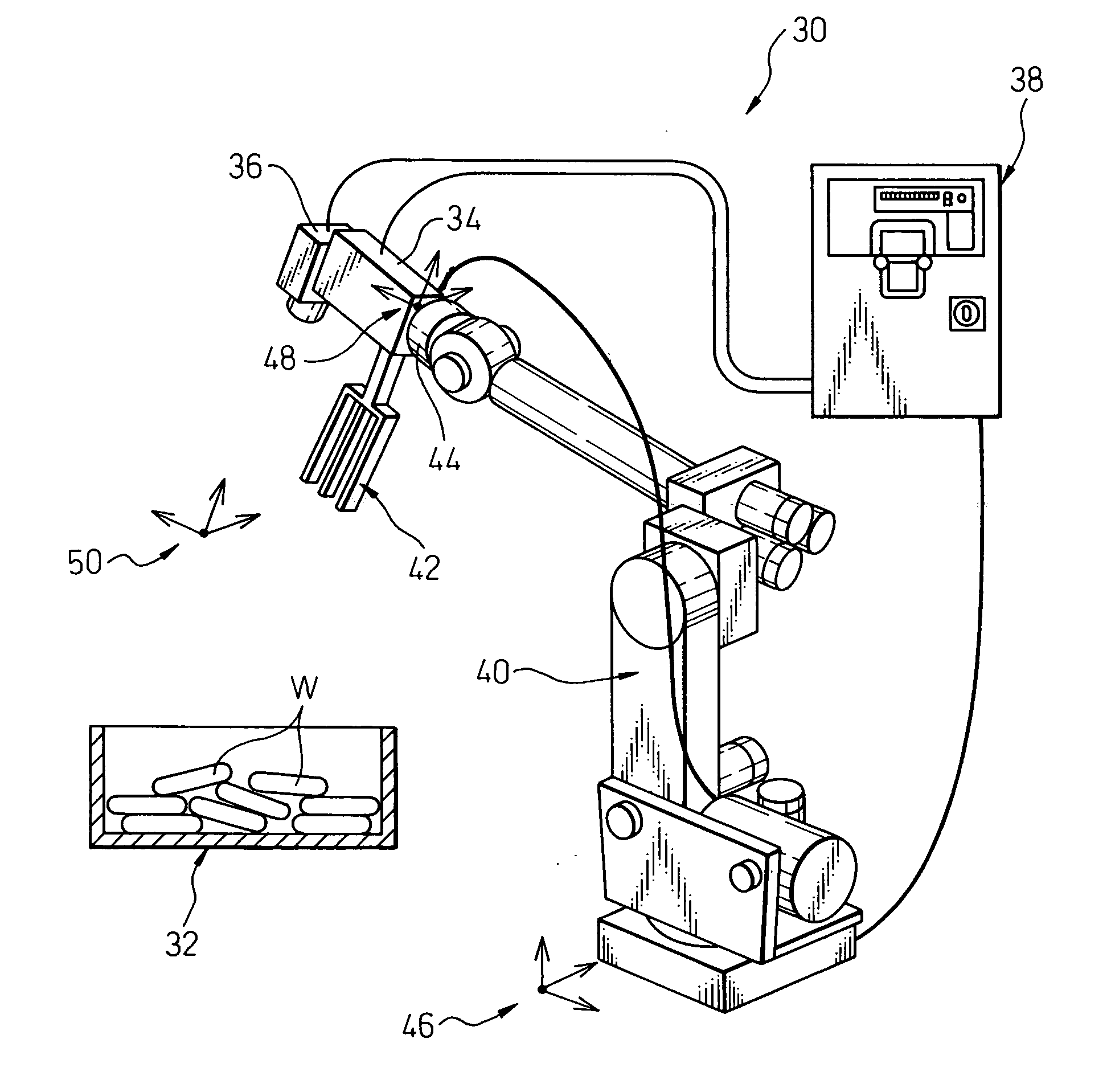

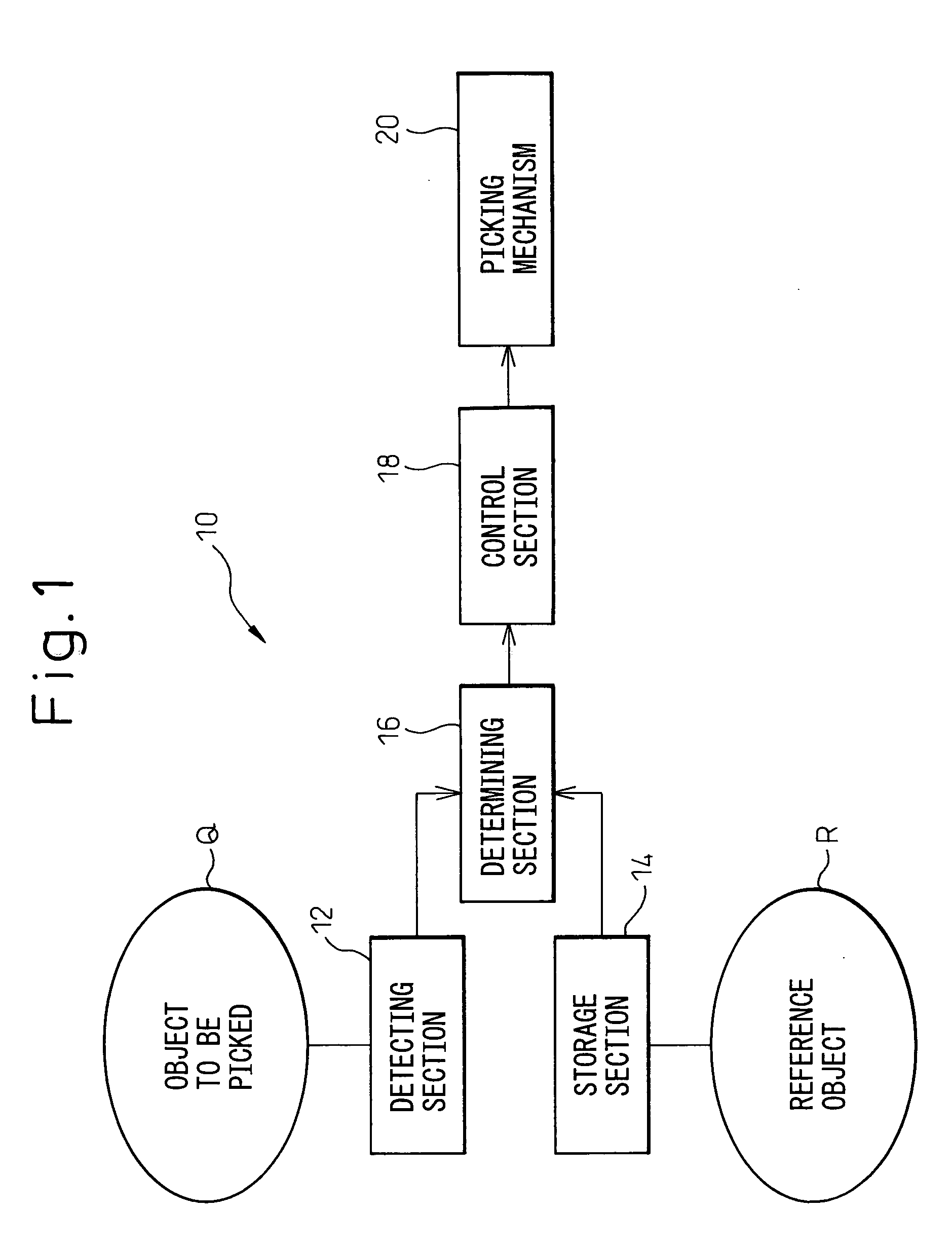

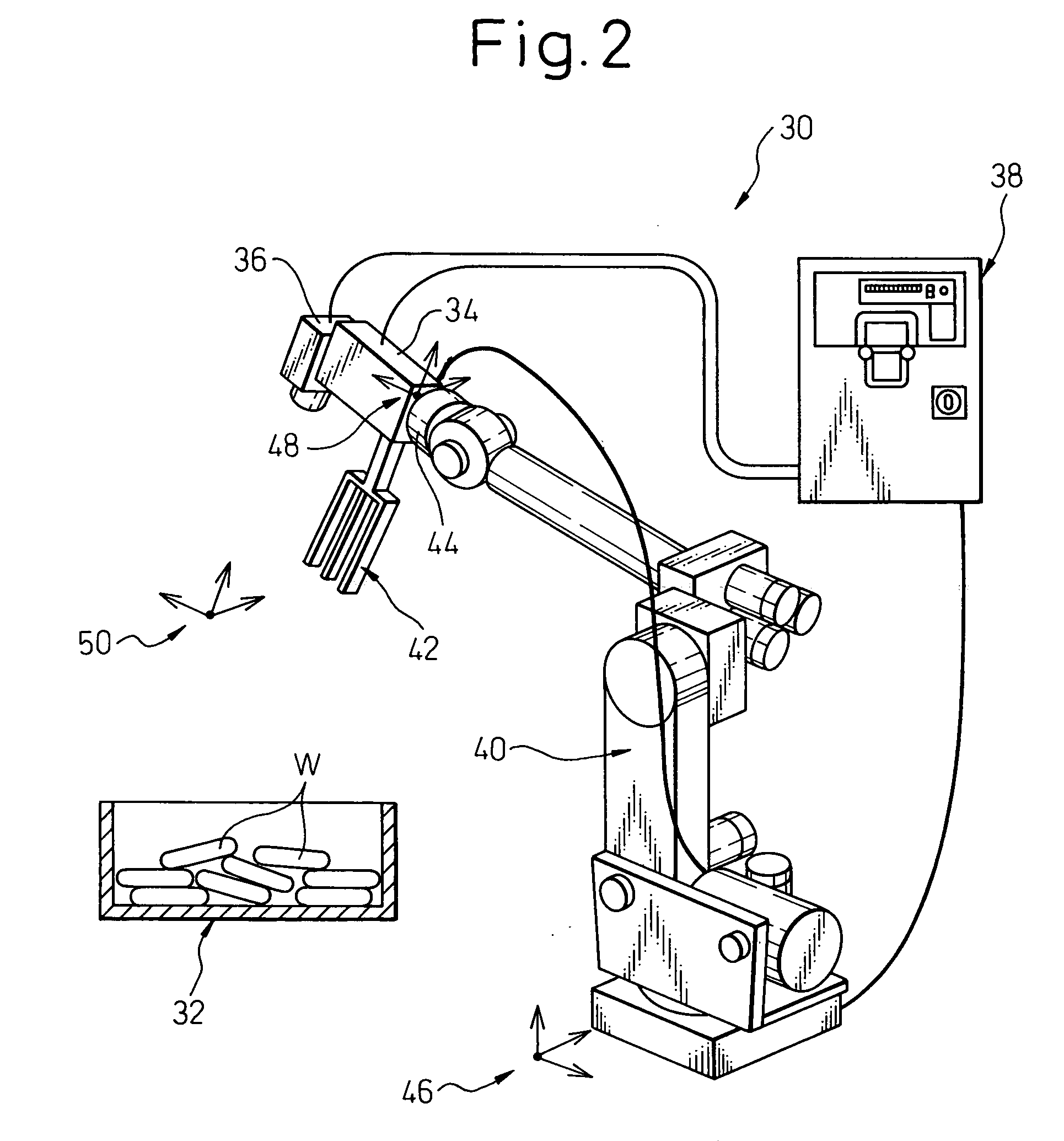

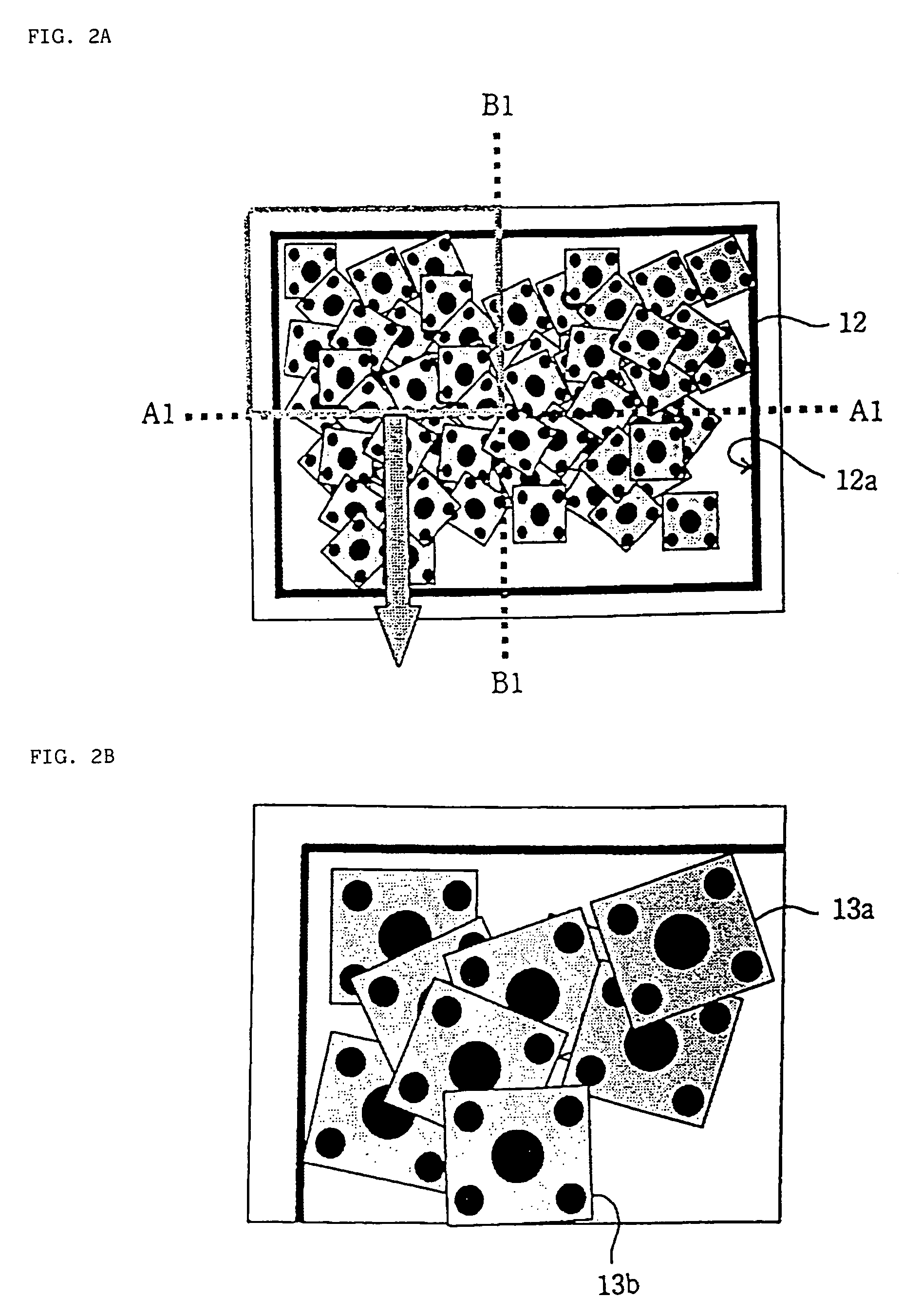

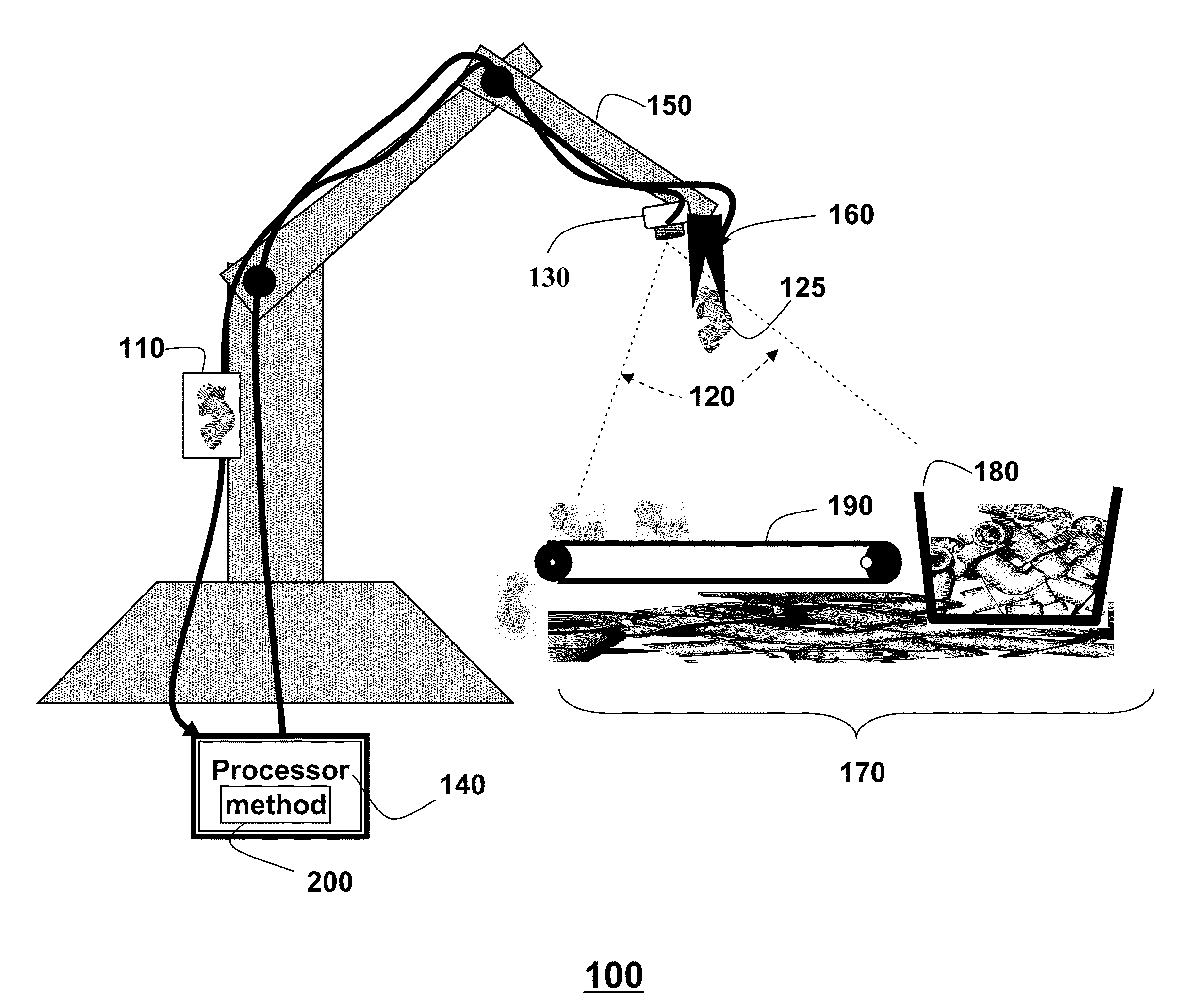

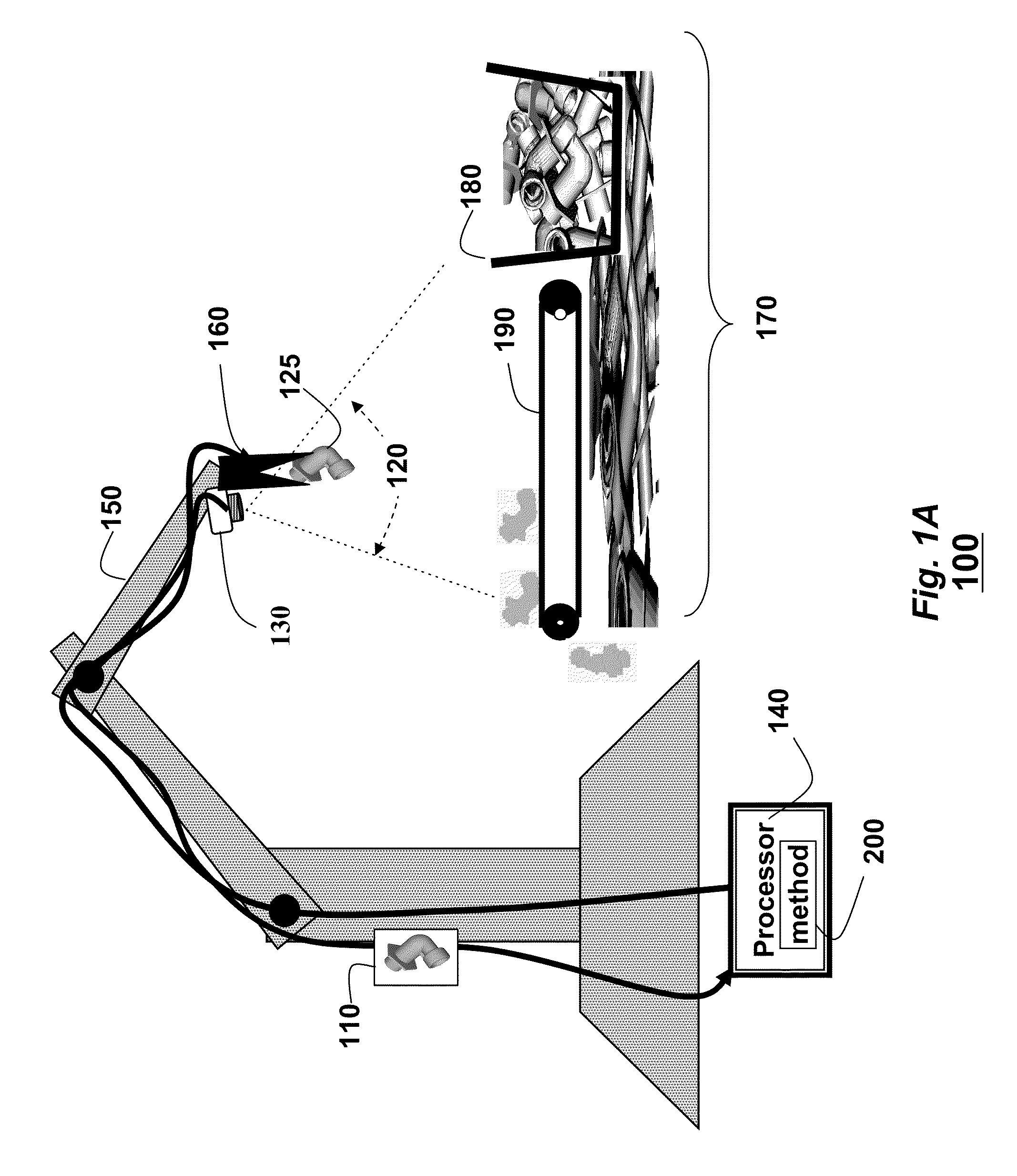

Object picking system

Inactive | US20060104788A1 | Increase productivity | Operational security | Image enhancement | Programme-controlled manipulator | Control signal | Object store

An object picking system for picking up, one by one, a plurality of objects. The system includes a detecting section detecting, as an image, an object to be picked, among a plurality of objects placed in a manner as to be at least partially superimposed on each other; a storage section storing appearance information of a predetermined portion of a reference object having an outward appearance identical to an outward appearance of the object to be picked; a determining section determining, in the image of the object to be picked detected by the detecting section, whether an inspected portion of the object to be picked, corresponding to the predetermined portion of the reference object, is concealed by another object, based on the appearance information of the reference object stored in the storage section; a control section deciding a picking motion for the object to be picked and outputting a control signal of the picking motion, based on a determination result of the determining section; and a picking mechanism performing the picking motion on the object to be picked in accordance with the control signal output from the control section.

Owner:FANUC LTD

Robot audiovisual system

A robot visuoauditory system is disclosed that processes data in real time to track an object by both vision and audition, integrates visual and auditory information on the object so that tracking continues without fail, and visualizes the real-time processing. In the system, the audition module (20), in response to sound signals from microphones, extracts pitches from them, separates the sound sources from each other, and locates the sound sources so as to identify a sound source as at least one speaker, thereby extracting an auditory event (28) for each speaker. The vision module (30), on the basis of an image taken by a camera, identifies each such speaker by face and locates the speaker, thereby extracting a visual event (39). The motor control module (40), which turns the robot horizontally, extracts a motor event (49) from the rotary position of the motor. The association module (60), which controls these modules, forms an auditory stream (65) and a visual stream (66) from the auditory, visual and motor events, and then associates these streams with each other to form an association stream (67). The attention control module (64) performs attention control to plan the course along which to drive the motor, e.g., upon locating the sound source for the auditory event and locating the face for the visual event, thereby determining the direction in which each speaker lies. The system also includes a display (27, 37, 48, 68) for displaying at least a portion of the auditory, visual and motor information. The attention control module (64) servo-controls the robot on the basis of the association stream or streams.

Owner:JAPAN SCI & TECH CORP

Object picking device

Active | US8295975B2 | Speedily and accurately picking an object | Programme control | Programme-controlled manipulator | Imaging processing | Computer graphics (images)

An object picking device, which is inexpensive and capable of speedily and accurately picking one object at a time from a random pile state. A target detecting part of an image processing part processes an image captured by a camera and detects objects. A target selecting part selects an object among the detected objects based on a certain rule. A view line direction calculating part calculates the direction of a view line extending to the selected object. A target position estimating part estimates the position including the height of the selected object based on size information of the object in the image. Then, a grip correction calculating part calculates an amount of correction of the movement of a robot so as to grip the object by using the robot.

Owner:FANUC LTD

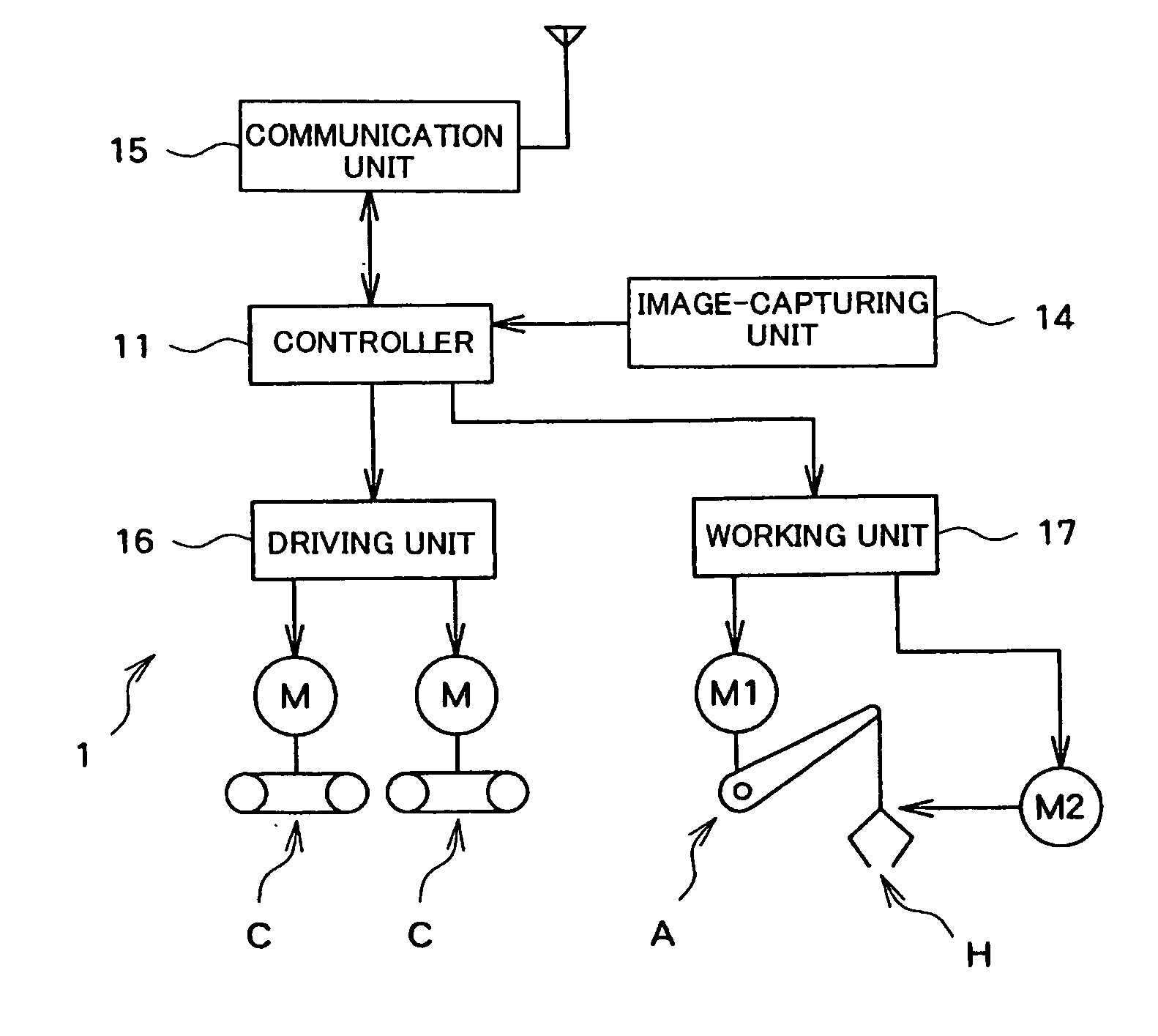

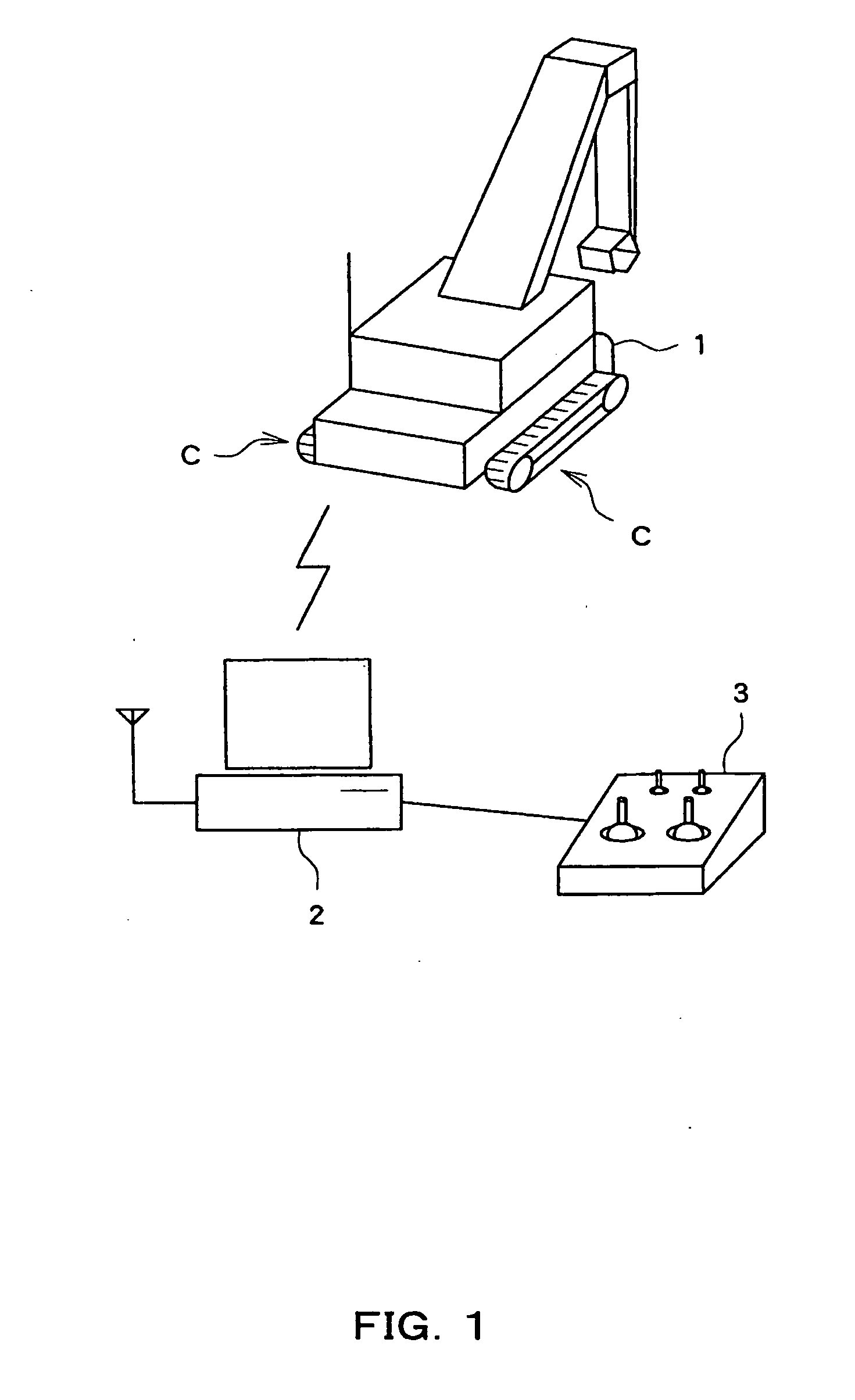

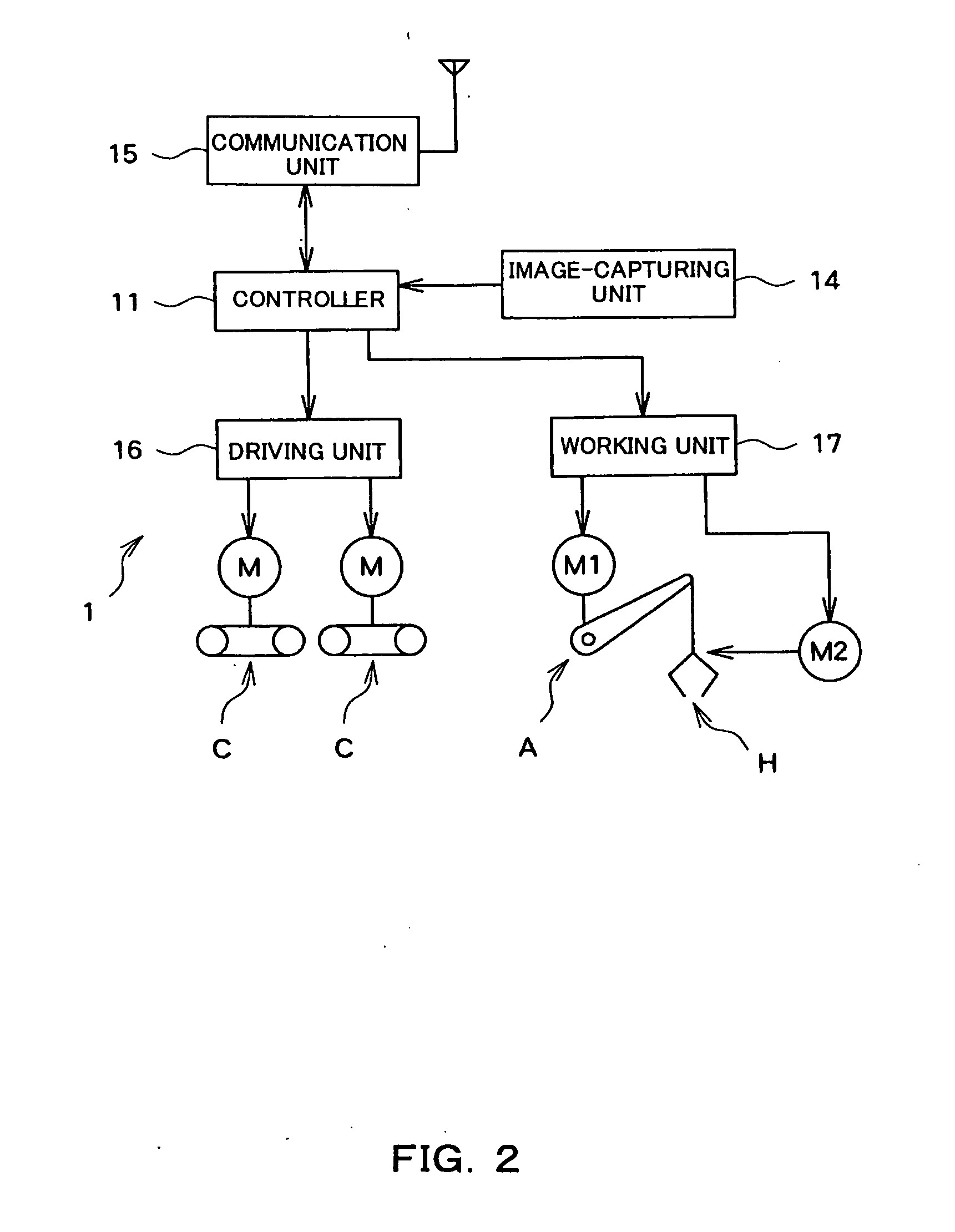

Work Robot

Active | US20090234501A1 | Easy to operate | Improve convenience | Programme control | Programme-controlled manipulator | Work pattern | Computer graphics (images)

A work robot that performs work by operating an object includes a robot body that captures an image including the object. In teach mode, the captured image is stored in association with the operation content taught by an operator. In work mode, a new image is captured, the stored images are searched for one similar to it, and the object is operated according to the operation content associated with the past image found by the search.
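The teach and work modes amount to storing image-operation pairs and later retrieving the operation of the most similar stored image. The sketch below illustrates that idea only. The similarity measure (sum of squared pixel differences over flat grayscale lists) and the names `TaughtMemory`, `teach` and `recall` are assumptions for illustration, not the patent's method.

```python
from dataclasses import dataclass, field


def image_distance(a, b):
    """Sum of squared differences between two equal-length grayscale images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum((p - q) ** 2 for p, q in zip(a, b))


@dataclass
class TaughtMemory:
    """Stores (image, operation) pairs recorded in teach mode."""
    entries: list = field(default_factory=list)

    def teach(self, image, operation):
        # Teach mode: keep the captured image together with the taught operation.
        self.entries.append((tuple(image), operation))

    def recall(self, image):
        # Work mode: return the operation of the most similar stored image.
        if not self.entries:
            raise LookupError("nothing has been taught yet")
        best = min(self.entries, key=lambda e: image_distance(e[0], image))
        return best[1]
```

A linear scan is enough for a handful of taught images; a larger memory would probably need an index or a compact image descriptor.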

Owner:ISHIZAKI TAKEHIRO

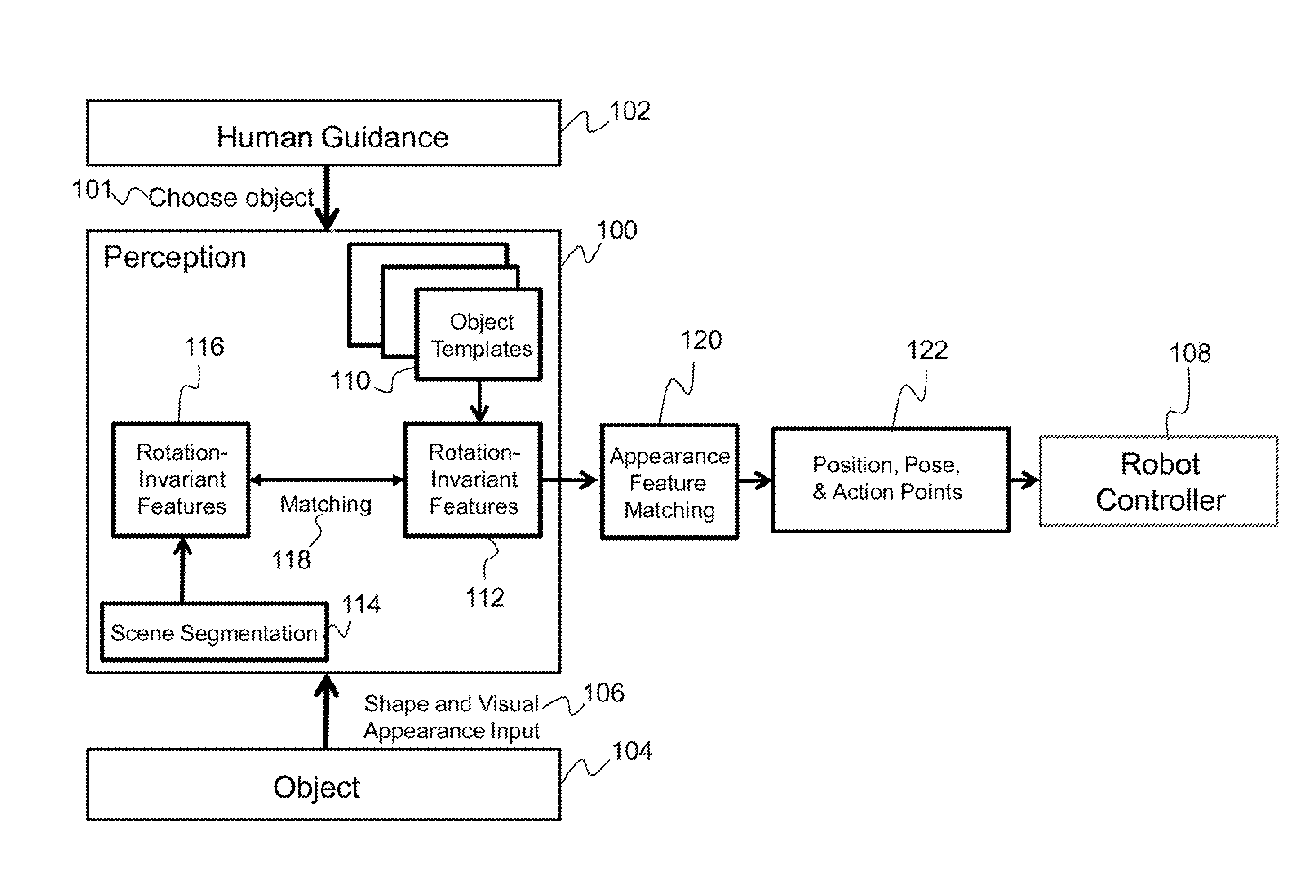

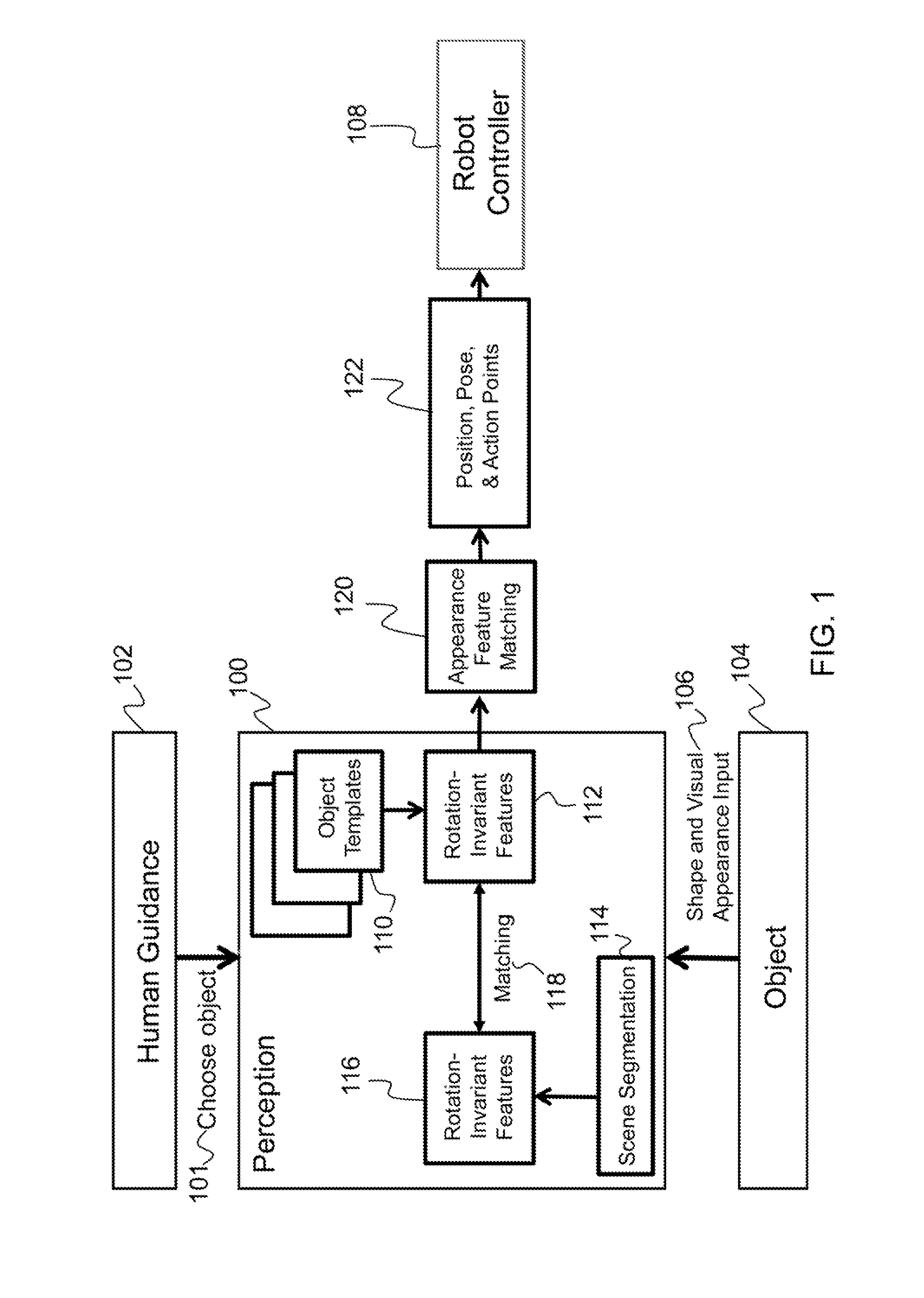

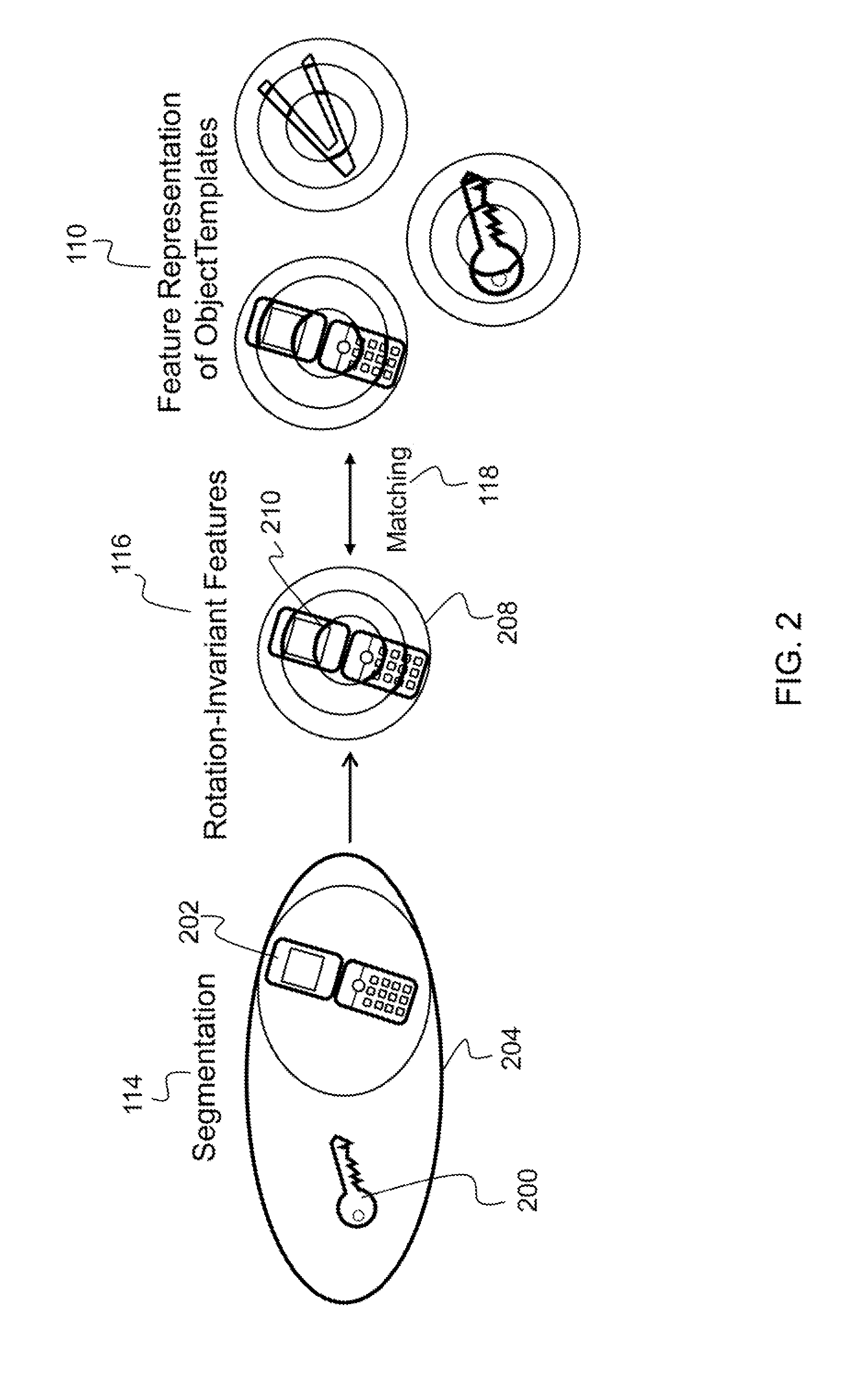

Robotic visual perception system

Described is a robotic visual perception system for determining a position and pose of a three-dimensional object. The system receives an external input to select an object of interest. The system also receives visual input from a sensor of a robotic controller that senses the object of interest. Rotation-invariant shape features and appearance are extracted from the sensed object of interest and a set of object templates. A match is identified between the sensed object of interest and an object template using shape features. The match between the sensed object of interest and the object template is confirmed using appearance features. The sensed object is then identified, and a three-dimensional pose of the sensed object of interest is determined. Based on the determined three-dimensional pose of the sensed object, the robotic controller is used to grasp and manipulate the sensed object of interest.

Owner:HRL LAB

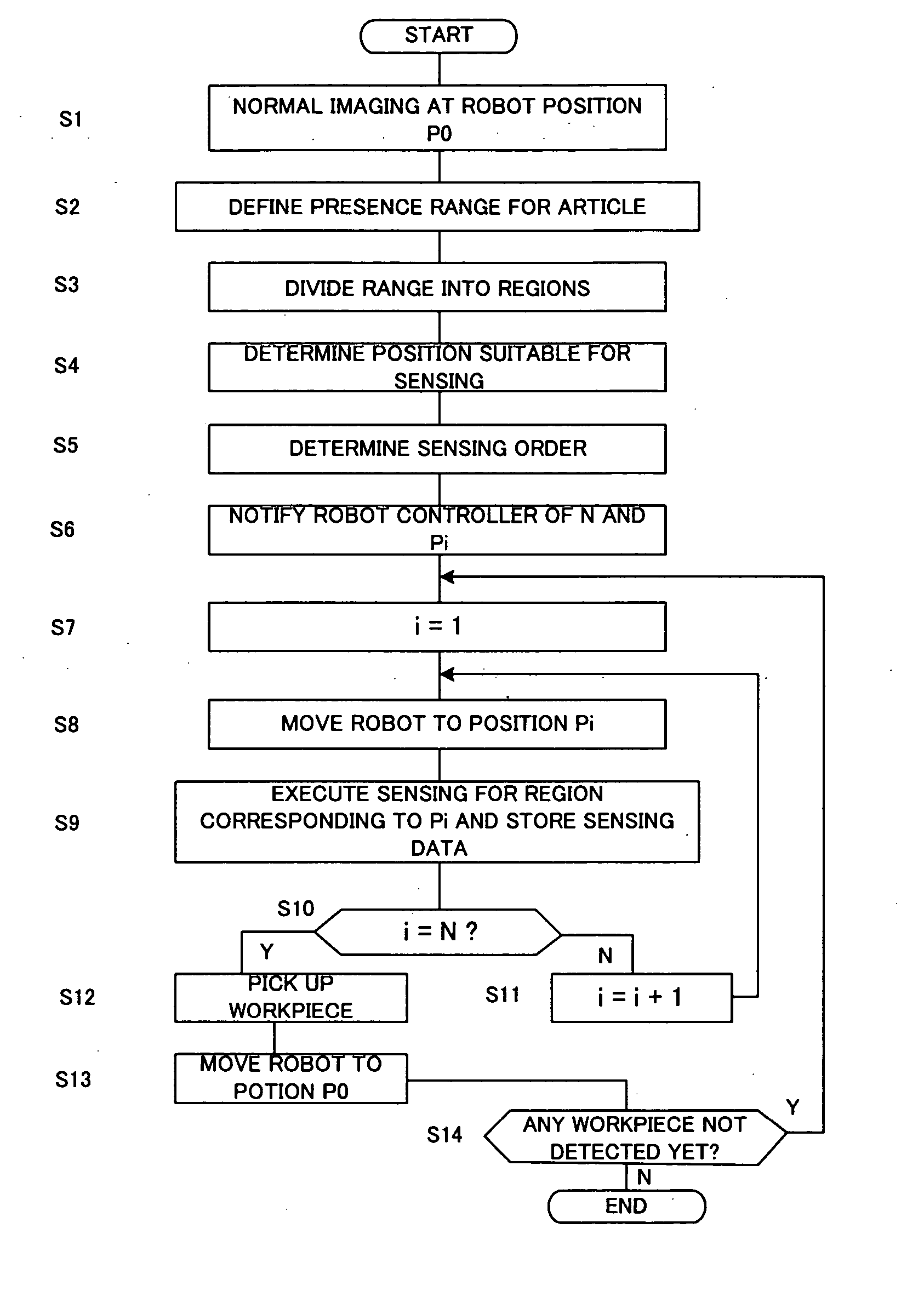

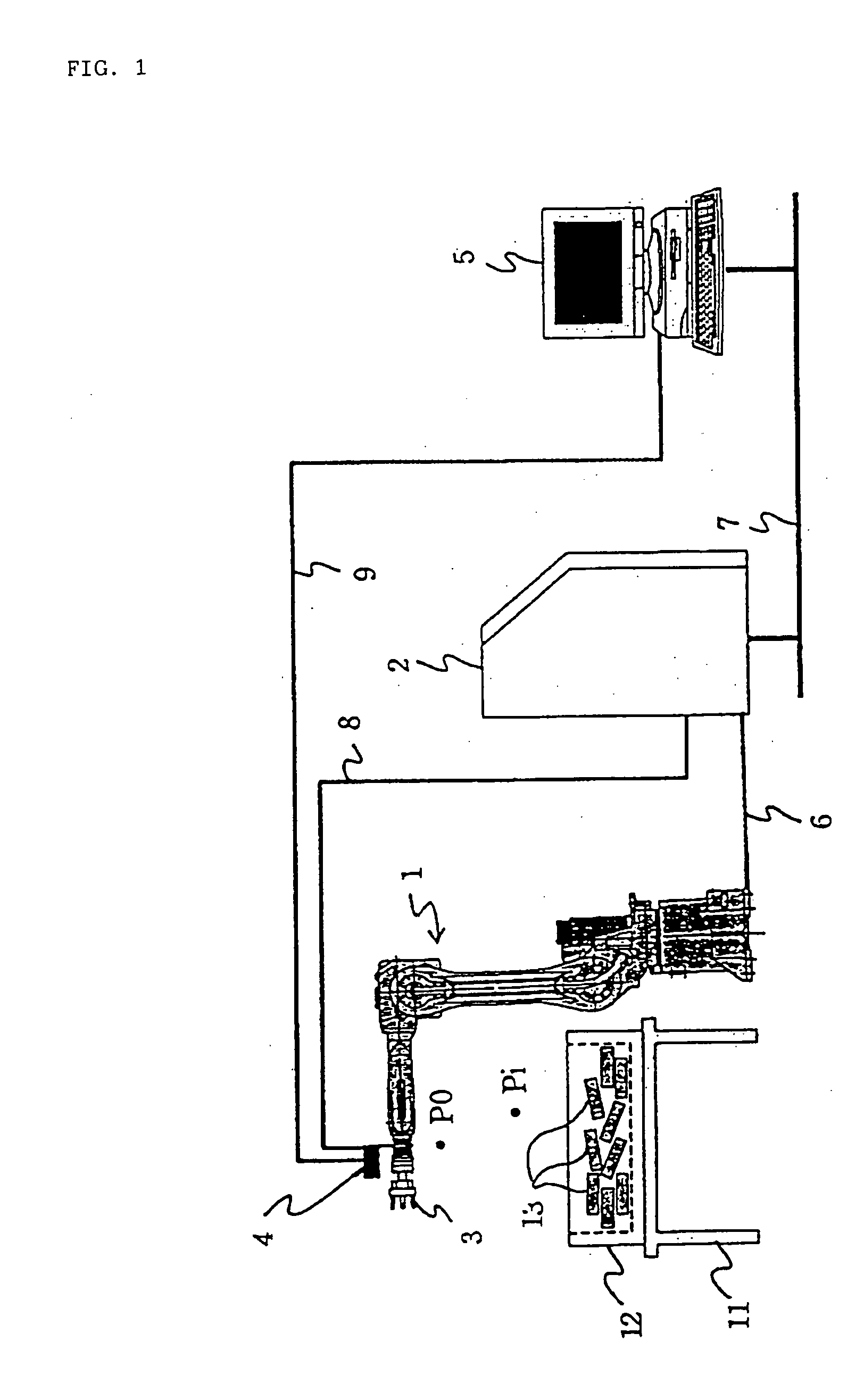

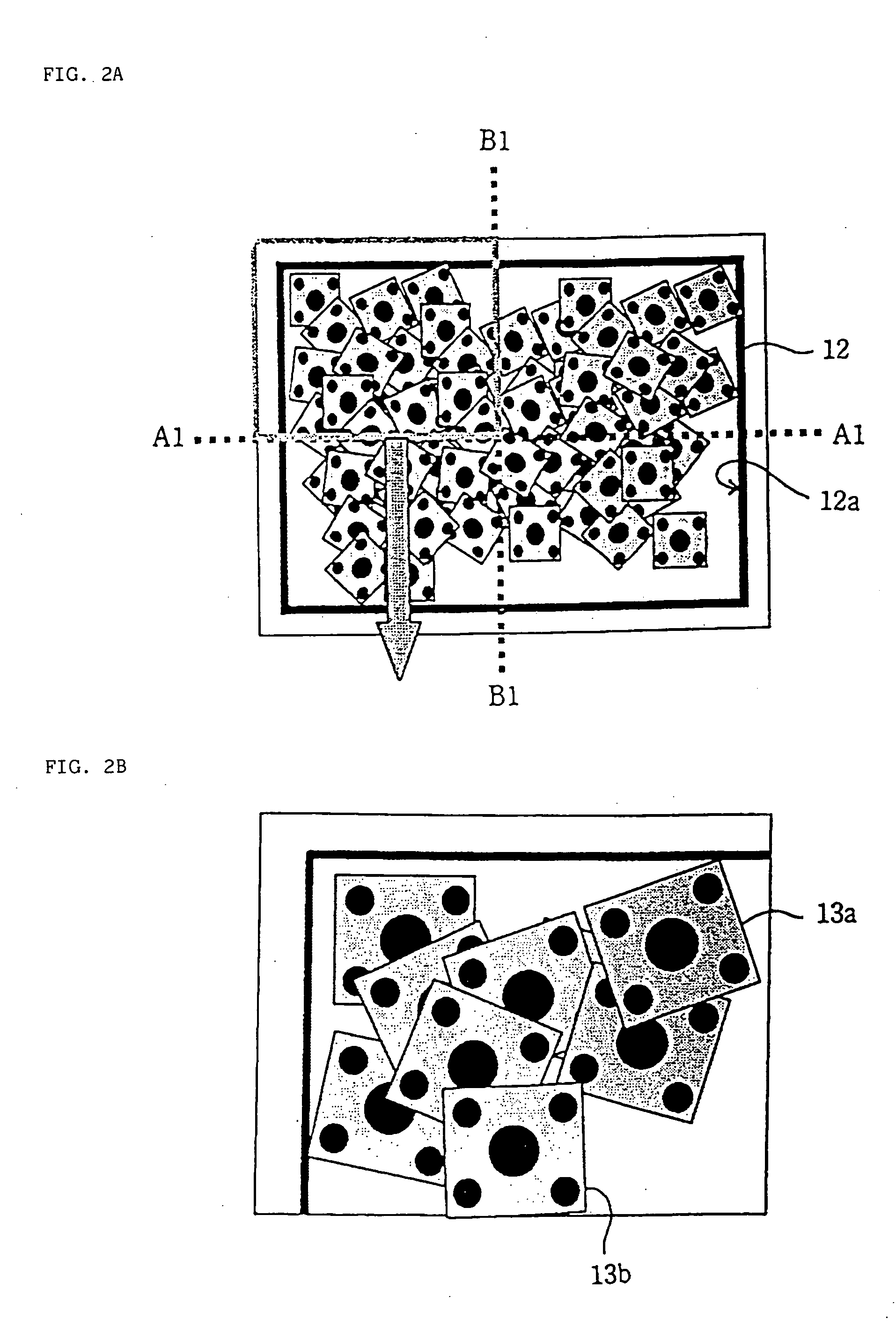

Article pickup device

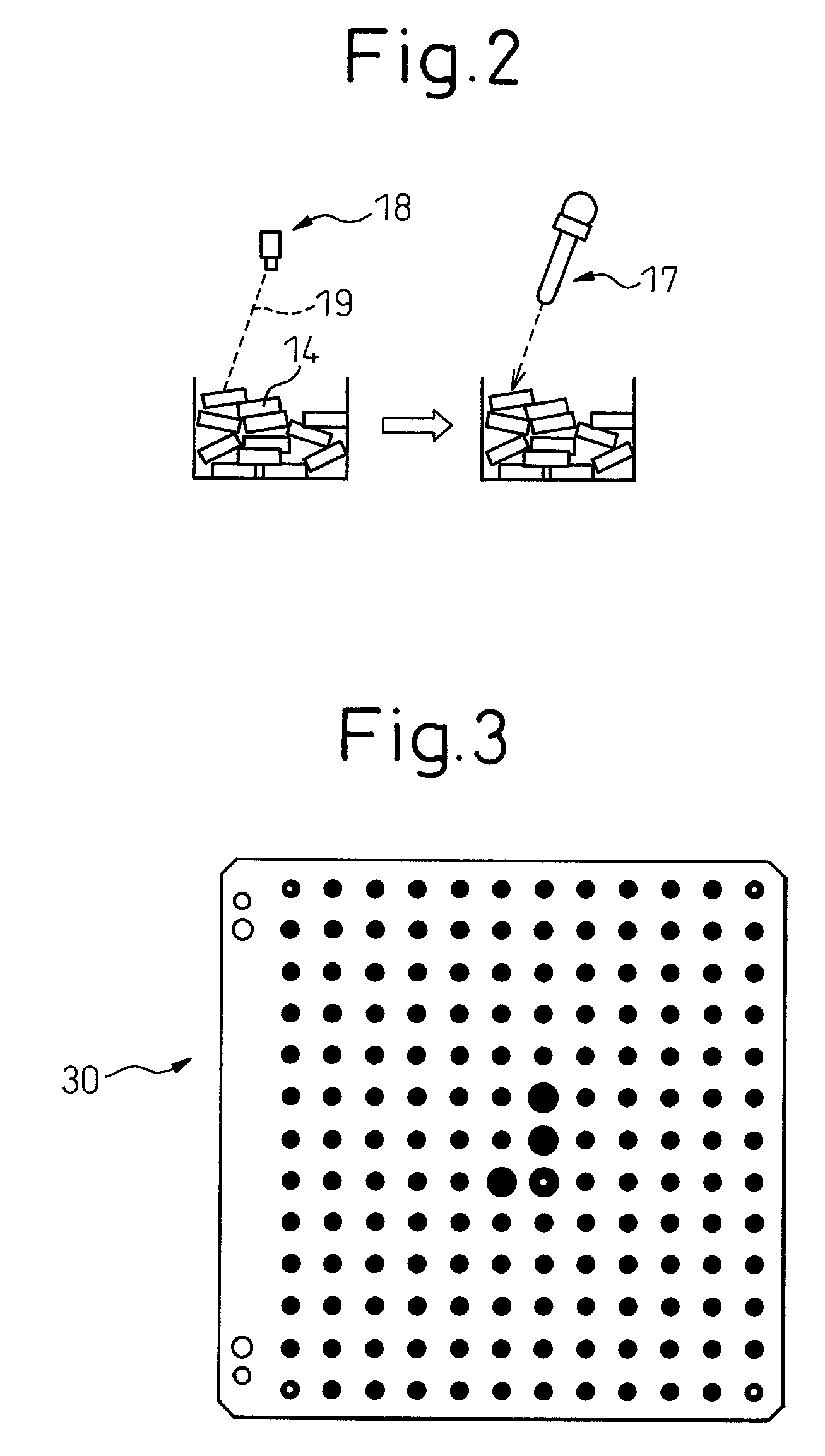

Inactive | US7123992B2 | Accurate detection | Improve reliability of article pickup | Programme control | Programme-controlled manipulator | Robot position | Engineering

The presence range for workpieces (the internal edge of a container's opening) is defined with a visual sensor attached to a robot and the range is divided into a specified number of sectional regions. A robot position suitable for sensing each of the sectional regions is determined, and sensing is performed at the position so that workpieces in the container are picked up.

Owner:FANUC LTD

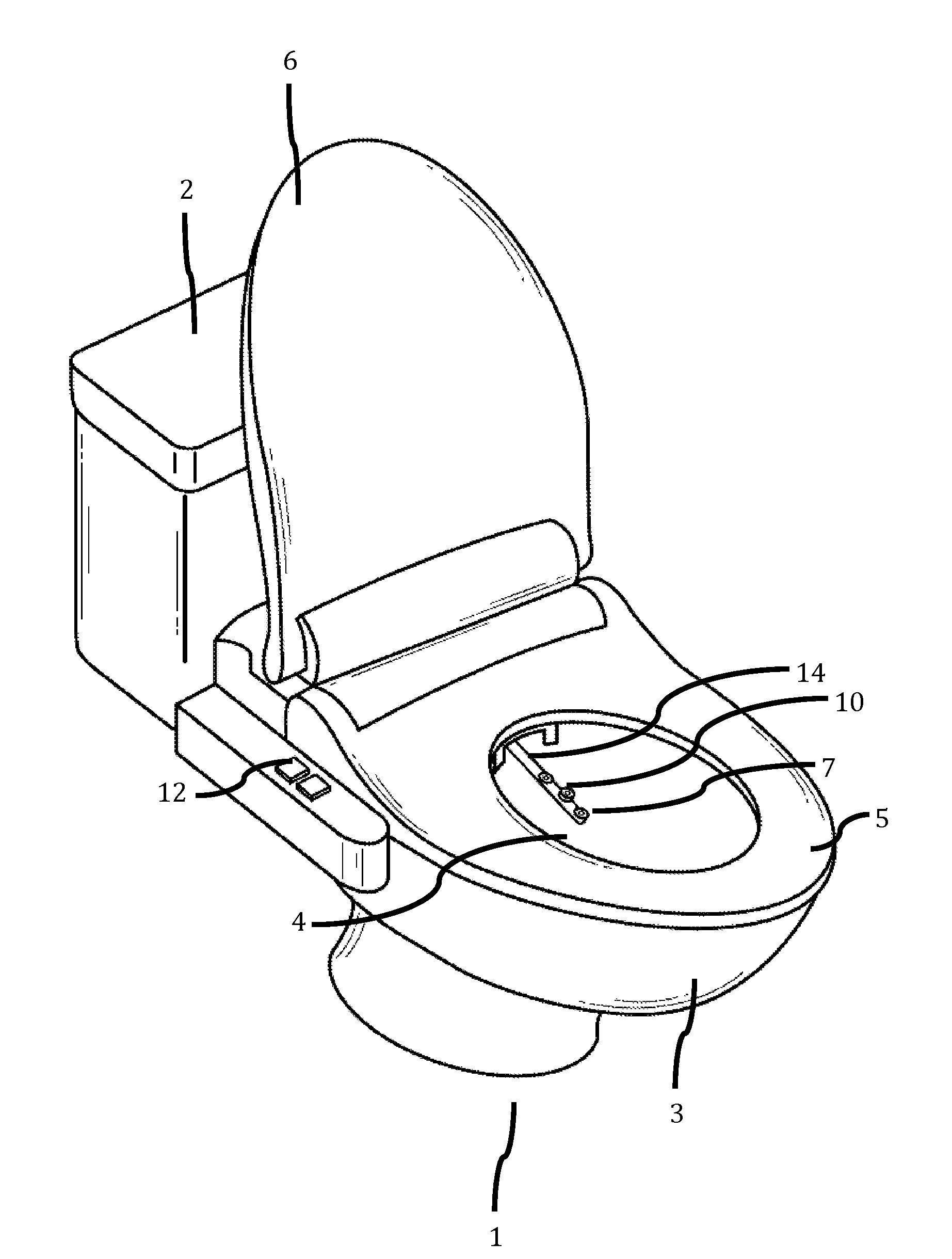

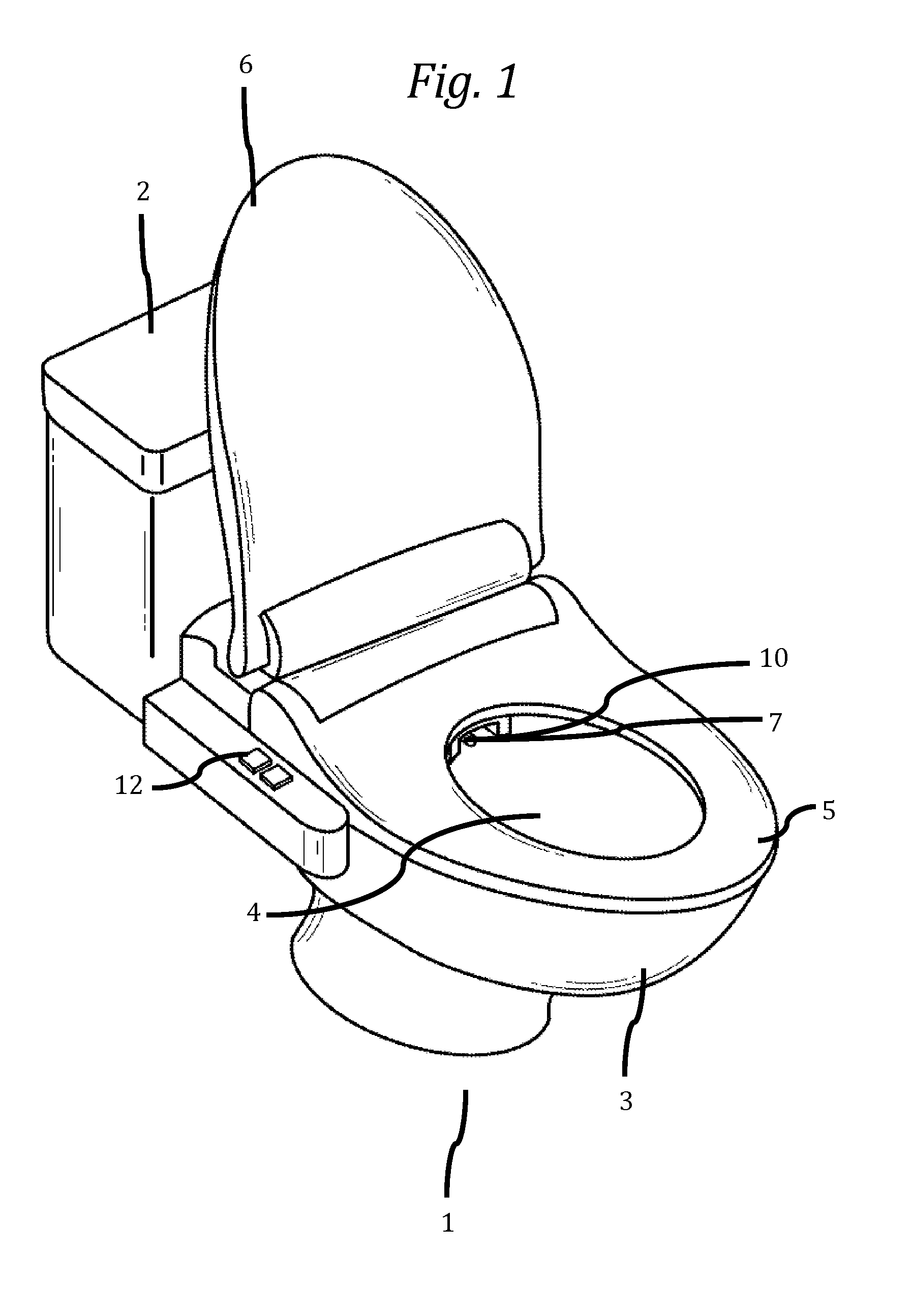

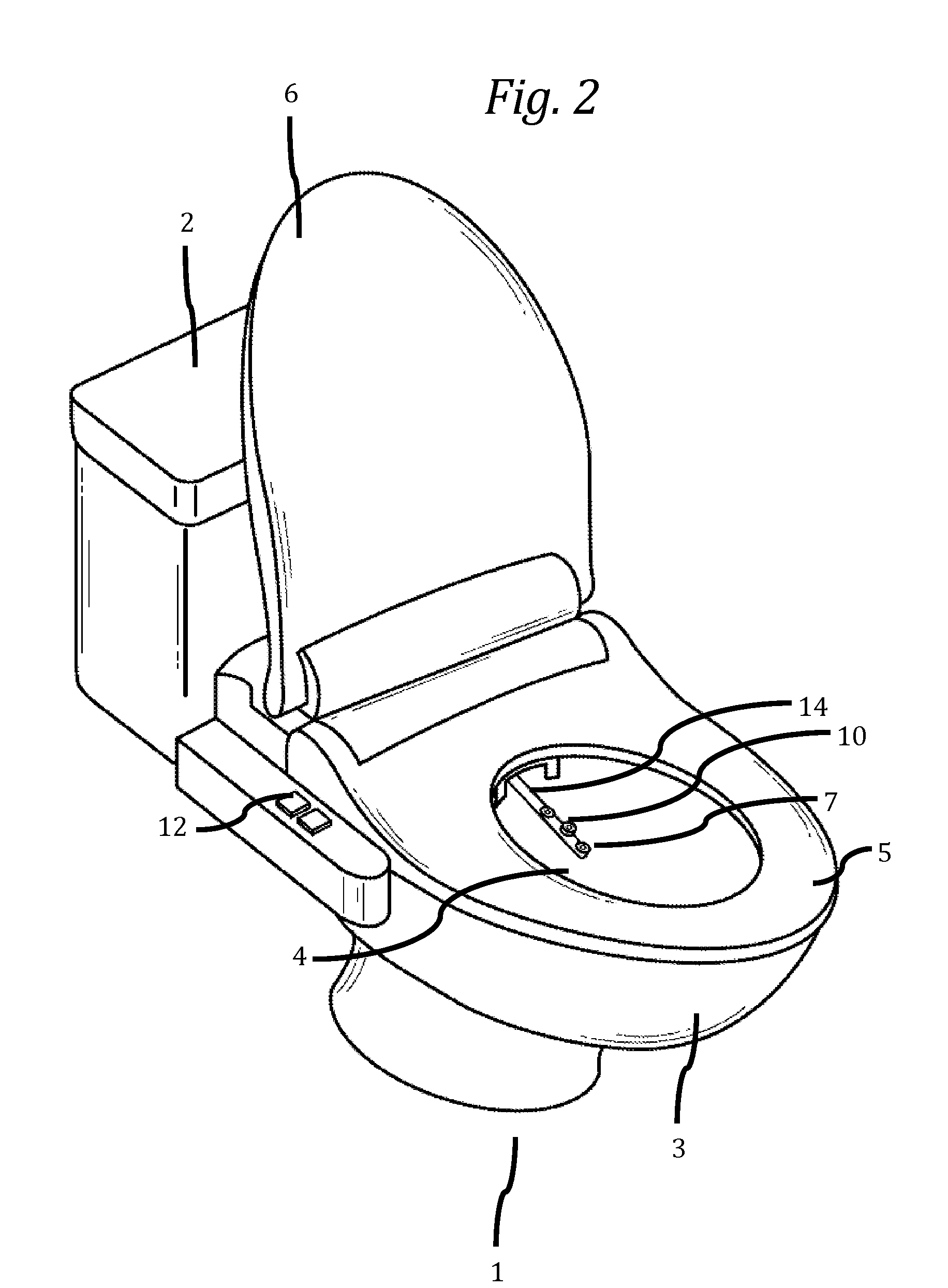

Electronic automatically adjusting bidet with visual object recognition software

An electronic bidet system that uses one or more internal cameras to capture one or more images or video of a user as he or she sits on the bidet prior to use. The images or video are analyzed using object recognition technology to identify and segment / bound the types, sizes, shapes, and positions of the lower body orifices, and conditions (e.g. hemorrhoids), in the user's genital and rectal areas. Based on these analyzed images or video, the system automatically adjusts the bidet settings for the specific conditions (e.g. hemorrhoids) and types of orifices, locations of orifices, sizes of orifices, shapes of orifices, gender, body type, and weight of the user.

Owner:SMART HYGIENE INC

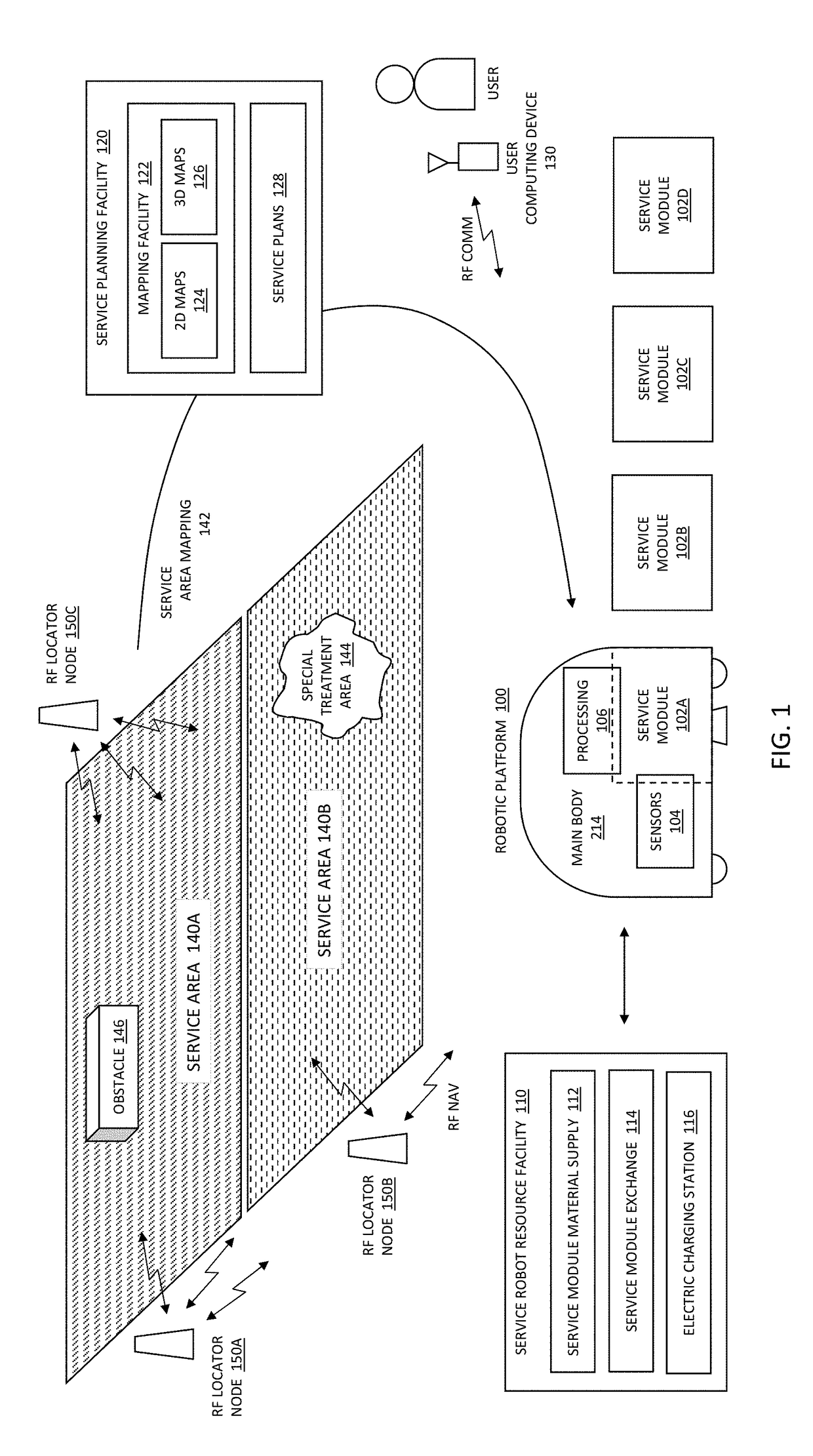

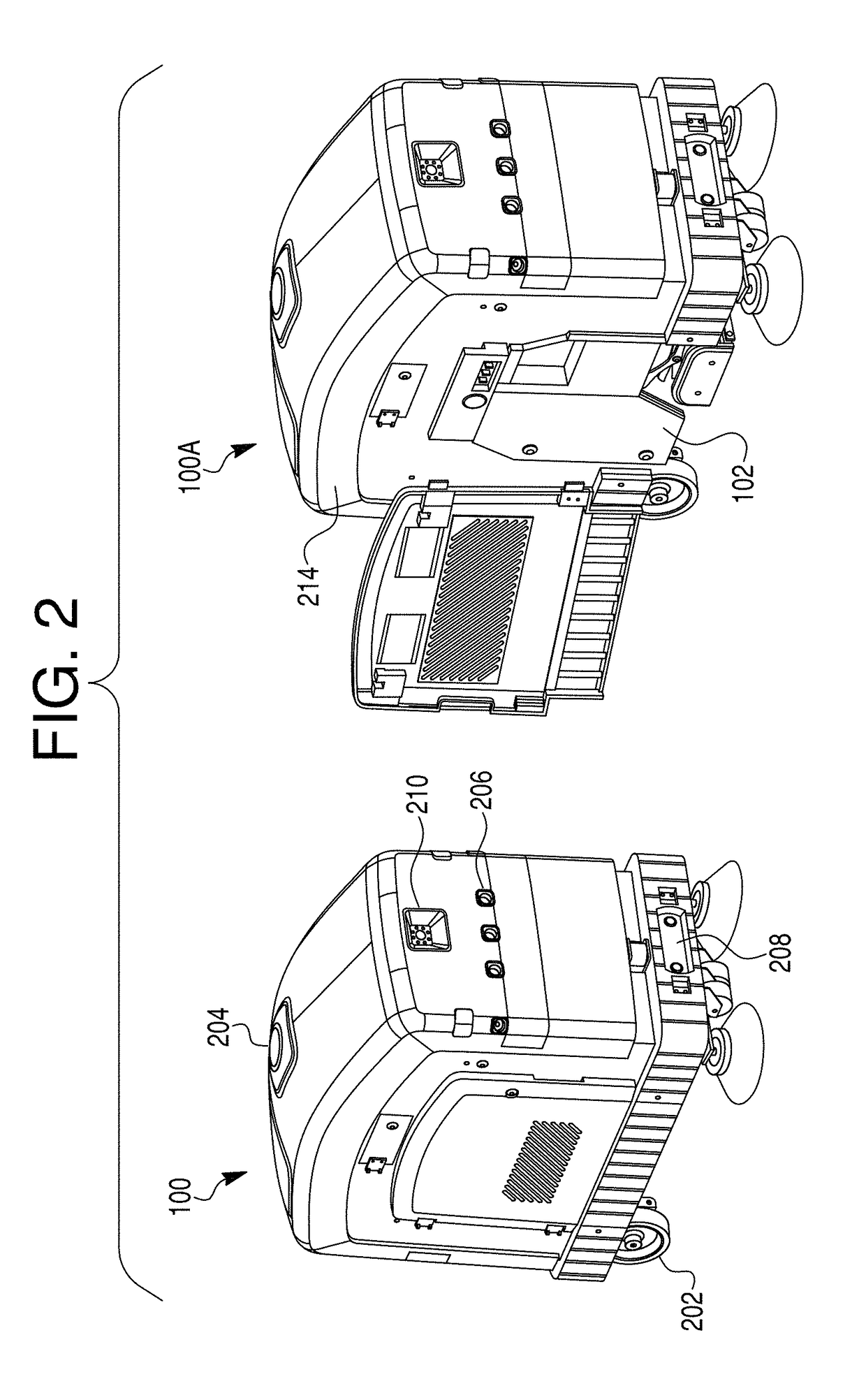

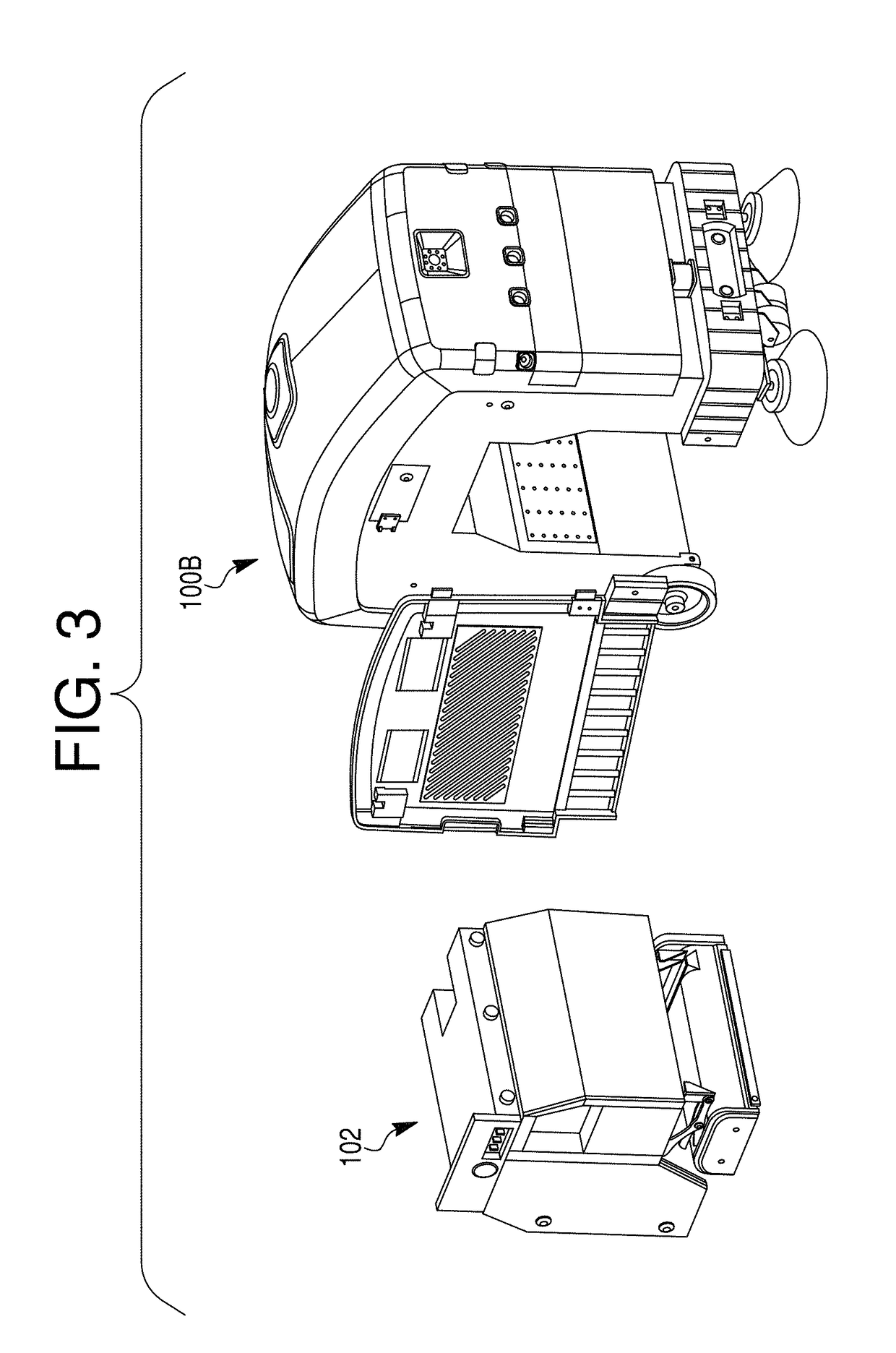

Robotic platform with mapping facility

Inactive | US20180364045A1 | Programme-controlled manipulator | Navigation instruments | Computer hardware | Robotic arm

A method and system for a robotic device comprising a propulsion mechanism, a sensor for sensing objects, a localization and mapping system, a processing facility comprising a processor and a memory, the processing facility configured to store a set of instructions that, when executed, cause the robotic device to upon selection by a user, place the robotic device in a mapping mode, wherein the mapping mode causes the robotic device to move through the service area and create a digital map, and upon selection by the user, place the robotic device in a service task mode, wherein while in service task mode the robotic device performs a service task in the service area based on sensing the service area with the sensor and utilizing the created digital map.

Owner:NEXUS ROBOTICS LLC

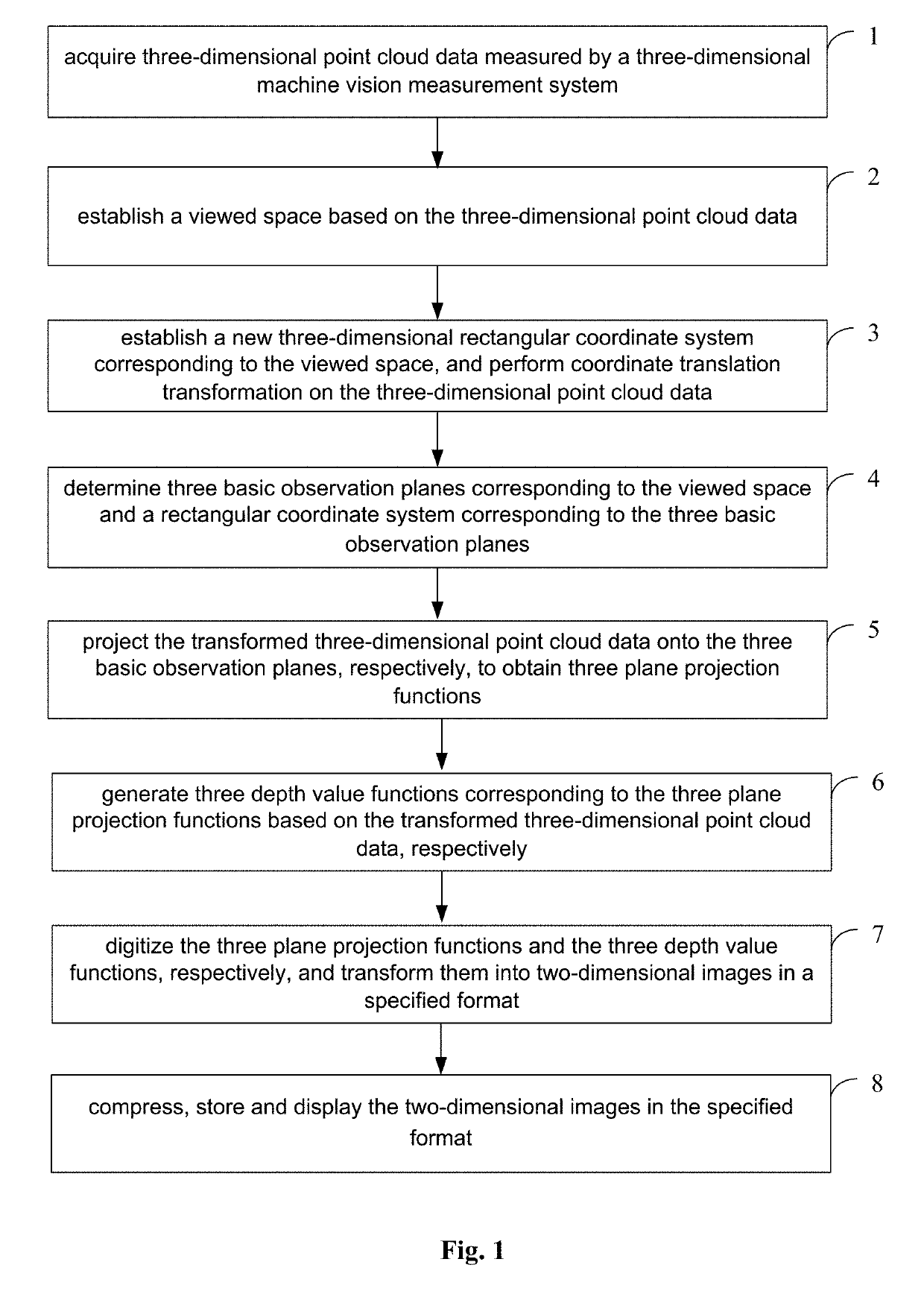

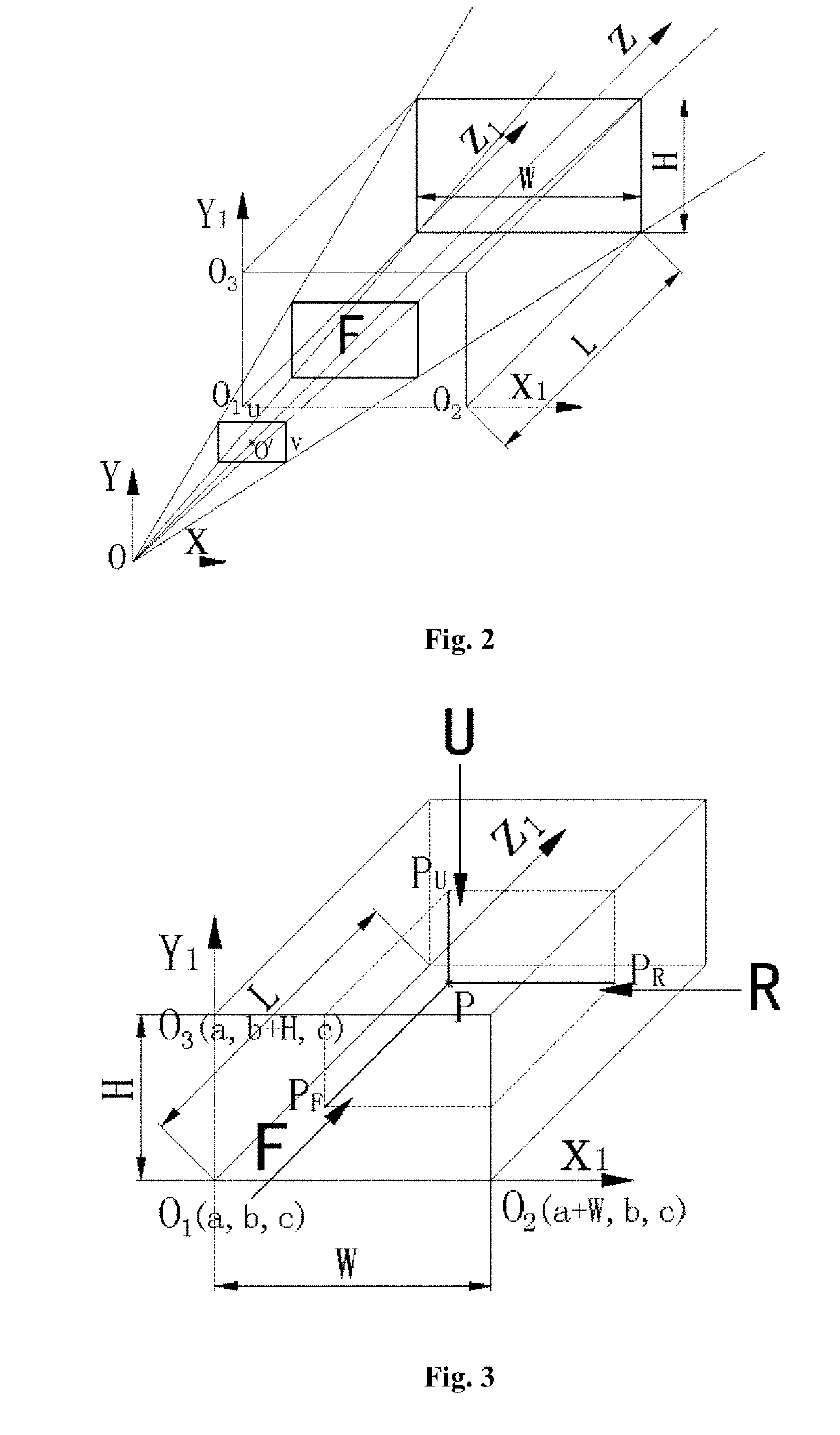

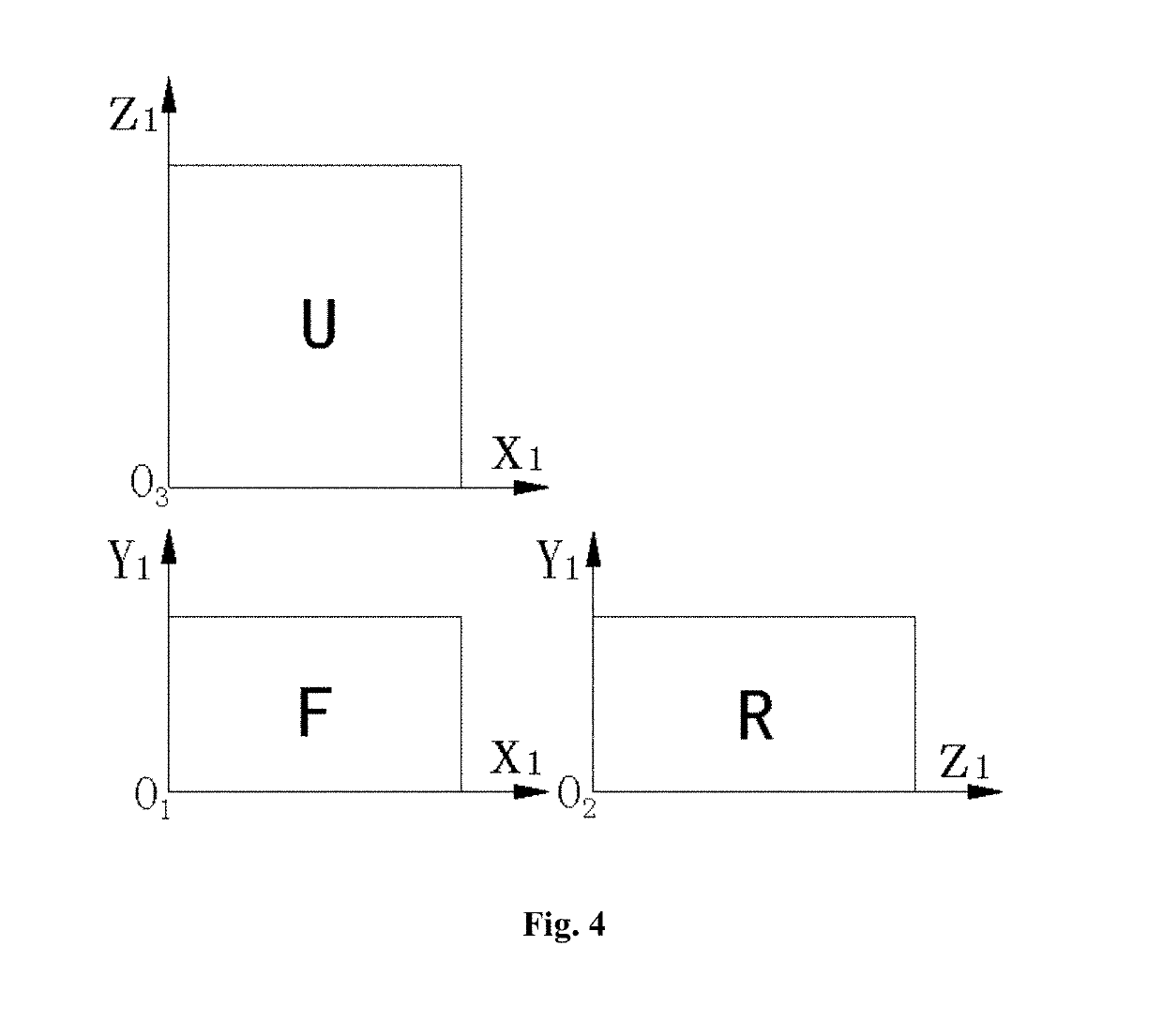

Method and apparatus for processing three-dimensional vision measurement data

Active | US20190195616A1 | Easy to operate | Accurate features | Details involving processing steps | Image enhancement | Point cloud | Machine vision

A method and an apparatus for processing three-dimensional vision measurement data are provided. The method includes: obtaining three-dimensional point cloud data measured by a three-dimensional machine vision measurement system and establishing a visualized space based thereon; establishing a new three-dimensional rectangular coordinate system corresponding to the visualized space, and performing coordinate translation conversion on the three-dimensional point cloud data; determining three basic observation planes corresponding to the visualized space and a rectangular coordinate system corresponding to the basic observation planes; respectively projecting the three-dimensional point cloud data on the basic observation planes to obtain three plane projection functions; respectively generating, according to the three-dimensional point cloud data, three depth value functions corresponding to the plane projection functions; digitally processing three plane projection graphs and three depth graphs respectively, and converting same to two-dimensional images of a specified format; and compressing, storing and displaying the two-dimensional images of the specified format.
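The translation and projection steps can be illustrated with a small sketch. It assumes the new origin is the minimum corner of the point cloud's bounding box, the three observation planes are the coordinate planes, and each grid cell keeps the smallest depth value. These choices, and the view names `front`, `side` and `top`, are assumptions and not details taken from the patent.

```python
def translate_to_origin(points):
    """Shift points so the minimum corner of their bounding box is the origin."""
    if not points:
        raise ValueError("point cloud is empty")
    mins = [min(p[i] for p in points) for i in range(3)]
    return [tuple(p[i] - mins[i] for i in range(3)) for p in points]


def project_with_depth(points, axes, depth_axis, cell=1.0):
    """Project points onto the plane spanned by `axes`.

    Returns a dict mapping each occupied grid cell to the smallest
    coordinate along `depth_axis` among the points that fall in it.
    """
    depth_map = {}
    for p in points:
        key = (int(p[axes[0]] // cell), int(p[axes[1]] // cell))
        depth = p[depth_axis]
        if key not in depth_map or depth < depth_map[key]:
            depth_map[key] = depth
    return depth_map


def three_views(points, cell=1.0):
    """Build depth maps for three orthogonal observation planes."""
    shifted = translate_to_origin(points)
    return {
        "front": project_with_depth(shifted, (0, 2), 1, cell),  # X-Z plane, depth along Y
        "side": project_with_depth(shifted, (1, 2), 0, cell),   # Y-Z plane, depth along X
        "top": project_with_depth(shifted, (0, 1), 2, cell),    # X-Y plane, depth along Z
    }
```

Each returned dict could then be rasterized into a two-dimensional image for storage or display, which is the stage the abstract describes next.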

Owner:BEIJING QINGYING MACHINE VISUAL TECH CO LTD

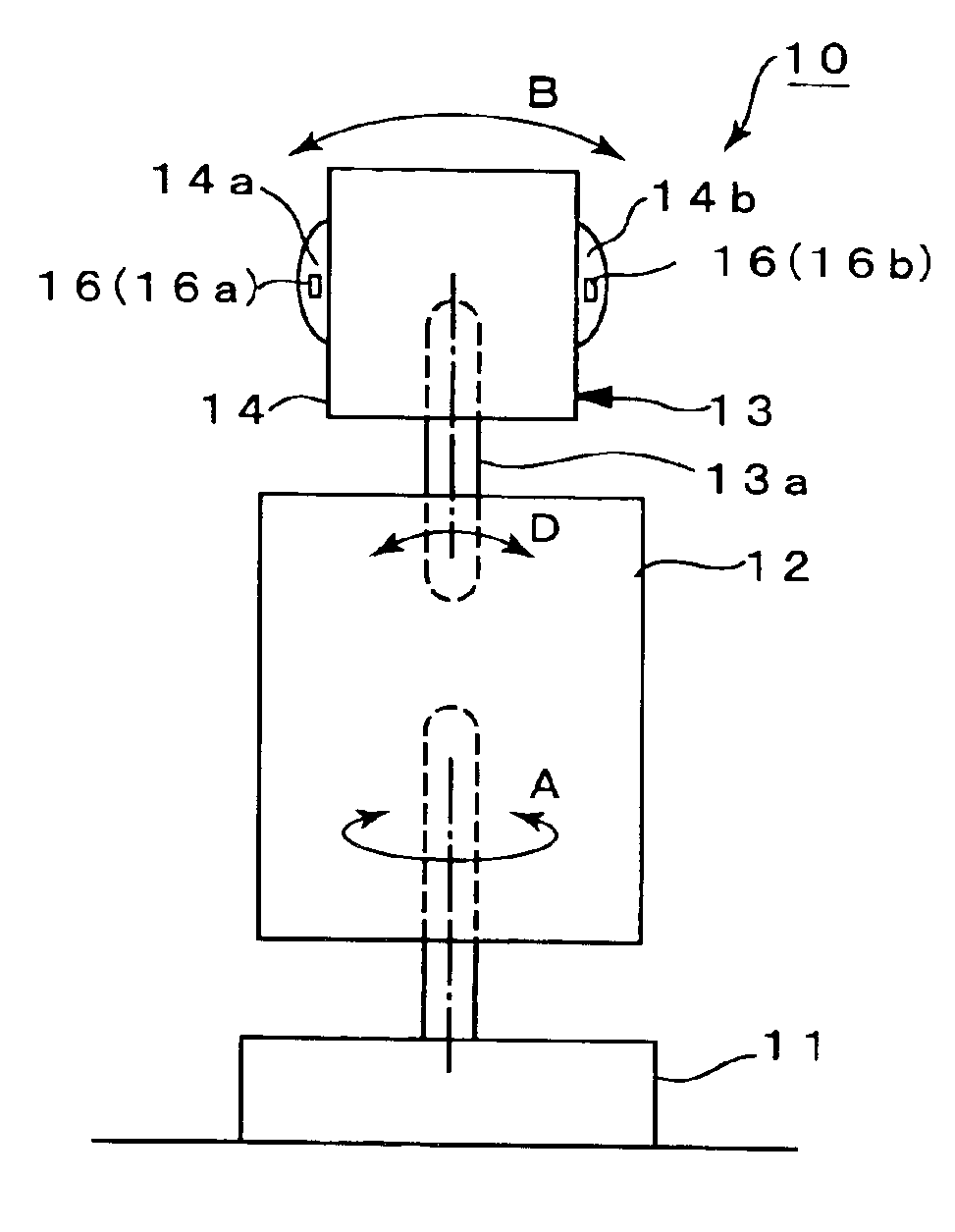

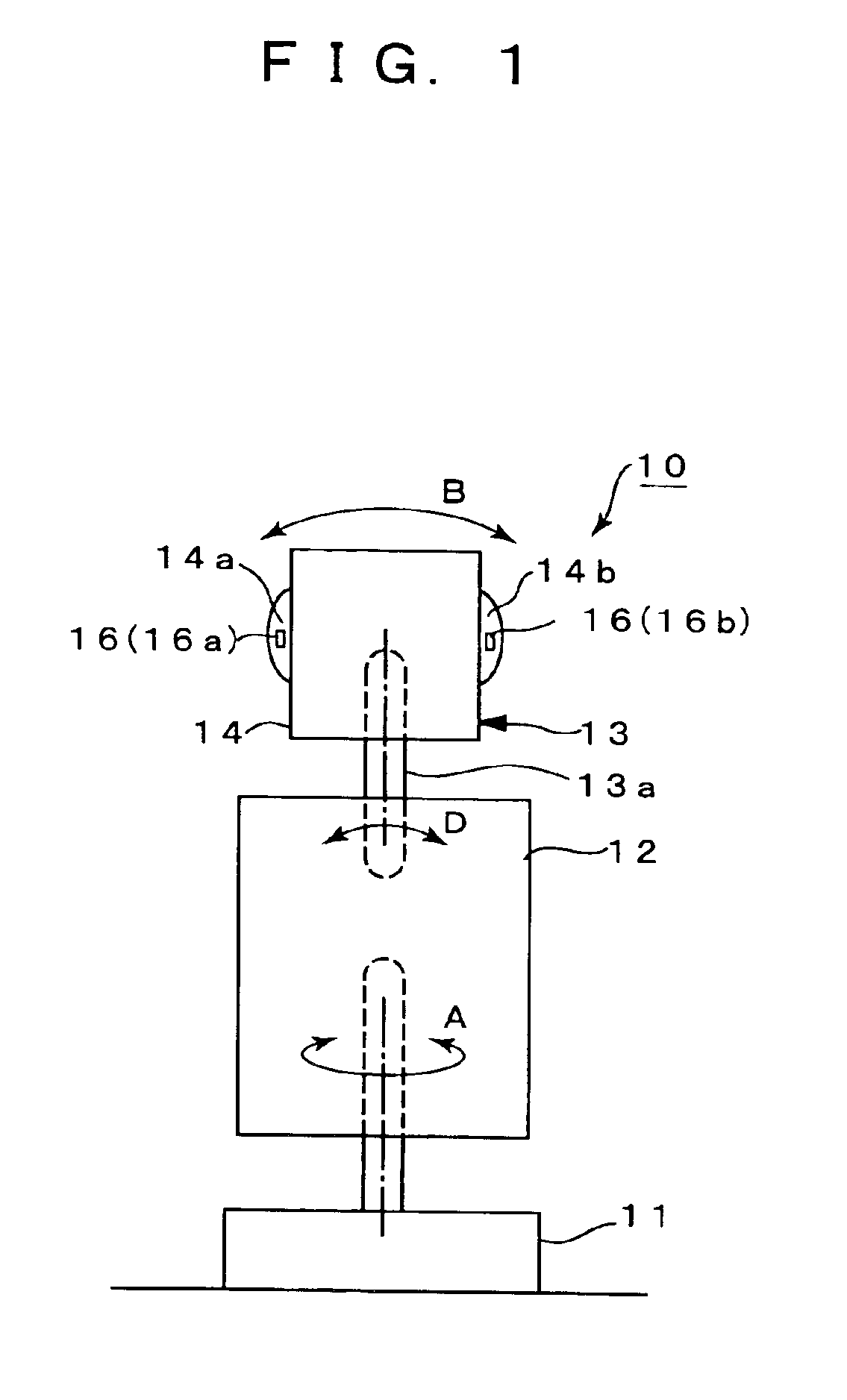

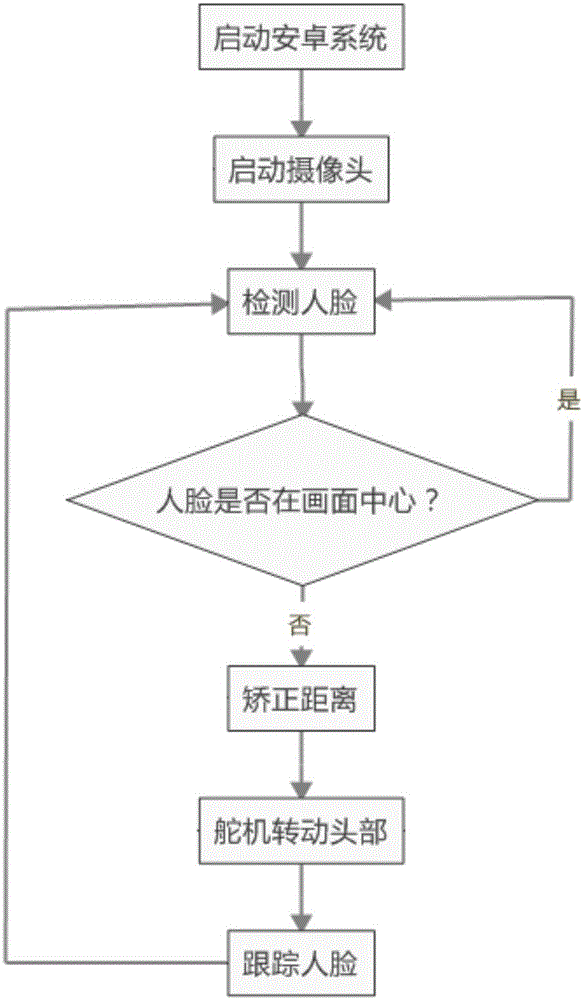

Method and apparatus for realizing head rotation of robot by face detection

Inactive | CN106407882A | Use is no longer monotonous | Add fun | Image enhancement | Programme-controlled manipulator | Face detection | Computer graphics (images)

The invention discloses a method for rotating the head of a robot by face detection. The method comprises: starting a control system, setting target face information, and turning on a camera; the camera rotates to obtain an image and feeds the image information back to the control system, which reads it; the control system identifies whether a target face exists in the image; if so, the control system obtains the target face position and the head of the robot rotates with the movement of the face; if not, the camera obtains an image again. The method and apparatus have the following beneficial effects: head rotation that follows the user is realized by face detection, so that when a user moves in a certain direction at a certain angle, the head of the robot rotates with the user. During use, the robot can directly face the user; the defect that some existing robots move stiffly and lack flexibility and interaction is overcome, so that interaction between the robot and the user becomes substantially more fun.

Owner:HEYUAN YONGYIDA TECH HLDG CO LTD

Method and System for Segmenting Moving Objects from Images Using Foreground Extraction

Inactive | US20110141251A1 | Image enhancement | Television system details | Foreground detection | Methods statistical

Owner:MITSUBISHI ELECTRIC RES LAB INC

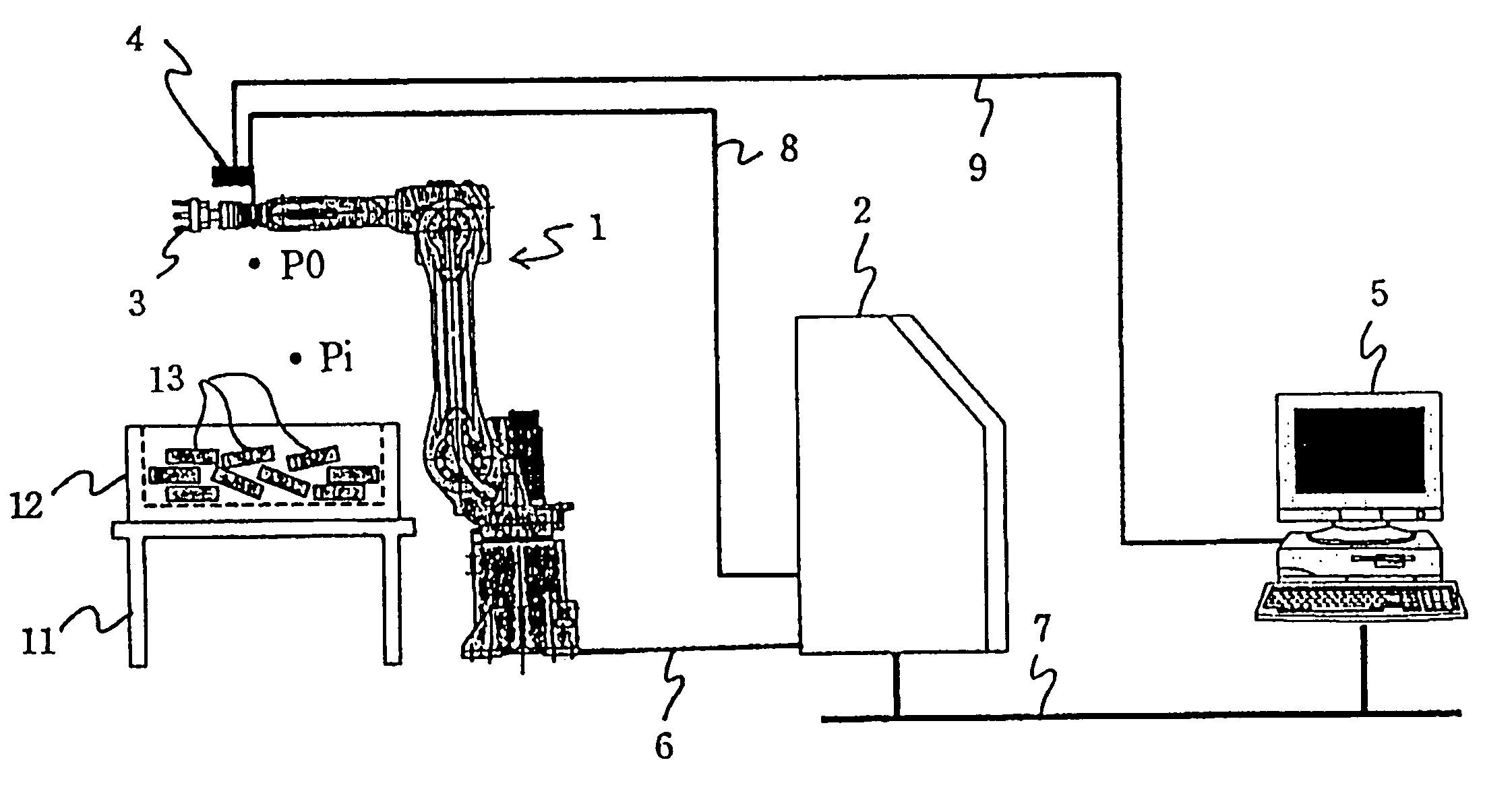

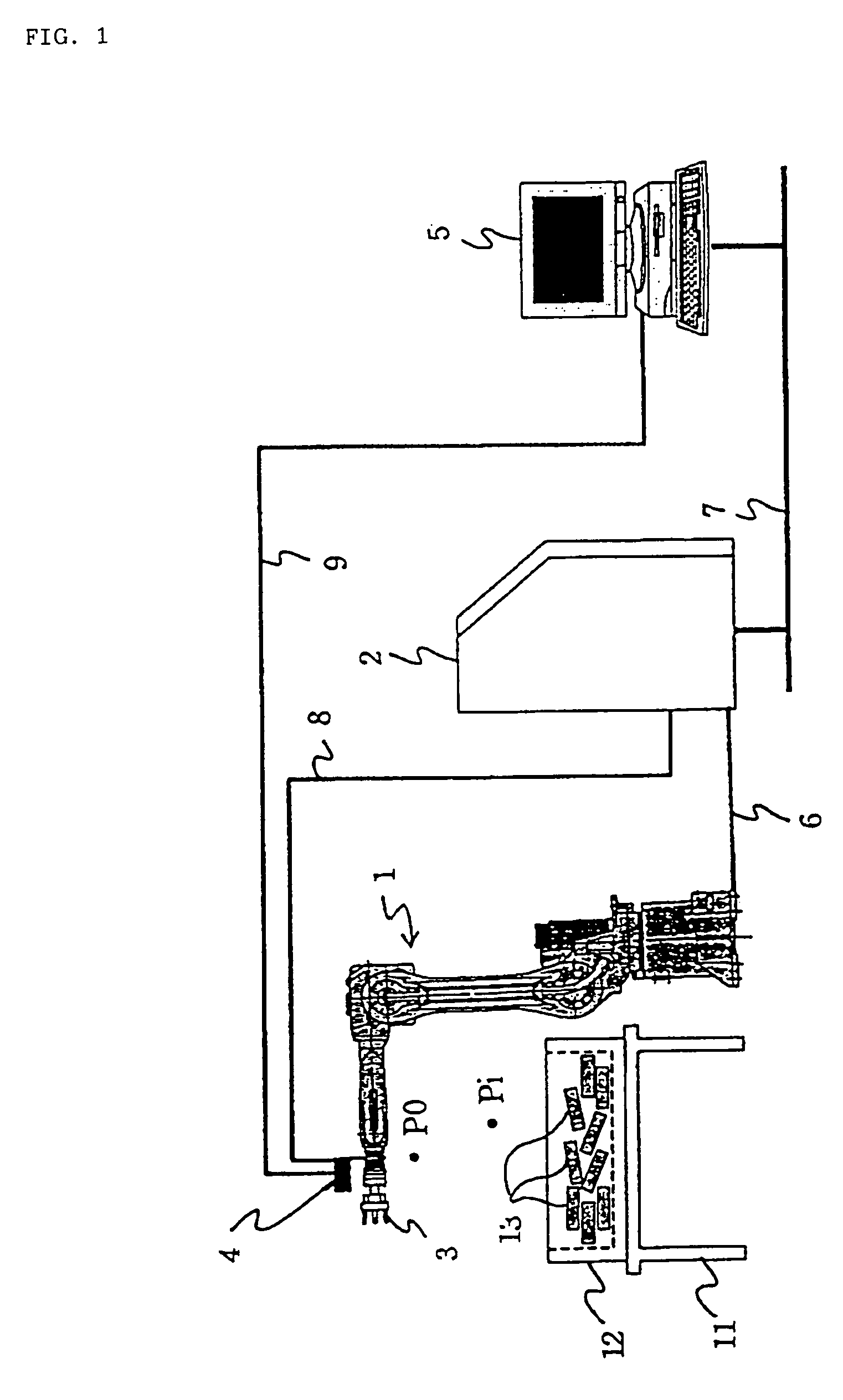

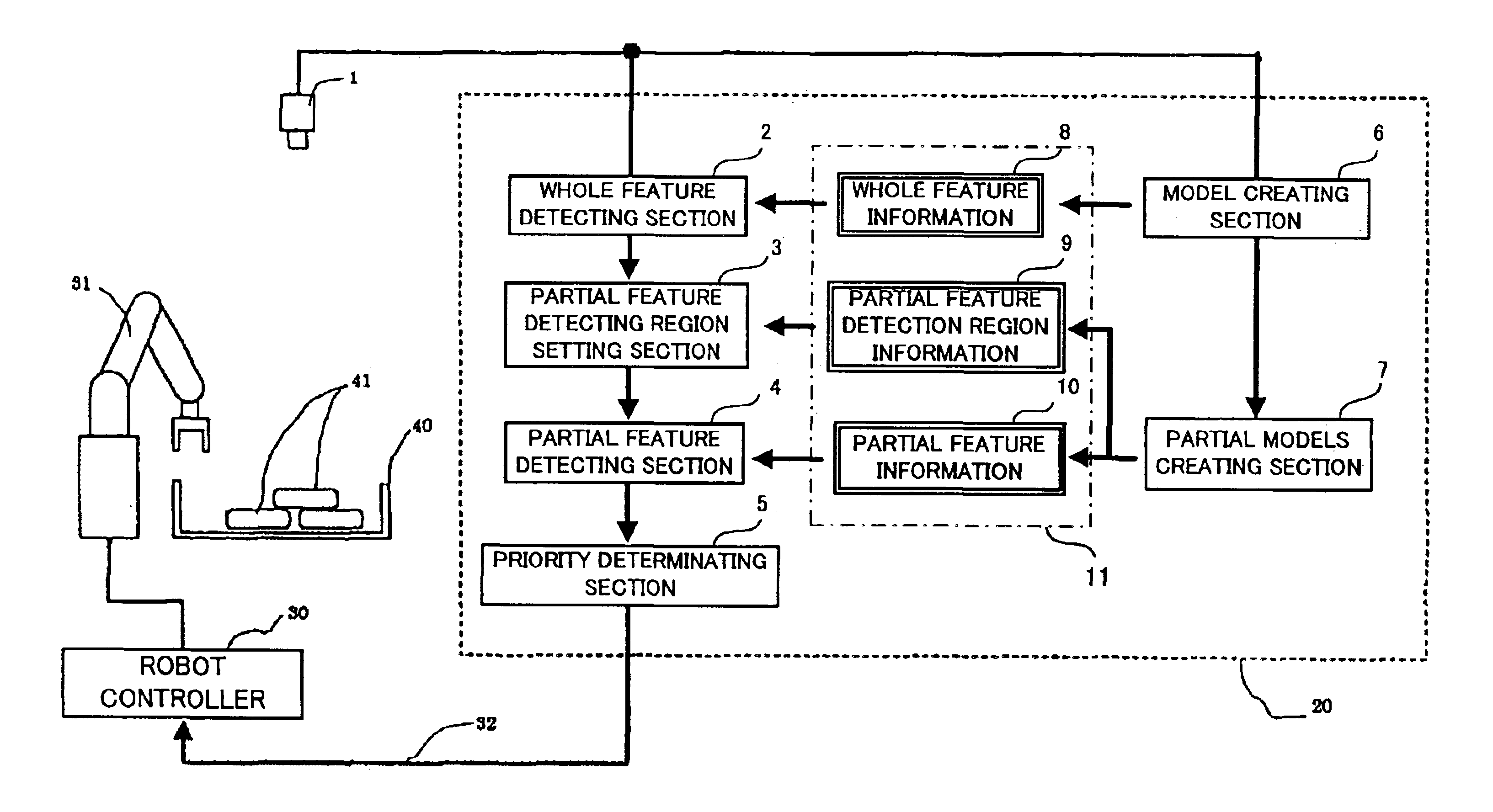

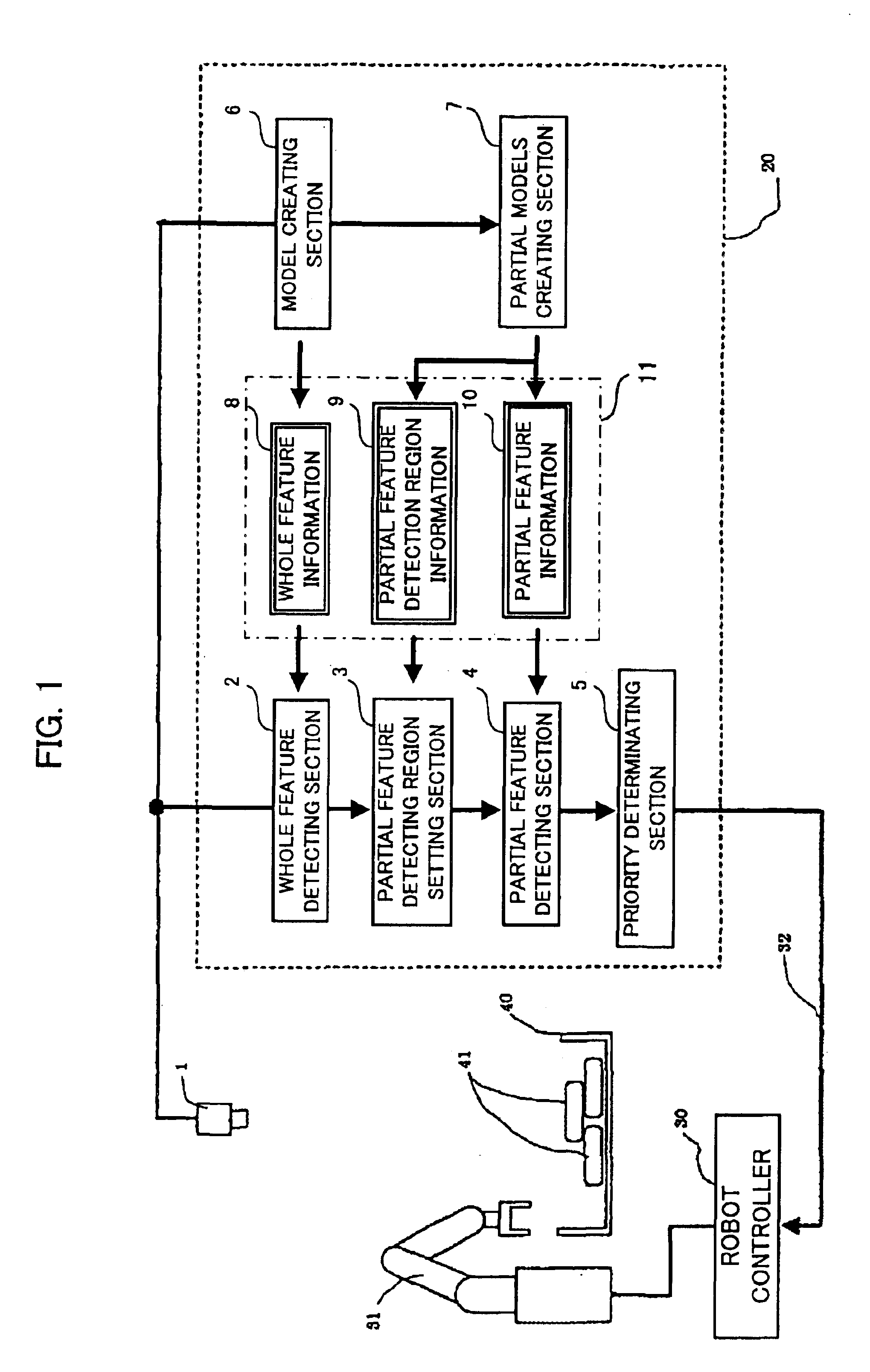

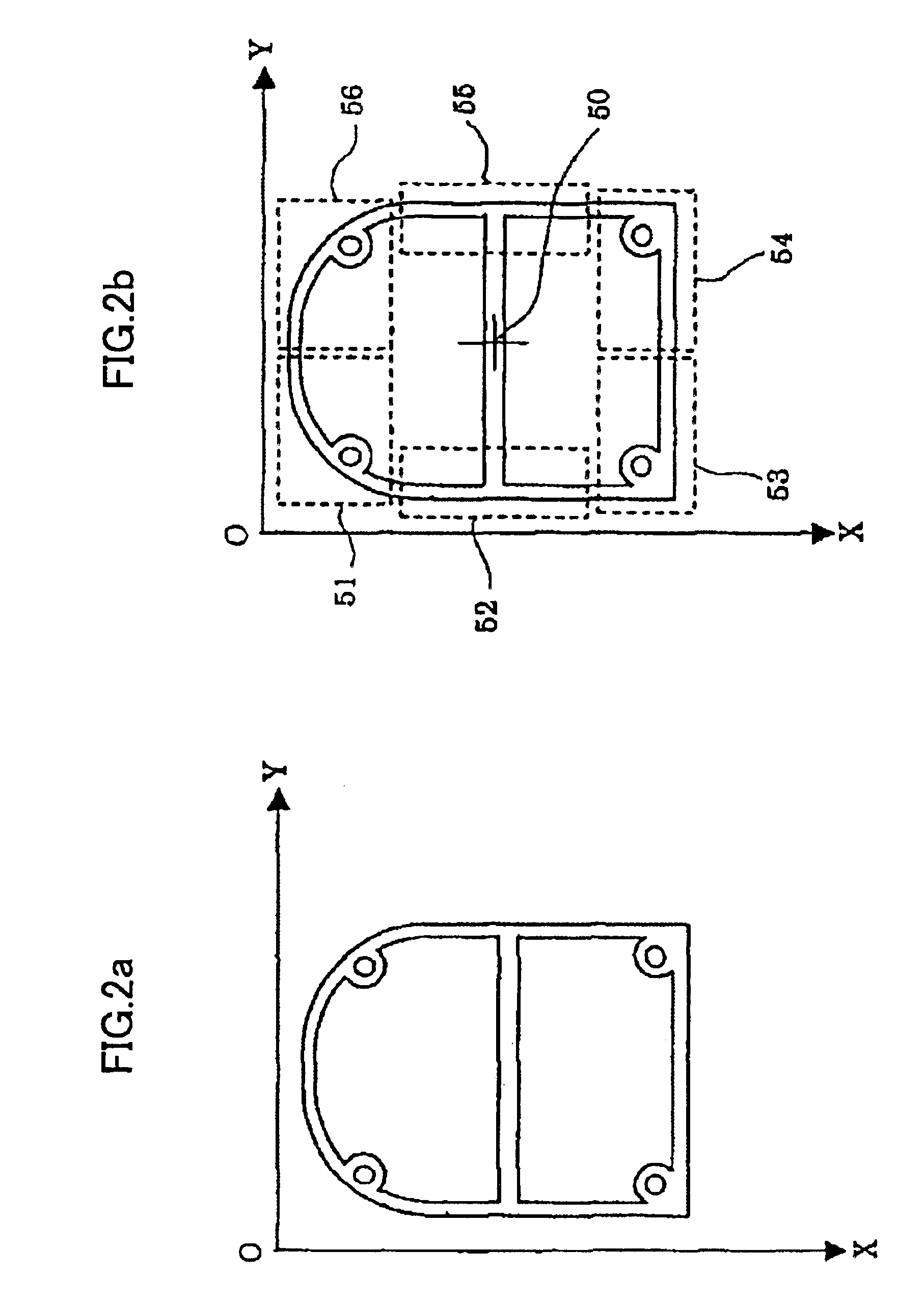

Object taking out apparatus

Inactive | US6845296B2 | Improve reliability | Low cost | Image enhancement | Programme-controlled manipulator | Dependability | Computer science

An object taking out apparatus capable of taking out randomly stacked objects with high reliability and low cost. An image of one of workpieces as objects of taking out at a reference position is captured by a video camera. Whole feature information and partial feature information are extracted from the captured image by a model creating section and a partial model creating section, respectively, and stored in a memory with information on partial feature detecting regions. An image of randomly stacked workpieces is captured and analyzed to determine positions / orientations of images of the respective workpieces using the whole feature information. Partial feature detecting regions are set to the images of the respective workpieces using the determined positions / orientations of the respective workpieces and information on partial feature detecting regions stored in the memory. Partial features of the respective workpieces are detected in the partial feature detecting regions using the partial feature information, and priority of taking out the workpiece is determined based on results of detection of the partial features. A robot is controlled to successively take out the object of the first priority using data of the position / orientation of the object of the first priority.

Owner:FANUC LTD

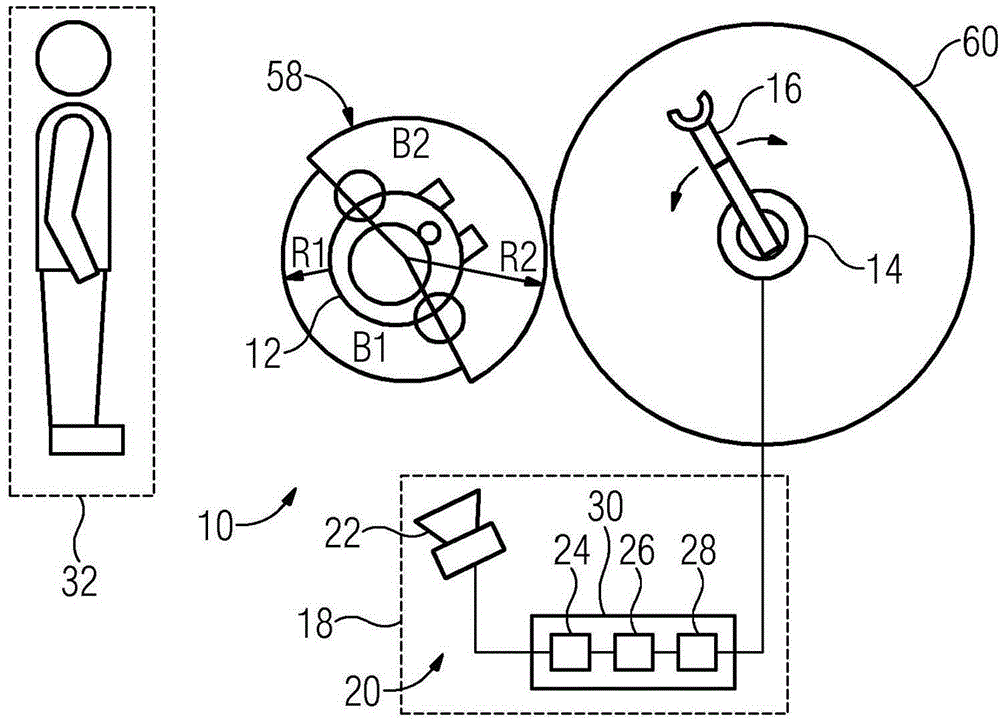

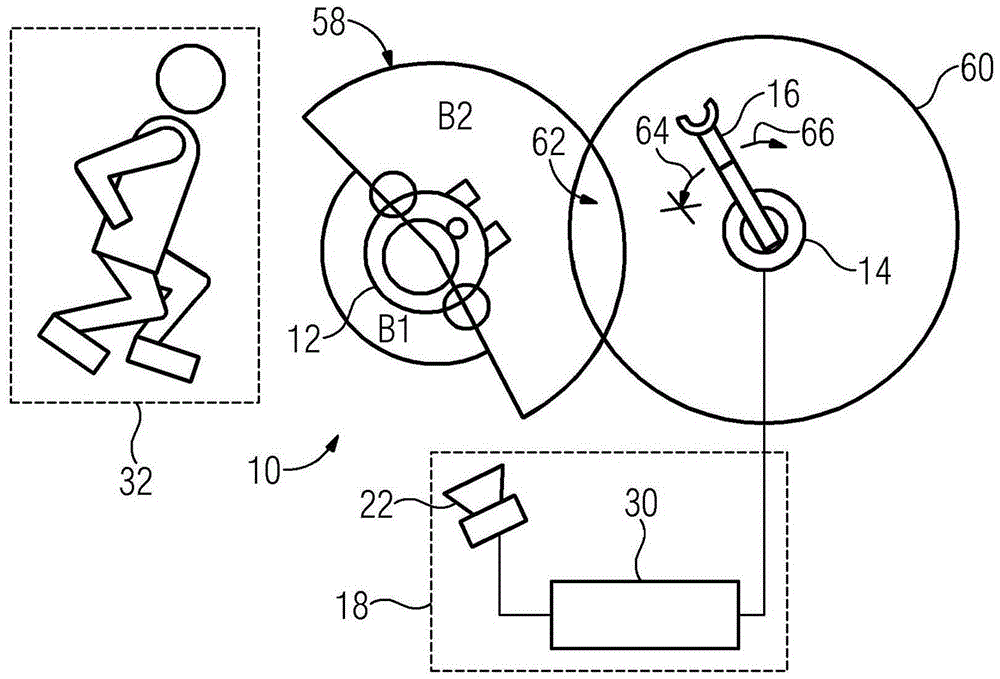

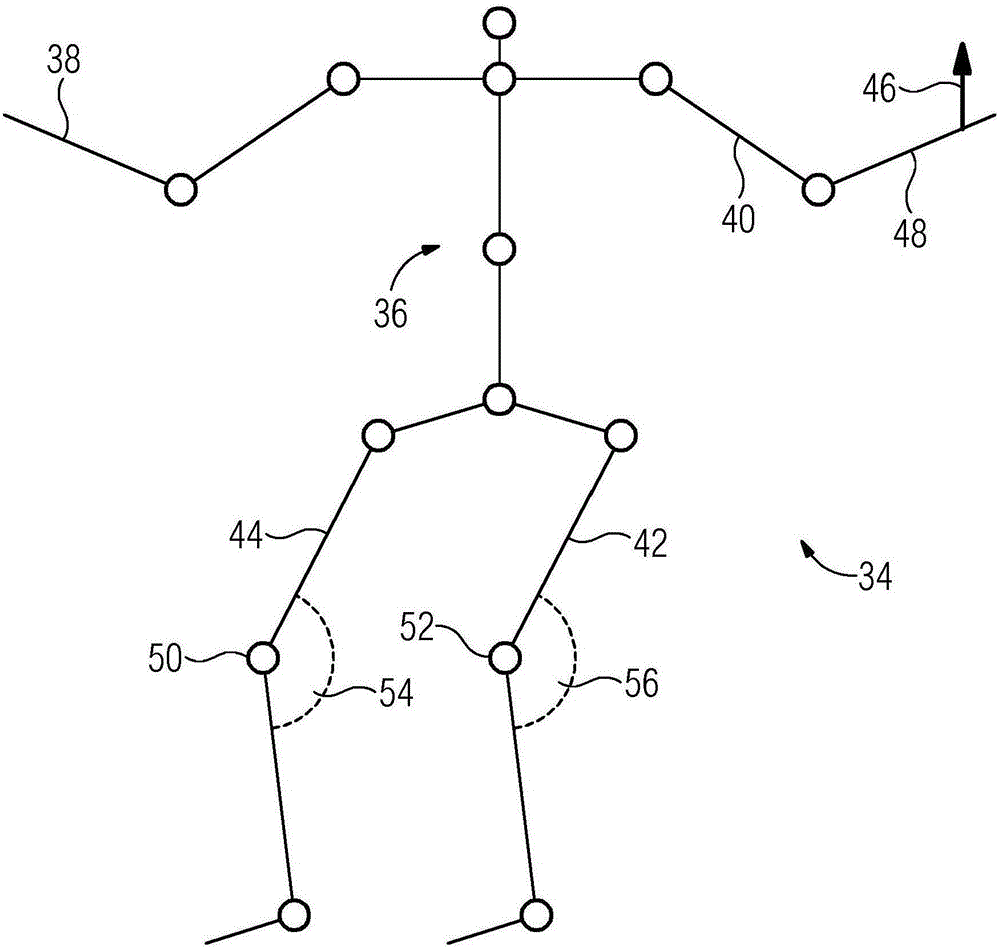

Robot arrangement and method for controlling a robot

The invention relates to a method for controlling a robot (14), which is designed to be operated in a working mode, in which a part (16) of the robot (14) is moved at a speed at which there is a risk of injury to a person (12), the working mode being deactivated if a safety device (18) detects that the person (12) has entered an action region (60) of the displaceable part (16). The aim is to make close cooperation possible between the person (12) and the robot (14). A sensor unit (20) determines a position and a posture of the person (12) while the person (12) is outside the action region (60) of the part (16). A prediction unit (26) determines an action region (58) of the person (12). A collision monitoring unit (28) monitors whether the two action regions (58, 60) overlap (62). The robot (14) can optionally be switched from the working mode into a safety mode.
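The overlap check between the two action regions can be sketched with a deliberately simple geometric model. Here both regions are circles in the floor plane, and the person's region grows with an assumed maximum walking speed over a prediction horizon. The circle model and every name and parameter below are illustrative assumptions; the abstract does not specify how the regions are represented.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Circle:
    """Circular action region in the floor plane (metres)."""
    x: float
    y: float
    radius: float


def predict_person_region(x, y, max_speed, horizon, reach):
    """Region the person could occupy within `horizon` seconds (assumed model)."""
    return Circle(x, y, reach + max_speed * horizon)


def regions_overlap(a, b):
    """True if the two circular regions touch or intersect."""
    return math.hypot(a.x - b.x, a.y - b.y) <= a.radius + b.radius


def select_mode(person_region, robot_region):
    """Switch to the safety mode as soon as the action regions overlap."""
    return "safety" if regions_overlap(person_region, robot_region) else "working"
```

Because the person's region is predicted while the person is still outside the robot's region, the mode can change before any physical intrusion occurs, which is the point the abstract makes about close cooperation.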

Owner:SIEMENS AG

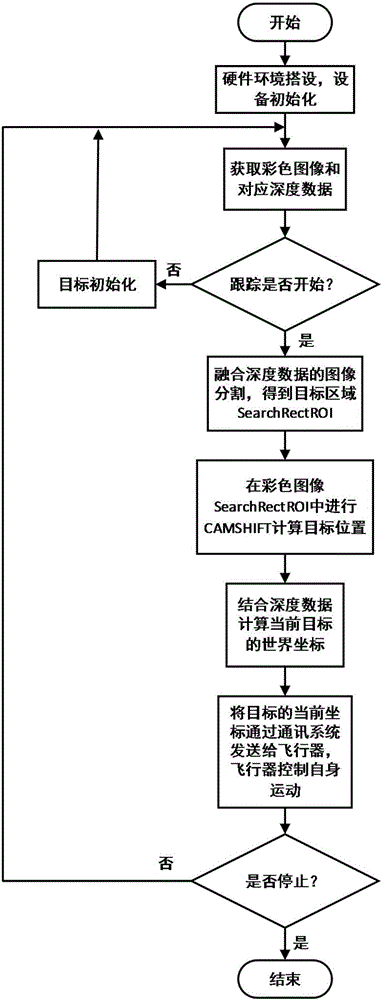

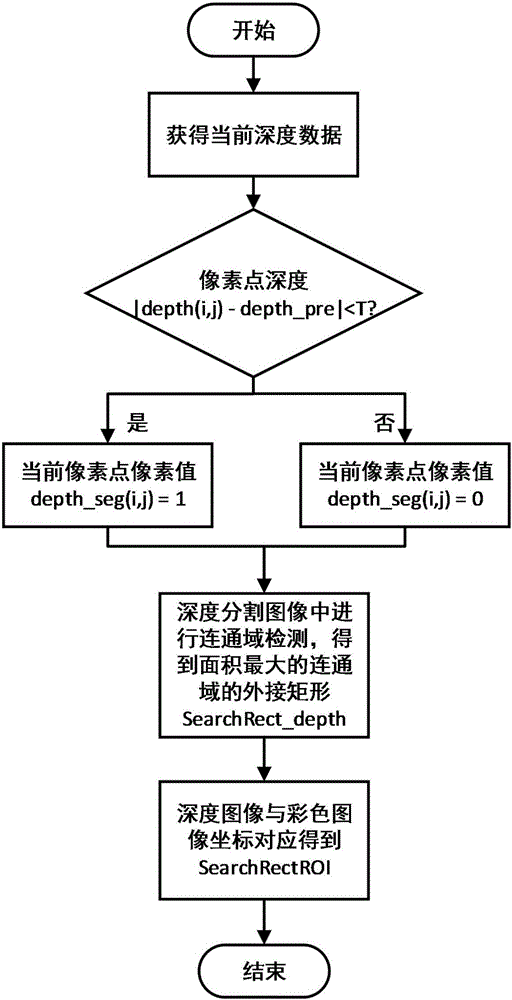

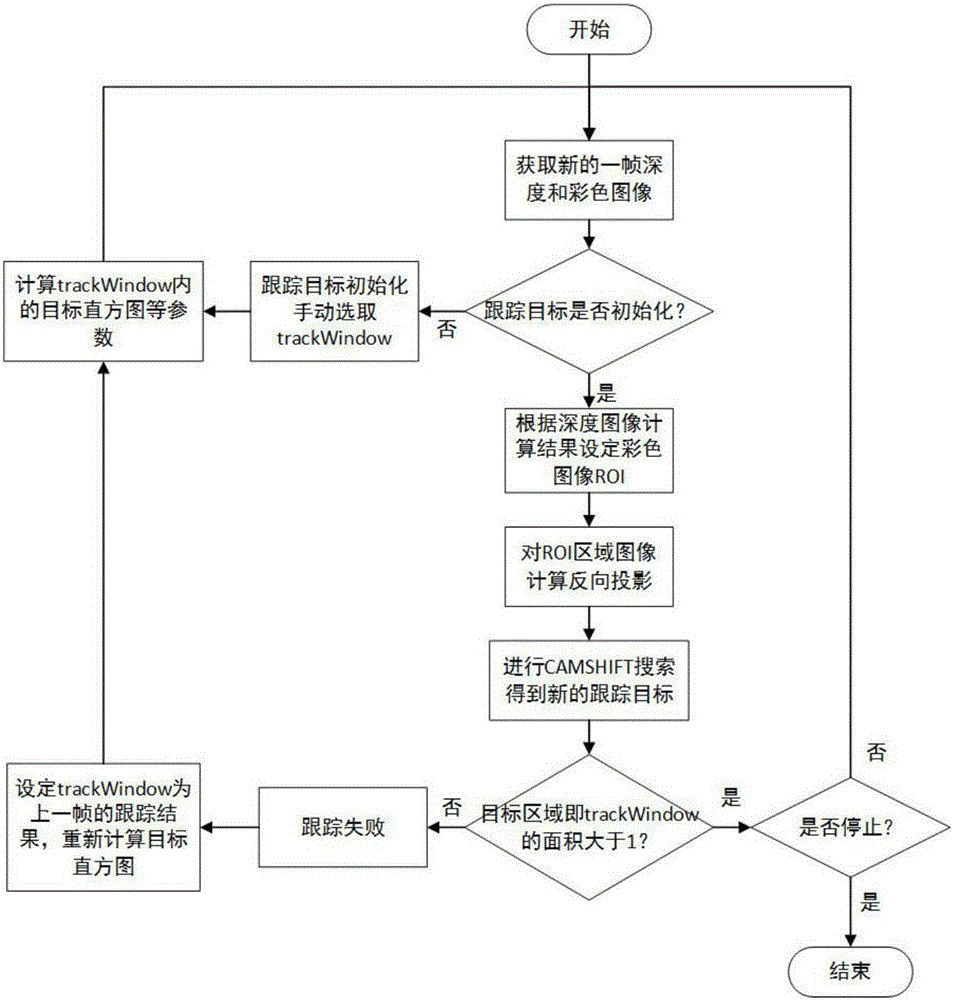

Target positioning method based on RGBD

Inactive | CN106384353A | Reduce sensitivity | Improve robustness | Image enhancement | Image analysis | Color image | Communications system

The invention provides a target positioning method based on RGBD. The main steps comprise: hardware environment arrangement and device initialization; an RGBD sensor is used to acquire the depth data and the color image of the current frame for a mobile robot; the depth image is segmented based on the depth data to obtain a target region in the depth image; the target region is set as SearchRect_depth and mapped to the color image, and the corresponding part of the color image is recorded as SearchRectROI; within SearchRectROI, the CAMSHIFT method is used to calculate the position (x, y) of the target in the color image; the depth data is combined to calculate the world coordinates (xw, yw, zw) of the current target; the world coordinates (xw, yw, zw) are transmitted to the mobile robot through a communication system, and the robot controls its own motion accordingly. The method has the advantages of fast calculation, accurate positioning, strong adaptability to environmental change and good extensibility to different targets.
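The step that combines the pixel position (x, y) with the depth data is not spelled out in the abstract. A common way to do it, shown here as a sketch under the assumption of a pinhole camera with known intrinsics and a known camera-to-world pose, is back-projection followed by a rigid transform. The parameter names (`fx`, `fy`, `cx`, `cy`, `rotation`, `translation`) are assumptions, and the CAMSHIFT tracking step itself is not reproduced.

```python
def pixel_to_camera(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with measured depth into the camera frame."""
    if depth <= 0:
        raise ValueError("depth must be positive")
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def camera_to_world(point, rotation, translation):
    """Apply p_world = R * p_camera + t, with R given as a 3x3 nested list."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def target_world_position(u, v, depth, intrinsics, rotation, translation):
    """World coordinates (xw, yw, zw) of the tracked target pixel."""
    fx, fy, cx, cy = intrinsics
    return camera_to_world(pixel_to_camera(u, v, depth, fx, fy, cx, cy),
                           rotation, translation)
```

With an identity rotation and zero translation, the world frame coincides with the camera frame, which is a convenient starting point when the sensor is rigidly mounted on the robot.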

Owner:FOSHAN NANHAI GUANGDONG TECH UNIV CNC EQUIP COOP INNOVATION INST

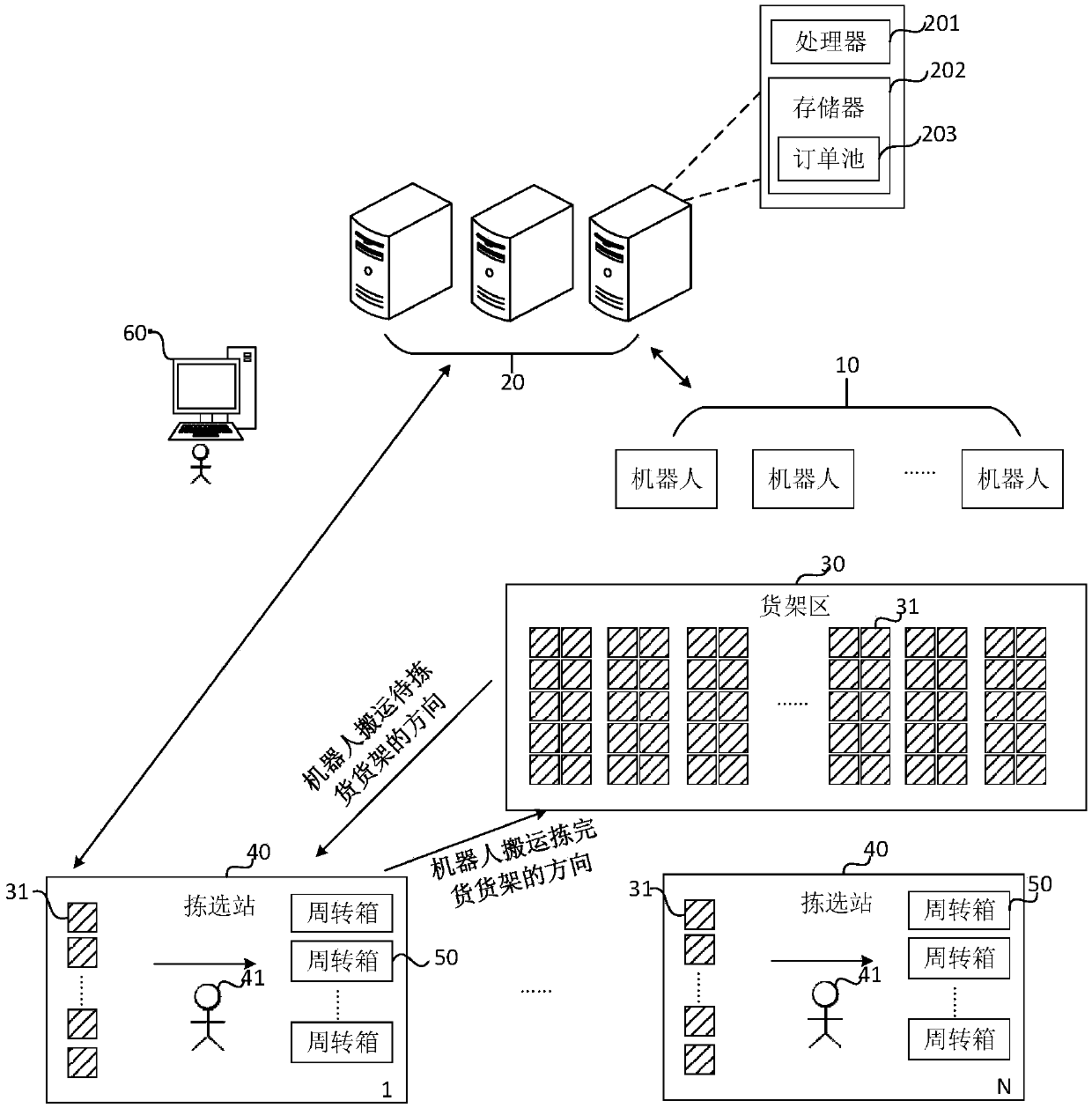

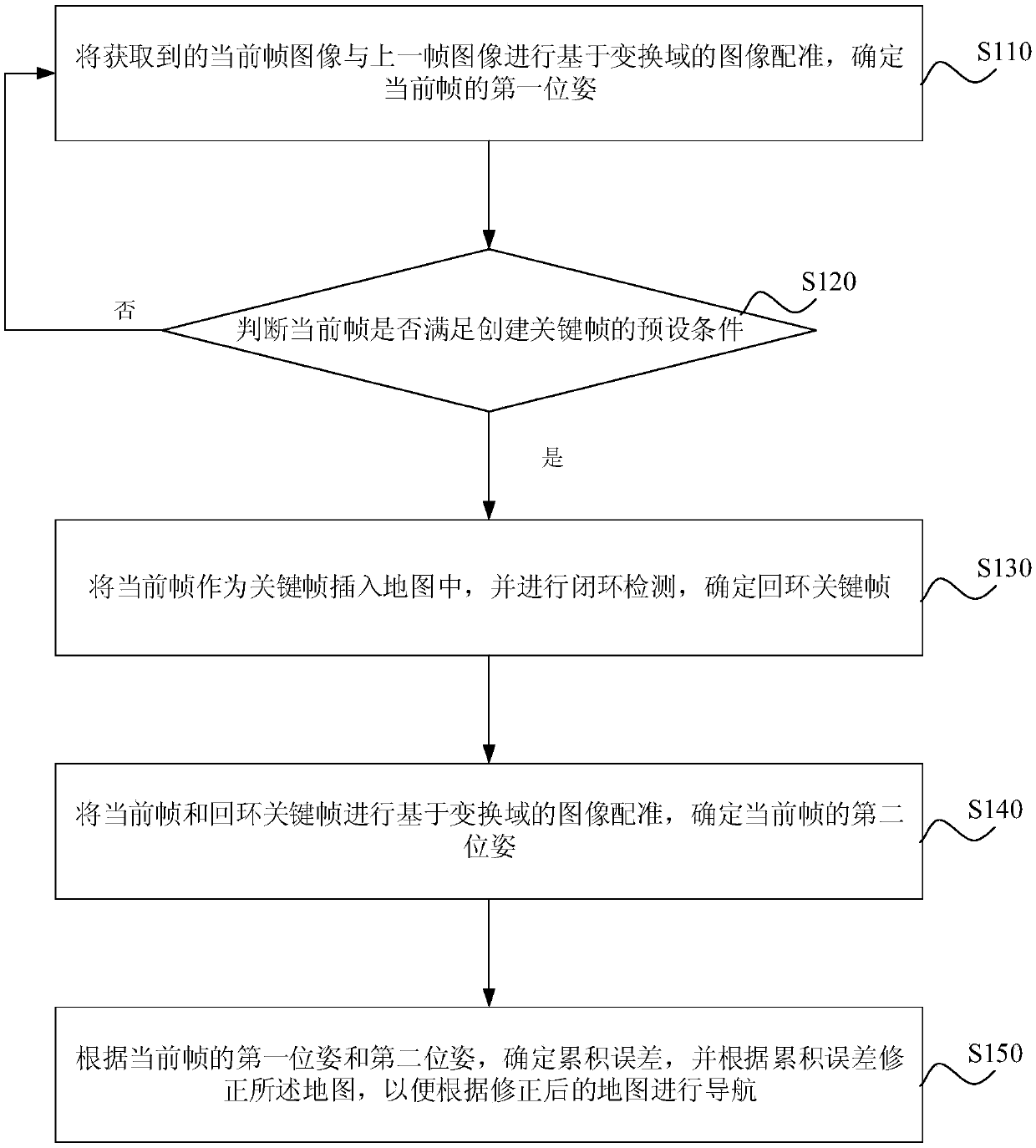

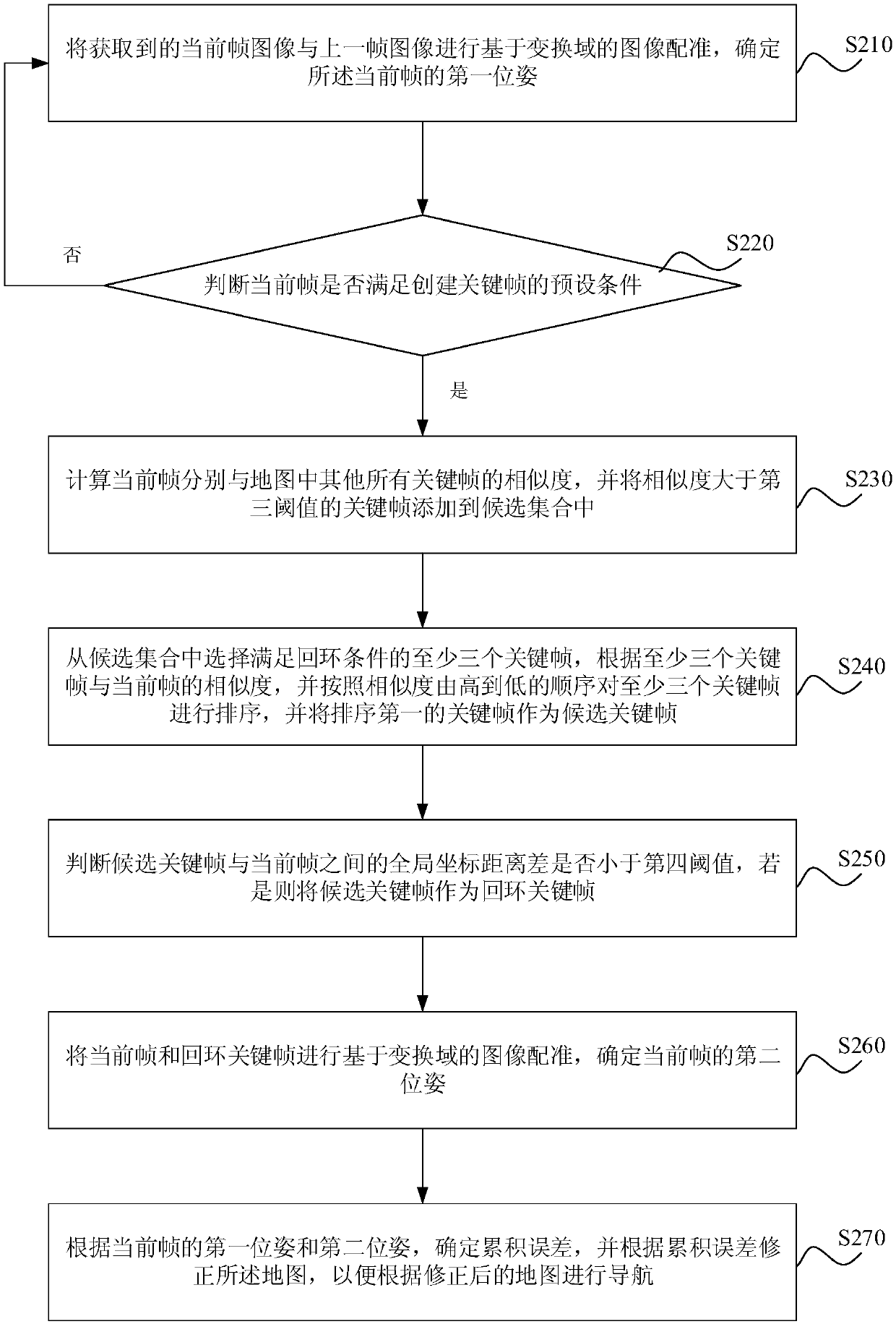

Navigation method, device, apparatus, and storage medium based on ground texture image

Embodiments of the invention disclose a navigation method, a device, an apparatus, and a storage medium based on a ground texture image. The method comprises the following steps: performing transform-domain image registration on an acquired current frame image and a previous frame image to determine the first position of the current frame; determining whether the current frame satisfies a preset condition for creating a key frame and, if so, performing the following steps: inserting the current frame as a key frame into a map, and performing closed-loop detection to determine a loopback key frame; performing transform-domain image registration on the current frame image and the loopback key frame to determine the second position of the current frame; determining a cumulative error according to the first position and the second position of the current frame, and modifying the map according to the cumulative error so as to navigate according to the modified map. According to the embodiments of the invention, the position can be calculated by transform-domain image registration, the cumulative error is determined and corrected, and the accuracy of SLAM navigation is improved.

Owner:BEIJING JIZHIJIA TECH CO LTD

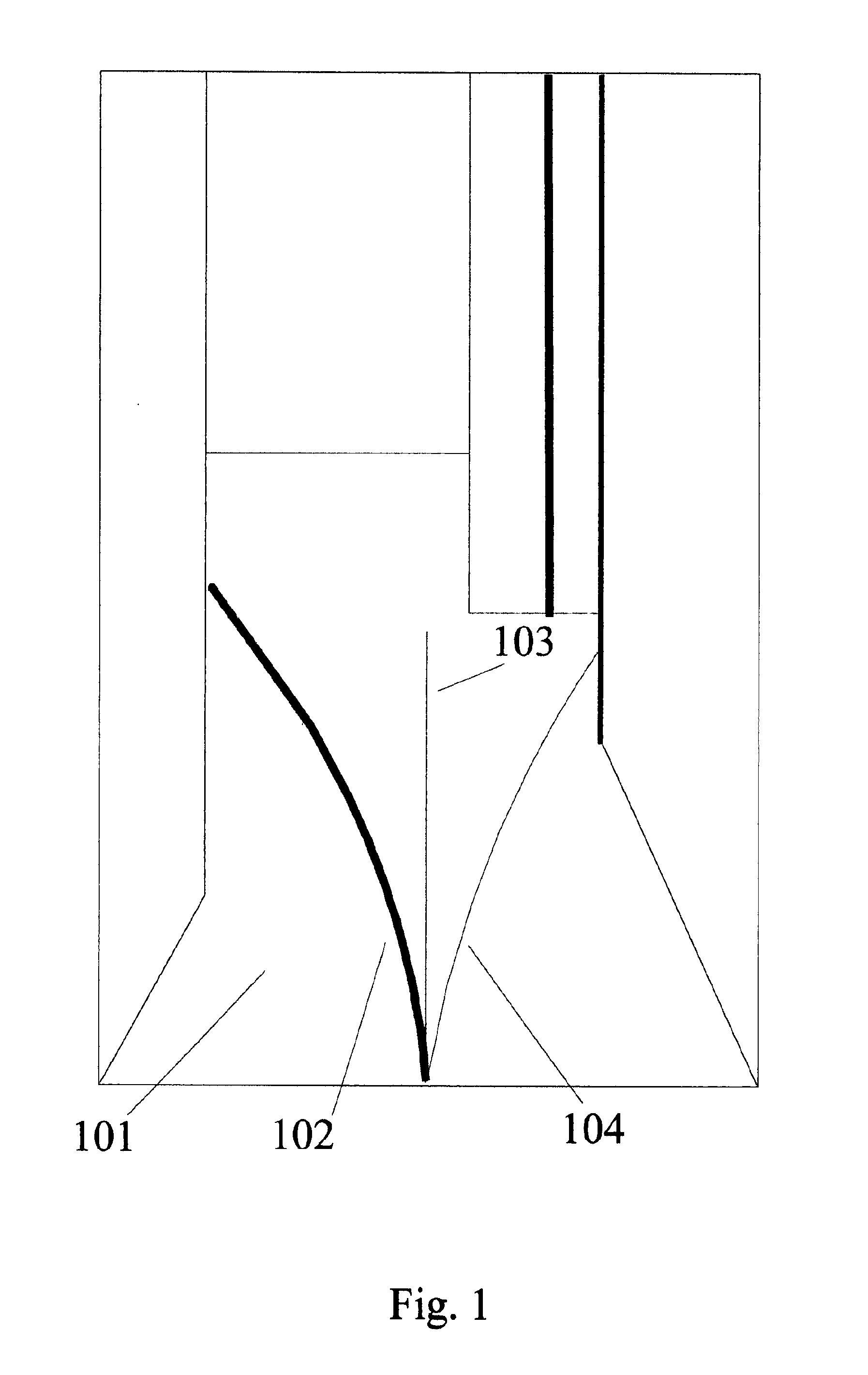

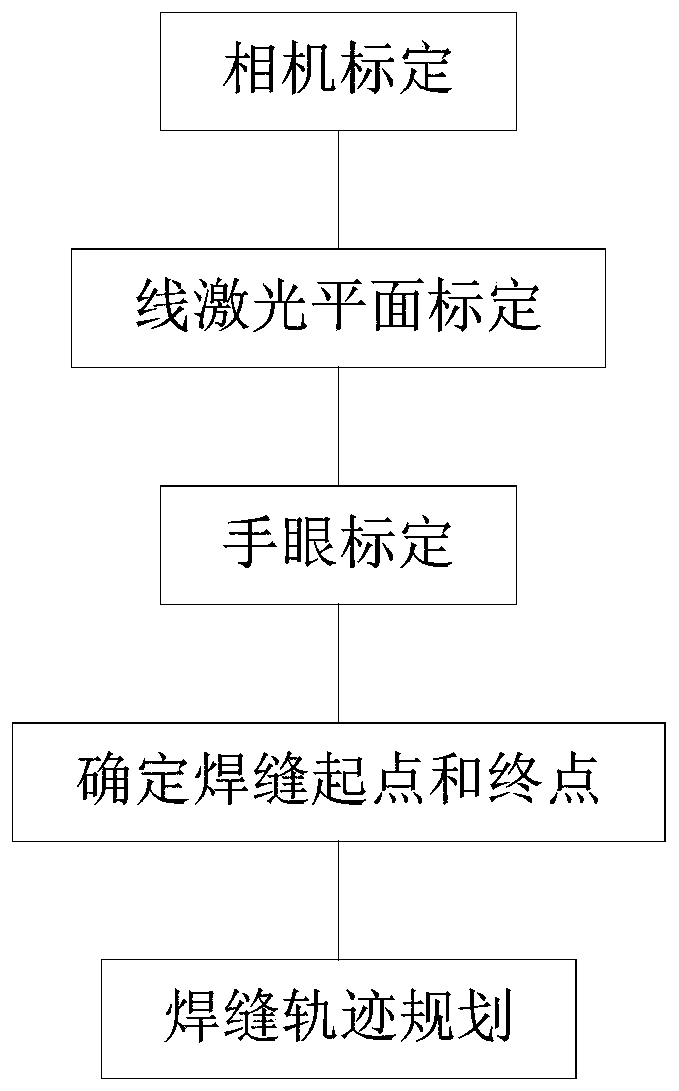

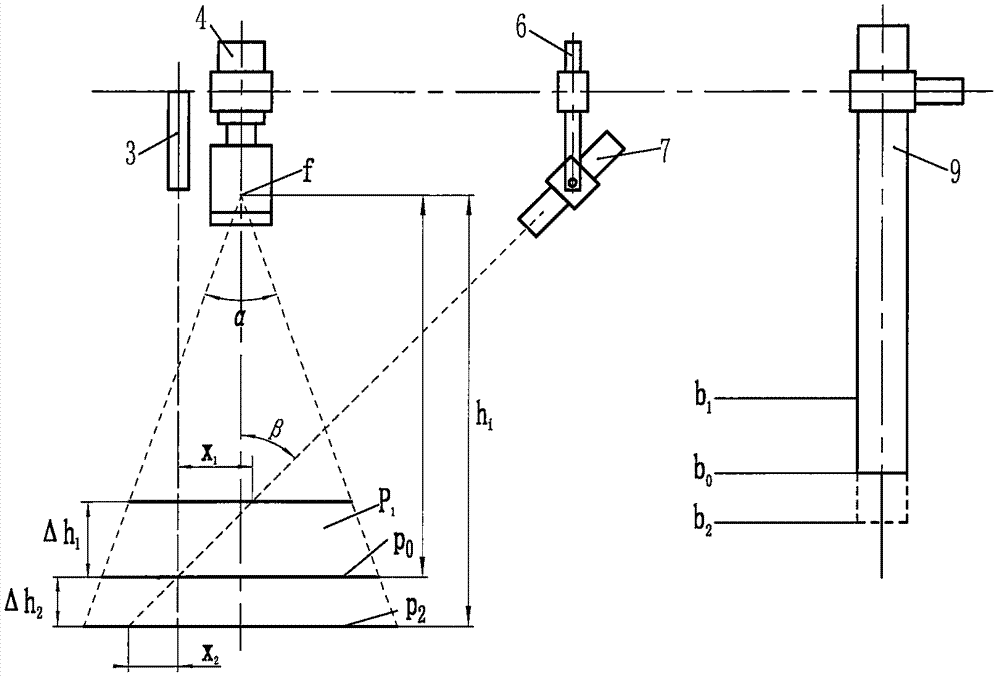

Intelligent three-dimensional autonomous weld joint tracing method

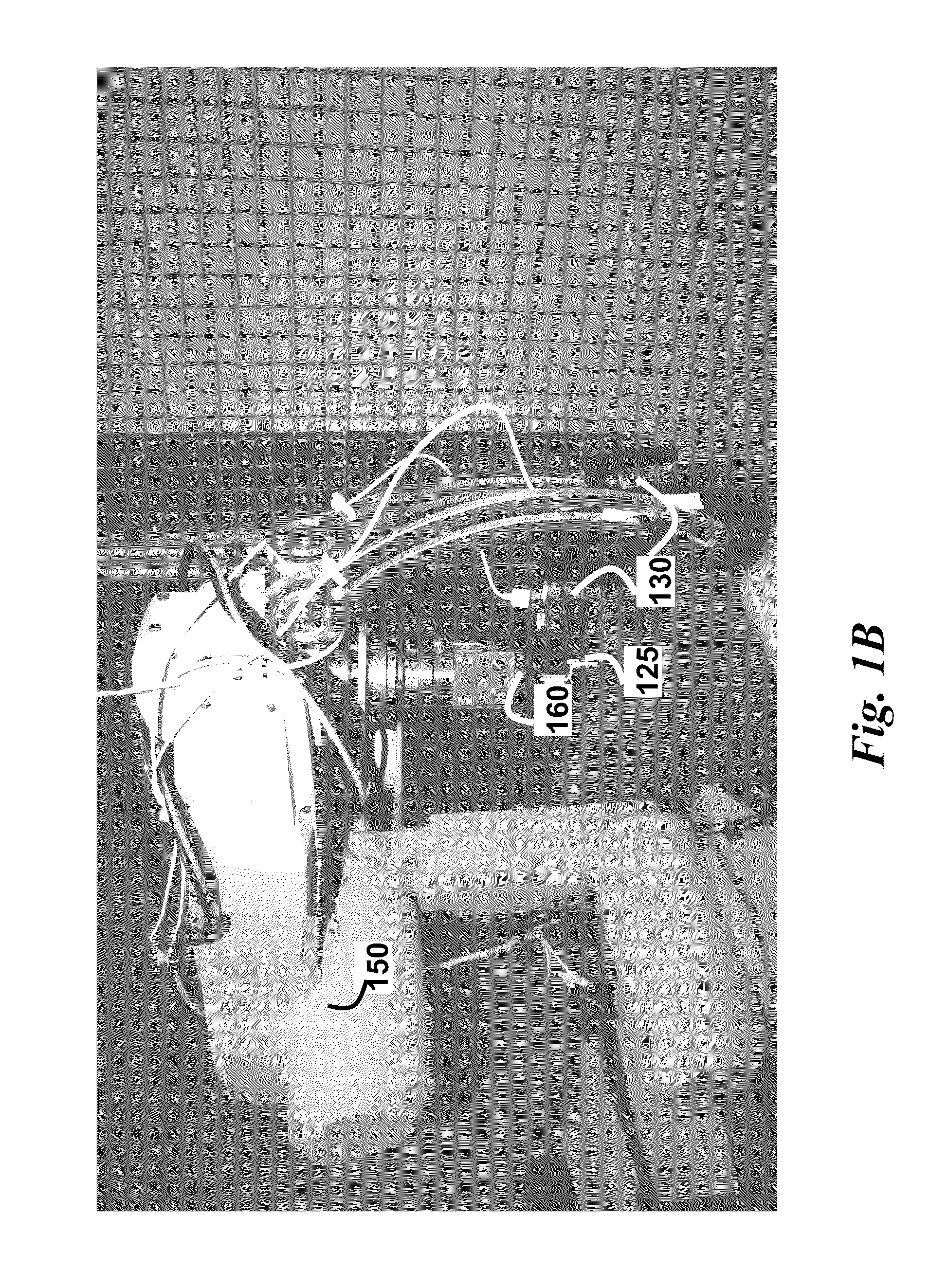

Inactive | CN110245599A | Trace implementation | Tracking and solving | Image enhancement | Programme-controlled manipulator | Acute angle | Hand eye calibration

The invention belongs to the technical field of automatic welding and provides an intelligent three-dimensional autonomous weld seam tracking method comprising the following steps: S1, camera calibration: a camera captures images of calibration plates at different spatial positions, a corresponding system of equations is established from the captured image information, and the intrinsic and extrinsic parameter matrices are obtained by solving it; S2, line laser plane calibration; S3, hand-eye calibration; S4, determination of the weld seam start point and end point; and S5, weld seam trajectory planning. In this method, weld grooves of any type are identified with an image algorithm; line laser triangulation and a vision-robot calibration algorithm are used to plan the motion path autonomously, so that a weld seam anywhere in three-dimensional space is tracked autonomously, three-dimensional orientation information of the weld groove is obtained, and right-angle or acute-angle inflection points are tracked by adaptive pre-swing.

Owner:深圳市超准视觉科技有限公司

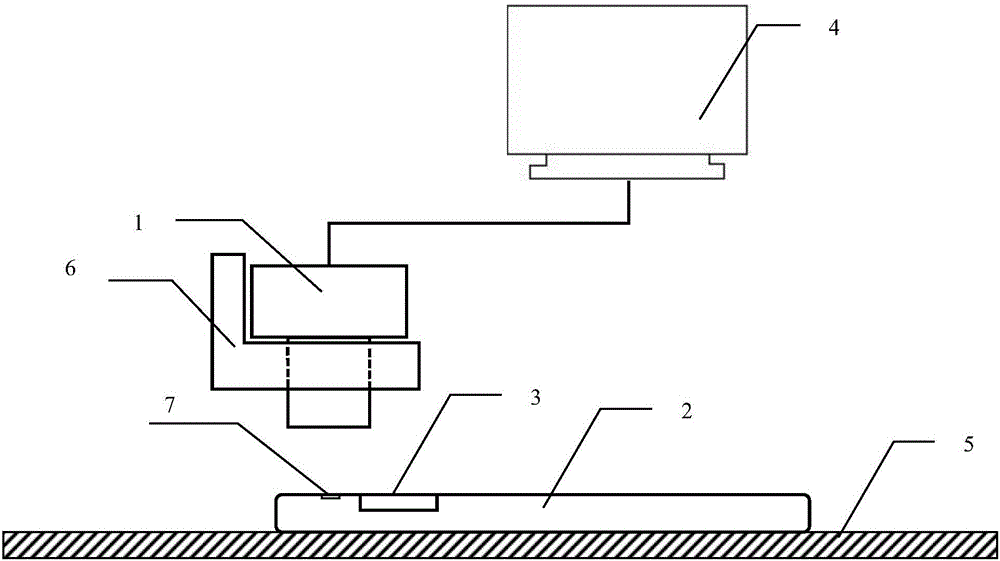

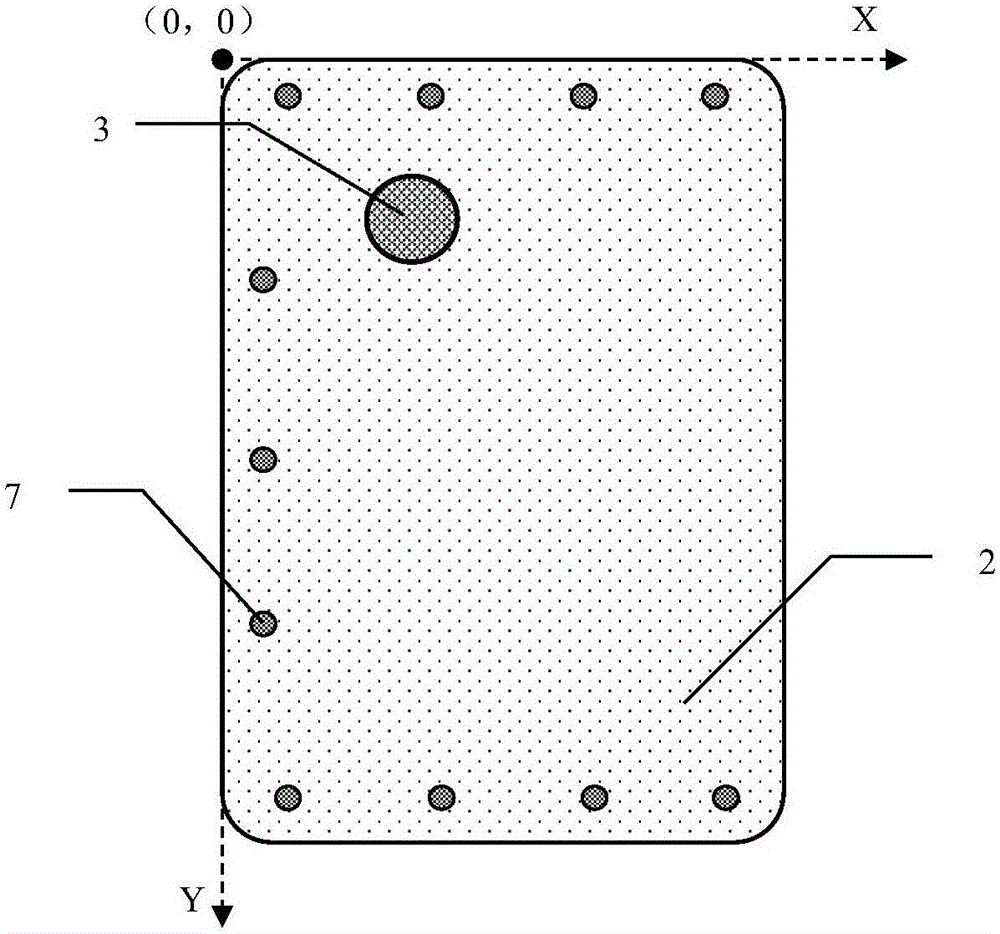

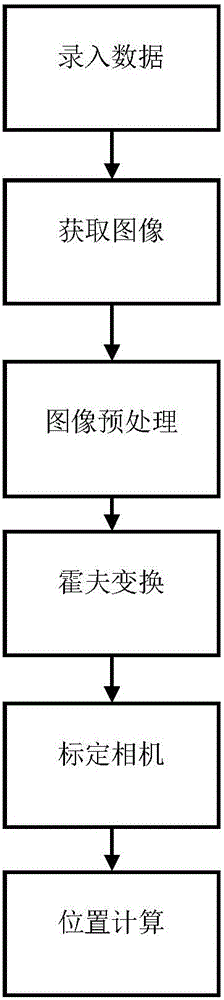

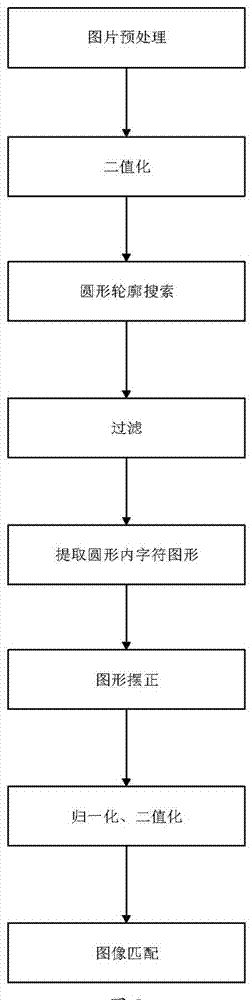

Machine vision positioning method for automatic screw assembling

Active | CN106251354A | Outstanding Features | Highlight significant progress | Image enhancement | Image analysis | Machine vision | Optical depth

The invention relates to the field of metering equipment characterized by the use of optical methods and provides a machine vision positioning method for automatic screw assembly. The method comprises the steps of inputting and recording data, acquiring an image, preprocessing the image, applying the Hough transform, calibrating the camera, and calculating the position. A circle in the image is found with the Hough transform and its center is used as the positioning point, which overcomes the demanding feature point selection and low precision of existing visual positioning methods, as well as assembly failures in prior-art automatic screw assembly caused by a clamp fixing the product position inaccurately.
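To make the circle-finding step concrete, here is a minimal pure-Python sketch of a circle Hough transform. It assumes the radius is already known and that edge pixels have been extracted beforehand; both are simplifications not stated in the abstract, and `hough_circle_center` is a hypothetical name. Each edge pixel votes for every candidate center lying at the given radius from it, and the most-voted cell is returned.

```python
import math
from collections import Counter


def hough_circle_center(edge_points, radius, angle_steps=360):
    """Return the most-voted circle center (integer pixel) for a known radius."""
    votes = Counter()
    for x, y in edge_points:
        candidates = set()
        for k in range(angle_steps):
            theta = 2 * math.pi * k / angle_steps
            a = round(x - radius * math.cos(theta))
            b = round(y - radius * math.sin(theta))
            candidates.add((a, b))
        # Each edge pixel votes at most once per candidate center.
        for center in candidates:
            votes[center] += 1
    if not votes:
        raise ValueError("no edge points supplied")
    return votes.most_common(1)[0][0]
```

A production system would more likely use an optimized library routine and search over a range of radii; the sketch only shows why the accumulator peak coincides with the center of the screw hole.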

Owner:HEBEI UNIV OF TECH

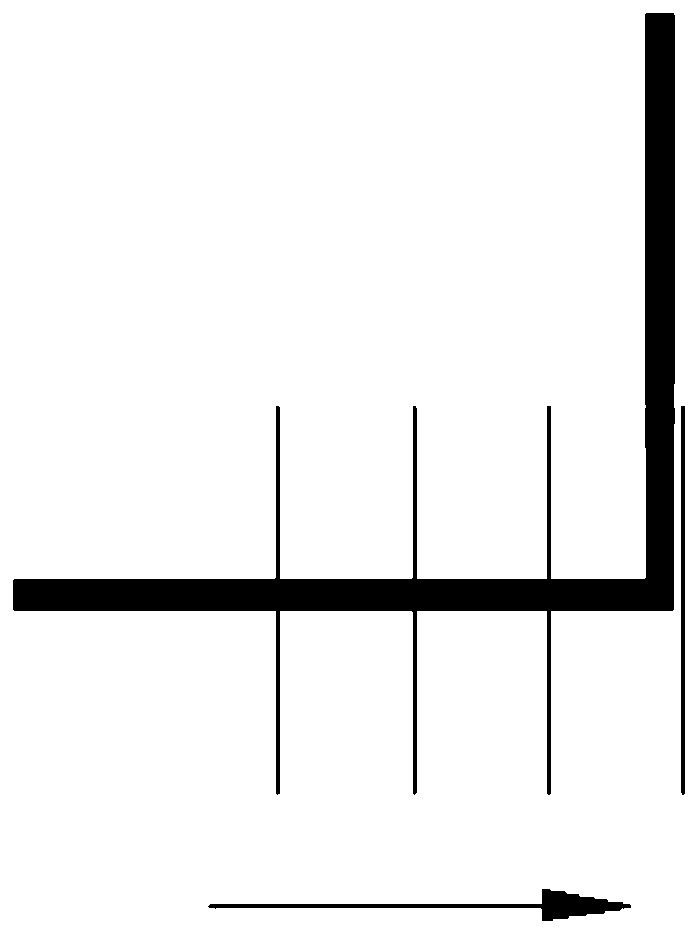

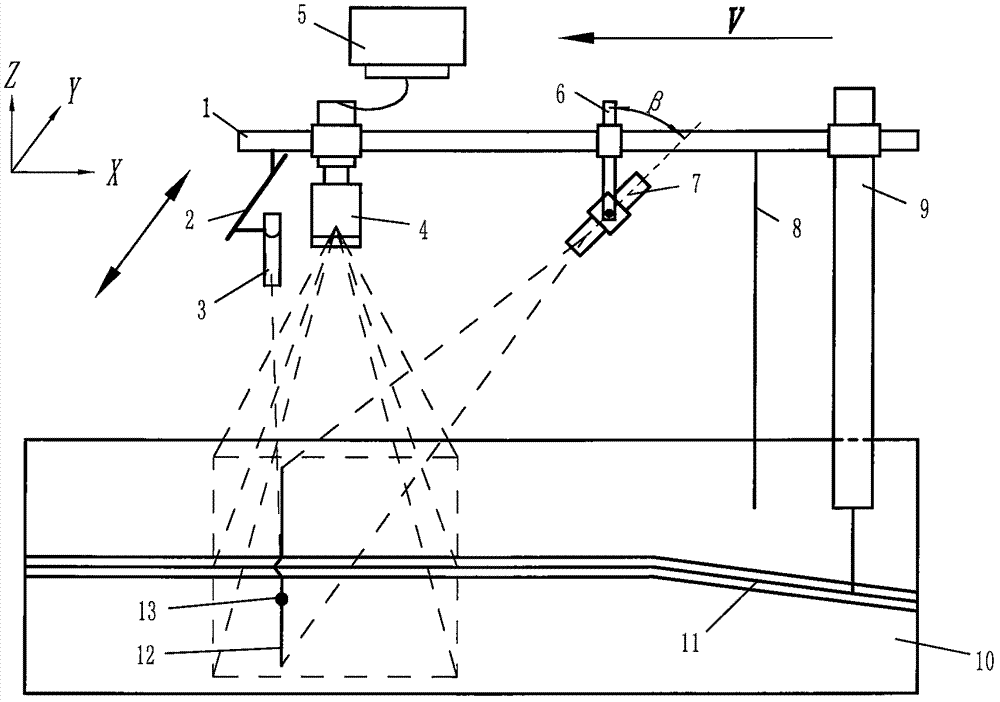

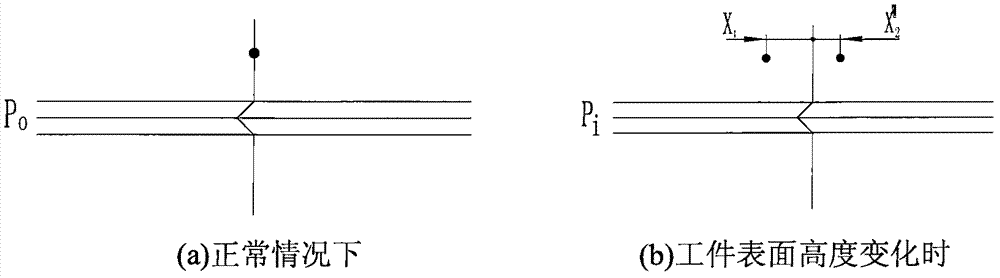

Welding seam tracking system and welding seam tracking method

Active | CN106984926A | High precision | Improve adaptability | Image analysis | Welding/cutting auxiliary devices | Engineering | Weld seam

The invention discloses a welding seam tracking system and a welding seam tracking method. The system comprises a vision sensing device, a bracket, a welding gun, a movement mechanism, a computer and a baffle. The vision sensing device comprises a spot laser, a line laser and an area-array camera. The camera collects images and transmits them to the computer, and the computer commands the movement mechanism to move in three dimensions, so that during welding the height of the welding gun is adjusted automatically by monitoring height changes of the workpiece surface. The system and method are the first to combine spot and line lasers for weld seam height recognition, tracking and welding gun adjustment. The tracking method adapts better to various working conditions and overcomes the limitations of prior-art tracking methods; it responds quickly, identifies height changes of the workpiece surface accurately, and completes weld seam tracking efficiently.

Owner:扬州力德工程技术有限公司

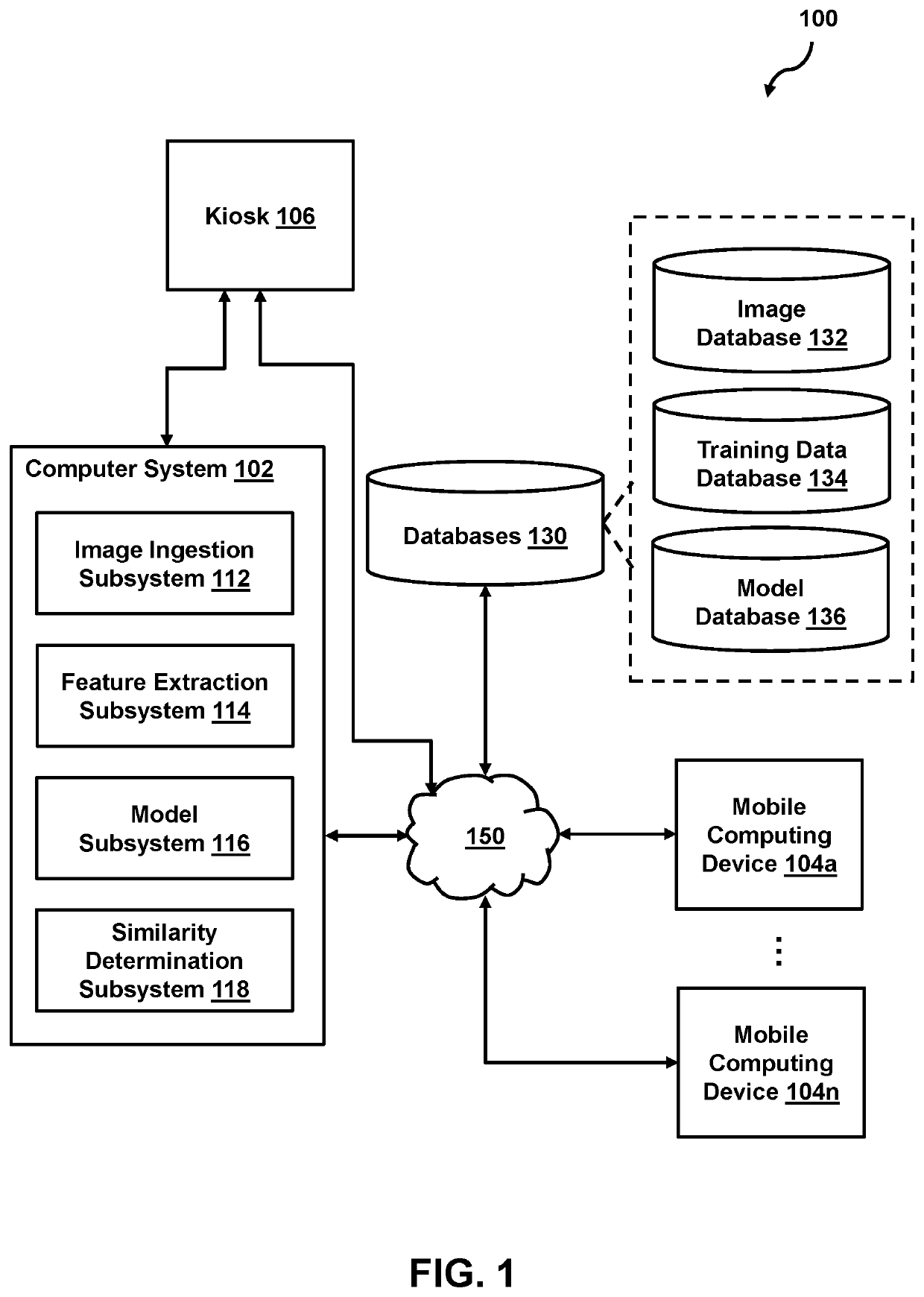

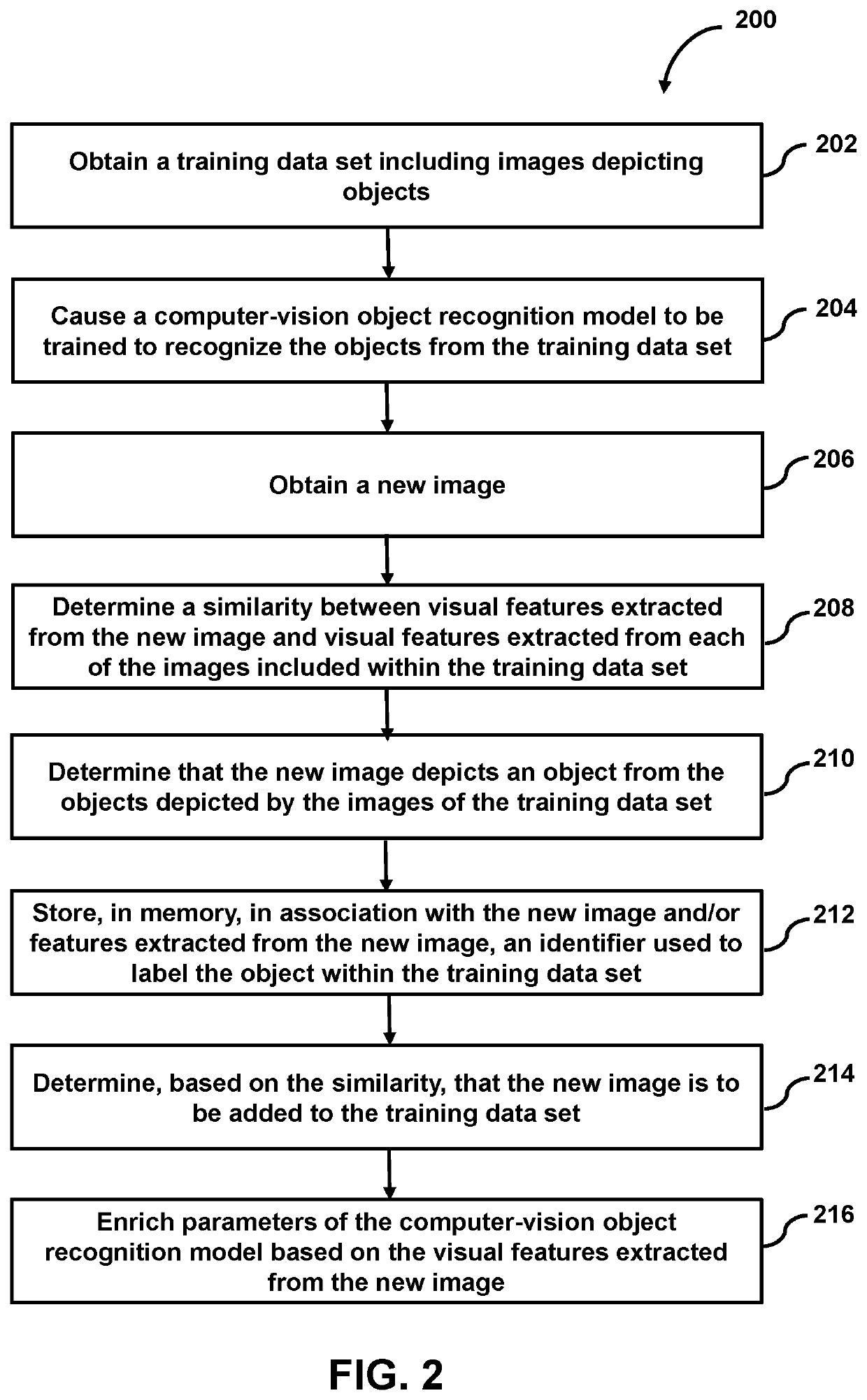

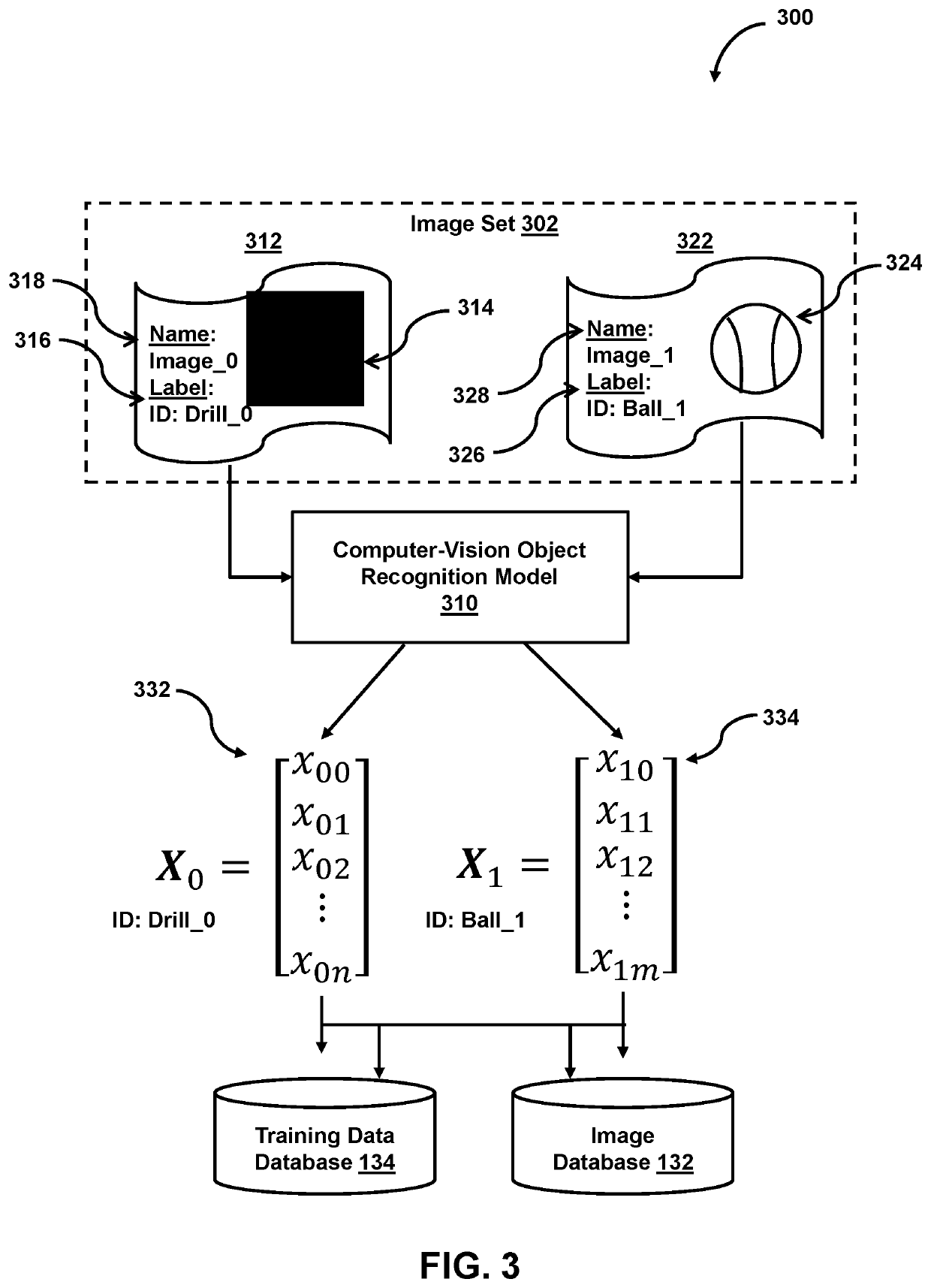

Sparse learning for computer vision

Provided is a process that includes training a computer-vision object recognition model with a training data set including images depicting objects, each image being labeled with an object identifier of the corresponding object; obtaining a new image; determining a similarity between the new image and an image from the training data set with the trained computer-vision object recognition model; and causing the object identifier of the object to be stored in association with the new image, visual features extracted from the new image, or both.

Owner:SLYCE ACQUISITION INC

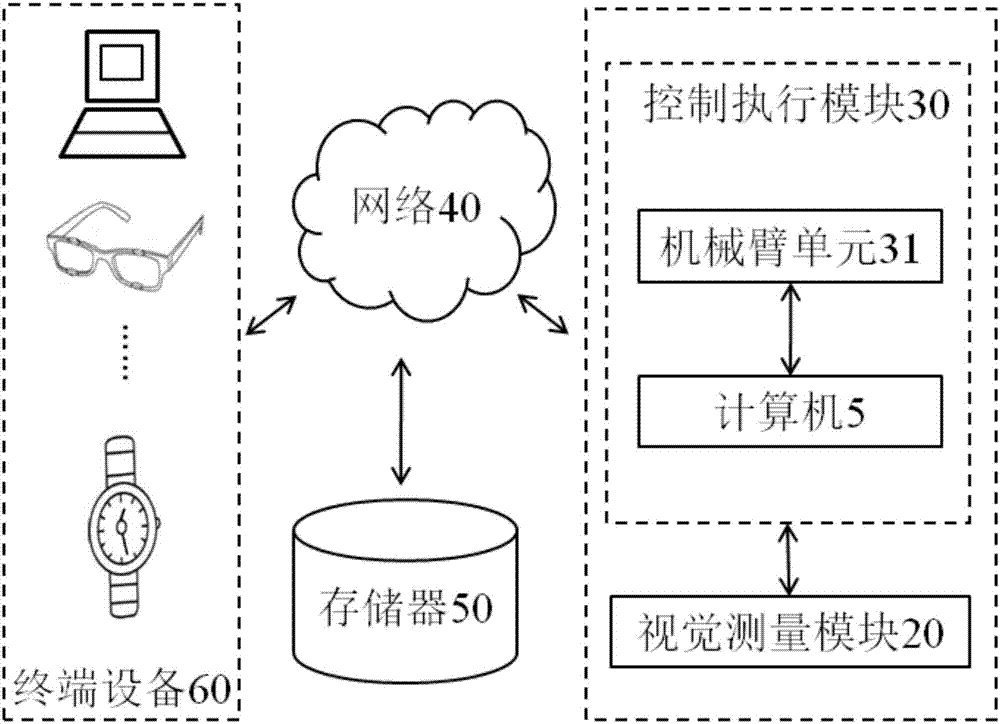

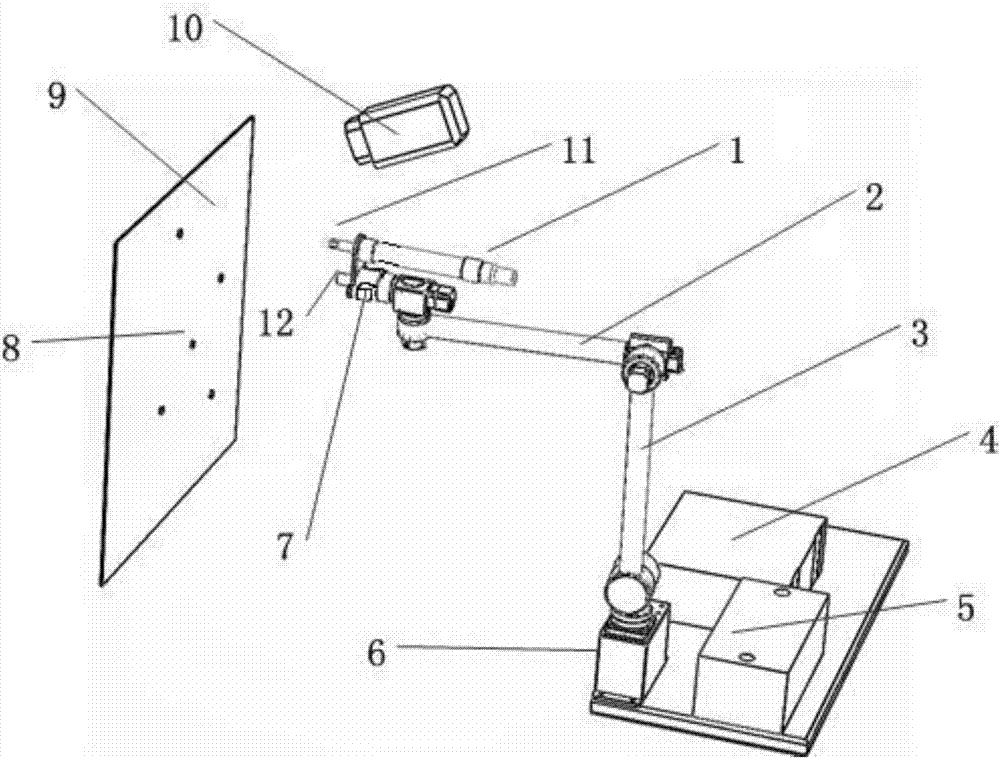

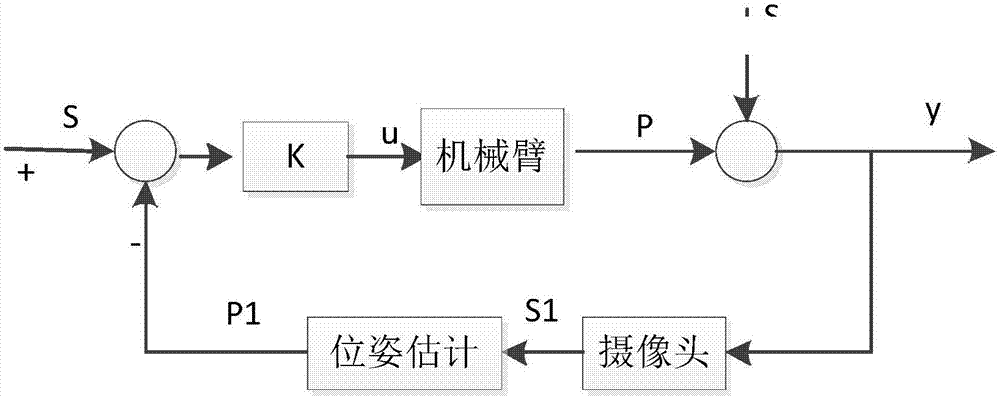

Visual-servo-based screw hole locating and screw locking-removing method

Active | CN107984201A | High precision | Improve efficiency | Image enhancement | Image analysis | Engineering | Working range

The invention relates to a visual-servo-based method for locating screw holes and for locking and removing screws, carried out with a visual-servo-based screw hole locating and screw locking-removing device. A visual measuring module of the device obtains the position and pose information of the target screw hole or screw, and a control execution module of the device controls the screw hole locating and the screw locking and removing. The method greatly improves the locating precision of the screw. Because a mechanical arm is used as the moving carrier, the working range is increased, and screws can also be locked and removed in environments that are difficult for a worker to reach. A point-to-surface method for locating a screw or screw hole is also provided.

Owner:CHINA UNIV OF GEOSCIENCES (WUHAN)

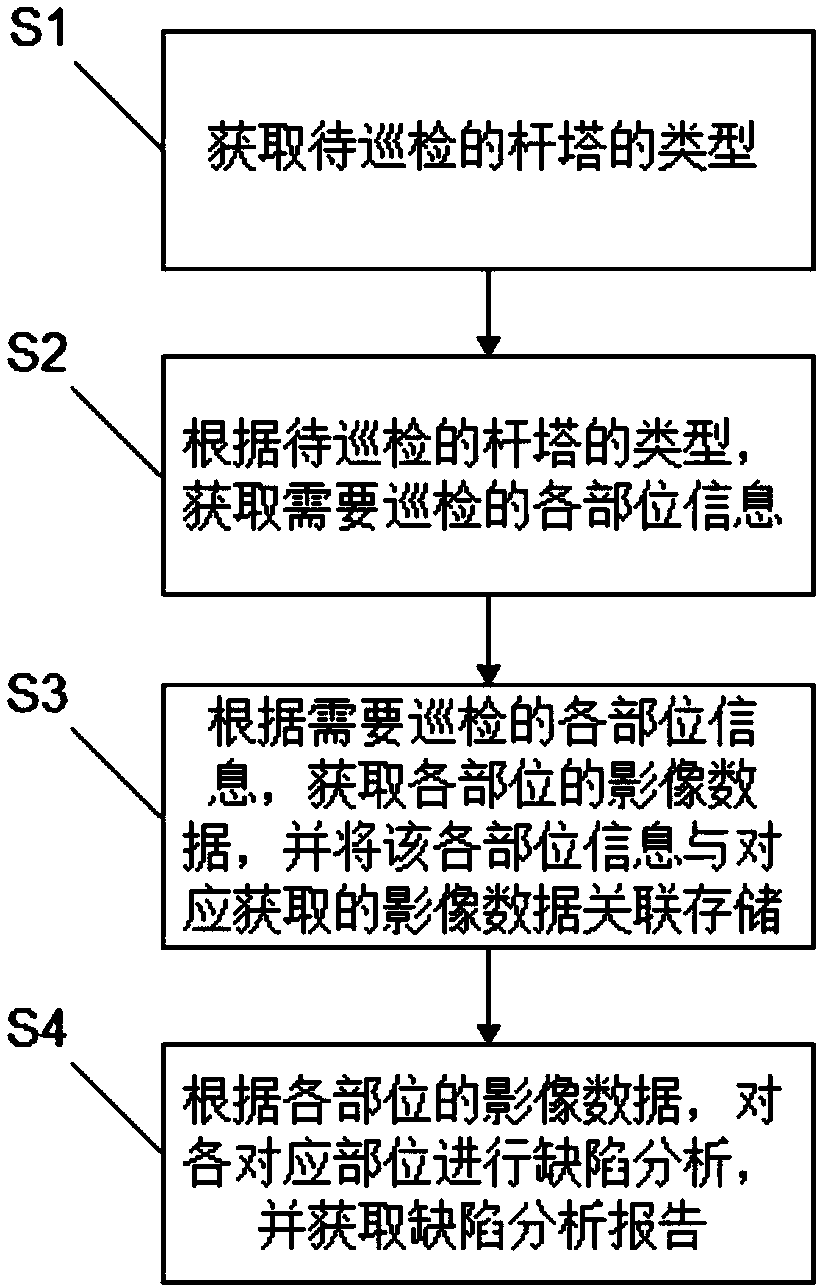

Method and system for fine routing inspection of power transmission line through unmanned plane

Active | CN108365557A | Implement automatic classification | Reduce workload | Image enhancement | Checking time patrols | Pattern recognition | Computer vision

The invention relates to a method and system for fine inspection of a power transmission line by an unmanned aerial vehicle. The method comprises the following steps: obtaining the type of the pole to be inspected; obtaining the information of all parts to be inspected according to the pole type; obtaining the image data of all parts according to that information, and storing the information of each part in association with its image data; and analyzing the defects of each part according to its image data to obtain a defect analysis report. Compared with the prior art, the method automatically obtains the image data of all parts of the pole to be inspected according to the pole type, which effectively solves the problem of disordered or missing image data; by associating the collected image data with the part information, it classifies the collected image data automatically, which greatly reduces the workload and improves work efficiency and classification accuracy.

Owner:GUANGDONG POWER GRID CORP ZHAOQING POWER SUPPLY BUREAU

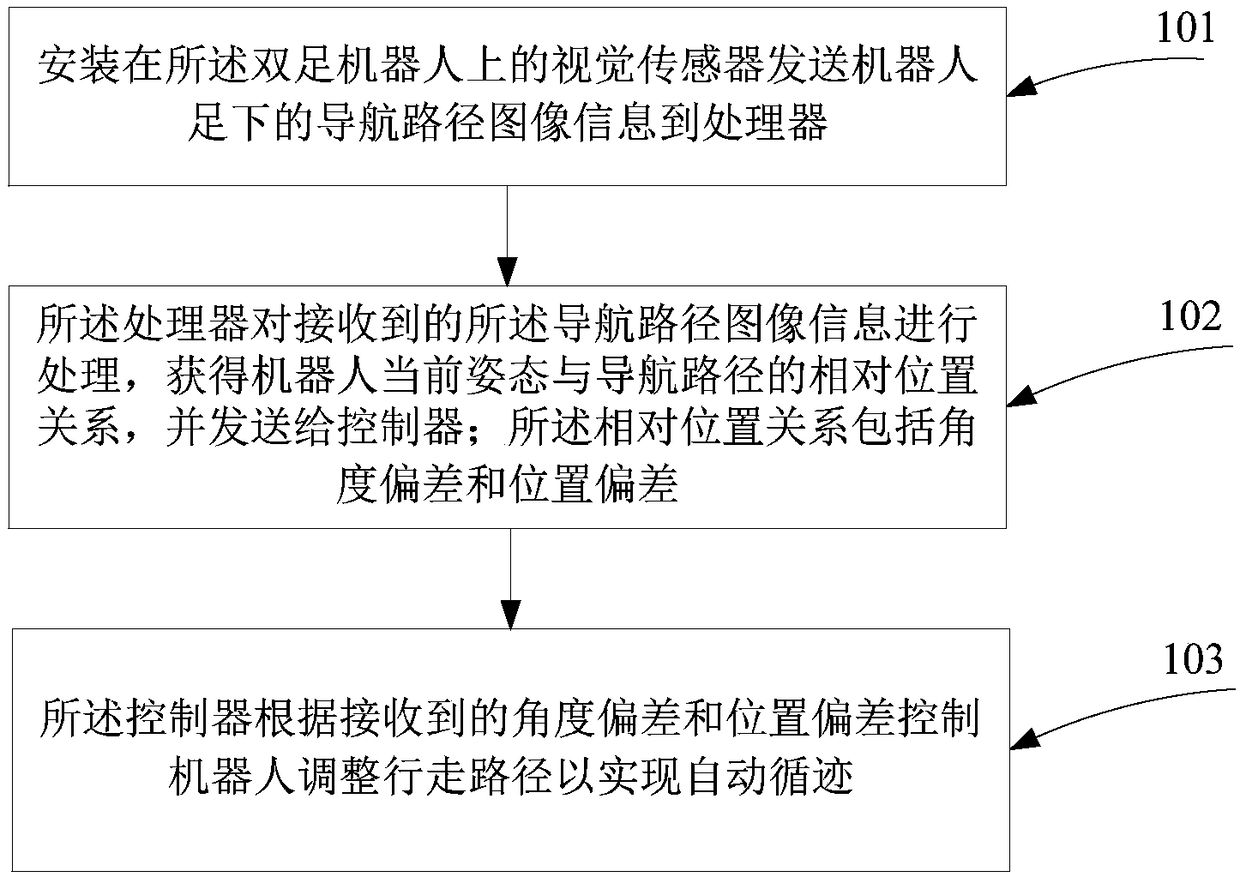

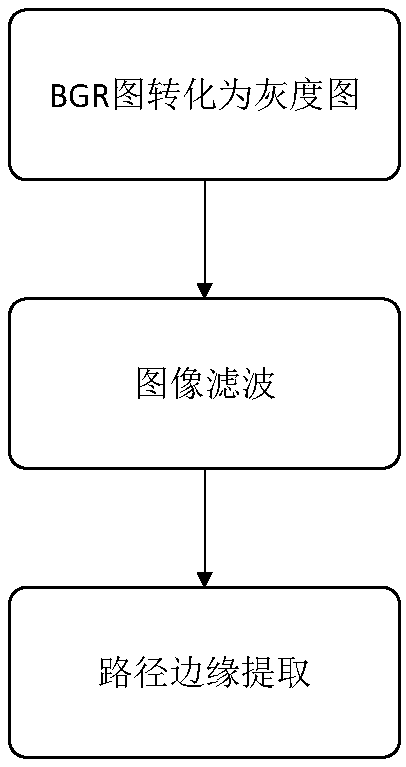

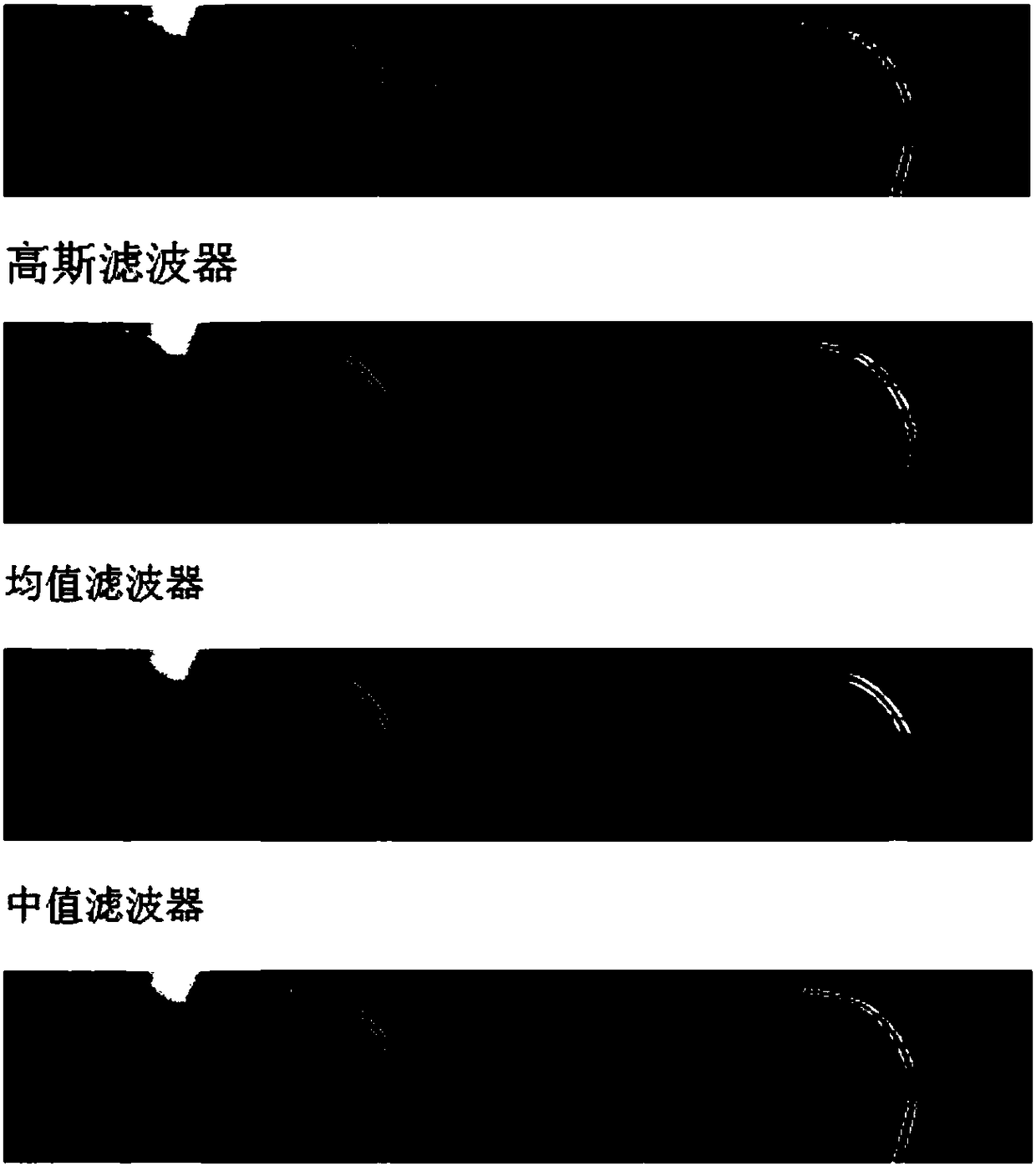

Automatic tracking method of double-foot robot

Inactive | CN108181897A | Improve accuracy | Reduce processing | Image enhancement | Image analysis | Information accuracy | Vision sensor

The invention provides an automatic tracking method of a double-foot robot. The method includes: a vision sensor arranged on the double-foot robot sends image information of the navigation path under the robot's feet to a processor; the processor processes the received image information, obtains the relative position relation between the current attitude of the robot and the navigation path, and sends it to a controller, wherein the relative position relation includes an angle deviation and a position deviation; and the controller controls the robot to adjust its walking path according to the received angle deviation and position deviation, thereby realizing automatic tracking. In this method, the navigation path image acquired by the vision sensor undergoes graying, mean filtering, Canny edge detection and extraction of path edge coordinates to obtain path information, and the accuracy of the path information is improved through interval scanning; for path recognition, a slope matching method is proposed to select the optimal forward path at a crossing. Good timeliness and anti-interference capability are thereby achieved.
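The abstract names an angle deviation and a position deviation but does not define them. One plausible reading, sketched below, fits a straight line through the midpoints of the path edges found on the scanned rows. The angle deviation is then the line's tilt from the image's vertical axis, and the position deviation is its horizontal offset from the image center at the bottom row. The definitions, the sign convention (positive angle means the path veers right ahead of the robot) and the function name are all assumptions.

```python
import math


def path_deviation(left_edges, right_edges, image_width, image_height):
    """Return (angle_deviation_deg, position_deviation_px) of the path.

    left_edges and right_edges are lists of (row, col) pairs, one pair per
    scanned row, listed in the same row order.
    """
    centers = [(row, (cl + cr) / 2.0)
               for (row, cl), (_, cr) in zip(left_edges, right_edges)]
    n = len(centers)
    if n < 2:
        raise ValueError("need at least two scanned rows")
    mean_r = sum(r for r, _ in centers) / n
    mean_c = sum(c for _, c in centers) / n
    var_r = sum((r - mean_r) ** 2 for r, _ in centers)
    if var_r == 0:
        raise ValueError("scanned rows must differ")
    # Least-squares fit of col = slope * row + intercept.
    slope = sum((r - mean_r) * (c - mean_c) for r, c in centers) / var_r
    intercept = mean_c - slope * mean_r
    bottom_row = image_height - 1
    position_dev = slope * bottom_row + intercept - (image_width - 1) / 2.0
    # Rows grow downward, so a path leaning right ahead has a negative slope.
    angle_dev = math.degrees(math.atan(-slope))
    return angle_dev, position_dev
```

Scanning only every few rows, as in the interval scanning the abstract mentions, keeps the fit cheap while still averaging out edge noise.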

Owner:HUAQIAO UNIVERSITY

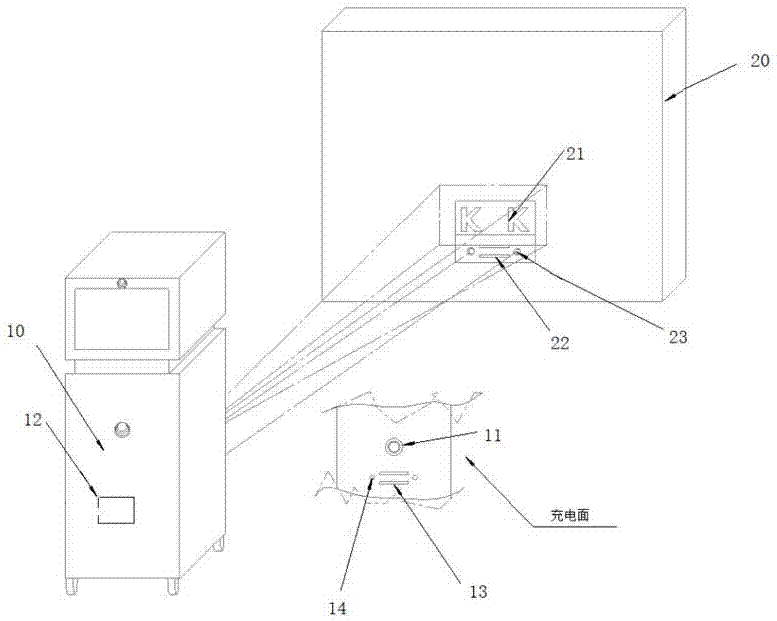

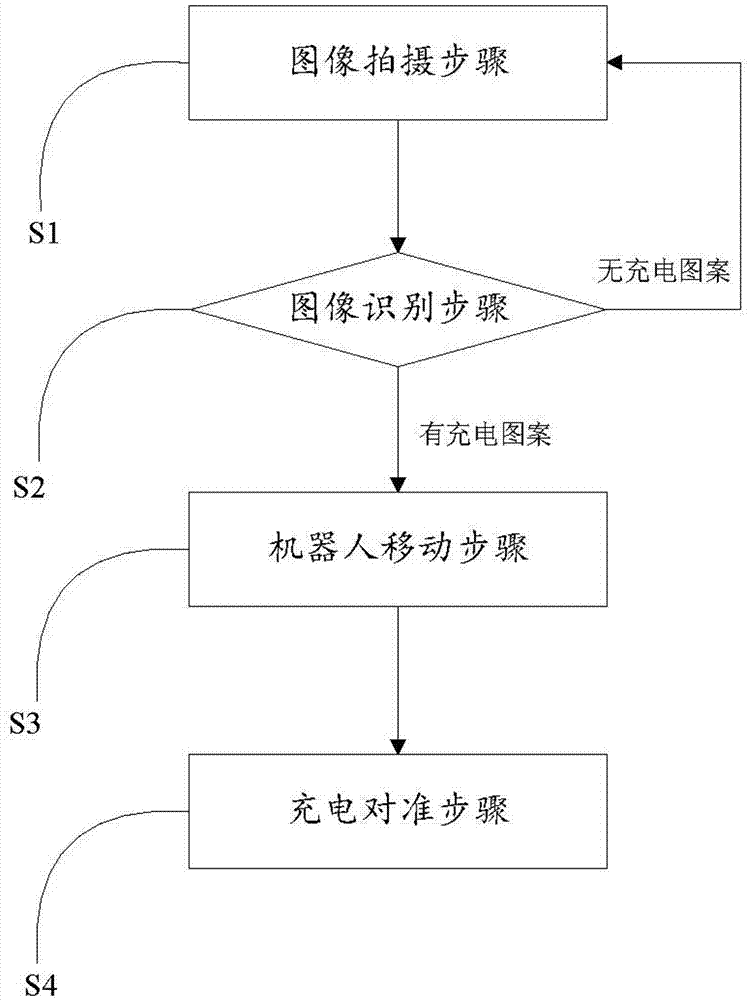

Robot charging docking system and method based on pattern recognition

Inactive | CN107392962A | Precise positioning | Strong ability to draw closer | Image enhancement | Image analysis | Control theory | Robot

The invention provides a robot charging docking system based on pattern recognition. The system comprises a robot and a charging post. The charging post is provided with a charging recognition pattern, and the robot is provided with a camera and a central control device. The central control device processes an image photographed by the camera to recognize the charging recognition pattern, determines the distance and angle between the robot and the charging post from the pattern, and controls the robot to move toward the charging post. The system allows a large robot to charge automatically with a high degree of autonomy and reliability. The invention further provides a robot charging docking method based on pattern recognition.

Owner:深圳市思维树科技有限公司
