Block floating point computations using shared exponents

This block floating point exponent technology, applied in the field of block floating point computing with shared exponents, addresses problems such as reduced numerical precision and its impact on network accuracy.

Embodiment Construction

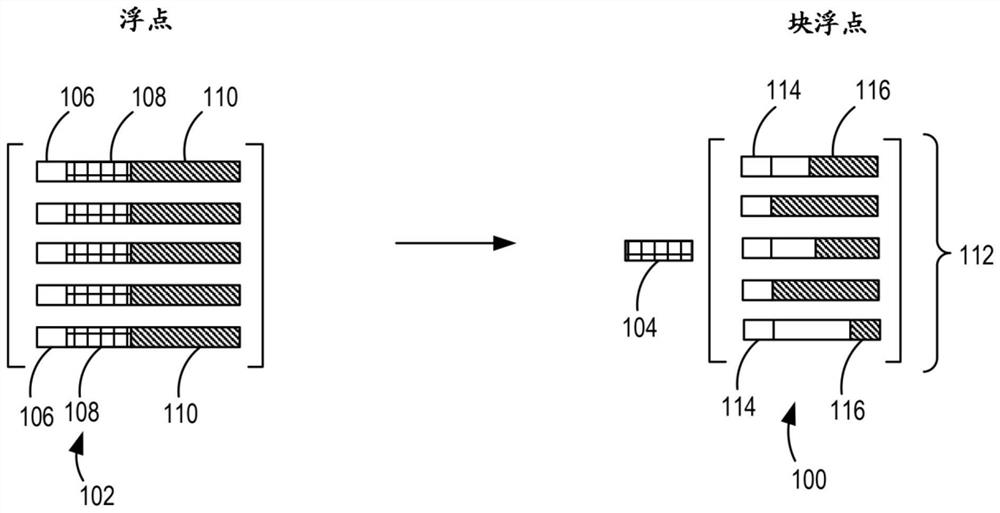

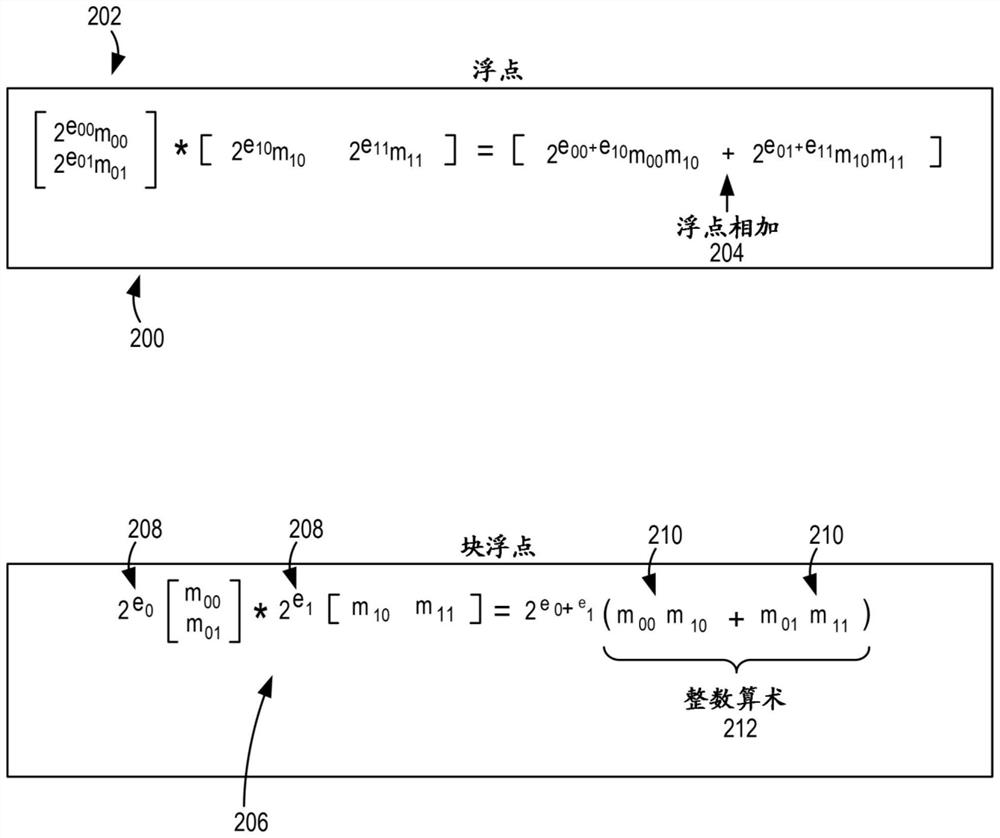

[0016] Computing devices and methods described herein are configured to perform block floating point calculations using multiple levels of shared exponents. For example, subvector components are grouped so that their mantissas share exponents both at a global level and at a finer-grained level, allowing calculations to be performed with integers. In some examples, the finer granularity of the block floating point exponents allows the expressed values to retain much higher effective precision. As a result, the computational burden is reduced while overall accuracy is improved.
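The two-level scheme in [0016] can be sketched in Python as follows. This is a minimal illustration under assumptions of our own, not the disclosure's implementation: the names `TwoLevelBFP`, `quantize`, `dequantize`, and `dot`, along with the 8-bit signed mantissas, 4-element subvectors, and 2-bit per-subvector exponent offsets, are hypothetical choices. Each subvector stores a small offset below the vector's global exponent, and the dot product accumulates integer products, aligning subvectors with bit shifts before a single conversion back to floating point.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class TwoLevelBFP:
    """Vector in block floating point with two levels of shared exponents."""
    global_exp: int             # exponent shared by the whole vector
    offsets: List[int]          # per-subvector exponent offset below global_exp
    mantissas: List[List[int]]  # signed integer mantissas, one list per subvector
    mantissa_bits: int
    max_offset: int


def quantize(values: List[float], subvector_size: int = 4,
             mantissa_bits: int = 8, offset_bits: int = 2) -> TwoLevelBFP:
    """Quantize a non-empty vector. Default sizes are hypothetical, not from the source."""
    qmax = 2 ** (mantissa_bits - 1) - 1
    max_offset = 2 ** offset_bits - 1
    peak = max(abs(v) for v in values)
    # math.frexp returns e with |x| < 2**e; the largest magnitude sets the global exponent.
    global_exp = math.frexp(peak)[1] if peak else 0
    offsets, mantissas = [], []
    for start in range(0, len(values), subvector_size):
        sub = values[start:start + subvector_size]
        sub_peak = max(abs(v) for v in sub)
        sub_exp = math.frexp(sub_peak)[1] if sub_peak else global_exp - max_offset
        # Finer-grained level: a small offset lets low-magnitude subvectors use a finer scale.
        offset = min(global_exp - sub_exp, max_offset)
        scale = 2.0 ** (global_exp - offset - (mantissa_bits - 1))
        mantissas.append([max(-qmax, min(qmax, round(v / scale))) for v in sub])
        offsets.append(offset)
    return TwoLevelBFP(global_exp, offsets, mantissas, mantissa_bits, max_offset)


def dequantize(b: TwoLevelBFP) -> List[float]:
    """Reconstruct floats: mantissa * 2**(global_exp - offset - (mantissa_bits - 1))."""
    out = []
    for offset, sub in zip(b.offsets, b.mantissas):
        exp = b.global_exp - offset - (b.mantissa_bits - 1)
        out.extend(math.ldexp(m, exp) for m in sub)
    return out


def dot(a: TwoLevelBFP, b: TwoLevelBFP) -> float:
    """Dot product with integer multiply-accumulate; one float conversion at the end.

    Assumes both vectors were quantized with the same subvector layout.
    """
    max_shift = a.max_offset + b.max_offset
    acc = 0
    for oa, ob, qa, qb in zip(a.offsets, b.offsets, a.mantissas, b.mantissas):
        partial = sum(x * y for x, y in zip(qa, qb))  # integer products only
        acc += partial << (max_shift - (oa + ob))     # align to a common exponent
    exp = (a.global_exp + b.global_exp
           - (a.mantissa_bits - 1) - (b.mantissa_bits - 1) - max_shift)
    return math.ldexp(acc, exp)


if __name__ == "__main__":
    x = [0.9, -0.5, 0.25, 0.1, 0.01, -0.02, 0.003, 0.004]
    w = [0.3, 0.7, -0.2, 0.05, 0.5, 0.4, -0.6, 0.1]
    print(dot(quantize(x), quantize(w)), sum(p * q for p, q in zip(x, w)))
```

Setting `offset_bits=0` collapses the sketch to a single shared exponent per vector, which makes it easy to see how much precision the finer-grained level recovers for the small-magnitude second subvector of `x`.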

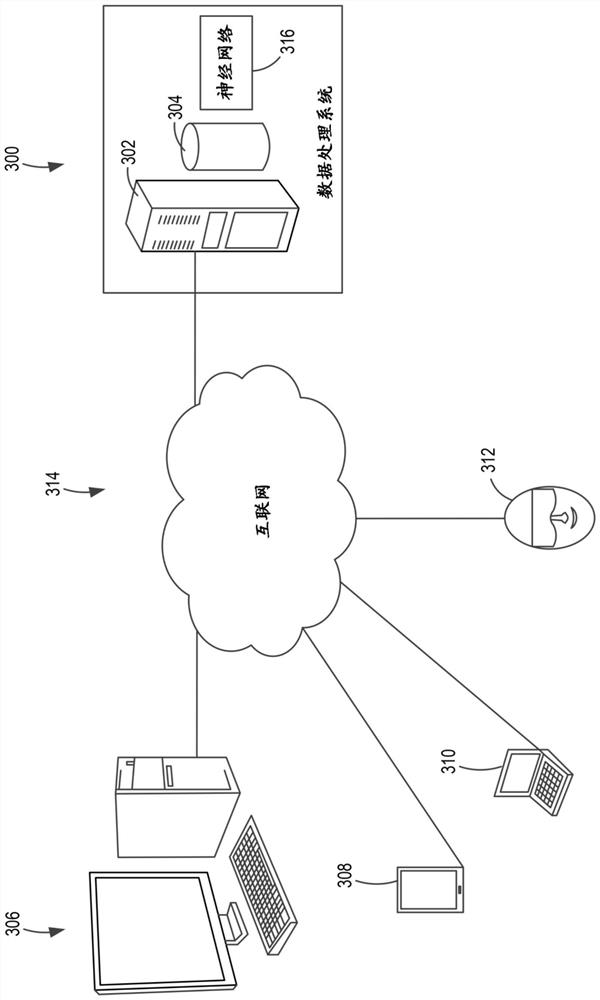

[0017] In various examples of the present disclosure, a neural network such as a deep neural network (DNN) can be trained and deployed using block floating point or another numerical format that is less precise than the single-precision floating point format (e.g., 32-bit floating point numbers), with minimal or reduced loss of accuracy. On dedicated hardware such as Field Program...
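One common way to gauge the accuracy impact of a reduced-precision format before deployment (assumed here; the disclosure does not say it uses this approach) is to simulate block floating point by quantizing tensors and dequantizing them back. The sketch below does this for a single hypothetical 32x64 linear layer with random weights, using one shared exponent per 16-element block, and measures the relative error of the layer output against Python's double-precision floats, which stand in for the higher-precision baseline. The helper names `bfp_round_trip`, `matvec`, `relative_error`, and `layer_error` and the block size are illustrative only.

```python
import math
import random
from typing import List


def bfp_round_trip(values: List[float], block_size: int = 16,
                   mantissa_bits: int = 8) -> List[float]:
    """Quantize to block floating point (one shared exponent per block), then dequantize."""
    qmax = 2 ** (mantissa_bits - 1) - 1
    out = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        peak = max(abs(v) for v in block)
        shared_exp = math.frexp(peak)[1] if peak else 0  # |v| < 2**shared_exp
        scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
        out.extend(max(-qmax, min(qmax, round(v / scale))) * scale for v in block)
    return out


def matvec(weights: List[List[float]], x: List[float]) -> List[float]:
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relative_error(approx: List[float], exact: List[float]) -> float:
    """L2 norm of the error divided by the L2 norm of the reference output."""
    num = math.sqrt(sum((a - e) ** 2 for a, e in zip(approx, exact)))
    den = math.sqrt(sum(e * e for e in exact))
    return num / den


def layer_error(weights: List[List[float]], x: List[float], mantissa_bits: int) -> float:
    """Output error of a linear layer when weights and activations are both BFP-quantized."""
    qw = [bfp_round_trip(row, mantissa_bits=mantissa_bits) for row in weights]
    qx = bfp_round_trip(x, mantissa_bits=mantissa_bits)
    return relative_error(matvec(qw, qx), matvec(weights, x))


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [[rng.uniform(-1, 1) for _ in range(64)] for _ in range(32)]
    x = [rng.uniform(-1, 1) for _ in range(64)]
    for bits in (8, 4):
        print(f"{bits}-bit mantissas: relative error {layer_error(weights, x, bits):.4f}")
```

Comparing the two mantissa widths shows how the output error grows as the shared-exponent format becomes less precise; a real evaluation would measure task accuracy over a full network rather than one layer.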
