Human body pose estimation method for mobile devices based on three-dimensional skeleton extraction

This mobile-device, three-dimensional skeleton technology, applied in computing, computer parts, image analysis, and related fields, addresses problems such as missing dimension, large calculation error, and poor portability.

Embodiment Construction

[0066] The following embodiments serve only to illustrate the technical solutions of the present invention more clearly and are not intended to limit its scope of protection.

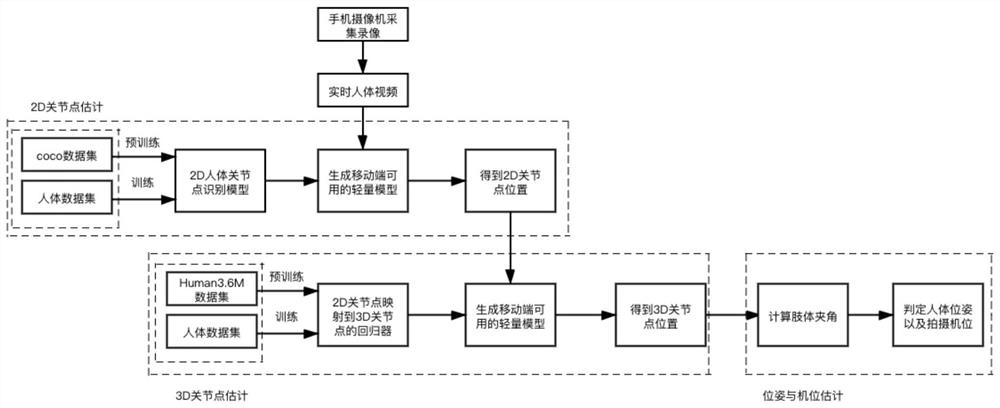

[0067] The method for estimating human body pose on a mobile device based on three-dimensional skeleton extraction includes the following steps:

[0068] Input data collection: use a mobile device to capture real-time video of the person's body;

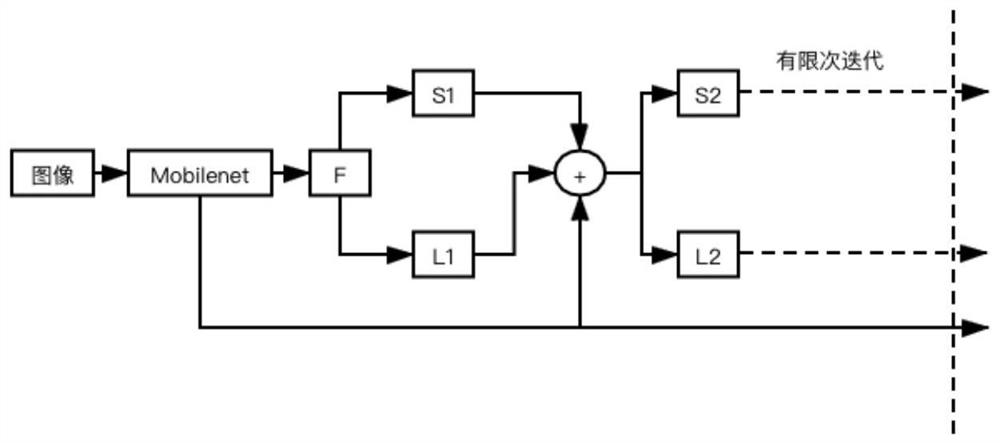

[0069] 2D joint point acquisition: pass the captured human body video to the background service and feed it into the lightweight human skeleton recognition model to obtain the 2D human body joint points;

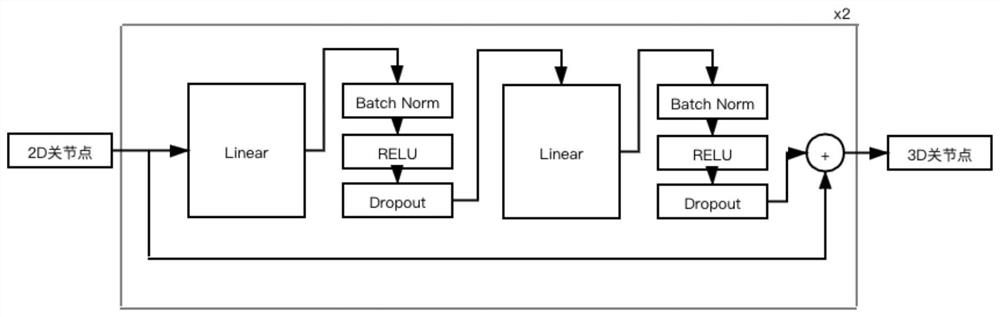

[0070] 3D joint point estimation: map the obtained 2D human body joint points through the neural network regressor to obtain the corresponding 3D human body joint points in three-dimensional space;

[0071] Human skeleton acquisition: place the obtained 3D human body joint points back at their corresponding positions in the human body video frame, and connect...
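The 2D-to-3D mapping and skeleton connection steps above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the patented implementation: the five-joint layout, the `BONES` list, the one-hidden-layer network, and its random weights are all hypothetical stand-ins. A real system would use a trained regressor and take its 2D joints from the lightweight skeleton recognition model of paragraph [0069].

```python
import random
from typing import List, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]

# Hypothetical joint layout and bone list; the source does not name the joints.
JOINTS = ["head", "neck", "hip", "left_hand", "right_hand"]
BONES = [(0, 1), (1, 2), (1, 3), (1, 4)]  # index pairs into JOINTS


def make_regressor(n_joints: int, hidden: int = 16, seed: int = 0):
    """Create random weights for a one-hidden-layer MLP.

    Assumption: these stand in for the weights of a trained regressor.
    """
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.1, 0.1) for _ in range(2 * n_joints)]
          for _ in range(hidden)]
    w2 = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)]
          for _ in range(3 * n_joints)]
    return w1, w2


def lift_to_3d(joints_2d: List[Point2D], weights) -> List[Point3D]:
    """Map flattened 2D joints to 3D joints through the MLP (ReLU hidden layer)."""
    w1, w2 = weights
    x = [coord for point in joints_2d for coord in point]
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x))) for row in w1]
    out = [sum(w * v for w, v in zip(row, hidden)) for row in w2]
    # Regroup the flat output into (x, y, z) triples, one per joint.
    return [(out[i], out[i + 1], out[i + 2]) for i in range(0, len(out), 3)]


def skeleton_segments(joints_3d: List[Point3D], bones) -> List[Tuple[Point3D, Point3D]]:
    """Connect joints pairwise according to the bone list."""
    return [(joints_3d[a], joints_3d[b]) for a, b in bones]


# Example 2D joints in normalized image coordinates (made-up values).
joints_2d = [(0.5, 0.1), (0.5, 0.25), (0.5, 0.6), (0.3, 0.4), (0.7, 0.4)]
joints_3d = lift_to_3d(joints_2d, make_regressor(len(JOINTS)))
segments = skeleton_segments(joints_3d, BONES)
```

Each entry of `segments` is a pair of 3D endpoints that could be drawn over the corresponding video frame. With untrained weights the coordinates are meaningless; only the data flow is illustrated.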
