Advanced residual prediction method based on reconfigurable array processor

An advanced residual prediction and array processor technology, applied in the fields of image communication, digital video signal modification, and electrical components. It addresses the problems of long coding time, increased complexity of the video image coding process, and high computational complexity, and achieves short coding time, real-time coding capability, and low computational complexity.

Examples

Embodiment 1

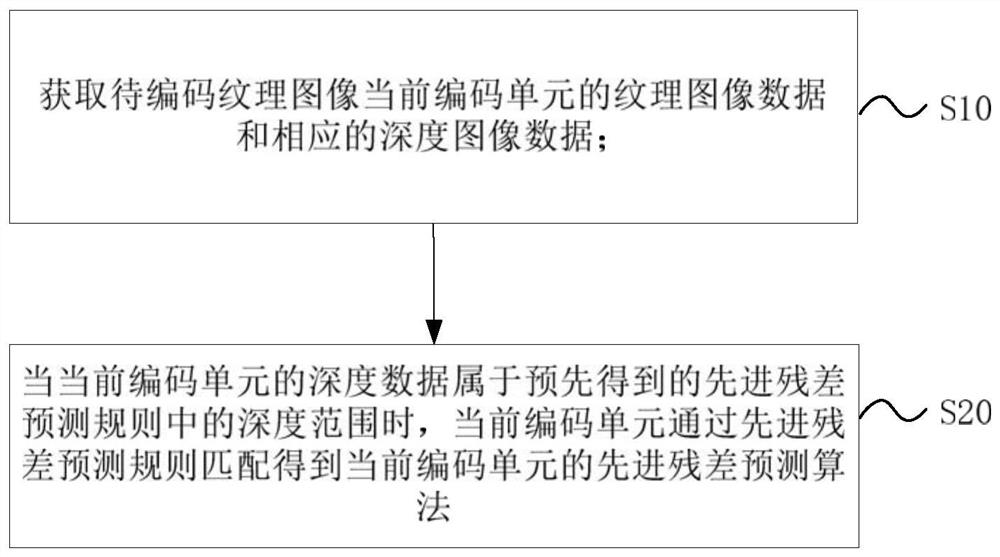

[0077] Figure 3 shows a schematic flowchart of an advanced residual prediction method based on a reconfigurable array processor in one embodiment of the present application. As shown in the figure, the method of this embodiment includes:

[0078] S10. For predictive encoding of non-base-view texture images, acquire texture image data and corresponding depth image data of the current coding unit of the texture image to be encoded;

[0079] S20. Perform predictive coding on the texture image data of the current coding unit based on the advanced residual prediction;

[0080] Here, when the depth data of the current coding unit falls within a depth range in the pre-obtained advanced residual prediction rule, the advanced residual prediction algorithm for the current coding unit is obtained by matching against that rule, and the texture of the current codin...
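The rule matching described in [0080] can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the rule table layout, the function name `match_arp_algorithm`, the use of the mean depth as the coding unit's representative depth, and the example ranges are all assumptions introduced here. The source does not state which algorithm belongs to which depth range, nor what happens when no range matches.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical rule entry: (lowest depth, highest depth, algorithm name).
Rule = Tuple[int, int, str]


def match_arp_algorithm(cu_depth: Sequence[int], rules: List[Rule]) -> Optional[str]:
    """Return the algorithm whose depth range contains the CU's representative depth."""
    if not cu_depth:
        raise ValueError("coding unit has no depth samples")
    # Assumption: the CU is summarized by its mean depth (integer division).
    representative = sum(cu_depth) // len(cu_depth)
    for low, high, algorithm in rules:
        if low <= representative <= high:
            return algorithm
    # No rule matched; the fallback behavior is not specified in the source.
    return None


# Illustrative ranges only; real ranges would come from the pre-obtained rule.
example_rules: List[Rule] = [
    (0, 99, "inter_view_arp"),
    (100, 255, "temporal_arp"),
]
```

With these illustrative ranges, a coding unit whose depth samples average 125 would be matched to `"temporal_arp"`, and the encoder would then run only that algorithm instead of evaluating both.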

Embodiment 2

[0129] Another embodiment of the present application proposes an advanced residual prediction method based on a reconfigurable array processor, including:

[0130] S100. Determine a depth threshold.

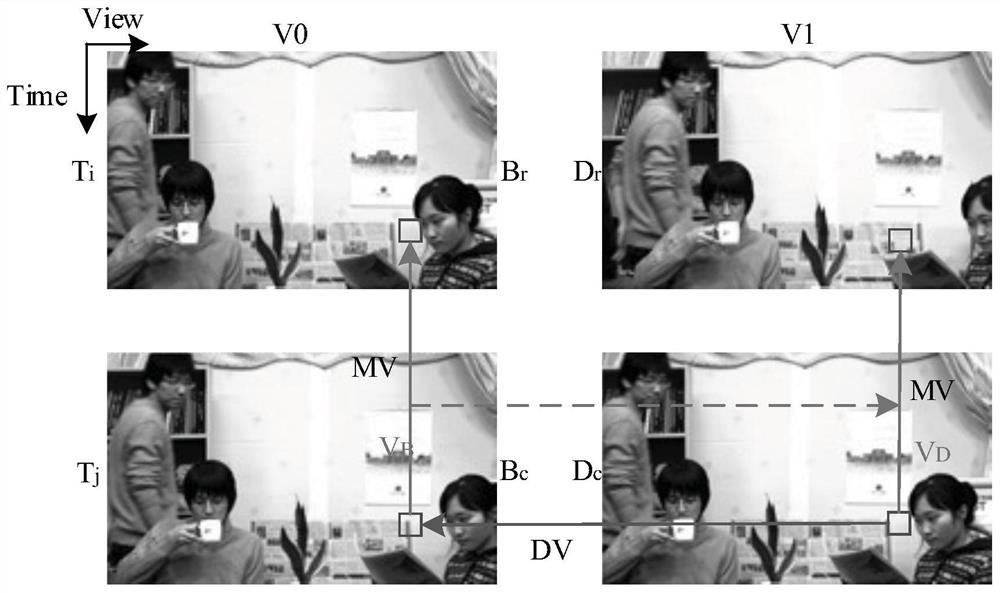

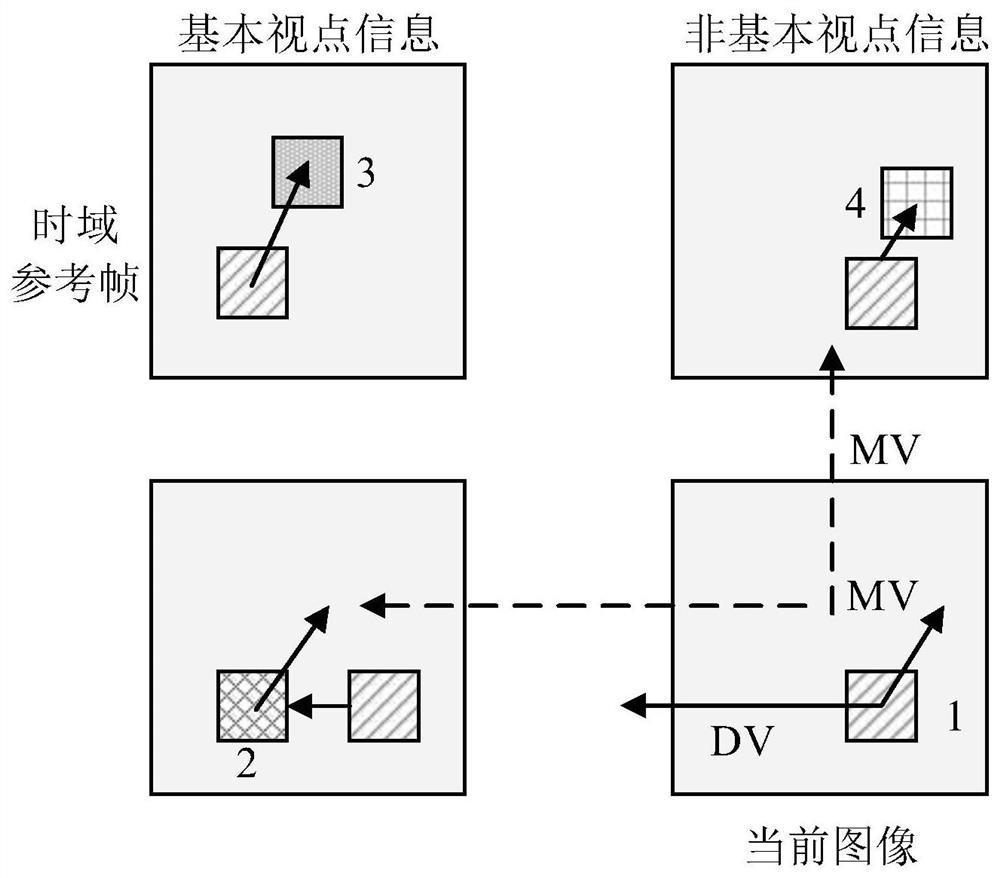

[0131] The depth information of an object in 3D video represents the relative distance from the camera to the object. For depth values between 0 and 255, let Zfar and Znear be 0 and 255, respectively. In HTM-16.1, first assume Z0 = Znear and Z1 = Zfar, then collect statistics on whether the coding unit (CU) selects the temporal advanced residual prediction (ARP) algorithm or the inter-view ARP algorithm in the different region modes. If, under the currently set depth thresholds, the selection counts of the temporal and inter-view ARP algorithms are both 0, or one of them is 0, the depth thresholds Z0 and Z1 decrease and inc...
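The counting step in [0131] might look like the sketch below. It only tallies which ARP algorithm each CU selected per depth region and checks for a zero count; it does not implement the threshold update, because the source text is cut off at that point. The three-region partition (`"below"`, `"between"`, `"above"`), the function names, and the input format of `(depth, algorithm)` pairs are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

TEMPORAL = "temporal_arp"
INTER_VIEW = "inter_view_arp"


def region_of(depth: int, z0: int, z1: int) -> str:
    """Hypothetical partition of the depth axis by the two thresholds."""
    low, high = min(z0, z1), max(z0, z1)
    if depth < low:
        return "below"
    if depth > high:
        return "above"
    return "between"


def count_selections(
    samples: Iterable[Tuple[int, str]], z0: int, z1: int
) -> Dict[str, Counter]:
    """Tally, per region, how often each ARP algorithm was selected by a CU."""
    counts = {"below": Counter(), "between": Counter(), "above": Counter()}
    for depth, algorithm in samples:
        counts[region_of(depth, z0, z1)][algorithm] += 1
    return counts


def has_zero_count(counter: Counter) -> bool:
    """True if either algorithm (or both) was never selected in a region."""
    return counter[TEMPORAL] == 0 or counter[INTER_VIEW] == 0
```

With the initial setting Z0 = 255 and Z1 = 0, every sample lands in the `"between"` region, so the tallies there equal the overall selection counts. Which region's counts drive the adjustment is not stated in the available text.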

Embodiment 3

[0155] Running the temporal advanced residual prediction algorithm and the inter-view advanced residual prediction algorithm in parallel uses two clusters of hardware storage. Although this parallel implementation greatly reduces the number of calculation cycles, it increases circuit area and power consumption. To reduce unnecessary hardware overhead, this embodiment combines the depth threshold with the reconfigurable function of the array processor's PEs: by issuing configuration instructions, it switches between temporal ARP and inter-view ARP, implementing advanced residual prediction with a selectable temporal or inter-view function on a single PE cluster.
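The function switching in [0155] can be modeled in software as a single unit whose behavior is selected by a configuration value. The sketch below is a behavioral illustration only, not a description of the actual PE hardware or instruction set. The class `PECluster`, the mode constants, and the operand names are hypothetical. The two formulas follow the usual description of ARP in 3D-HEVC and are an assumption here, since the available source text does not give them.

```python
from typing import List, Sequence

TEMPORAL_ARP = 0    # hypothetical configuration code
INTER_VIEW_ARP = 1  # hypothetical configuration code


class PECluster:
    """Behavioral model of one PE cluster with a selectable ARP function."""

    def __init__(self) -> None:
        self.mode = TEMPORAL_ARP

    def configure(self, mode: int) -> None:
        """Stand-in for issuing a configuration instruction to the cluster."""
        if mode not in (TEMPORAL_ARP, INTER_VIEW_ARP):
            raise ValueError(f"unknown mode: {mode}")
        self.mode = mode

    def predict(
        self,
        curr_ref: Sequence[int],  # temporal reference block, current view
        base: Sequence[int],      # corresponding block, reference view
        base_ref: Sequence[int],  # temporal reference of `base`
        weight: float = 1.0,
    ) -> List[float]:
        """Compute the ARP predictor; both modes share one datapath shape."""
        if self.mode == TEMPORAL_ARP:
            # Assumed form: temporal reference plus weighted inter-view residual.
            return [c + weight * (b - r) for c, b, r in zip(curr_ref, base, base_ref)]
        # Assumed form: inter-view reference plus weighted temporal residual.
        return [b + weight * (c - r) for c, b, r in zip(curr_ref, base, base_ref)]
```

Because both modes reduce to "one reference plus a weighted difference of two others", the same arithmetic resources can serve either function, which is consistent with the area and power saving the paragraph describes. A caller would set the mode once per coding unit, for example from the depth-threshold decision, and then call `predict`.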

[0156] Figure 6 F...
