Memory management method and device for neural network inference

A neural network memory management technology, applicable to fields such as reasoning methods, biological neural network models, and multi-programming devices.


Embodiment 1

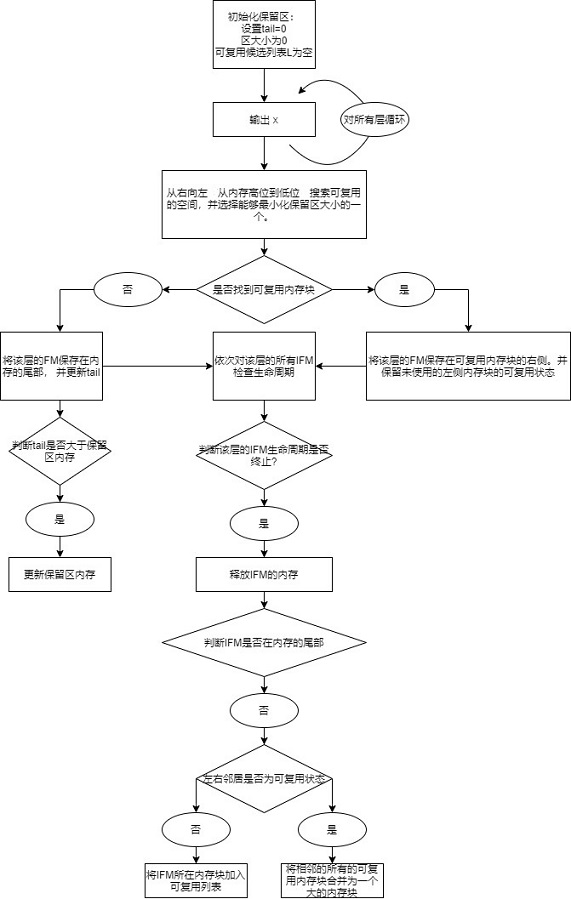

[0087] As described below, an embodiment of the present invention provides a memory management method for neural network inference.

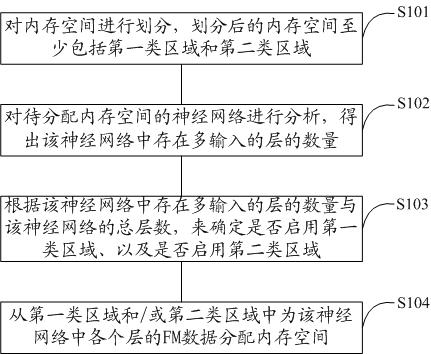

[0088] Referring to the flow chart of the memory management method for neural network inference shown in Figure 1, the method is described in detail below through specific steps:

[0089] S101. Divide a memory space, where the divided memory space includes at least a first-type area and a second-type area.

[0090] The first-type area can only be used to store feature map (FM) data with a life cycle of 1, and the second-type area can be used to store FM data with any life cycle.

[0091] In other embodiments, the divided memory space includes at least one of the first type of area and the second type of area.
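The split in S101 can be illustrated with a minimal Python sketch. It is not the patent's implementation. It assumes that the life cycle of a layer's output FM is the distance between the producing layer and its last consumer, which matches the description that a life-cycle-1 output is used only by the next layer. The `Layer` class, the function names, and the example network are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Layer:
    """Hypothetical layer record: a name plus indices of the layers it reads from."""
    name: str
    inputs: List[int] = field(default_factory=list)


def fm_life_cycles(layers: List[Layer]) -> List[int]:
    """Assumed definition: life cycle = index of last consumer - index of producer.

    An output that is never consumed (e.g. the final layer) gets 0.
    """
    last_use = list(range(len(layers)))
    for idx, layer in enumerate(layers):
        for src in layer.inputs:
            last_use[src] = max(last_use[src], idx)
    return [last_use[i] - i for i in range(len(layers))]


def assign_areas(layers: List[Layer]) -> Dict[str, str]:
    """Place life-cycle-1 outputs in the first-type area, everything else in the second."""
    return {
        layer.name: "first" if life == 1 else "second"
        for layer, life in zip(layers, fm_life_cycles(layers))
    }


# Hypothetical network: conv1's output is read by both conv2 and add.
net = [
    Layer("conv0"),
    Layer("conv1", [0]),
    Layer("conv2", [1]),
    Layer("add", [1, 2]),
]
areas = assign_areas(net)
```

In this example, `conv1` feeds a layer two steps away, so its output cannot go in the first-type area under the stated assumption and falls back to the second-type area.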

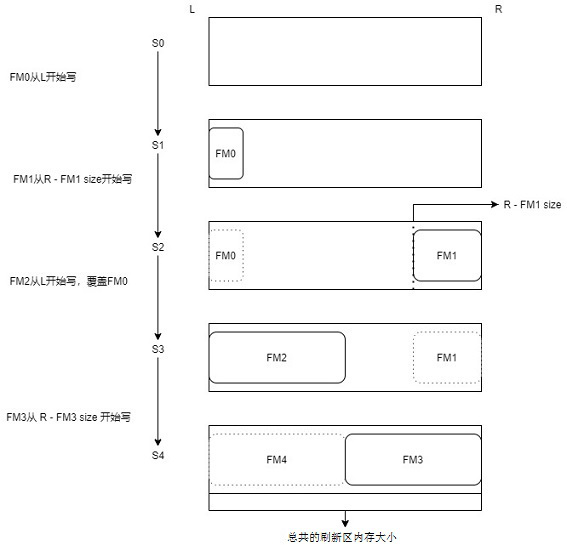

[0092] The writing method of the first type of area is:

[0093] This memory area is used to store FM data with a life cycle of only 1; that is, the output FM (OFM) data of the nth layer of the neural network will only be used by its next layer, so the network will start to cal...

Embodiment 2

[0170] As described below, an embodiment of the present invention provides a memory management device for neural network inference.

[0171] The memory management device for neural network inference comprises:

[0172] A processor adapted to load and execute instructions of a software program;

[0173] A memory adapted to store a software program comprising instructions for performing the following steps:

[0174] Divide the memory space, where the divided memory space includes at least a first-type area and a second-type area; the first-type area is used only to store FM data with a life cycle of 1, and the second-type area can be used to store FM data with any life cycle;

[0175] Analyze the neural network for which memory space is to be allocated, to obtain the number of layers with multiple inputs in the neural network;

[0176] Determine whether to enable the first type of area and whether to enable the second type of area according to the number of layer...
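The analysis step in [0175] can be sketched as a simple count over a network description. This is a minimal illustration only: the graph representation (a dictionary mapping each layer name to the names of its input layers) and the function name are assumptions, and the rule that turns this count into an enable decision is not reproduced here because the source text is truncated at that point.

```python
from typing import Dict, List


def count_multi_input_layers(graph: Dict[str, List[str]]) -> int:
    """Count the layers that read more than one input feature map."""
    return sum(1 for inputs in graph.values() if len(inputs) > 1)


# Hypothetical network description: layer name -> names of its input layers.
example_graph = {
    "conv0": [],
    "conv1": ["conv0"],
    "conv2": ["conv1"],
    "add": ["conv1", "conv2"],
    "concat": ["conv0", "add"],
}
multi_input_count = count_multi_input_layers(example_graph)
```

Here `add` and `concat` are the only layers with more than one input, so they are the ones counted.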
