Adaptive multi-sensor data fusion method and system based on mutual information

A multi-sensor data fusion technology, applied in image data processing, instruments, biological neural network models, etc., which addresses problems such as the lack of quantitative tools, insufficient adaptability to the data, and difficulty in adjusting to different sensors.

Examples

Embodiment 1

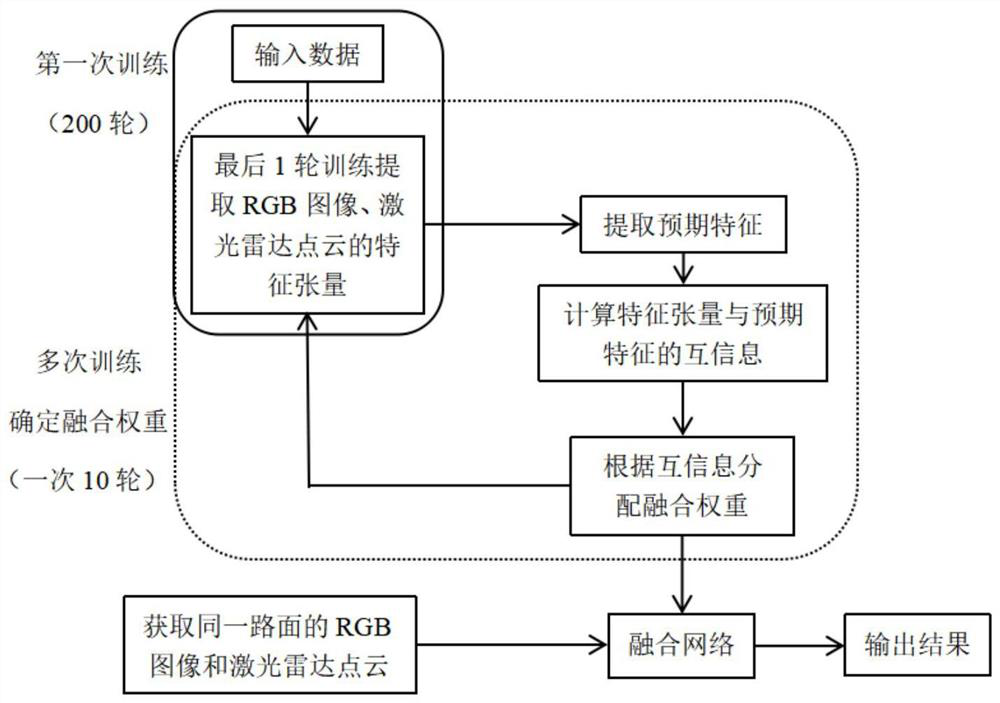

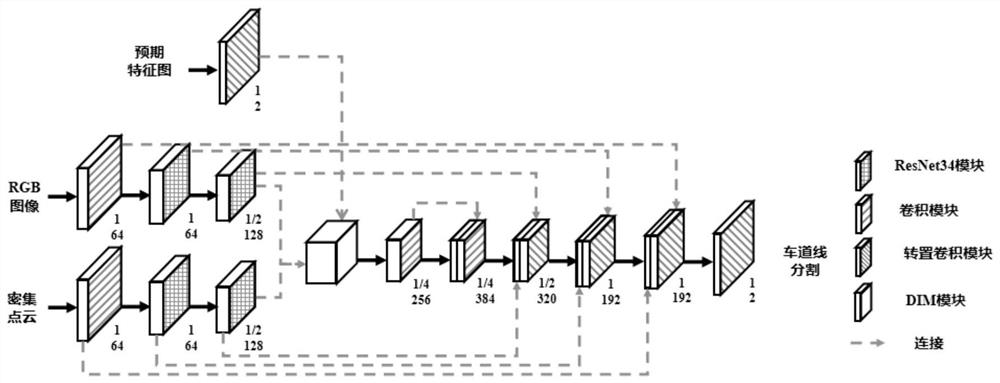

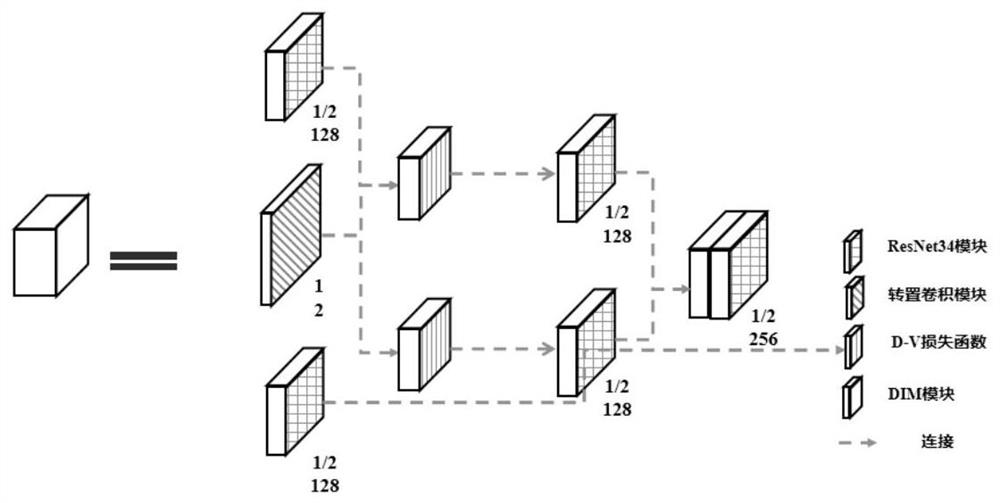

[0100] As shown in Figure 1, Embodiment 1 of the present invention provides an adaptive multi-sensor data fusion method based on mutual information, taking RGB images and lidar point cloud data as an example. It specifically includes the following steps:

[0101] Step 1) Obtain an RGB image and a lidar point cloud of the same road surface; this specifically includes:

[0102] Step 101) Obtain the RGB image G with the vehicle-mounted camera;

[0103] Road surface image information is collected by a forward-facing monocular camera installed on a moving vehicle. The camera captures the road surface directly ahead of the vehicle in its driving direction, together with the scene above the road surface. That is, the collected road surface image is a perspective view of the area directly ahead of the vehicle and above the road surface.

[0104] Step 102) O...

Embodiment 2

[0148] As shown in Figure 4, Embodiment 2 of the present invention provides an adaptive multi-sensor data fusion method based on mutual information for time-series data, taking RGB images and lidar point cloud data as an example. It specifically includes the following steps:

[0149] Step 1) Obtain the RGB image and lidar point cloud of the same road surface;

[0150] The RGB images are required to be sequential; that is, the previous frame has a certain continuity with the current frame, and the expected features of the two frames are consistent. Images are considered temporal when the normalized mutual information between any two consecutive frames is greater than 0.5. The same requirement applies to the lidar point cloud data.
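The temporal criterion in [0150] can be checked directly on a frame sequence. The sketch below is a minimal illustration only, assuming grayscale frames stored as NumPy arrays, a joint-histogram estimate of mutual information, and the normalization NMI = 2·I(A;B)/(H(A)+H(B)). The patent text shown here does not say which NMI variant or estimator it uses, and the function names are hypothetical.

```python
import numpy as np


def _entropy(counts: np.ndarray) -> float:
    """Shannon entropy (nats) of a histogram given as raw counts."""
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log(p)))


def normalized_mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 32) -> float:
    """Assumed NMI variant: 2 * I(A;B) / (H(A) + H(B)), which lies in [0, 1]."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    h_a = _entropy(joint.sum(axis=1))   # marginal entropy of frame a
    h_b = _entropy(joint.sum(axis=0))   # marginal entropy of frame b
    h_ab = _entropy(joint.ravel())      # joint entropy of (a, b)
    mutual_info = h_a + h_b - h_ab
    denom = h_a + h_b
    return 1.0 if denom == 0.0 else 2.0 * mutual_info / denom


def is_temporal(frames, threshold: float = 0.5) -> bool:
    """True if every pair of consecutive frames has NMI above the threshold (0.5 per [0150])."""
    return all(
        normalized_mutual_information(prev, curr) > threshold
        for prev, curr in zip(frames[:-1], frames[1:])
    )
```

A frame compared with itself scores 1, while two independent noise images score close to 0. Histogram-based estimates are biased slightly upward for small images, so the bin count should stay well below the number of pixels.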

[0151] Everything else is the same as in Embodiment 1.

[0152] Step 2) is the same as in Embodiment 1;

[0153] Step 3) Extract the expected feature Y;

[0154] For time-series data, if it is assumed that the fusion network has a good in...

Embodiment 3

[0162] Based on the methods of Embodiment 1 and Embodiment 2, Embodiment 3 of the present invention proposes an adaptive multi-sensor data fusion system based on mutual information. The system includes a camera and a lidar installed on a vehicle, a preprocessing module, a result output module, a time-series data adaptive adjustment module, and a fusion network, wherein:

[0163] The camera is used to collect RGB images of the road surface;

[0164] The lidar is used to synchronously collect point cloud data of the road surface;

[0165] The preprocessing module is used to preprocess point cloud data to obtain dense point cloud data;

[0166] The result output module is used to input the RGB images and the dense point cloud data into a pre-established and trained fusion network and to output the data fusion results;

[0167] The fusion network is used to calculate the mutual information between the feature tensor of the input data and the expected feature, assign the fusion weight of the in...
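Paragraph [0165] only says that the preprocessing module turns the point cloud into dense point cloud data; the procedure itself is not given in the text shown here. One common approach, sketched below purely as an assumption, projects the lidar points into the camera image with a pinhole model (intrinsics `K` and a lidar-to-camera extrinsic `T_cam_lidar`, both hypothetical inputs) and then fills empty pixels from their valid neighbours. The projection keeps the nearest return per pixel, and the hole filling is a naive 3x3 mean, not the patent's method.

```python
import numpy as np


def project_to_depth_map(points, K, T_cam_lidar, height, width):
    """Project N x 3 lidar points into an H x W sparse depth map (0 means no return)."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])   # N x 4
    cam = (T_cam_lidar @ homogeneous.T)[:3]                         # 3 x N, camera frame
    cam = cam[:, cam[2] > 0]                                        # keep points in front of the camera
    uvw = K @ cam                                                   # pinhole projection
    u = np.round(uvw[0] / uvw[2]).astype(int)
    v = np.round(uvw[1] / uvw[2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v[inside], u[inside]), cam[2][inside])    # nearest return wins
    depth[np.isinf(depth)] = 0.0
    return depth


def densify(depth, iterations=5):
    """Naive hole filling: each empty pixel takes the mean of its valid 3x3 neighbours."""
    d = depth.astype(float).copy()
    h, w = d.shape
    for _ in range(iterations):
        valid = (d > 0).astype(float)
        padded_d = np.pad(d, 1)          # zero padding; empty pixels are already 0
        padded_v = np.pad(valid, 1)
        total = np.zeros_like(d)
        count = np.zeros_like(d)
        for dy in range(3):
            for dx in range(3):
                total += padded_d[dy:dy + h, dx:dx + w]
                count += padded_v[dy:dy + h, dx:dx + w]
        fill = (valid == 0) & (count > 0)
        d[fill] = total[fill] / count[fill]
    return d
```

In a real setup the lidar frame usually has its x axis pointing forward while the camera's z axis points forward, so `T_cam_lidar` must include that axis change as well as the mounting offset.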
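Paragraph [0167] states that the fusion network computes the mutual information between each input's feature tensor and the expected feature Y and assigns fusion weights from it; the exact weighting rule is cut off in the text above. The sketch below assumes, for illustration only, that each modality's weight is its estimated mutual information normalized to sum to 1, that mutual information is estimated with a joint histogram over tensor elements, and that all feature tensors share one shape. The function names are hypothetical.

```python
import numpy as np


def mutual_information(x: np.ndarray, y: np.ndarray, bins: int = 32) -> float:
    """Joint-histogram estimate of I(X;Y) in nats over the elements of two same-sized tensors."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=bins)
    p_xy = joint / joint.sum()
    p_x = p_xy.sum(axis=1, keepdims=True)   # marginal of x, shape (bins, 1)
    p_y = p_xy.sum(axis=0, keepdims=True)   # marginal of y, shape (1, bins)
    nz = p_xy > 0
    return float(np.sum(p_xy[nz] * np.log(p_xy[nz] / (p_x * p_y)[nz])))


def mi_fusion_weights(features, expected, bins: int = 32, eps: float = 1e-12) -> np.ndarray:
    """Assumed rule: weight_i = MI(F_i, Y) / sum_j MI(F_j, Y)."""
    mi = np.array([max(mutual_information(f, expected, bins), 0.0) for f in features])
    mi = mi + eps                            # avoid division by zero if every MI is 0
    return mi / mi.sum()


def fuse(features, expected, bins: int = 32):
    """Weighted sum of same-shaped modality feature tensors, weighted by MI with Y."""
    weights = mi_fusion_weights(features, expected, bins)
    fused = sum(w * f for w, f in zip(weights, features))
    return fused, weights
```

With this rule, a modality whose features track Y closely receives a larger weight than one that is unrelated to Y. Because histogram estimates are biased upward, an unrelated input still gets a small nonzero weight; a learned or neural mutual-information estimator would be a different design choice.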
