Three-dimensional data encoding method, three-dimensional data decoding method, three-dimensional data encoding device, and three-dimensional data decoding device

A three-dimensional data encoding and decoding technology, applied in the field of three-dimensional data encoding methods, three-dimensional data decoding methods, three-dimensional data encoding devices, and three-dimensional data decoding devices. It addresses problems such as the large data volume of point cloud data and achieves the effect of shortening processing time.


Examples

Embodiment 1

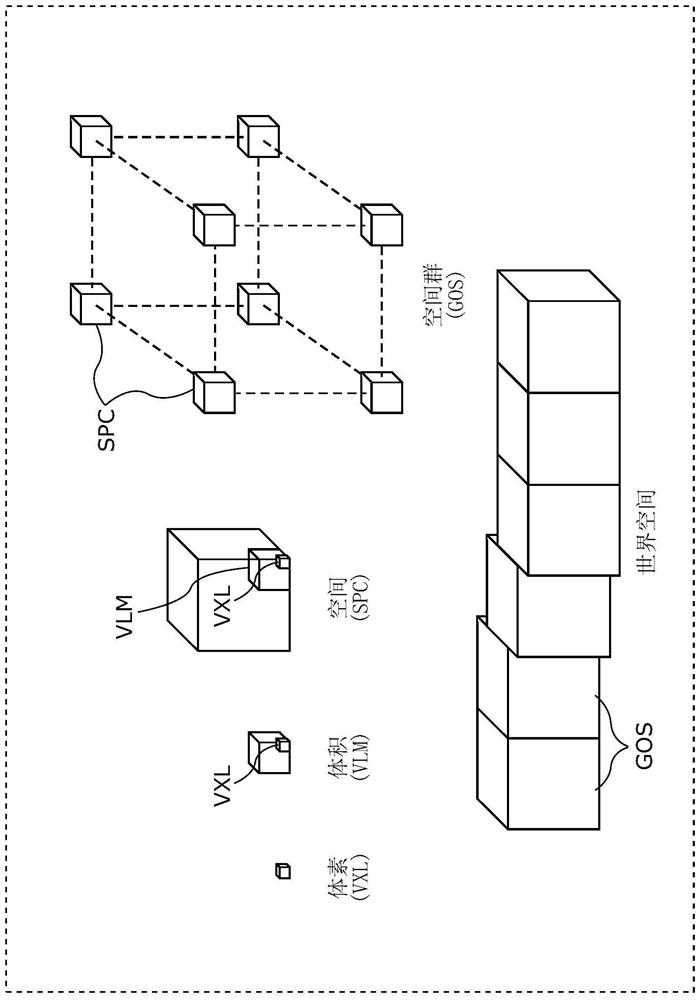

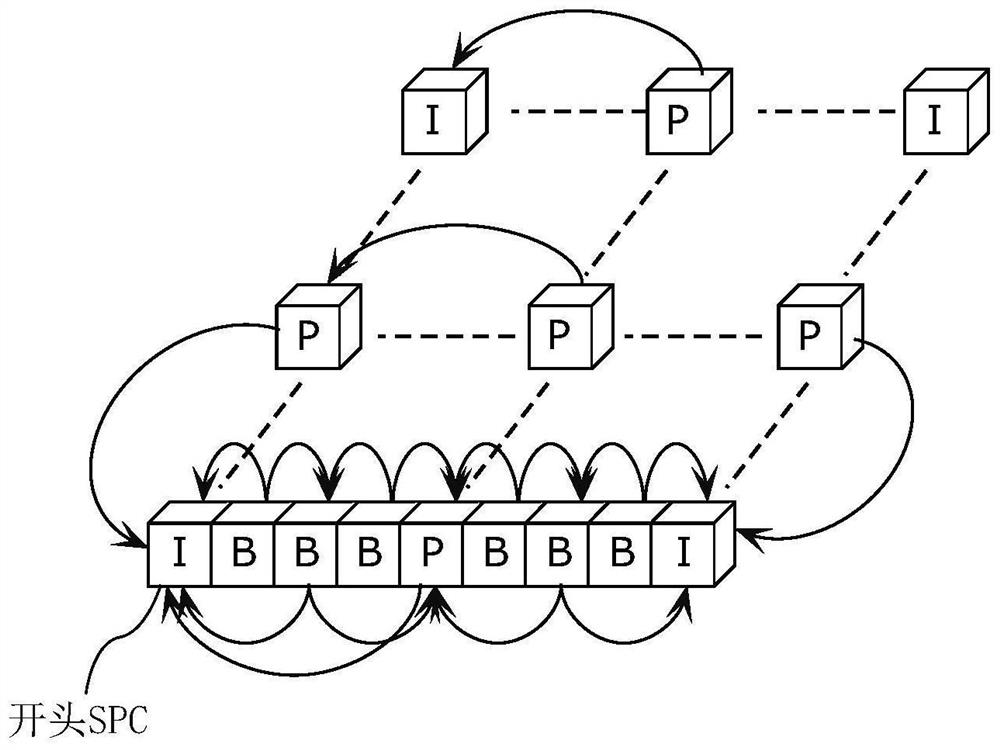

[0131] First, the data structure of encoded three-dimensional data (hereinafter also referred to as encoded data) according to the present embodiment will be described. Figure 1 shows the structure of the encoded three-dimensional data according to this embodiment.

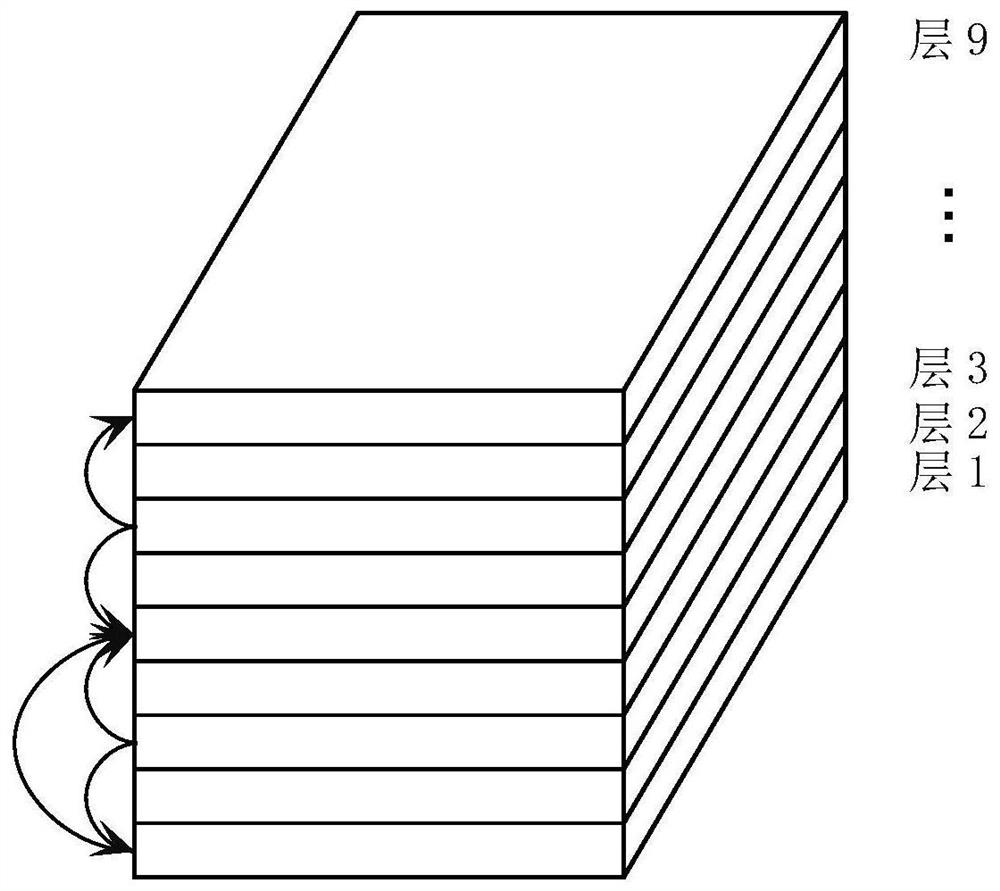

[0132] In the present embodiment, the three-dimensional space is divided into spaces (SPC), which correspond to pictures in video coding, and three-dimensional data is encoded in units of spaces. Each space is further divided into volumes (VLM), which correspond to macroblocks in video coding, and prediction and transform are performed in units of VLMs. Each volume contains voxels (VXL), the smallest units that are associated with position coordinates. Here, prediction refers to generating predicted three-dimensional data similar to the processing unit being processed by referring to other processing units, and calculating the difference between the predicted three-dimensional data and the processin...
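The space, volume, and voxel hierarchy described in [0132] can be illustrated with a minimal Python sketch. The sizes (`VOXEL_SIZE`, `VOXELS_PER_VOLUME`, `VOLUMES_PER_SPACE`) and the function names `locate` and `build_hierarchy` are assumptions chosen for illustration; the source does not specify them, and the prediction and transform steps are not modeled here.

```python
from collections import defaultdict
import math

# All three sizes are hypothetical; the source gives no concrete values.
VOXEL_SIZE = 0.5        # edge length of one voxel (VXL)
VOXELS_PER_VOLUME = 4   # voxels along one edge of a volume (VLM)
VOLUMES_PER_SPACE = 2   # volumes along one edge of a space (SPC)

def locate(point):
    """Return (space_index, volume_index, voxel_index) for an (x, y, z) point."""
    voxel = tuple(math.floor(c / VOXEL_SIZE) for c in point)
    volume = tuple(v // VOXELS_PER_VOLUME for v in voxel)
    space = tuple(v // VOLUMES_PER_SPACE for v in volume)
    return space, volume, voxel

def build_hierarchy(points):
    """Group occupied voxels as space -> volume -> set of voxel indices."""
    spaces = defaultdict(lambda: defaultdict(set))
    for p in points:
        space, volume, voxel = locate(p)
        spaces[space][volume].add(voxel)
    return spaces

points = [(0.1, 0.2, 0.3), (0.4, 0.4, 0.4), (2.1, 0.0, 0.0), (4.5, 0.0, 0.0)]
hierarchy = build_hierarchy(points)
for space, volumes in sorted(hierarchy.items()):
    for volume, voxels in sorted(volumes.items()):
        print("SPC", space, "VLM", volume, "VXL", sorted(voxels))
```

With this layout, an encoder could iterate over spaces as the top-level coding unit and over volumes as the unit for prediction and transform, which is the role the paragraph assigns to each level.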

Embodiment 2

[0243] When encoded point cloud data is used in an actual device or service, it is desirable to transmit and receive only the information required for the intended use, in order to limit network bandwidth consumption. However, existing encoding structures for three-dimensional data provide no such function, and therefore no corresponding encoding method exists.

[0244] This embodiment describes a three-dimensional data encoding method and a three-dimensional data encoding device that provide a function for transmitting and receiving the information required for a given use in encoded three-dimensional point cloud data, as well as a three-dimensional data decoding method and a three-dimensional data decoding device for decoding that encoded data.

[0245] A voxel (VXL) whose feature value is equal to or greater than a given value is defined as a feature voxel (FVXL), and a world space (WLD) composed of FVXLs is defined as a sparse world space (SWLD). Figure 11 A sparse world space and a configuration examp...
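The definition of a feature voxel in [0245] amounts to a threshold filter over the voxels of a world space. The following Python sketch assumes each voxel already carries a scalar feature value; how that value is computed, the dictionary representation, the threshold of 0.5, and the function name `extract_sparse_world` are all hypothetical.

```python
def extract_sparse_world(world, threshold):
    """Keep only feature voxels: those whose feature value is >= threshold.

    `world` maps a voxel index (x, y, z) to a scalar feature value.
    This representation is an assumption, not taken from the source.
    """
    return {pos: value for pos, value in world.items() if value >= threshold}

# Hypothetical world space (WLD) with one feature value per voxel.
world = {
    (0, 0, 0): 0.9,
    (1, 0, 0): 0.2,
    (2, 3, 1): 0.7,
    (5, 5, 5): 0.5,
}

# The sparse world space (SWLD) contains only the feature voxels (FVXL).
sparse_world = extract_sparse_world(world, threshold=0.5)
print(sorted(sparse_world))
```

Because the SWLD is a subset of the WLD, a sender could transmit it in place of the full world space when the receiver only needs the feature voxels, which matches the bandwidth motivation given in [0243].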

Embodiment 3

[0341] In this embodiment, a method for transmitting and receiving three-dimensional data between vehicles will be described. For example, three-dimensional data is transmitted and received between the own vehicle and surrounding vehicles.

[0342] Figure 24 is a block diagram of the three-dimensional data creation device 620 according to this embodiment. The three-dimensional data creation device 620 is included in, for example, the own vehicle, and creates denser third three-dimensional data 636 by combining the received second three-dimensional data 635 with the first three-dimensional data 632 that the device itself has created.

[0343] The three-dimensional data creation device 620 includes a three-dimensional data creation unit 621, a request range determination unit 622, a search unit 623, a reception unit 624, a decoding unit 625, and a synthesis unit 626.

[0344] First, the three-dimensional data creation unit 621 creates the first three-dimensional data 632 using the sensor information 631 detected by the sensor of the own vehicle. Ne...
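The combination step in [0342] can be sketched in Python as follows. This is a minimal illustration, assuming both data sets are represented as sets of occupied voxel indices in a shared coordinate frame; the representation, the voxel size, and the function names `voxelize` and `synthesize` are hypothetical, and the request range determination, search, reception, and decoding units are not modeled.

```python
def voxelize(points, voxel_size=1.0):
    """Convert (x, y, z) sensor points into a set of occupied voxel indices."""
    return {tuple(int(c // voxel_size) for c in p) for p in points}

def synthesize(first_data, second_data):
    """Merge own data with received data into a denser voxel set."""
    return first_data | second_data

# First data: created from the own vehicle's sensor information (hypothetical points).
first_data = voxelize([(0.2, 0.1, 0.0), (1.5, 0.0, 0.0)])

# Second data: received from a surrounding vehicle, assumed already decoded
# and expressed in the same coordinate frame.
second_data = {(1, 0, 0), (2, 0, 0), (3, 0, 0)}

third_data = synthesize(first_data, second_data)
print(sorted(third_data))
```

A set union keeps every voxel seen by either vehicle, so the result is never sparser than the own vehicle's data alone. A real system would also need coordinate alignment between vehicles, which this sketch assumes has already been done.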
