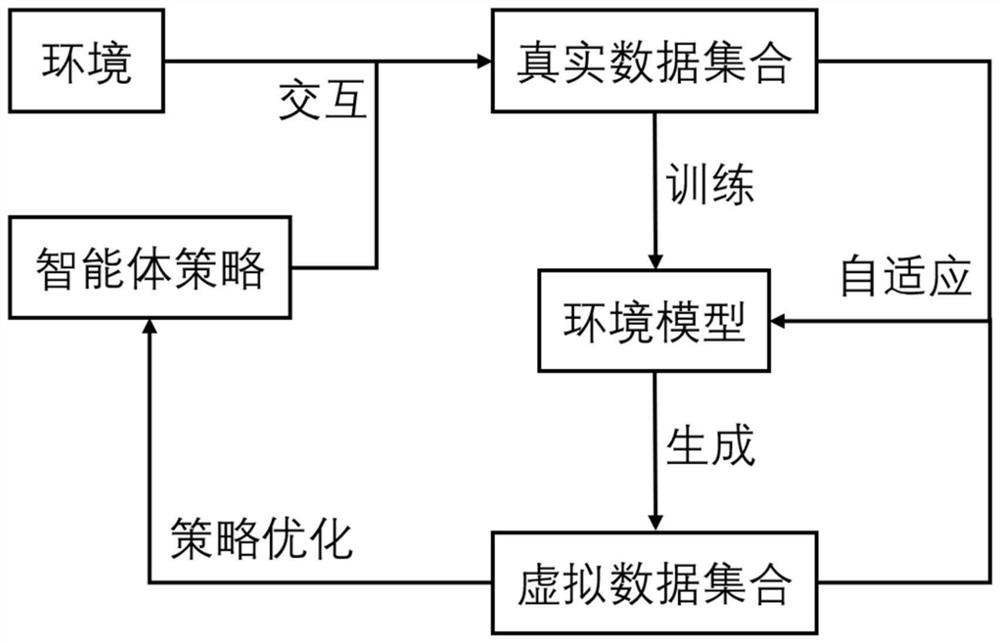

Robot reinforcement learning method based on adaptive model

An adaptive model and reinforcement learning technology, applied in the field of artificial intelligence, that addresses problems such as data distribution deviation and achieves high model accuracy, excellent asymptotic performance, and a small feature distribution distance.

Embodiment Construction

[0036] The following describes the preferred embodiments of the present application with reference to the accompanying drawings to make the technical content clearer and easier to understand. The present application can be embodied in many different forms, and its protection scope is not limited to the embodiments mentioned herein.

[0037] The idea, specific structure and technical effects of the present invention are further described below so that its purpose, features and effects can be fully understood, but the protection of the present invention is not limited thereto.

[0038] For an environment model built from a neural network, its first few layers can be regarded as a feature extractor and its last few layers as a decoder. Given an input (s, a), the state s is the position and velocity of each part of the robot, and the action a is the force applied to each part. First, the hidden layer feature h is obtained ...
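
The split described in [0038] can be illustrated with a minimal sketch. It is not the patented implementation: the class name `EnvModel`, the layer sizes, the tanh activation, and the choice to have the decoder output a next-state prediction are all assumptions made for illustration, since the source text is truncated after the hidden feature h is introduced.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Return (weights, biases) for one dense layer with small random weights."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(layer, x, activate):
    """Apply one dense layer to vector x, optionally followed by tanh."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b
           for row, b in zip(weights, biases)]
    return [math.tanh(v) for v in out] if activate else out


class EnvModel:
    """Hypothetical environment model: feature extractor followed by a decoder."""

    def __init__(self, state_dim, action_dim, hidden_dim=16, seed=0):
        rng = random.Random(seed)
        # First few layers: feature extractor mapping (s, a) to h.
        self.extractor = [
            make_layer(state_dim + action_dim, hidden_dim, rng),
            make_layer(hidden_dim, hidden_dim, rng),
        ]
        # Last few layers: decoder mapping h to a prediction.
        # Assumption: the prediction is the next state.
        self.decoder = [
            make_layer(hidden_dim, hidden_dim, rng),
            make_layer(hidden_dim, state_dim, rng),
        ]

    def features(self, s, a):
        """Compute the hidden feature h from state s and action a."""
        h = list(s) + list(a)
        for layer in self.extractor:
            h = dense(layer, h, activate=True)
        return h

    def decode(self, h):
        """Map the hidden feature h to the (assumed) next-state prediction."""
        x = dense(self.decoder[0], h, activate=True)
        return dense(self.decoder[1], x, activate=False)

    def predict(self, s, a):
        """Full forward pass: extractor, then decoder."""
        return self.decode(self.features(s, a))


# Toy example: 2 joints (position + velocity each) and 2 applied forces.
model = EnvModel(state_dim=4, action_dim=2)
s = [0.1, -0.2, 0.0, 0.5]   # positions and velocities
a = [1.0, -1.0]             # forces applied to each part
h = model.features(s, a)
s_next = model.predict(s, a)
```

Keeping `features` separate from `decode` makes the hidden feature h directly accessible, which is convenient if feature distributions from different data sources need to be compared.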
