3D character action generation system and method for mimicking character actions in a given video

A video-based generation technology applied in the computer field. It addresses the problem that little research work has been done in this area, and achieves the effects of optimizing network parameters and improving temporal continuity.


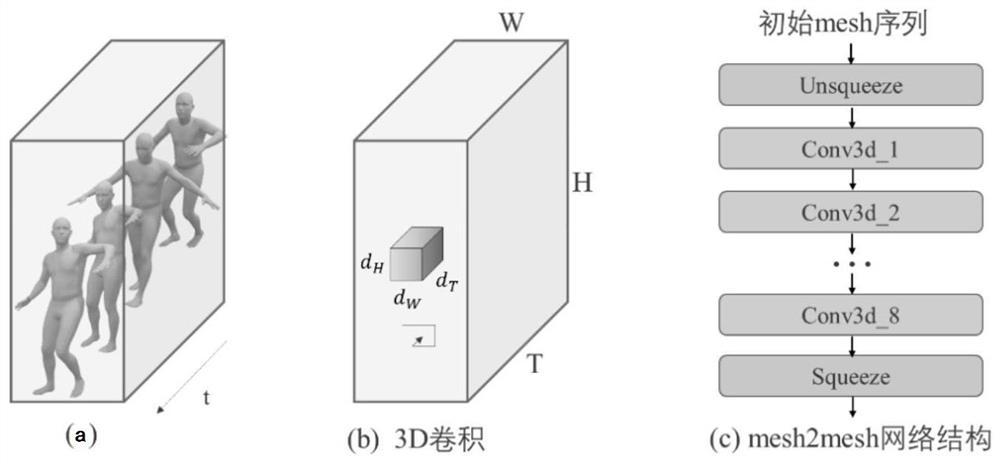

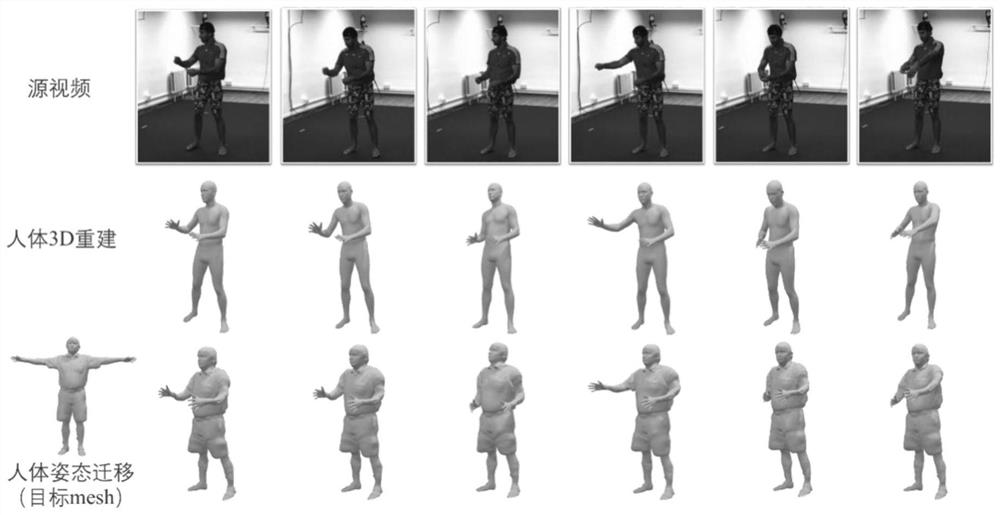

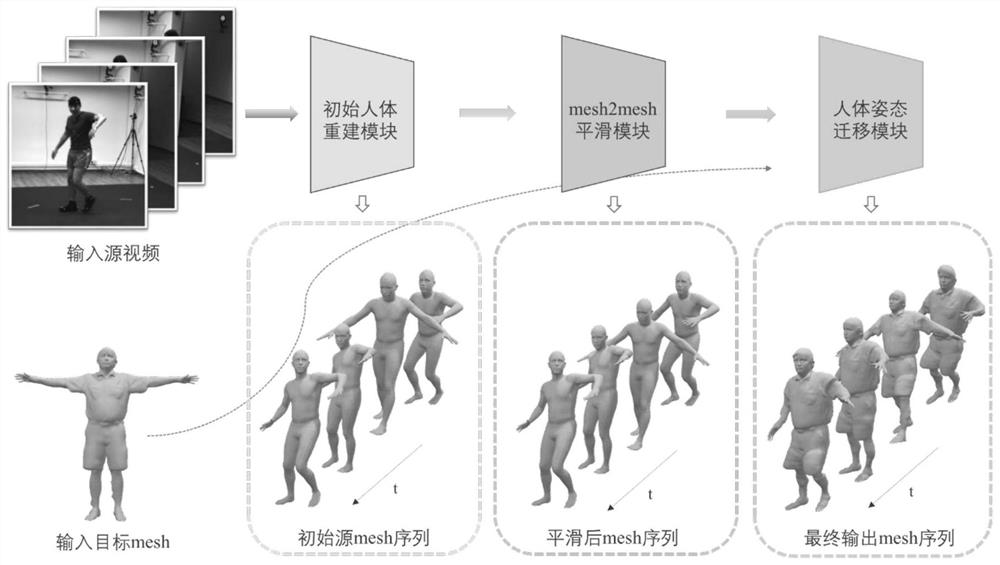


Embodiment Construction

[0041] Step 1. Video frame preprocessing. Image frames are first sampled from the source video at a rate of one frame every 25 frames. For videos used in the training phase, T consecutive frames are randomly selected from the sampled frames each time as the network input. For videos used in the test phase, the middle T frames of the sampled video are taken each time as the network input. T is set to 16. The resulting video clip is represented as the frame sequence $\{I_t\}_{t=1}^{T}$, where $I_t$ denotes the $t$-th image frame.
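The sampling and clip-selection rules of Step 1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes sampling starts at the first frame, that "middle" means the floor-centred window, and that videos with fewer than T sampled frames are rejected. The function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, TypeVar

Frame = TypeVar("Frame")

SAMPLE_STRIDE = 25  # keep one frame out of every 25 (Step 1)
CLIP_LEN = 16       # T = 16


def subsample(frames: Sequence[Frame], stride: int = SAMPLE_STRIDE) -> List[Frame]:
    """Keep every `stride`-th frame; assumes sampling starts at frame 0."""
    return list(frames[::stride])


def random_clip(sampled: Sequence[Frame], T: int = CLIP_LEN,
                rng: Optional[random.Random] = None) -> List[Frame]:
    """Training phase: T consecutive sampled frames from a random start."""
    if len(sampled) < T:
        raise ValueError(f"need at least {T} sampled frames, got {len(sampled)}")
    rng = rng or random.Random()
    start = rng.randint(0, len(sampled) - T)  # randint is inclusive on both ends
    return list(sampled[start:start + T])


def middle_clip(sampled: Sequence[Frame], T: int = CLIP_LEN) -> List[Frame]:
    """Test phase: the T sampled frames centred in the video (floor-centred)."""
    if len(sampled) < T:
        raise ValueError(f"need at least {T} sampled frames, got {len(sampled)}")
    start = (len(sampled) - T) // 2
    return list(sampled[start:start + T])
```

For a 1000-frame video, `subsample` keeps 40 frames (indices 0, 25, ..., 975), and `middle_clip` returns the sampled frames whose original indices run from 300 to 675. Passing a seeded `random.Random` to `random_clip` makes training-clip selection reproducible.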

[0042] Step 2. Locate the actor in the image. Each selected video frame $I_t$ is fed into the existing OpenPose network (reference [9]) to predict the corresponding human skeletal joint points. The actor's position region is then determined from the joint coordinates to assist the initial human body reconstruction T...
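The passage does not say exactly how the position region is derived from the joints. One simple possibility is sketched below: take the bounding box of all confidently detected joints, pad it, and clamp it to the image. The keypoint format `(x, y, confidence)` mirrors pose-estimator output, where undetected joints are typically reported with zero confidence. The confidence threshold, margin, and function name are hypothetical choices, not values from the patent.

```python
from typing import Iterable, Optional, Tuple

Keypoint = Tuple[float, float, float]    # (x, y, confidence)
Box = Tuple[float, float, float, float]  # (x0, y0, x1, y1) in pixels


def actor_region(keypoints: Iterable[Keypoint], image_w: int, image_h: int,
                 conf_thresh: float = 0.1, margin: float = 0.15) -> Optional[Box]:
    """Hypothetical: padded bounding box around confidently detected joints."""
    # Drop joints the pose estimator did not detect reliably.
    pts = [(x, y) for x, y, c in keypoints if c > conf_thresh]
    if not pts:
        return None  # no actor found in this frame
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    # Pad proportionally to box size so limbs and clothing are not cropped.
    pad_x = margin * (x_max - x_min)
    pad_y = margin * (y_max - y_min)
    # Clamp the padded box to the image bounds.
    return (max(0.0, x_min - pad_x), max(0.0, y_min - pad_y),
            min(float(image_w), x_max + pad_x), min(float(image_h), y_max + pad_y))
```

Padding proportionally rather than by a fixed pixel count keeps the crop consistent for actors at different distances from the camera. The resulting box can then be used to crop $I_t$ before body reconstruction.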
