Sequence labeling method based on multi-head self-attention mechanism

A technology of sequence labeling and attention, applied in neural learning methods, computer components, natural language data processing, etc.

Examples

Embodiment 1

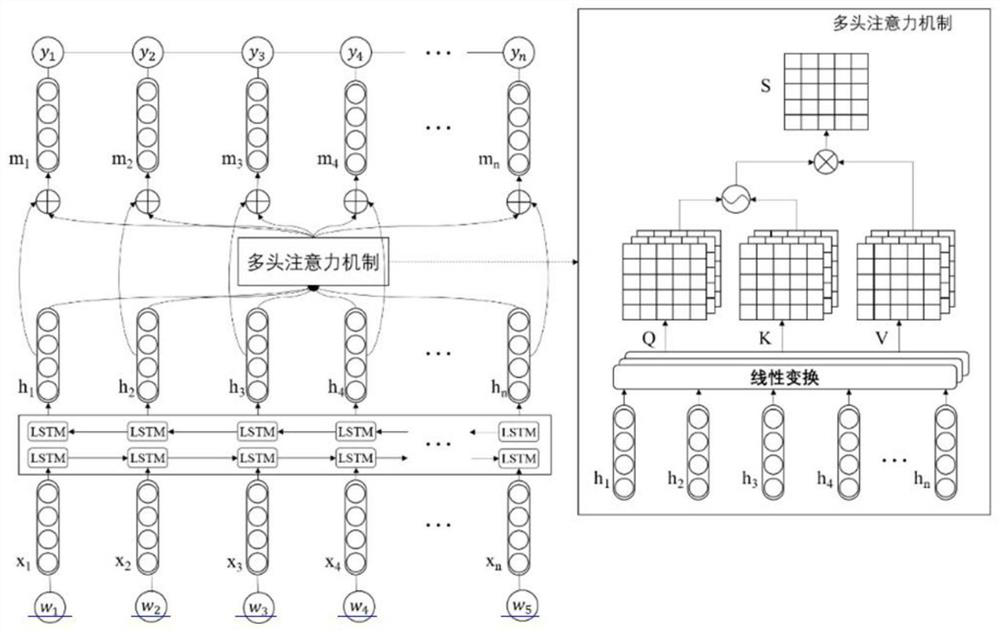

[0070] The present invention first uses a bidirectional long short-term memory (BLSTM) network to learn the contextual semantic features of the words in a text. Then, based on the hidden representations learned by the BLSTM, a multi-head self-attention mechanism models the semantic relationship between every pair of words in the text, yielding the global semantics that each word should attend to. To fully exploit the complementarity of local context semantics and global semantics, the present invention designs three feature fusion methods to fuse the two kinds of semantics, and uses a conditional random field (CRF) model to predict the label sequence from the fused features.
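The pipeline in [0070] can be sketched in plain Python to show how the pieces connect. This is a minimal illustration under stated assumptions, not the patented implementation: random matrices stand in for trained weights and for the BLSTM outputs, the attention is standard scaled dot-product attention split into heads (without a final output projection), concatenation is shown as just one plausible fusion scheme (the three fusion methods of the invention are not detailed in this excerpt), and Viterbi decoding is the standard way a linear-chain CRF picks the best label sequence. All function names are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """(n x k) @ (k x m) product on nested lists."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(h: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix,
                              num_heads: int) -> Matrix:
    """Scaled dot-product self-attention between every pair of words.

    h is the N x d matrix of BLSTM hidden states. Queries, keys and values are
    split into num_heads slices of width d / num_heads; the per-head context
    vectors are concatenated (this sketch omits a final output projection).
    """
    n, d = len(h), len(h[0])
    assert d % num_heads == 0, "d must be divisible by num_heads"
    d_head = d // num_heads
    q, k, v = matmul(h, w_q), matmul(h, w_k), matmul(h, w_v)
    out: Matrix = [[] for _ in range(n)]
    for head in range(num_heads):
        lo, hi = head * d_head, (head + 1) * d_head
        for i in range(n):
            # Attention scores of word i against every word j, itself included.
            scores = [sum(a * b for a, b in zip(q[i][lo:hi], k[j][lo:hi])) / math.sqrt(d_head)
                      for j in range(n)]
            weights = softmax(scores)
            # Weighted sum of value slices: the global context word i attends to.
            out[i].extend(sum(weights[j] * v[j][c] for j in range(n)) for c in range(lo, hi))
    return out


def viterbi(emissions: Matrix, transitions: Matrix) -> List[int]:
    """Highest-scoring tag sequence under a linear-chain CRF.

    emissions[t][y] scores tag y at position t; transitions[a][b] scores a -> b.
    """
    num_tags = len(emissions[0])
    score = list(emissions[0])
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score, ptr = [], []
        for b in range(num_tags):
            best_a = max(range(num_tags), key=lambda a: score[a] + transitions[a][b])
            new_score.append(score[best_a] + transitions[best_a][b] + emissions[t][b])
            ptr.append(best_a)
        score = new_score
        backpointers.append(ptr)
    path = [max(range(num_tags), key=lambda y: score[y])]
    for ptr in reversed(backpointers):
        path.append(ptr[path[-1]])  # follow back-pointers from the last position
    return path[::-1]


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    n_words, d, num_heads, num_tags = 5, 8, 2, 3
    h = random_matrix(n_words, d, rng)  # stand-in for BLSTM outputs
    w_q, w_k, w_v = (random_matrix(d, d, rng) for _ in range(3))
    g = multi_head_self_attention(h, w_q, w_k, w_v, num_heads)
    # Concatenation: one possible fusion of local (h) and global (g) features.
    fused = [h_row + g_row for h_row, g_row in zip(h, g)]
    emissions = matmul(fused, random_matrix(2 * d, num_tags, rng))
    transitions = random_matrix(num_tags, num_tags, rng)
    print(viterbi(emissions, transitions))
```

Each head sees only its own slice of the projected vectors, so different heads can weight different word pairs; the CRF step then scores whole label sequences rather than choosing each label independently.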

Embodiment 2

[0072] The present invention mainly uses deep learning techniques and natural language processing theory and methods to perform the sequence labeling task. To ensure that the system runs properly, the computer platform used in a specific implementation should have at least 8 GB of memory, at least 4 CPU cores with a clock speed of no less than 2.6 GHz, a GPU environment, and a Linux operating system, together with the necessary software environment, such as Python 3.6 or later and PyTorch 0.4 or later.
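The minimum platform listed in [0072] can be expressed as a small pre-flight check. This is a hypothetical helper, not part of the invention: `Platform`, `major_minor` and `unmet_requirements` are illustrative names, and values such as memory size, clock speed and GPU presence must be supplied by the caller because the Python standard library does not expose them portably.

```python
import re
import sys
from typing import List, NamedTuple, Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Extract (major, minor) from strings such as '3.6.9' or '1.13.1+cu117'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError("unrecognised version string: %r" % version)
    return int(match.group(1)), int(match.group(2))


class Platform(NamedTuple):
    memory_gb: float
    cpu_cores: int
    cpu_ghz: float
    has_gpu: bool
    os_name: str          # e.g. the value returned by platform.system()
    python_version: str
    pytorch_version: str


def unmet_requirements(p: Platform) -> List[str]:
    """List the minimum requirements from [0072] that the platform does not meet."""
    checks = [
        (p.memory_gb >= 8, "at least 8 GB of memory"),
        (p.cpu_cores >= 4, "at least 4 CPU cores"),
        (p.cpu_ghz >= 2.6, "CPU clock of at least 2.6 GHz"),
        (p.has_gpu, "a GPU"),
        (p.os_name == "Linux", "a Linux operating system"),
        (major_minor(p.python_version) >= (3, 6), "Python 3.6 or later"),
        (major_minor(p.pytorch_version) >= (0, 4), "PyTorch 0.4 or later"),
    ]
    return [requirement for ok, requirement in checks if not ok]


if __name__ == "__main__":
    # Hypothetical machine; memory size, clock speed and GPU presence are not
    # portably readable from the standard library, so they are filled in by hand.
    python_version = "%d.%d" % sys.version_info[:2]
    machine = Platform(16, 8, 3.0, True, "Linux", python_version, "1.13.1")
    print(unmet_requirements(machine) or "all requirements met")
```

Versions are compared as integer tuples, so 3.10 correctly counts as newer than 3.6, which a plain string comparison would get wrong.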

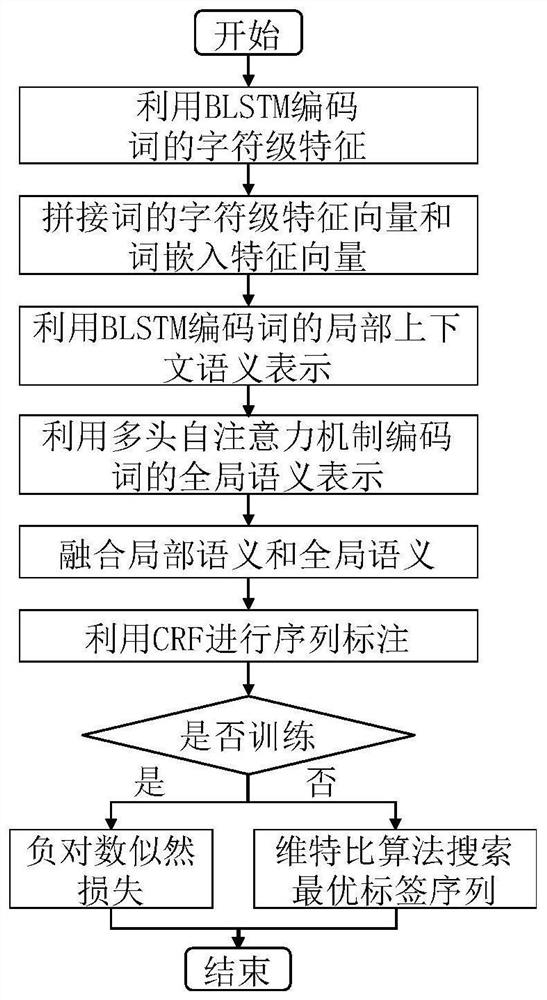

[0073] As shown in Figure 1, the sequence labeling method based on the multi-head self-attention mechanism provided by the present invention mainly includes the following steps, performed in order:

[0074] Step 1, Local Context Semantic Encoding: Use Bidirectional Long Short-Term Memory (BLSTM) to sequentially learn the local context semantic representation of words in the text.
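A minimal, dependency-free sketch of the Step 1 encoder follows, assuming standard LSTM gating (input, forget and output gates plus a tanh candidate) and random weights in place of trained ones; `LSTM` and `blstm` are illustrative names. In the PyTorch environment the description names, `torch.nn.LSTM` with `bidirectional=True` would be the more likely choice. The point shown is that each word's local context representation concatenates a left-to-right state and a right-to-left state.

```python
import math
import random
from typing import List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class LSTM:
    """Single-direction LSTM over a sequence of input vectors (pure Python)."""

    def __init__(self, input_dim: int, hidden_dim: int, rng: random.Random):
        self.hidden_dim = hidden_dim
        width = input_dim + hidden_dim + 1  # input, previous hidden state, bias
        # One weight matrix per gate: input (i), forget (f), output (o), candidate (g).
        self.weights = {
            gate: [[rng.uniform(-0.1, 0.1) for _ in range(width)] for _ in range(hidden_dim)]
            for gate in "ifog"
        }

    def _gate(self, name: str, z: Vector, activation) -> Vector:
        return [activation(sum(w * v for w, v in zip(row, z))) for row in self.weights[name]]

    def run(self, xs: List[Vector]) -> List[Vector]:
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        outputs = []
        for x in xs:
            z = x + h + [1.0]
            i = self._gate("i", z, sigmoid)
            f = self._gate("f", z, sigmoid)
            o = self._gate("o", z, sigmoid)
            g = self._gate("g", z, math.tanh)
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
            outputs.append(h)
        return outputs


def blstm(xs: List[Vector], forward: LSTM, backward: LSTM) -> List[Vector]:
    """Concatenate left-to-right and right-to-left states for each word."""
    fwd = forward.run(xs)
    bwd = backward.run(xs[::-1])[::-1]  # re-align backward states with word positions
    return [f + b for f, b in zip(fwd, bwd)]


if __name__ == "__main__":
    rng = random.Random(0)
    embed_dim, hidden_dim = 4, 3
    words = [[rng.uniform(-1, 1) for _ in range(embed_dim)] for _ in range(5)]  # 5 word vectors
    states = blstm(words, LSTM(embed_dim, hidden_dim, rng), LSTM(embed_dim, hidden_dim, rng))
    print(len(states), len(states[0]))  # → 5 6
```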

[0075] Step 1.1) Use the Stanford NLP...

Embodiment 3

[0094] The sequence labeling method based on the multi-head self-attention mechanism mainly includes the following steps performed in order:

[0095] Step 1, Local Context Semantic Encoding: Use Bidirectional Long Short-Term Memory (BLSTM) to sequentially learn the local context semantic representation of words in the text.

[0096] Step 1.1, use the Stanford NLP toolkit to segment the input text into words, obtaining the corresponding word sequence $X=\{x_1, x_2, \ldots, x_N\}$.

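[0097] For example, given the text "I participated in a marathon in Tianjin yesterday", word segmentation yields the word sequence {"I", "yesterday", "in", "Tianjin", "participated", "了", "one", "marathon", "race"}, which follows the word order of the original Chinese sentence; "了" is a Chinese aspect particle with no direct English counterpart.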
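Before a segmented sequence such as the one in [0097] can be fed to an embedding layer, each word is usually mapped to an integer id. The sketch below shows one conventional way to do this; the reserved `<pad>`/`<unk>` tokens and the names `build_vocab` and `encode` are hypothetical, not taken from the description.

```python
from typing import Dict, List, Sequence

PAD, UNK = "<pad>", "<unk>"  # hypothetical reserved tokens


def build_vocab(sentences: Sequence[Sequence[str]], min_count: int = 1) -> Dict[str, int]:
    """Assign an integer id to every word seen at least min_count times."""
    counts: Dict[str, int] = {}
    for sentence in sentences:
        for word in sentence:
            counts[word] = counts.get(word, 0) + 1
    vocab = {PAD: 0, UNK: 1}
    # Sorting makes the id assignment deterministic across runs.
    for word in sorted(w for w, c in counts.items() if c >= min_count):
        vocab[word] = len(vocab)
    return vocab


def encode(words: Sequence[str], vocab: Dict[str, int]) -> List[int]:
    """Map a segmented word sequence X = {x_1, ..., x_N} to ids; unseen words -> <unk>."""
    return [vocab.get(word, vocab[UNK]) for word in words]


if __name__ == "__main__":
    # Segmented example sentence from [0097].
    sentence = ["I", "yesterday", "in", "Tianjin", "participated", "了", "one", "marathon", "race"]
    vocab = build_vocab([sentence])
    print(encode(sentence, vocab))  # → [2, 9, 4, 3, 7, 10, 6, 5, 8]
```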

[0098] Step 1.2, since words in the text usually carry rich morphological features, such as prefix and suffix information, this step uses a bidirectional LSTM (BLSTM) structure to encode, for each word $x_i$ in the word sequence, its corresponding character-level vector repres...
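The description breaks off before stating how the character-level vector is formed. A common design in BLSTM-CRF taggers, assumed here rather than taken from the patent, runs a BLSTM over the word's characters and concatenates the two final states: the forward pass finishes on the word's last characters and the backward pass on its first characters, so suffix and prefix cues have a direct path into the result. `CharBLSTM` and `lstm_final_state` are hypothetical names, and the weights are random stand-ins for trained parameters.

```python
import math
import random
from typing import Dict, List

Vector = List[float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lstm_final_state(xs: List[Vector], w: List[Vector], hidden: int) -> Vector:
    """Run an LSTM over xs and return its last hidden state.

    w holds 4 * hidden rows (input, forget, output, candidate gates stacked),
    each row spanning [x; h_prev; 1] so the bias is folded in.
    """
    h, c = [0.0] * hidden, [0.0] * hidden
    for x in xs:
        z = x + h + [1.0]
        pre = [sum(a * b for a, b in zip(row, z)) for row in w]
        i = [sigmoid(p) for p in pre[:hidden]]
        f = [sigmoid(p) for p in pre[hidden:2 * hidden]]
        o = [sigmoid(p) for p in pre[2 * hidden:3 * hidden]]
        g = [math.tanh(p) for p in pre[3 * hidden:]]
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h


class CharBLSTM:
    """Encodes a word as [final forward state ; final backward state] over its characters."""

    def __init__(self, alphabet: str, char_dim: int, hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.hidden = hidden
        # Id 0 is reserved for characters not seen in the alphabet.
        self.char_ids: Dict[str, int] = {ch: i + 1 for i, ch in enumerate(sorted(set(alphabet)))}
        self.embeddings = [[rng.uniform(-0.5, 0.5) for _ in range(char_dim)]
                           for _ in range(len(self.char_ids) + 1)]
        width = char_dim + hidden + 1
        self.w_fwd = [[rng.uniform(-0.3, 0.3) for _ in range(width)] for _ in range(4 * hidden)]
        self.w_bwd = [[rng.uniform(-0.3, 0.3) for _ in range(width)] for _ in range(4 * hidden)]

    def encode(self, word: str) -> Vector:
        xs = [self.embeddings[self.char_ids.get(ch, 0)] for ch in word]
        forward = lstm_final_state(xs, self.w_fwd, self.hidden)         # last input: final character
        backward = lstm_final_state(xs[::-1], self.w_bwd, self.hidden)  # last input: first character
        return forward + backward


if __name__ == "__main__":
    words = ["participated", "marathon", "Tianjin"]
    encoder = CharBLSTM("".join(words), char_dim=4, hidden=3)
    for word in words:
        print(word, [round(v, 3) for v in encoder.encode(word)])
```

How this character-level vector is then combined with the word's own embedding is not shown in this excerpt.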

© 2025 PatSnap. All rights reserved.