Outdoor mobile robot pose estimation method fusing vision and laser radar

A technology relating to mobile robots and laser radar (lidar), applied in fields such as instrumentation, computing, and image data processing.

Embodiment Construction

[0086] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

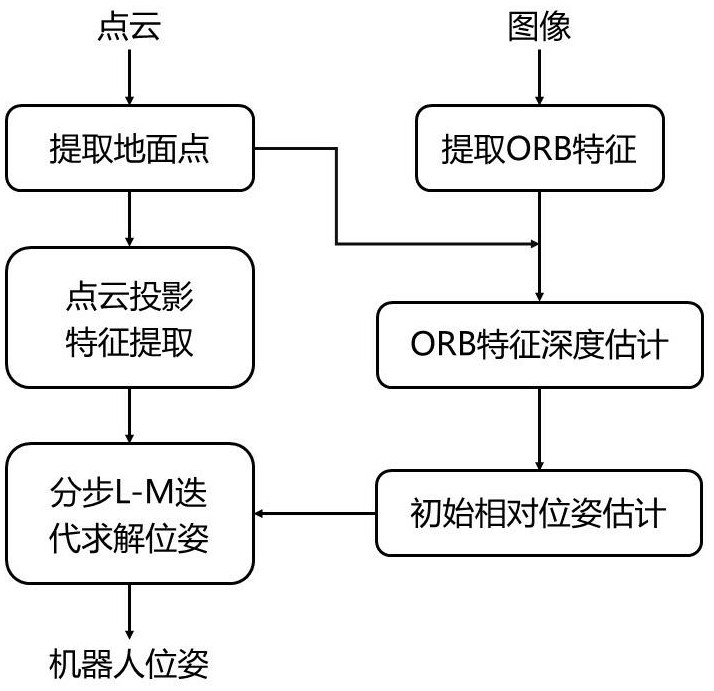

[0087] Referring to Figure 1, the present invention provides a method for estimating the pose of an outdoor mobile robot by fusing vision and laser radar, comprising the following steps:

[0088] Step S1: obtain point cloud data and visual image data;

[0089] Step S2: use an iterative fitting algorithm to accurately estimate the ground model of each frame's point cloud and extract the ground points;

[0090] Step S3: extract ORB feature points from the lower half of the visual image, and estimate the depth of each visual feature point from the extracted ground points;

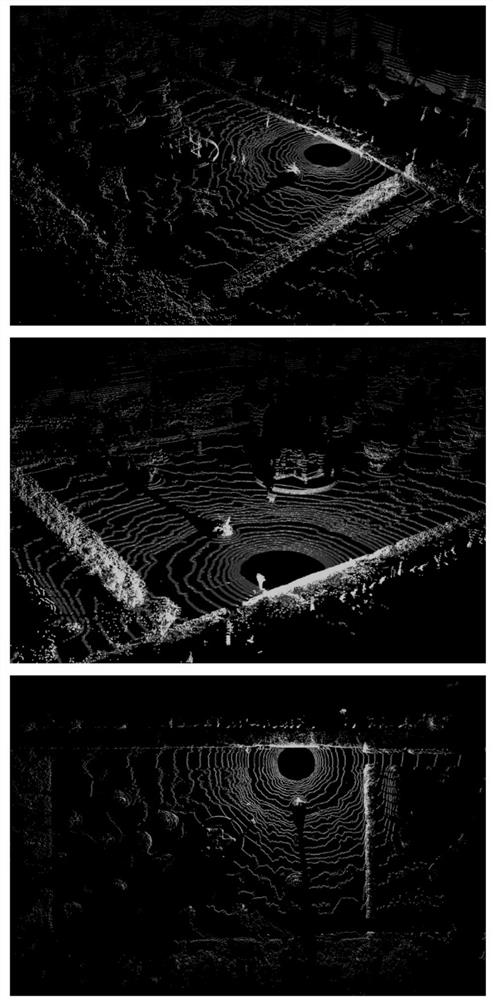

[0091] Step S4: according to the number of scan lines and the angular resolution of the laser radar, obtain the depth image formed from the depth information of the point cloud;

[0092] Step S5: according to the obtained depth image, calculate...
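
The iterative ground fitting of Step S2 can be illustrated with a minimal sketch. The text above does not give the exact fitting procedure, so this sketch assumes one common scheme: seed a plane with the lowest points, then alternate between selecting points near the plane and refitting it. The function names `fit_plane` and `extract_ground`, the z-up sensor frame, and all thresholds are assumptions made for illustration, not values from the patent.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through points; returns unit normal n and offset d with n.p + d = 0."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:  # assumed convention: keep the normal pointing along +z
        normal = -normal
    return normal, -normal.dot(centroid)

def extract_ground(cloud, n_iters=3, n_seeds=50, dist_thresh=0.1):
    """Iteratively refit a ground plane to an (N, 3) cloud; returns (mask, normal, d)."""
    # Assumption: z is roughly "up", so the lowest points are likely ground seeds.
    ground = cloud[np.argsort(cloud[:, 2])[:n_seeds]]
    for _ in range(n_iters):
        normal, d = fit_plane(ground)
        # Points within dist_thresh (metres, placeholder value) of the plane count as ground.
        mask = np.abs(cloud @ normal + d) < dist_thresh
        ground = cloud[mask]
    return mask, normal, d
```

Calling `extract_ground(cloud)` on one frame returns a boolean mask over the input points together with the plane parameters. A single plane is a simplification; uneven outdoor terrain may call for fitting separate planes per sector of the scan.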
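
For Step S3, the text above does not say how depth is derived from the ground points. One plausible reading, shown here purely as an assumption, is to intersect the viewing ray of each feature pixel with the fitted ground plane. The sketch assumes a pinhole camera with intrinsic matrix `K` and a ground plane `n.p + d = 0` already expressed in the camera frame (which in practice requires the lidar-to-camera extrinsics). The names `lower_half_mask` and `depth_from_ground_plane` are hypothetical.

```python
import numpy as np

def lower_half_mask(height, width):
    """8-bit mask that selects the lower half of the image for feature detection."""
    mask = np.zeros((height, width), dtype=np.uint8)
    mask[height // 2:, :] = 255
    return mask

def depth_from_ground_plane(pixels, K, normal, d):
    """Depth (camera-frame z) at which each pixel ray meets the plane n.p + d = 0.

    pixels: (N, 2) array of (u, v) coordinates. Rays that never reach the
    plane in front of the camera get NaN.
    """
    uv1 = np.column_stack([pixels, np.ones(len(pixels))])
    rays = uv1 @ np.linalg.inv(K).T  # back-projected ray directions with z = 1
    denom = rays @ normal
    with np.errstate(divide="ignore", invalid="ignore"):
        t = -d / denom               # rays have z = 1, so t is the depth
        t[~np.isfinite(t) | (t <= 0)] = np.nan
    return t
```

With OpenCV, a mask of this kind can be passed as the second argument of `detectAndCompute` on a detector created by `cv2.ORB_create()`, so that keypoints are only searched in the lower half of the image, where the ground is most likely to appear.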
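
The depth image of Step S4 can be sketched as a spherical projection: the elevation angle of each point selects the row (scan line) and its azimuth selects the column. The defaults below (16 lines, a vertical field of view of -15° to +15°, 0.2° horizontal resolution) are placeholder values for a generic 16-line sensor and are not taken from the patent; `cloud_to_depth_image` is a hypothetical name.

```python
import numpy as np

def cloud_to_depth_image(cloud, n_lines=16, v_fov=(-15.0, 15.0), h_res_deg=0.2):
    """Project an (N, 3) point cloud into an (n_lines, 360 / h_res_deg) range image."""
    n_cols = int(round(360.0 / h_res_deg))
    depth = np.zeros((n_lines, n_cols), dtype=np.float32)  # 0 marks "no return"

    r = np.linalg.norm(cloud, axis=1)
    cloud, r = cloud[r > 0], r[r > 0]                       # drop points at the origin
    azimuth = np.degrees(np.arctan2(cloud[:, 1], cloud[:, 0]))   # in [-180, 180]
    elevation = np.degrees(np.arcsin(cloud[:, 2] / r))

    # Row index from the vertical angular resolution implied by the line count.
    v_res = (v_fov[1] - v_fov[0]) / (n_lines - 1)
    rows = np.round((elevation - v_fov[0]) / v_res).astype(int)
    # Column index from the horizontal angular resolution, wrapped to [0, n_cols).
    cols = np.floor((azimuth + 180.0) / h_res_deg).astype(int) % n_cols

    valid = (rows >= 0) & (rows < n_lines)  # discard points outside the vertical FOV
    # If several points fall into one cell, only one of them is kept.
    depth[rows[valid], cols[valid]] = r[valid]
    return depth
```

Each pixel of the result stores the range of the point that landed in that cell, so neighbouring pixels correspond to neighbouring beams and firing angles, which is what makes image-style processing of the cloud possible in later steps.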
