Training method and device of graph neural network

A graph neural network training technology, applied in the computer field. It addresses problems such as the difficulty of guaranteeing training convergence and the embedding quality of the graph neural network, and the large consumption of computing resources. Its effects are reduced computation time, fewer computations, and excellent representation performance.

Embodiment Construction

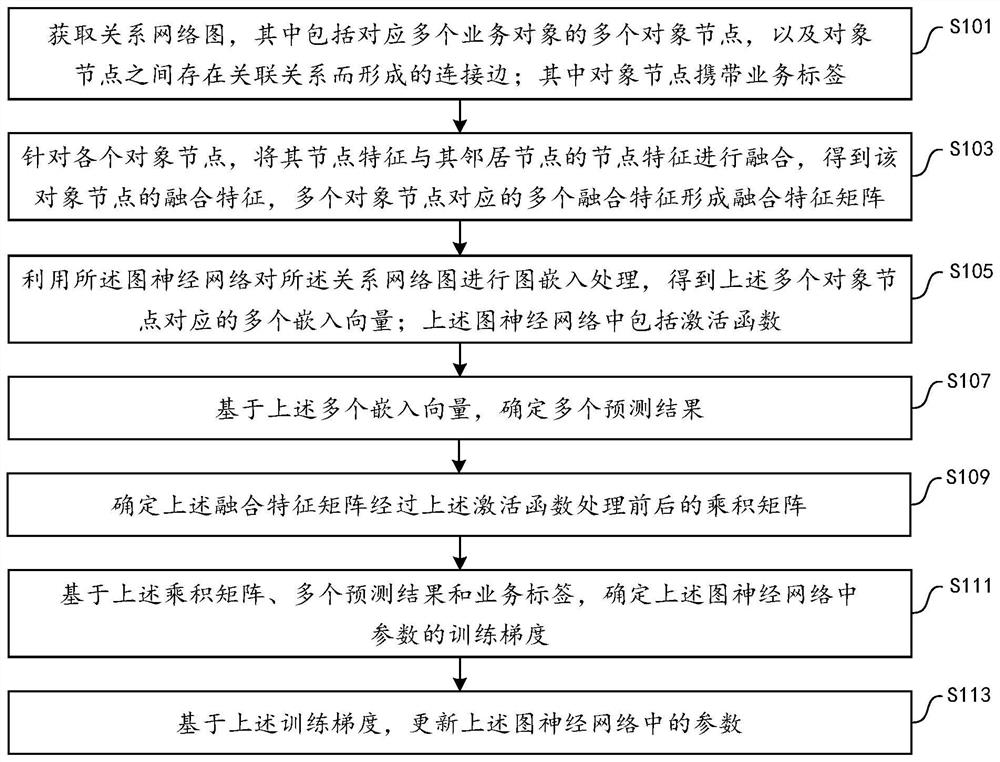

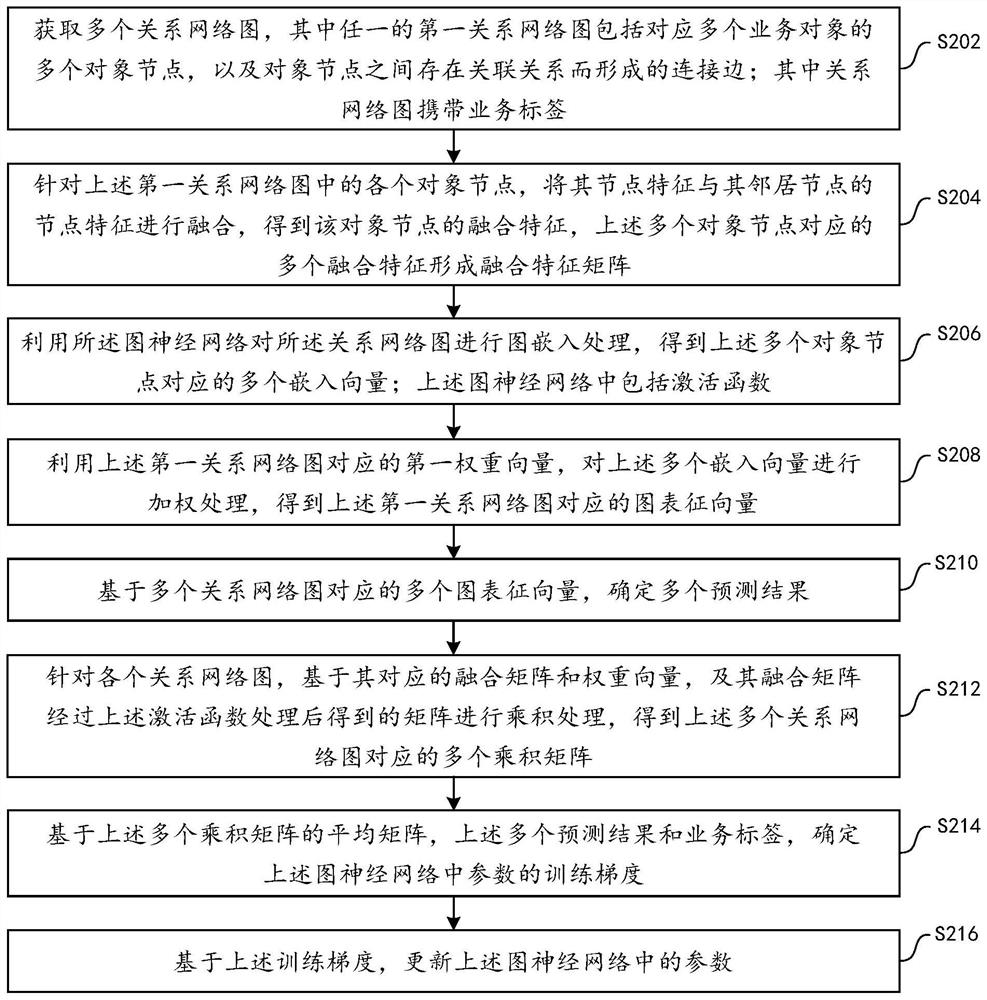

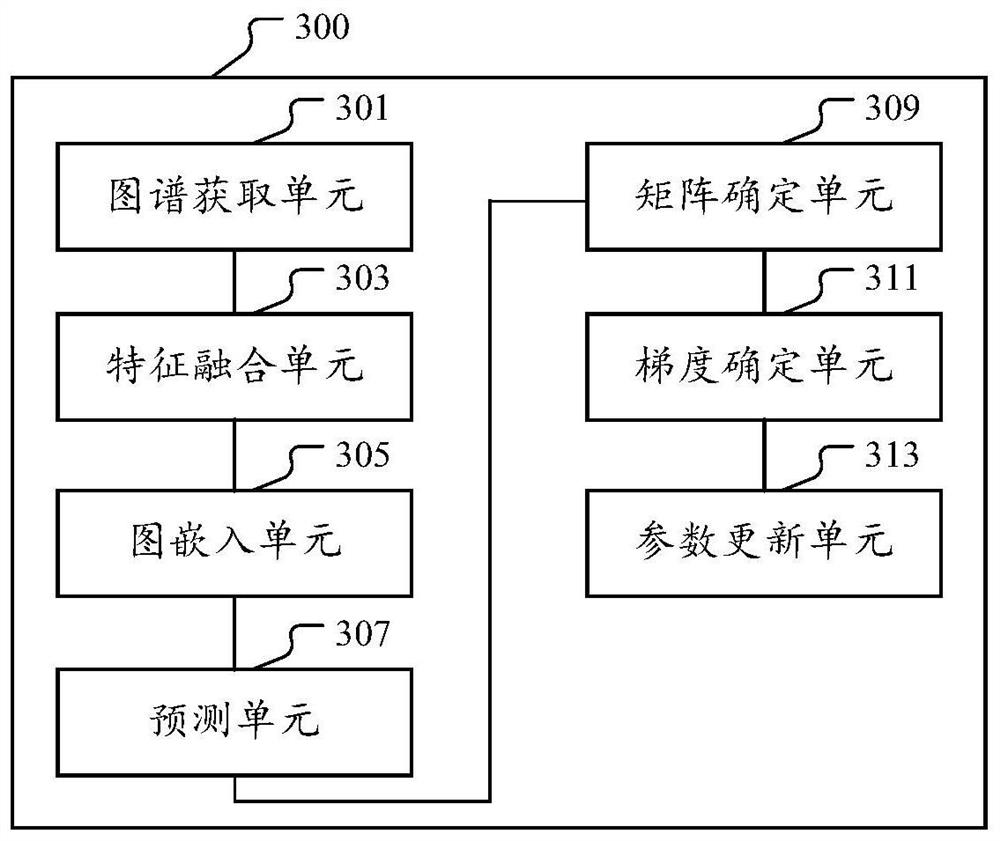

[0033] Multiple embodiments disclosed in this specification will be described below in conjunction with the accompanying drawings.

[0034] As mentioned earlier, the training of graph neural networks currently encounters a bottleneck. Specifically, a graph neural network is a deep learning architecture that can operate on social networks or other data with a graph topology; it is a generalized neural network based on graph topology. Graph neural networks generally use the underlying relational network graph as the computational graph, and learn neural network primitives that generate an embedding vector for each node by propagating, transforming, and aggregating node feature information across the entire graph. The generated node embedding vectors can serve as the input of a prediction layer, for node classification or for predicting connections between nodes, and the complete model can be trained end to end.
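As an illustrative sketch only, and not the training method of this specification, the propagation, transformation, and aggregation of node feature information described above might look as follows in Python with NumPy. The function name `mean_aggregate_layer`, the mean aggregator with self-loops, the ReLU nonlinearity, the random weight matrix, and the three-node toy graph are all assumptions introduced for illustration.

```python
import numpy as np


def mean_aggregate_layer(adj, features, weight):
    """One hypothetical message-passing step.

    adj      : (n, n) adjacency matrix of the relational network graph
    features : (n, d_in) node feature matrix
    weight   : (d_in, d_out) transformation matrix (learned in practice)
    """
    n = adj.shape[0]
    adj_self = adj + np.eye(n)                    # let each node keep its own features
    degree = adj_self.sum(axis=1, keepdims=True)  # neighbourhood size, self included
    aggregated = (adj_self @ features) / degree   # propagate and mean-aggregate
    return np.maximum(aggregated @ weight, 0.0)   # linear transform followed by ReLU


# Toy graph (assumed): a path 0 - 1 - 2 with one-hot node features.
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 1.0],
                [0.0, 1.0, 0.0]])
features = np.eye(3)

rng = np.random.default_rng(0)
weight = rng.normal(size=(3, 2))                  # untrained, random weights

embeddings = mean_aggregate_layer(adj, features, weight)  # one vector per node

# A prediction layer could consume these vectors; here an inner product
# serves as an assumed, very simple score for a connection between nodes 0 and 1.
link_score = float(embeddings[0] @ embeddings[1])
```

In an end-to-end setting, `weight` would be updated by backpropagating a loss from the prediction layer rather than being left at its random initial value.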

[0035] Due to the highly non-convex nature of the ...
