On-chip neural network-oriented synaptic implementation architecture

A synapse implementation architecture for on-chip neural networks, based on a three-level index and shared compressed storage, that addresses the problem of a large number of connections.

Embodiment Construction

[0017] To make the object, technical solution and technical effect of the present invention clearer, the present invention is described in further detail below with reference to the accompanying drawings and embodiments.

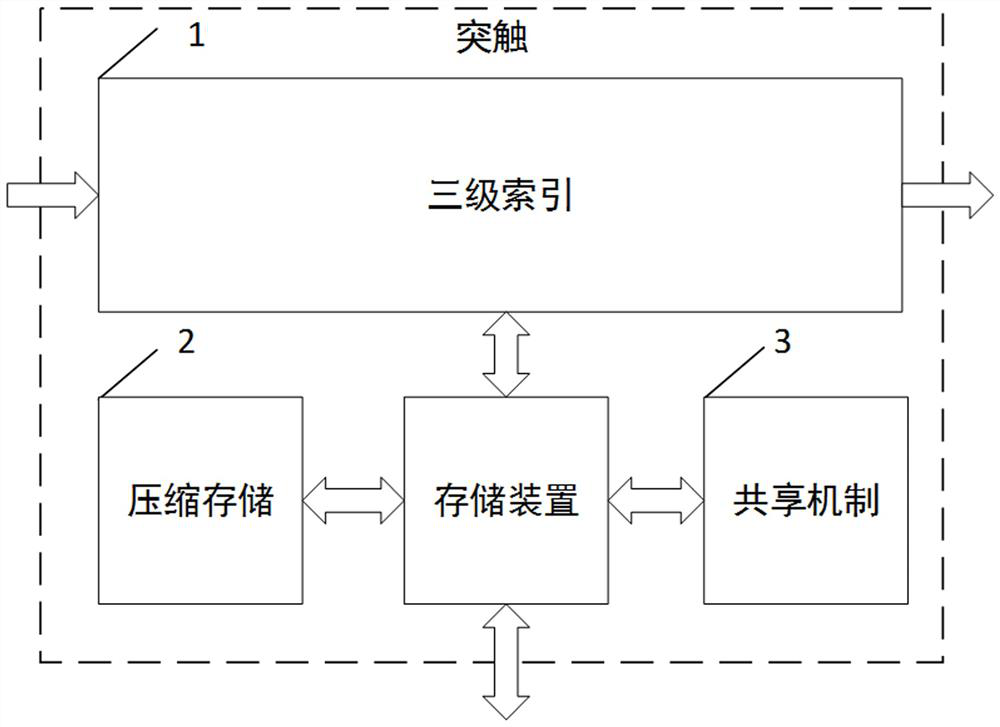

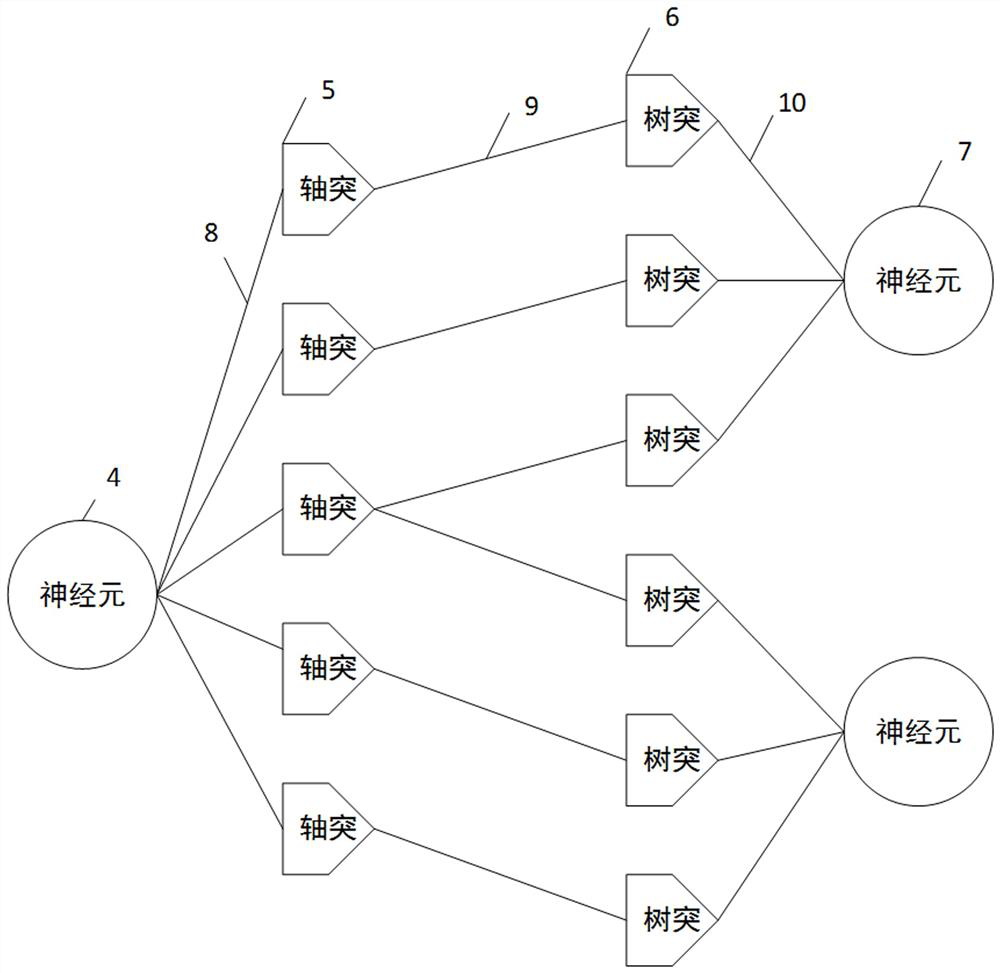

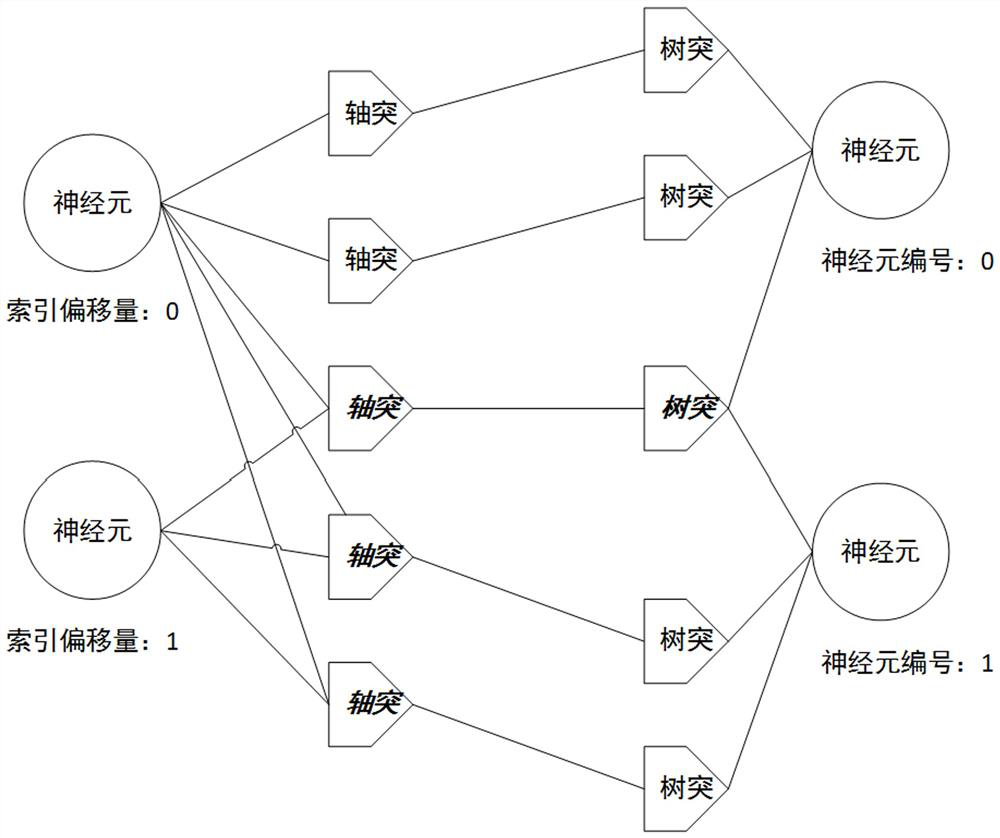

[0018] As shown in Figure 1 and Figure 2, a synapse implementation architecture for an on-chip neural network, based on a three-level index and shared compressed storage, is specified as follows: the pre-synaptic neuron 4 and the post-synaptic neuron 7 are connected through synapses in the three-level index structure mode 1. The connection information and weights of the three-level index structure can be stored in the synaptic storage device, or allocated to the pre-synaptic neuron 4 and the post-synaptic neuron 7 as required, so as to optimize synaptic resources and facilitate implementation of the on-chip neural network. The synaptic storage device adopts the shared-weight compressed storage method 2 and the storage space...
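As an illustration only, the idea of indexing synapses in several levels while sharing stored weights might be sketched as follows. The excerpt does not state what each of the three index levels contains, so the layout here is an assumption: level 1 maps a pre-synaptic neuron to a range of entries, level 2 holds the (post-synaptic neuron, weight index) pairs, and level 3 is a table of distinct weight values shared by all synapses. The class `SynapseStore` and all of its field and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SynapseStore:
    """Hypothetical three-level synapse table with shared weights (not the patent's exact layout)."""
    fanout: dict = field(default_factory=dict)   # level 1 (assumed): pre id -> (start, count)
    links: list = field(default_factory=list)    # level 2 (assumed): (post id, weight index)
    weights: list = field(default_factory=list)  # level 3 (assumed): distinct weight values

    def _weight_index(self, w):
        # Store each distinct weight once; synapses refer to it by index.
        if w not in self.weights:
            self.weights.append(w)
        return self.weights.index(w)

    def add_neuron(self, pre, targets):
        # targets: list of (post id, weight) pairs for one pre-synaptic neuron.
        start = len(self.links)
        for post, w in targets:
            self.links.append((post, self._weight_index(w)))
        self.fanout[pre] = (start, len(targets))

    def lookup(self, pre):
        # Walk level 1 -> level 2 -> level 3 to recover (post id, weight) pairs.
        start, count = self.fanout.get(pre, (0, 0))
        return [(post, self.weights[wi]) for post, wi in self.links[start:start + count]]


store = SynapseStore()
store.add_neuron(4, [(7, 0.5), (8, 0.5), (9, -1.0)])
store.add_neuron(5, [(7, -1.0)])
print(store.lookup(4))
print(len(store.weights), "distinct weights stored for", len(store.links), "synapses")
```

In this sketch, four synapses need only two stored weight values, which is the kind of saving a shared-weight scheme aims for when the number of connections is large. The actual division of information between the synaptic storage device and the neurons in the patent may differ.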
