Hyperspectral image classification method, device, and electronic equipment

This technology relates to hyperspectral image classification, specifically a classification method, a device, and electronic equipment. Hyperspectral images cover large areas and contain many unlabeled samples, which reduces the accuracy of classification results. The invention addresses these problems and improves classification accuracy.



Problems solved by technology

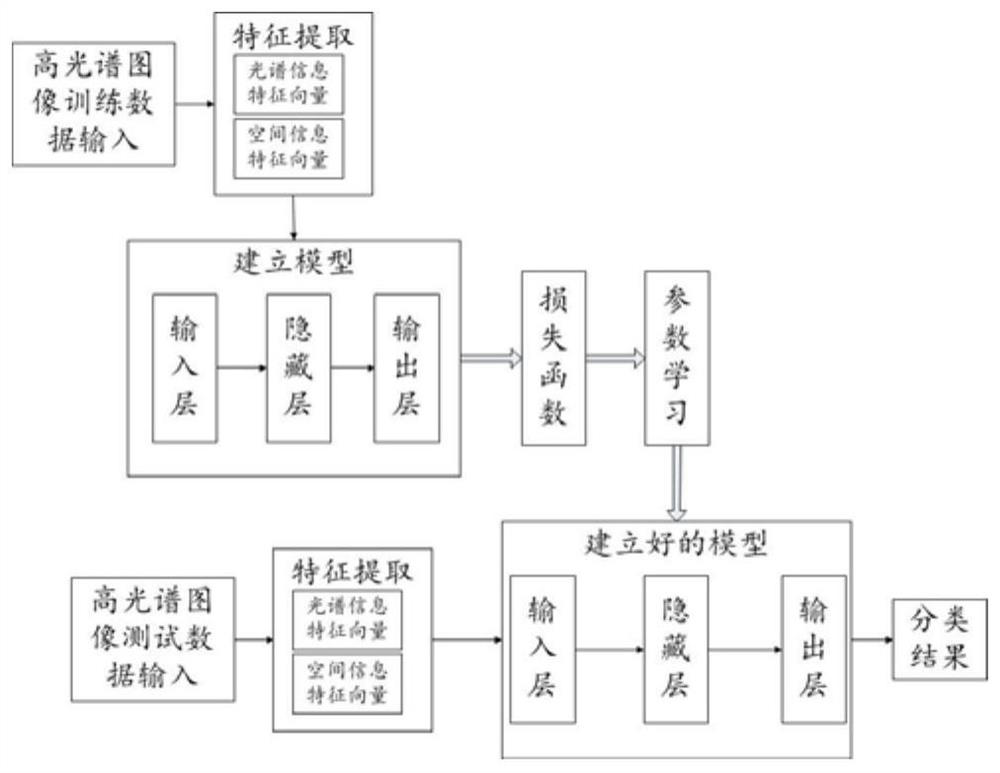

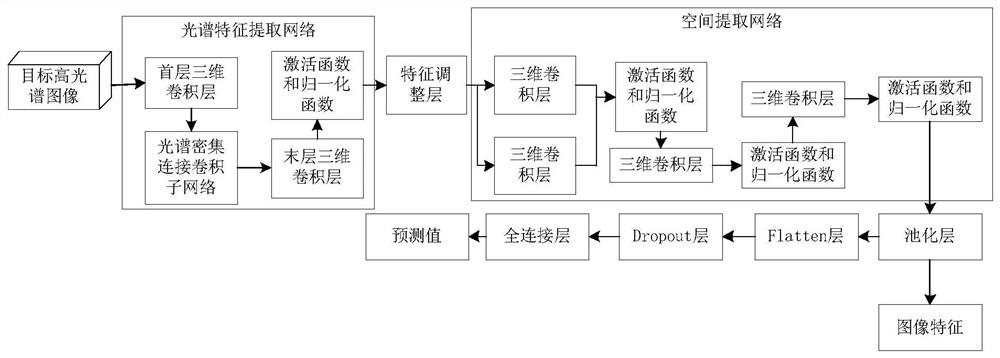

Method used


Examples

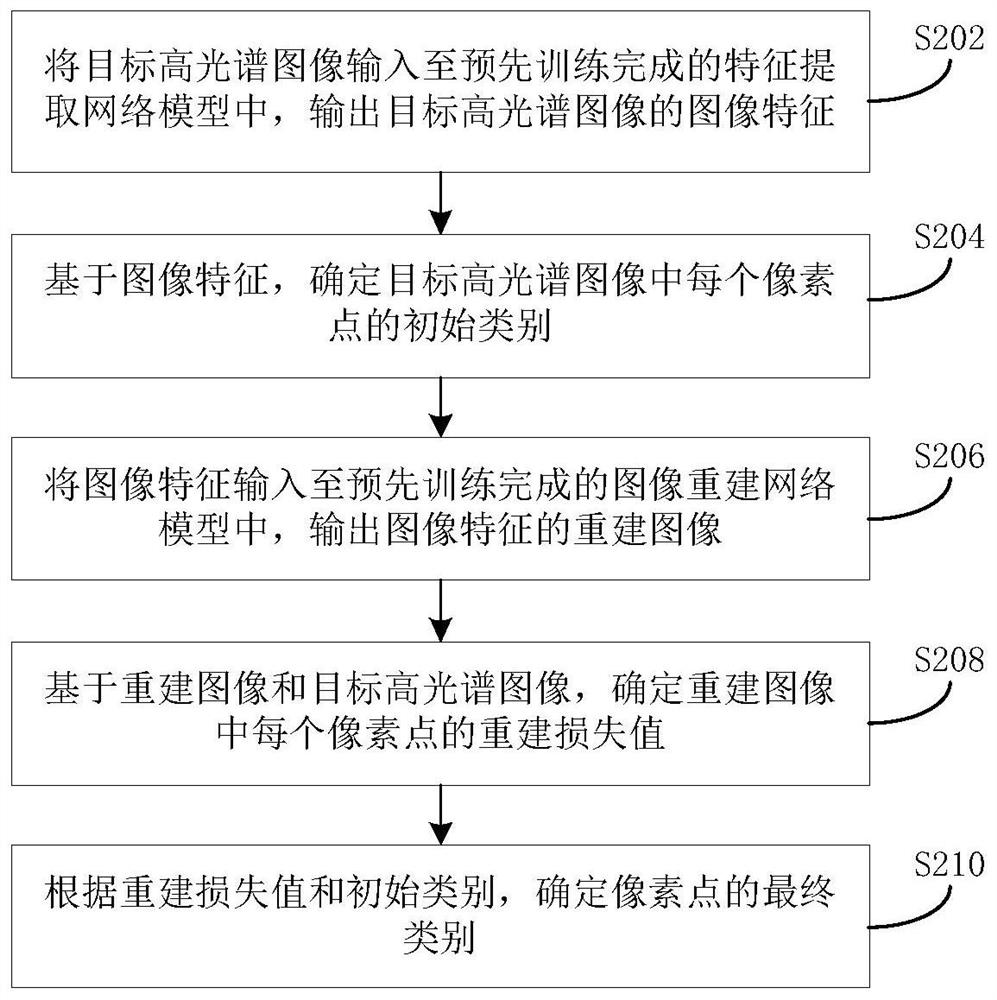

Embodiment approach

[0073] (1) Based on the reconstruction loss value, use a pre-established probability model of the reconstruction loss value to calculate the probability that the reconstruction loss value exceeds the preset loss threshold;

[0074] The probability model is:

[0075]

[0076] In the above formula, $G_{\xi,u}(v)$ denotes the probability model, $v$ the reconstruction loss value, $\xi$ the shape parameter, and $u$ the scale parameter.
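The formula in [0075] did not survive extraction, so its exact form is unknown here. A two-parameter (shape and scale) model for the probability that a loss exceeds a threshold is commonly a generalized Pareto distribution (GPD) from extreme value theory. The sketch below assumes that form, with the scale written as `sigma`; the function names `gpd_cdf` and `exceedance_probability` are hypothetical and not taken from the patent.

```python
import math


def gpd_cdf(y: float, xi: float, sigma: float) -> float:
    """Assumed GPD CDF for an excess y over the threshold.

    G(y) = 1 - (1 + xi * y / sigma) ** (-1 / xi), and 1 - exp(-y / sigma)
    in the xi -> 0 limit. Excesses y <= 0 get probability 0.
    """
    if sigma <= 0:
        raise ValueError("scale parameter sigma must be positive")
    if y <= 0:
        return 0.0
    if abs(xi) < 1e-12:
        # Exponential limit of the GPD when the shape parameter is zero.
        return 1.0 - math.exp(-y / sigma)
    z = 1.0 + xi * y / sigma
    if z <= 0:
        # For xi < 0 the support ends at y = -sigma / xi; beyond it G = 1.
        return 1.0
    return 1.0 - z ** (-1.0 / xi)


def exceedance_probability(v: float, threshold: float, xi: float, sigma: float) -> float:
    """Hypothetical helper: P(loss > v | loss > threshold) under the assumed GPD tail."""
    return 1.0 - gpd_cdf(v - threshold, xi, sigma)
```

For example, with `xi=0.5`, `sigma=1.0`, a threshold of `1.0` and a loss of `3.0`, the excess is 2, so the tail probability is (1 + 0.5·2)^(-2) = 0.25. A small tail probability flags a loss that is unusually large relative to the fitted distribution.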

[0077] The probability model describes the conditional probability that the reconstruction loss value exceeds the preset loss threshold. It can be obtained as follows:

[0078] Collect the reconstruction loss values obtained during training and testing and plot a histogram of them. Figure 6 shows the distribution histogram of the reconstruction loss values, where (a) in the figure represents the hist...
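Step [0078] can be sketched as binning the collected loss values into a histogram. The description is truncated before the fitting procedure, so the second function is an assumption: it estimates shape and scale from the excesses over the preset threshold with the method-of-moments estimator for a generalized Pareto distribution (valid for shape below 1/2). The function names, bin count, and threshold are hypothetical, and the patent's own fitting method may differ.

```python
from statistics import mean, variance


def histogram(values, n_bins):
    """Equal-width histogram of loss values; returns (counts, bin edges)."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins or 1.0  # guard against all-equal values
    counts = [0] * n_bins
    for x in values:
        # Clamp so the maximum value falls into the last bin.
        idx = min(int((x - lo) / width), n_bins - 1)
        counts[idx] += 1
    edges = [lo + i * width for i in range(n_bins + 1)]
    return counts, edges


def fit_gpd_moments(values, threshold):
    """Hypothetical method-of-moments GPD fit to excesses over `threshold`.

    Uses mean = sigma / (1 - xi) and var = sigma^2 / ((1 - xi)^2 (1 - 2 xi)),
    giving xi = (1 - m^2 / s^2) / 2 and sigma = m (1 + m^2 / s^2) / 2.
    Also returns the fraction of values that exceed the threshold.
    """
    excesses = [x - threshold for x in values if x > threshold]
    if len(excesses) < 2:
        raise ValueError("need at least two exceedances to estimate the variance")
    m = mean(excesses)
    s2 = variance(excesses)  # sample variance (n - 1 denominator)
    if s2 == 0:
        raise ValueError("excesses have zero variance")
    ratio = m * m / s2
    xi = 0.5 * (1.0 - ratio)
    sigma = 0.5 * m * (1.0 + ratio)
    return xi, sigma, len(excesses) / len(values)
```

The moment estimator is chosen here only because it is closed-form and short; maximum-likelihood fitting is the more common choice when many exceedances are available.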
