Accent classification method based on deep neural network and model thereof

A deep-neural-network-based classification technology, applied in the field of accent classification methods and models, that addresses problems such as an inaccurate learning process and difficulty in accent detection and recognition.

Embodiment Construction

[0056] The preferred embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings, so that the advantages and features of the present invention can be more easily understood by those skilled in the art, so as to define the protection scope of the present invention more clearly.

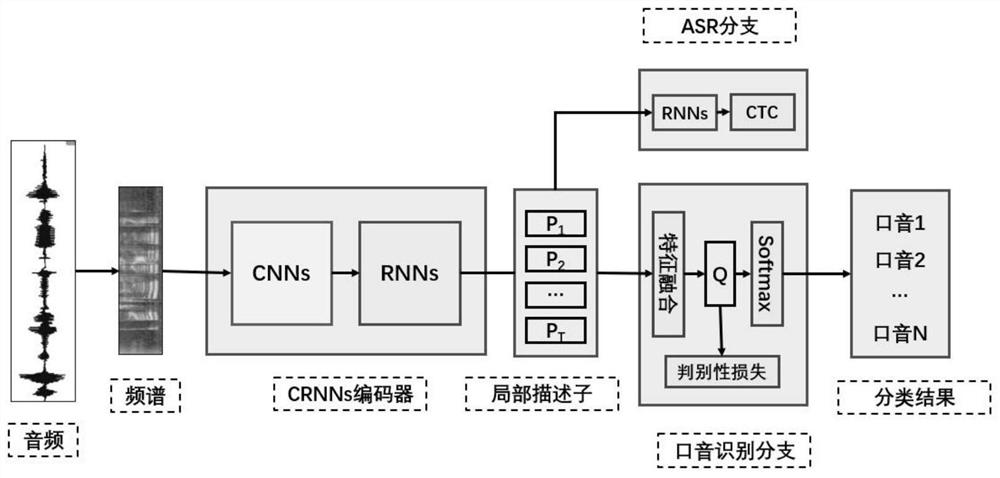

[0057] Referring to Figure 1, the embodiment of the present invention includes:

[0058] An accent classification method based on a deep neural network, comprising the following steps:

[0059] S1: Extract the frame-level frequency-domain features of the original audio, and construct a 2D spectrogram as the network input X;

[0060] To preprocess the input spectrogram X, frequency-domain features commonly used in speech recognition tasks, such as MFCC or FBANK, are extracted from each frame of the speech signal to construct a 2D spectrogram; one additional dimension is then added so that the CNN can operate on it.
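The preprocessing in step S1 can be illustrated with a minimal NumPy sketch of FBANK (log-mel filterbank) extraction followed by the dimension expansion. It is not taken from the patent: the function names, the 16 kHz sample rate, the 25 ms frame and 10 ms hop, the 40 mel bands, and the choice to place the added dimension first (channel-first) are all assumptions made for illustration.

```python
import numpy as np

def hz_to_mel(hz):
    # Common HTK-style mel scale conversion.
    return 2595.0 * np.log10(1.0 + hz / 700.0)

def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular mel filters, shape (n_mels, n_fft // 2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_mels + 2)
    hz_points = mel_to_hz(mel_points)
    fft_freqs = np.linspace(0.0, sample_rate / 2.0, n_fft // 2 + 1)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(n_mels):
        left, center, right = hz_points[m], hz_points[m + 1], hz_points[m + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def fbank_features(signal, sample_rate=16000, frame_len=400, hop=160,
                   n_fft=512, n_mels=40):
    """Frame-level log-mel (FBANK) features, shape (num_frames, n_mels).

    Assumes len(signal) >= frame_len (hypothetical default parameters).
    """
    num_frames = 1 + (len(signal) - frame_len) // hop
    # Index matrix that slices the signal into overlapping frames.
    idx = np.arange(frame_len)[None, :] + hop * np.arange(num_frames)[:, None]
    frames = signal[idx] * np.hamming(frame_len)
    # Power spectrum of each frame (frames are zero-padded to n_fft).
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sample_rate).T
    return np.log(mel_energy + 1e-10)

def build_network_input(signal, **kwargs):
    """Build the 2D spectrogram and add one dimension for CNN operation.

    The channel-first layout (1, T, F) is an assumption; the source only
    states that one dimension is expanded.
    """
    spec = fbank_features(signal, **kwargs)  # (T, F)
    return spec[np.newaxis, :, :]            # (1, T, F)
```

With these assumed defaults, one second of 16 kHz audio gives `build_network_input(signal).shape == (1, 98, 40)`: 98 frames of 40 filterbank values with a leading singleton channel. MFCC features would add a discrete cosine transform over the log-mel axis, which is omitted here.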

[0061] S2: Construct a multi-task weight...
