Lane line detection method and system

A lane line detection technology in the field of image recognition that addresses lane line occlusion, missed lane line detection, and limited computing power, with the effects of improving accuracy, alleviating missed lane line detection, and enhancing feature expression.


Examples

Embodiment 1

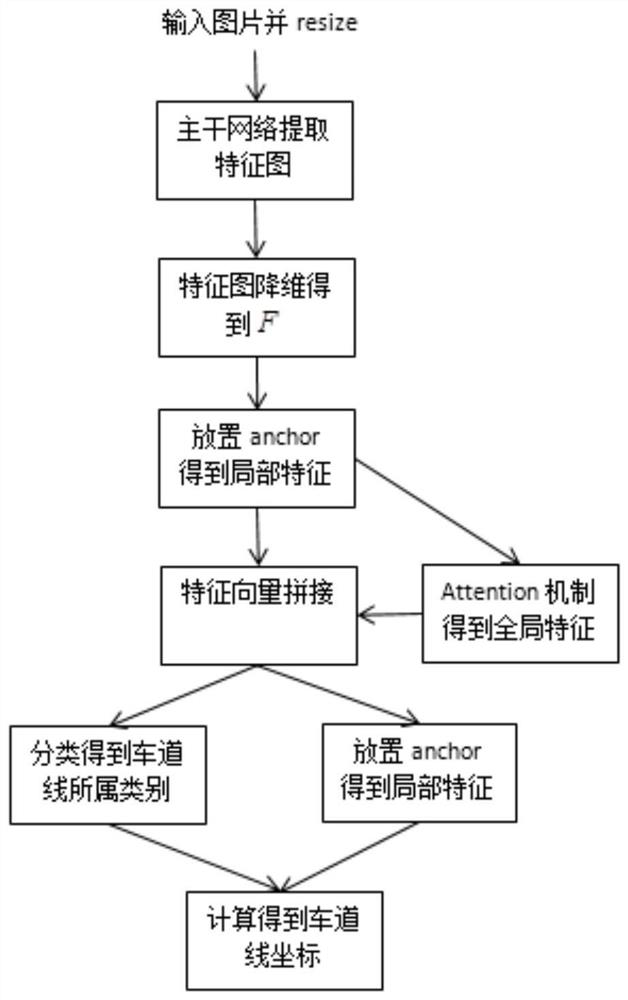

[0037] As shown in Figure 1, the lane line detection method, which constructs a lane line detection model, includes the following steps:

[0038] S1: Input the image to be detected, which contains the lane. The image is captured by a camera mounted at the front of the intelligent driving vehicle and is resized to 320×640.
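The resizing in S1 can be sketched as follows. The patent does not state the interpolation method or the axis order, so this minimal sketch assumes nearest-neighbour sampling, an H × W × C image layout, and 320 as the height and 640 as the width; `resize_nearest` is a hypothetical helper name.

```python
import numpy as np

def resize_nearest(image: np.ndarray, out_h: int = 320, out_w: int = 640) -> np.ndarray:
    """Nearest-neighbour resize of an H x W (x C) image.

    Assumption: 320 is the output height and 640 the output width.
    """
    in_h, in_w = image.shape[:2]
    # Map each output pixel centre back to the nearest input pixel index.
    rows = np.minimum(((np.arange(out_h) + 0.5) * in_h / out_h).astype(int), in_h - 1)
    cols = np.minimum(((np.arange(out_w) + 0.5) * in_w / out_w).astype(int), in_w - 1)
    # Broadcast row/column indices to gather an out_h x out_w grid.
    return image[rows[:, None], cols[None, :]]

if __name__ == "__main__":
    frame = np.random.randint(0, 256, size=(720, 1280, 3), dtype=np.uint8)
    print(resize_nearest(frame).shape)  # (320, 640, 3)
```

In practice a library resize with bilinear interpolation would usually be used; nearest-neighbour is chosen here only to keep the sketch dependency-free.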

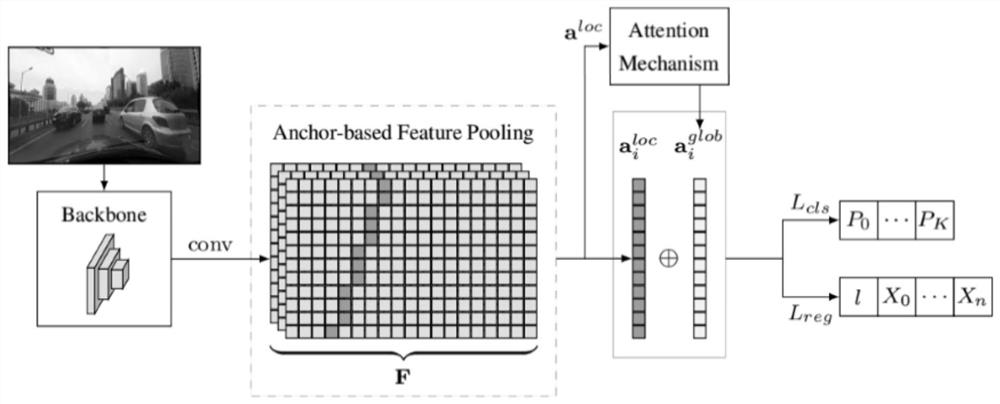

[0039] S2: As shown in Figure 3, use a ResNet34 network as the backbone to extract the feature map F, and add a DCT-based Global Context Block to the last layer of stages c3, c4, and c5 of the backbone (i.e., the 3rd, 4th, and 5th convolutional blocks of ResNet34) to extract global context features and strengthen the lane features.
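The text does not say how the DCT enters the block. A minimal sketch, assuming it follows the GCNet layout (context modelling, bottleneck transform, broadcast-add fusion) with the attention-pooling step swapped for FcaNet-style 2-D DCT frequency pooling; the function names, frequency choices, reduction ratio, and channel sizes are all hypothetical, and the actual block is the one drawn in Figure 4(a) and Figure 4(b).

```python
import numpy as np

def dct_basis(h: int, w: int, u: int, v: int) -> np.ndarray:
    """2-D DCT-II basis function of frequency (u, v) on an h x w grid."""
    rows = np.cos(np.pi * u * (np.arange(h) + 0.5) / h)
    cols = np.cos(np.pi * v * (np.arange(w) + 0.5) / w)
    return np.outer(rows, cols)

def dct_pool(x: np.ndarray, freqs) -> np.ndarray:
    """Pool a C x H x W map to a length-C vector (assumed FcaNet-style).

    Channels are split into len(freqs) groups; group k is projected onto
    the DCT basis freqs[k]. Frequency (0, 0) reduces to a plain spatial sum,
    i.e. H*W times global average pooling.
    """
    c, h, w = x.shape
    z = np.empty(c)
    for idx, (u, v) in zip(np.array_split(np.arange(c), len(freqs)), freqs):
        z[idx] = np.tensordot(x[idx], dct_basis(h, w, u, v), axes=([1, 2], [0, 1]))
    return z

def layer_norm(v: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Layer normalisation without learned affine parameters."""
    return (v - v.mean()) / np.sqrt(v.var() + eps)

def dct_gc_block(x, w1, w2, freqs):
    """Hypothetical DCT-based global context block.

    x: C x H x W feature map; w1: (C//r, C); w2: (C, C//r).
    """
    z = dct_pool(x, freqs)                          # context modelling via DCT pooling
    t = w2 @ np.maximum(layer_norm(w1 @ z), 0.0)    # bottleneck: 1x1 conv, LN, ReLU, 1x1 conv
    return x + t[:, None, None]                     # broadcast-add fusion at every position

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    c, r = 256, 16                                  # hypothetical channels and reduction ratio
    x = rng.standard_normal((c, 20, 40))            # c4-sized map (stride 16) for a 320x640 input
    w1 = rng.standard_normal((c // r, c)) * 0.01
    w2 = rng.standard_normal((c, c // r)) * 0.01
    y = dct_gc_block(x, w1, w2, freqs=[(0, 0), (0, 1), (1, 0), (1, 1)])
    print(y.shape)  # (256, 20, 40)
```

Under this assumption the fused context term is added at every spatial position, so the block leaves the feature map shape unchanged and can be placed at the end of c3, c4, and c5 without altering the rest of ResNet34.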

[0040] In Figure 3, backbone denotes the backbone network, conv denotes a 1×1 convolutional layer, and $L_{cls}$ and $L_{reg}$ denote two parallel fully connected networks.
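A minimal sketch of the two parallel heads $L_{cls}$ and $L_{reg}$, assuming each anchor has already been reduced to a fixed-length feature vector and that the regression head outputs a set of lateral offsets followed by one length value (the outputs named in the training process). The function names, the 2-way softmax, and all sizes are hypothetical.

```python
import numpy as np

def linear(x: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Fully connected layer: x (N, D_in), w (D_in, D_out), b (D_out,)."""
    return x @ w + b

def predict(features, cls_params, reg_params):
    """Apply the classification and regression heads to the same features.

    Returns class probabilities (N, n_classes), lateral offsets
    (N, n_offsets) and one length value per anchor (N,).
    """
    logits = linear(features, *cls_params)
    logits -= logits.max(axis=1, keepdims=True)    # numerically stable softmax
    probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
    reg = linear(features, *reg_params)            # last column assumed to be the length
    return probs, reg[:, :-1], reg[:, -1]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_anchors, feat_dim, n_offsets = 1000, 640, 72  # hypothetical sizes
    feats = rng.standard_normal((n_anchors, feat_dim))
    cls = (rng.standard_normal((feat_dim, 2)) * 0.01, np.zeros(2))
    reg = (rng.standard_normal((feat_dim, n_offsets + 1)) * 0.01, np.zeros(n_offsets + 1))
    probs, offsets, length = predict(feats, cls, reg)
    print(probs.shape, offsets.shape, length.shape)  # (1000, 2) (1000, 72) (1000,)
```

Because both heads read the same feature vector, they can be evaluated in parallel and trained with separate classification and regression losses.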

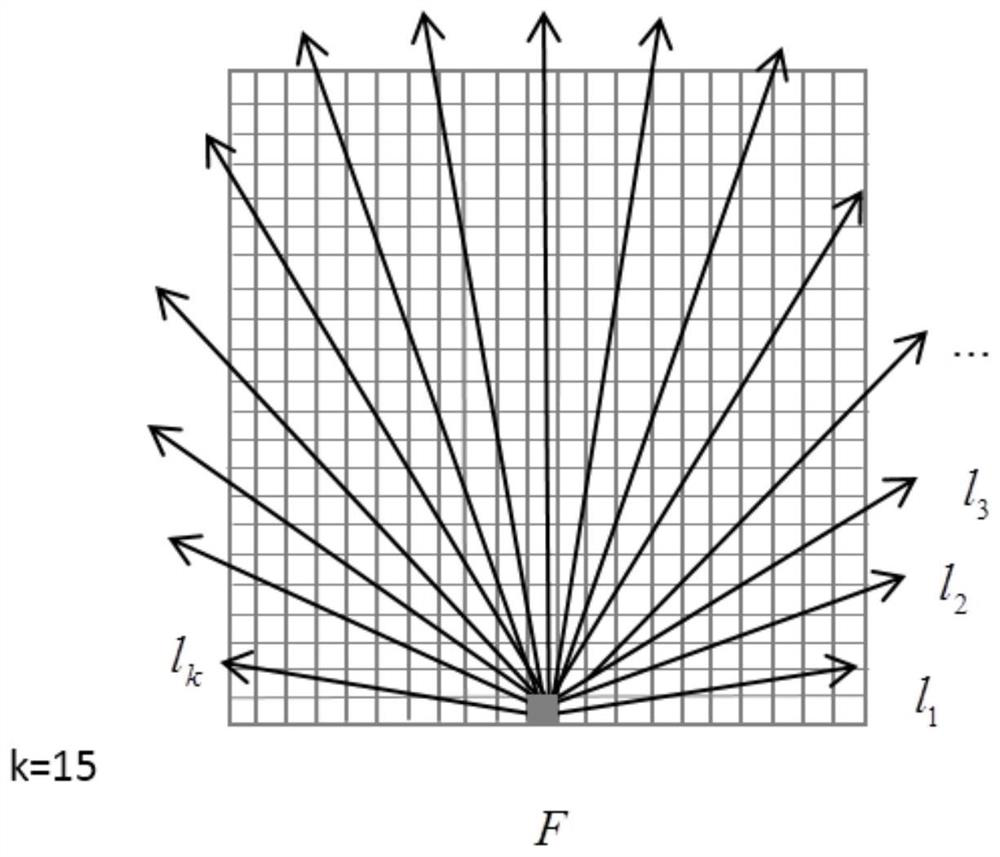

[0041] S3: Use a 1×1 convolutional layer to reduce the dimensionality of the feature map $F_{back}$ to ob...
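A 1×1 convolution is a per-pixel linear map across channels, so the dimensionality reduction in S3 can be sketched as a single `einsum`. The channel counts are assumptions: 512 channels at stride 32 (a 10×20 map for a 320×640 input) matches ResNet34's c5 stage, while the reduced width of 64 is hypothetical.

```python
import numpy as np

def conv1x1(feature: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Apply a 1x1 convolution to a C_in x H x W feature map.

    weight has shape (C_out, C_in); bias has shape (C_out,).
    Every spatial position is transformed by the same channel-mixing matrix.
    """
    return np.einsum("oc,chw->ohw", weight, feature) + bias[:, None, None]

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    f_back = rng.standard_normal((512, 10, 20))  # assumed c5 output for a 320x640 input
    w = rng.standard_normal((64, 512)) * 0.01    # hypothetical reduced width of 64
    b = np.zeros(64)
    print(conv1x1(f_back, w, b).shape)  # (64, 10, 20)
```

Reducing channels before the fully connected heads shrinks their input size, which is one way to keep the model within limited on-vehicle computing power.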

Embodiment 2

[0051] A lane line detection system, comprising:

[0052] An acquisition module, used to obtain the image to be detected;

[0053] A feature map acquisition module, used to obtain the feature map of the image to be detected through a ResNet34 network equipped with DCT-based Global Context Blocks; the structure of the DCT-based Global Context Block is shown schematically in Figure 4(a) and Figure 4(b);

[0054] A feature map dimensionality reduction module, which uses a 1×1 convolutional layer to reduce the dimensionality of the feature map output by the feature map acquisition module;

[0055] A prediction module, consisting of two parallel fully connected networks, one for classification and one for regression.

[0056] The above five parts constitute the entire detection model.
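Written out as equations, one plausible form of the DCT-based Global Context Block used by the feature map acquisition module is given below. This is an assumption modelled on GCNet's context-modelling, transform, and fusion structure with FcaNet-style 2-D DCT pooling: $X \in \mathbb{R}^{C\times H\times W}$ is the input feature map, $(u_c, v_c)$ is the frequency assigned to channel $c$, $\mathrm{LN}$ is layer normalisation, and $W_1$, $W_2$ are the two 1×1 convolutions of the bottleneck.

$$
B^{u,v}_{h,w} = \cos\!\left(\frac{\pi u}{H}\left(h + \tfrac{1}{2}\right)\right)\cos\!\left(\frac{\pi v}{W}\left(w + \tfrac{1}{2}\right)\right),
\qquad
z_c = \sum_{h=0}^{H-1}\sum_{w=0}^{W-1} X_{c,h,w}\, B^{u_c,v_c}_{h,w}
$$

$$
Y_{c,h,w} = X_{c,h,w} + \Big[W_2\,\mathrm{ReLU}\big(\mathrm{LN}(W_1 z)\big)\Big]_c
$$

For $(u_c, v_c) = (0, 0)$ the basis is identically 1, so $z_c = HW \cdot \frac{1}{HW}\sum_{h,w} X_{c,h,w}$ is a scaled global average pool; non-zero frequencies let the pooled descriptor respond to spatial structure, such as elongated lane markings, that plain averaging discards.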

[0057] Model training process:

[0058] K-means pre-computes the anchor angles → input image → the detection model outputs the predicted category, offset values, and length → calculate the...
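The anchor-angle step can be sketched as one-dimensional k-means (Lloyd's algorithm) over lane angles. Assumptions: the angles are in degrees, are measured from the training set's lane annotations, and do not wrap around; the number of clusters `k`, the quantile initialisation, and the name `kmeans_1d` are illustrative choices, not taken from the patent.

```python
import numpy as np

def kmeans_1d(values, k: int, iters: int = 100) -> np.ndarray:
    """Lloyd's k-means on scalar values such as lane angles in degrees."""
    values = np.asarray(values, dtype=float)
    # Deterministic initialisation: k evenly spaced interior quantiles.
    centers = np.quantile(values, np.linspace(0, 1, k + 2)[1:-1])
    for _ in range(iters):
        # Assign each angle to its nearest centre.
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        # Move each centre to the mean of its members (keep it if empty).
        new = np.array([values[labels == j].mean() if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return np.sort(centers)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic annotation angles around three typical lane directions.
    angles = np.concatenate([rng.normal(m, 3.0, 200) for m in (30.0, 90.0, 150.0)])
    print(kmeans_1d(angles, k=3))
```

The resulting centres would then serve as the fixed anchor angles before training, so anchors concentrate on directions that lanes actually take.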

