System, method and device for labeling continuous frame data

A technology concerning continuous frame data, applied in the field of continuous frame data labeling systems. It addresses problems such as the negative impact on neural network training, the complexity of the data form, and labor-intensive labeling, with the effects of improving labeling speed and accuracy, reducing the labeling workload, and reducing the cost of labeling.

Examples

Embodiment 1

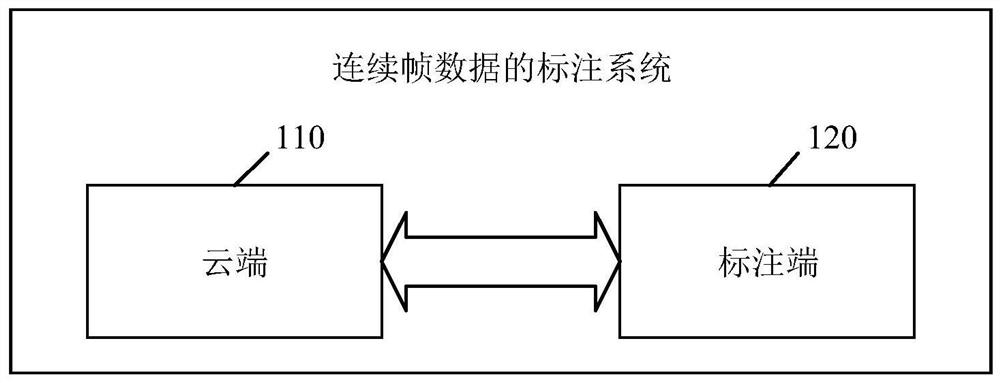

[0068] Referring to Figure 1, Figure 1 is a schematic structural diagram of a labeling system for continuous frame data provided by an embodiment of the present invention. The system can be applied to autonomous driving, where it can generate large amounts of labeled data for model training more quickly and efficiently. As shown in Figure 1, the continuous frame data labeling system provided in this embodiment specifically includes a cloud 110 and a labeling terminal 120, wherein:

[0069] The cloud 110 is configured to obtain a labeling task, where the labeling task includes the category, location and output file format of the object to be labeled;

[0070] Here, the labeling task serves as prior information for the labeling process and includes the object to be labeled (such as vehicles or pedestrians), the category of the object to be labeled (such as tricycle, bus or car), the preset size, the output file format of the labeling file, and so on. The l...
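
The labeling task described in [0069] and [0070] acts as a small prior-information record. The sketch below is one possible way to hold it, assuming hypothetical field names (`object_types`, `categories`, `preset_size`, `output_format`) and two assumed output formats, JSON and CSV; the patent does not specify a schema.

```python
import json
from dataclasses import dataclass


@dataclass
class LabelingTask:
    """Hypothetical container for the labeling task (prior information)."""
    object_types: list[str]                 # e.g. ["vehicle", "pedestrian"]
    categories: dict[str, list[str]]        # object type -> fine-grained categories
    preset_size: tuple[float, float, float]  # assumed (length, width, height) in metres
    output_format: str = "json"             # assumed supported formats: "json", "csv"

    def accepts(self, category: str) -> bool:
        """Return True if a category belongs to any object type in this task."""
        return any(category in cats for cats in self.categories.values())

    def serialize(self, labels: list[dict]) -> str:
        """Write labels in the task's (assumed) output file format."""
        if self.output_format == "json":
            return json.dumps(labels, sort_keys=True)
        if self.output_format == "csv":
            rows = ["frame,category,x,y"]
            rows += [f"{lab['frame']},{lab['category']},{lab['x']},{lab['y']}"
                     for lab in labels]
            return "\n".join(rows)
        raise ValueError(f"unsupported output format: {self.output_format}")
```

For example, a task with `categories={"vehicle": ["tricycle", "bus", "car"]}` accepts `"bus"` but rejects `"tree"`. Keeping the output format on the task lets the same labels be exported in whatever format a given training pipeline expects.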

Embodiment 2

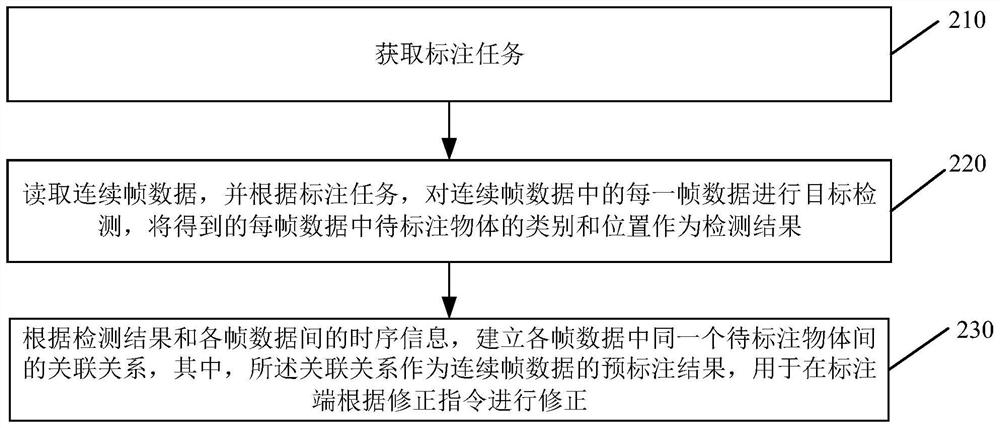

[0086] Referring to Figure 2, Figure 2 is a schematic flowchart of a method for labeling continuous frame data applied to the cloud, provided by an embodiment of the present invention. The method of this embodiment can be performed by a device for labeling continuous frame data, which can be implemented in software and/or hardware and can generally be integrated into cloud servers such as Alibaba Cloud and Baidu Cloud; the embodiments of the present invention are not limited in this respect. As shown in Figure 2, the method provided in this embodiment specifically includes:

[0087] 210. Obtain the labeling task.

[0088] Here, the labeling task includes the category and position of the object to be labeled.

[0089] 220. Read the continuous frame data, perform target detection on each frame of the continuous frame data according to the labeling task, and use the category and position of the object to be labeled obtained in each frame as the ...
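
Steps 210 and 220 amount to a per-frame detection loop whose filtered outputs become pre-labels. The following is a minimal sketch, assuming a hypothetical `detect` callable standing in for whatever detector the cloud runs, a hypothetical `Detection` record, and an assumed confidence threshold `min_score`; none of these names come from the patent.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Detection:
    """Hypothetical detector output: category, 2D position and confidence."""
    category: str
    x: float
    y: float
    score: float


def pre_label(frames: Iterable[dict],
              detect: Callable[[dict], list[Detection]],
              wanted: set[str],
              min_score: float = 0.5) -> list[dict]:
    """Run detection on every frame and keep category and position as pre-labels.

    Only detections whose category is requested by the labeling task and whose
    confidence reaches the (assumed) threshold are kept.
    """
    results = []
    for idx, frame in enumerate(frames):
        for det in detect(frame):
            if det.category in wanted and det.score >= min_score:
                results.append({"frame": idx, "category": det.category,
                                "x": det.x, "y": det.y})
    return results
```

With a stub detector that returns a confident `car` and a `tree` in frame 0 and a low-confidence `car` in frame 1, only the frame-0 car survives as a pre-label when the task asks for `{"car"}`.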

Embodiment 3

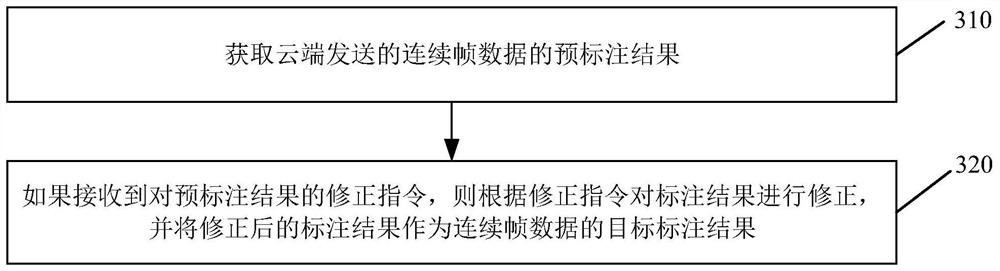

[0094] Referring to Figure 3, Figure 3 is a schematic flowchart of a method for labeling continuous frame data applied to the labeling terminal, provided by an embodiment of the present invention. The method can be executed by a device for labeling continuous frame data, which can be implemented in software and/or hardware and can generally be integrated into a labeling terminal. As shown in Figure 3, the method provided in this embodiment specifically includes:

[0095] 310. Obtain the pre-labeling result of the continuous frame data sent by the cloud.

[0096] 320. If a correction instruction for the pre-labeling result is received, correct the pre-labeling result according to the correction instruction, and use the corrected result as the target labeling result of the continuous frame data.

[0097] Here, the pre-labeling result is as follows: after the cloud reads the continuous frame data, according to the labeling task, the target detection result of the object to b...
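
Step 320 on the labeling terminal can be pictured as applying a list of annotator edits to the cloud's pre-labels. The sketch below assumes a hypothetical `Correction` record with four illustrative operations (`relabel`, `move`, `delete`, `add`); the patent does not define the form of a correction instruction.

```python
import copy
from dataclasses import dataclass
from typing import Optional


@dataclass
class Correction:
    """Hypothetical correction instruction issued by an annotator."""
    op: str                         # assumed ops: "relabel", "move", "delete", "add"
    index: Optional[int] = None     # which pre-label the instruction targets
    category: Optional[str] = None
    x: Optional[float] = None
    y: Optional[float] = None
    frame: Optional[int] = None     # only used by "add"


def apply_corrections(pre_labels: list[dict],
                      corrections: list[Correction]) -> list[dict]:
    """Return the target labeling result; pre_labels itself is left unmodified."""
    labels = copy.deepcopy(pre_labels)
    deleted: set[int] = set()
    added: list[dict] = []
    for c in corrections:
        if c.op == "relabel":
            labels[c.index]["category"] = c.category
        elif c.op == "move":
            labels[c.index]["x"], labels[c.index]["y"] = c.x, c.y
        elif c.op == "delete":
            deleted.add(c.index)  # deferred so indices stay stable during the loop
        elif c.op == "add":
            added.append({"frame": c.frame, "category": c.category,
                          "x": c.x, "y": c.y})
        else:
            raise ValueError(f"unknown correction op: {c.op}")
    kept = [lab for i, lab in enumerate(labels) if i not in deleted]
    return kept + added
```

Deferring deletions until all instructions are processed means every `index` refers to the original pre-label list, which keeps annotator edits independent of their order.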
