Pedestrian re-identification method based on feature sparse representation and multi-dictionary pair learning

A sparse representation and dictionary learning technique, applied to pedestrian identification in surveillance video. It addresses the complexity of pedestrian identification, the lack of theoretical basis and technical support, and the failure to treat different features appropriately, and it achieves the effect of compensating for visual limitations.


Embodiment Construction

[0084] In the following, with reference to the accompanying drawings, the technical solution of the pedestrian re-identification method based on feature sparse representation and multi-dictionary pair learning provided by the present invention is described further, so that those skilled in the art can better understand and implement the present invention.

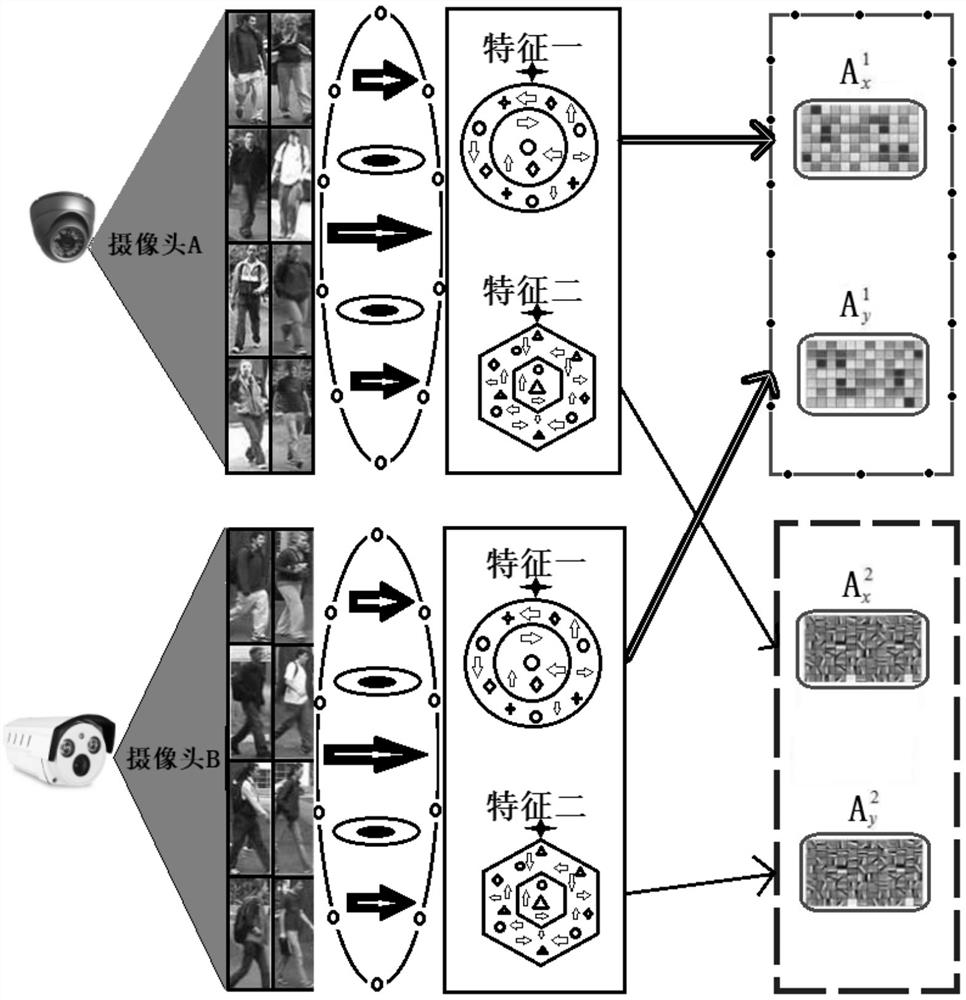

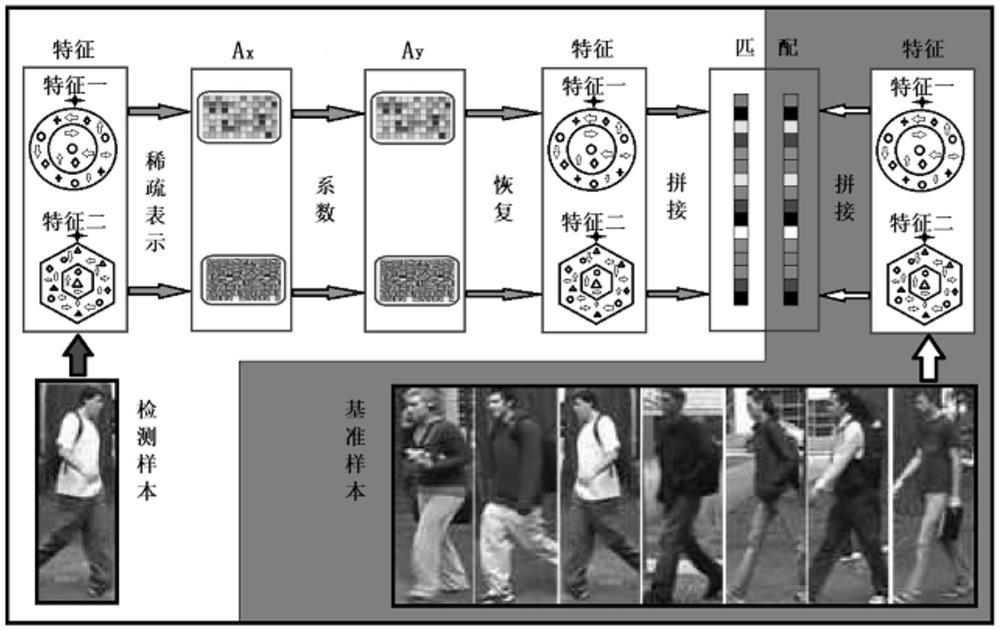

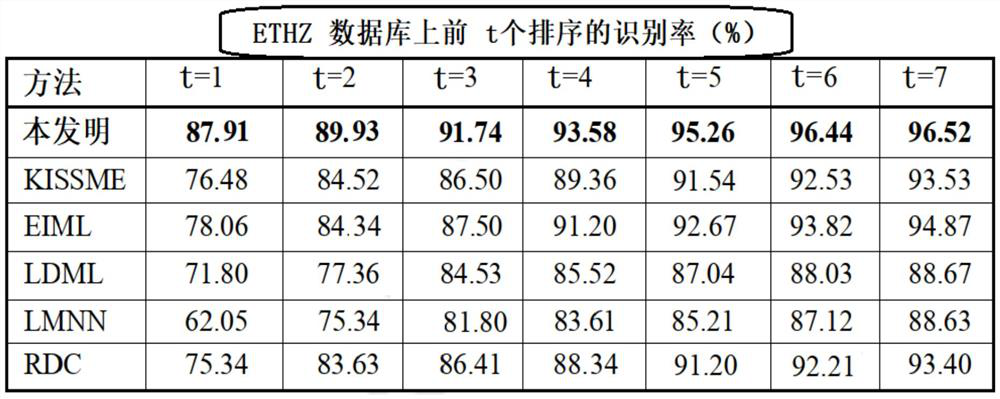

[0085] The present invention proposes a feature sparse representation multi-dictionary pair learning method for pedestrian re-identification that considers both the differences between cameras and the differences between features. In a real pedestrian re-identification environment, different types of features of the same image differ from one another, and even features of the same type (such as color features) differ between two cameras. Therefore, for each type of feature (such as color features), the present invention learns a coupled dictionary pair across the two cameras by sparse...
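The idea of a coupled dictionary pair for one feature type across two cameras can be illustrated with a minimal sketch. The objective function of the invention is not visible in the text above, so this is not the patented formulation. It substitutes a generic, well-known technique: features of the same pedestrian from both cameras are stacked, and a single joint dictionary is learned so that each pair shares one sparse code. All names (`learn_coupled_pair`, `sparse_code`, `Xa`, `Xb`, `lam`, `n_atoms`) and all parameter values are assumptions made for illustration.

```python
import numpy as np


def soft_threshold(v, t):
    """Elementwise soft-thresholding, the proximal operator of the L1 norm."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def sparse_code(D, X, lam, n_iter=100):
    """ISTA for min_A 0.5 * ||X - D A||_F^2 + lam * ||A||_1, started from A = 0."""
    L = np.linalg.norm(D, 2) ** 2 + 1e-12  # Lipschitz constant of the gradient
    A = np.zeros((D.shape[1], X.shape[1]))
    for _ in range(n_iter):
        grad = D.T @ (D @ A - X)
        A = soft_threshold(A - grad / L, lam / L)
    return A


def learn_coupled_pair(Xa, Xb, n_atoms=8, lam=0.1, n_outer=20, seed=0):
    """Learn dictionaries (Da, Db) whose atoms share one sparse code per pair.

    Xa, Xb: (dim, n_samples) arrays; column i of each holds the same feature
    type (e.g. a color descriptor) of the same pedestrian seen by camera A
    and camera B. This pairing is an assumption of the sketch.
    """
    rng = np.random.default_rng(seed)
    Z = np.vstack([Xa, Xb])  # stack the paired features
    D = rng.standard_normal((Z.shape[0], n_atoms))
    D /= np.linalg.norm(D, axis=0, keepdims=True)
    for _ in range(n_outer):
        A = sparse_code(D, Z, lam)  # shared sparse codes
        # Ridge-regularized least-squares dictionary update.
        D = Z @ A.T @ np.linalg.inv(A @ A.T + 1e-6 * np.eye(n_atoms))
        # Renormalize atoms; unused (all-zero) atoms stay zero.
        D /= np.maximum(np.linalg.norm(D, axis=0, keepdims=True), 1e-12)
    A = sparse_code(D, Z, lam)
    da = Xa.shape[0]
    return D[:da], D[da:], A  # camera-A dictionary, camera-B dictionary, codes


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    Xa = rng.standard_normal((12, 30))               # synthetic camera-A features
    Xb = Xa + 0.1 * rng.standard_normal((12, 30))    # perturbed camera-B view
    Da, Db, A = learn_coupled_pair(Xa, Xb)
    print(Da.shape, Db.shape, A.shape)
```

In a multi-dictionary setting, a routine like this would be run once per feature type (one pair for color, one for texture, and so on), giving one dictionary pair per feature. How the invention combines or weights those pairs is not stated in the visible text.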
