Vehicle infrared image compression and enhancement method and system

The invention relates to vehicle infrared imaging and is applied in the field of image processing. It addresses the low precision, low speed, and large image storage footprint of vision sensors, and achieves simple hardware implementation, a fast transmission rate, and a high compression rate.

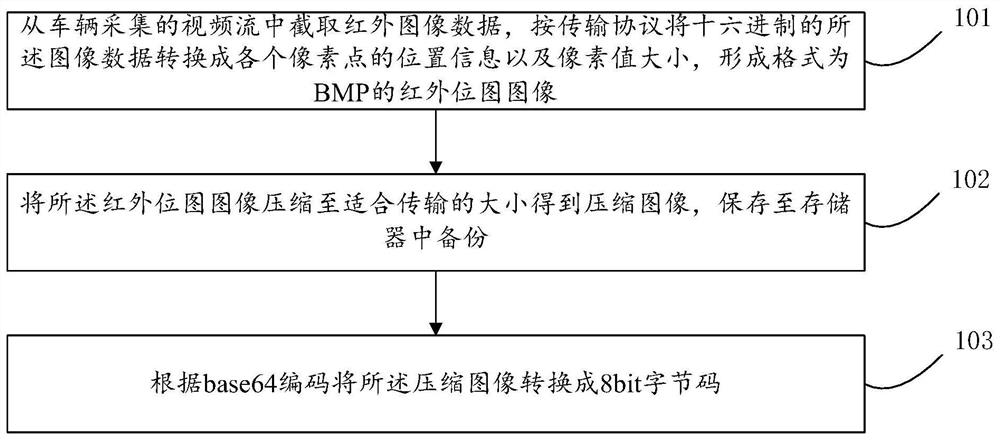

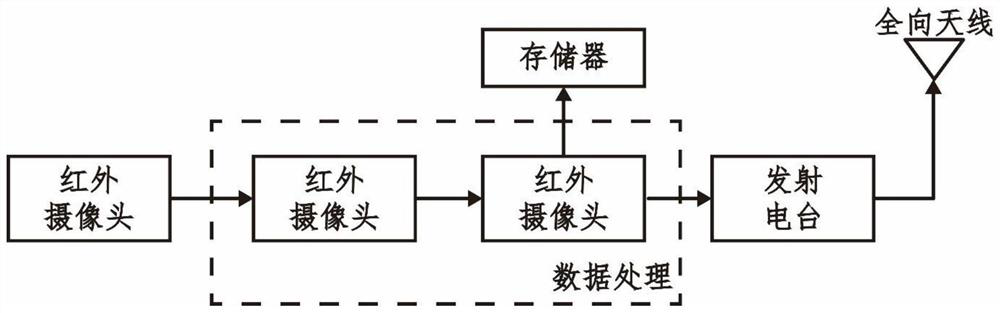

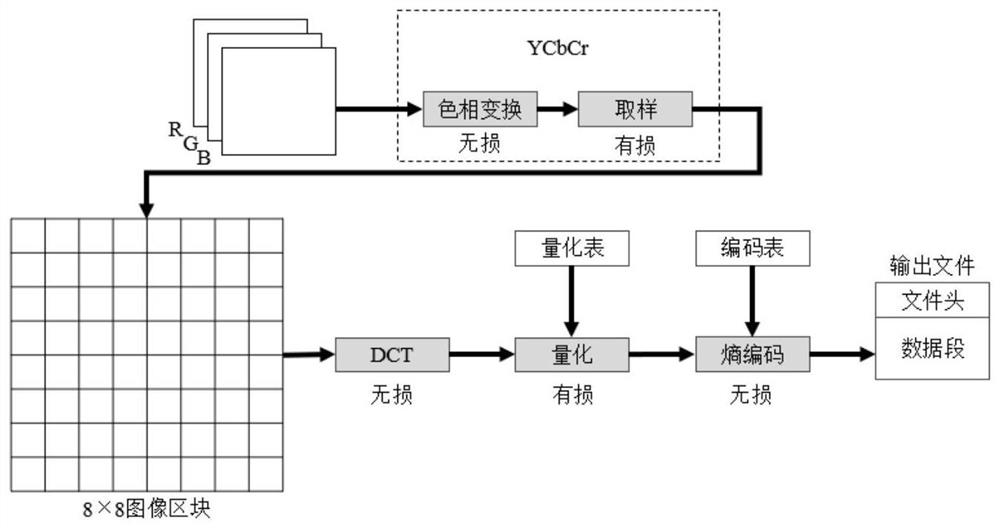

Embodiment Construction

[0092] The preferred embodiments of the present invention will be described below with reference to the accompanying drawings. It should be understood that the preferred embodiments described herein are only used to illustrate and explain the present invention, but not to limit the present invention.

[0093] A visible-light image is usually a planar energy distribution map. The energy may be emitted by a luminous object itself, or it may be reflected or transmitted by an object that is illuminated by a light source.

[0094] Digital images can be represented by two-dimensional discrete functions:

[0095] I=f(x, y)

[0096] Here, (x, y) represents the coordinates of an image pixel, and the function value f(x, y) represents the gray value of the pixel at those coordinates.

[0097] It can also be represented by a two-dimensional matrix:

[0098] I=A(M, N)

[0099] Here, A is the matrix representation, and M and N are the matrix row and column lengt...
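
The two representations above describe the same data, which a minimal Python sketch can illustrate. The pixel values, the 3x4 size, and the convention that x indexes the column while y indexes the row are assumptions chosen for illustration; the source does not specify them.

```python
# Hypothetical 3x4 grayscale image: M = 3 rows, N = 4 columns, 8-bit gray values.
A = [
    [12, 40, 80, 255],
    [0, 64, 128, 200],
    [30, 90, 150, 210],
]

M = len(A)      # number of matrix rows
N = len(A[0])   # number of matrix columns


def f(x, y):
    """Return the gray value at pixel (x, y).

    Assumption: x indexes the column and y indexes the row.
    """
    if not (0 <= x < N and 0 <= y < M):
        raise IndexError("pixel coordinates outside the image")
    return A[y][x]


print(M, N)     # 3 4
print(f(2, 1))  # 128
```

The function view I=f(x, y) and the matrix view I=A(M, N) differ only in how a pixel is addressed: evaluating f at a coordinate pair is the same as reading one matrix element.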
