End-to-end character image generation method guaranteeing consistent style

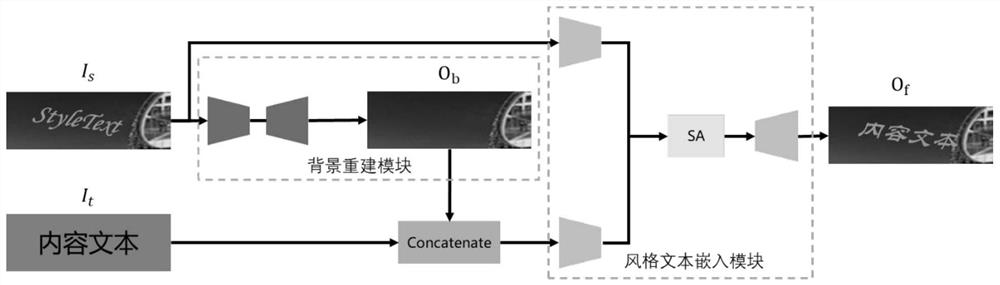

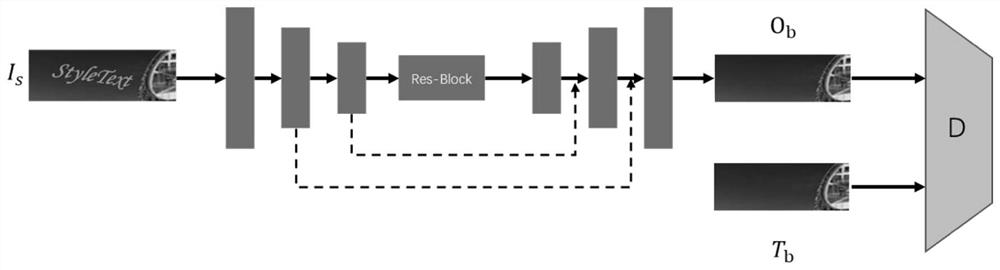

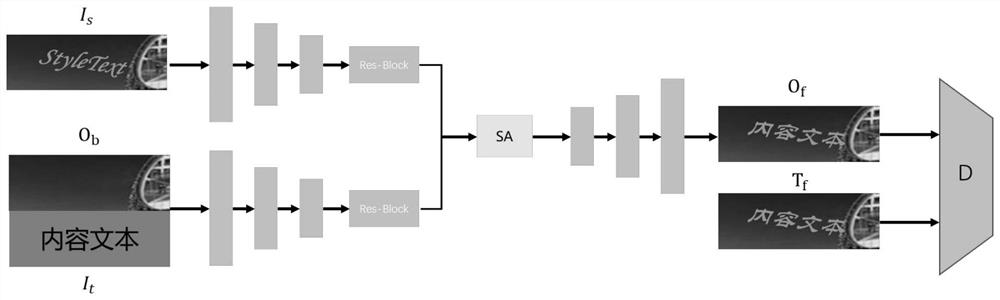

An image generation and style technology, applied in fields such as neural learning methods, editing/combining graphics or text, and electronic digital data processing. It addresses problems such as a tendency to generate artifacts, an inability to handle scenes with complex backgrounds, and difficulty in ensuring semantic coherence, and it achieves the effect of reducing losses in output quality and performance.

Embodiment

[0067] To make the object, technical solution, and advantages of the present invention clearer, the invention is described in detail below using natural scene images as an example.

[0068] The system development platform is the Linux operating system CentOS 7.2, and the GPU is an NVIDIA GeForce GTX TITAN X. The recognition program is written in Python 3.7 and uses the PyTorch 1.6 framework.

[0069] Because paired data showing the same image before and after text replacement does not exist in practice, and no related dataset is available, the training data is synthetic.

[0070] 1. Training data synthesis

[0071] Collect font files, text corpora, and images without text, and use them to generate the training data.
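As a rough illustration of how the collected resources might be combined, the sketch below draws a specification for one synthetic pair: the same font and background with two different words, one for the input image and one for the target image. The `SampleSpec` class, the `sample_spec` function, and all file paths are hypothetical names introduced here; the source does not describe the sampling procedure, and the actual rendering step is omitted.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SampleSpec:
    """One synthetic training pair: same font and background, different text."""
    font_path: str
    background_path: str
    source_text: str  # text rendered in the input image
    target_text: str  # text rendered in the ground-truth output image


def sample_spec(fonts, backgrounds, words, rng):
    """Draw one pair specification from the collected resources (assumed scheme)."""
    source_text, target_text = rng.sample(words, 2)  # two distinct list entries
    return SampleSpec(
        font_path=rng.choice(fonts),
        background_path=rng.choice(backgrounds),
        source_text=source_text,
        target_text=target_text,
    )


# Hypothetical resource lists; real ones would come from the collected files.
fonts = ["fonts/a.ttf", "fonts/b.ttf"]
backgrounds = ["bg/0001.jpg", "bg/0002.jpg", "bg/0003.jpg"]
words = ["hello", "world", "图像", "风格"]

rng = random.Random(0)  # fixed seed so the synthetic set is reproducible
specs = [sample_spec(fonts, backgrounds, words, rng) for _ in range(4)]
```

Keeping the specification separate from rendering makes it easy to regenerate the same dataset from a seed, or to swap in a different renderer later.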

[0072] Collect Chinese and English lexicons. An English lexicon (more than 160,000 words) and the Chinese lexicon THUOCL (more than 150,000 words) were collected from the Internet. To avoid cases in which a font file cannot render a particular Chinese character, delete words other than ...
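The exact filtering rule is cut off in the text above, so the following is only one plausible sketch of the idea: keep a word only if every character in it belongs to a set of characters the fonts are known to render. The function names and the `supported` set are assumptions made for illustration; in practice the set might be derived from each font file's character map.

```python
def renderable(word, supported_chars):
    """True if every character of the word is in the supported set."""
    return all(ch in supported_chars for ch in word)


def filter_lexicon(words, supported_chars):
    """Drop words containing any character outside the supported set."""
    return [w for w in words if renderable(w, supported_chars)]


# Hypothetical supported set: lowercase ASCII letters plus four Chinese characters.
supported = set("abcdefghijklmnopqrstuvwxyz") | set("图像风格")

lexicon = ["style", "图像", "風格", "naïve"]
kept = filter_lexicon(lexicon, supported)
print(kept)  # ['style', '图像']
```

Here "風格" is dropped because the traditional character "風" is not in the assumed set, and "naïve" is dropped because of "ï".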
