Mechanical arm autonomous moving grabbing method based on visual-tactile fusion under complex illumination condition

A method for autonomous movement and grasping under complex lighting conditions, applied in manipulators, program-controlled manipulators, manufacturing tools, etc. It addresses the inability to obtain the precise three-dimensional spatial position of the target object, prevents instability of the machine body, and improves both positioning accuracy and grasping accuracy.

Embodiment Construction

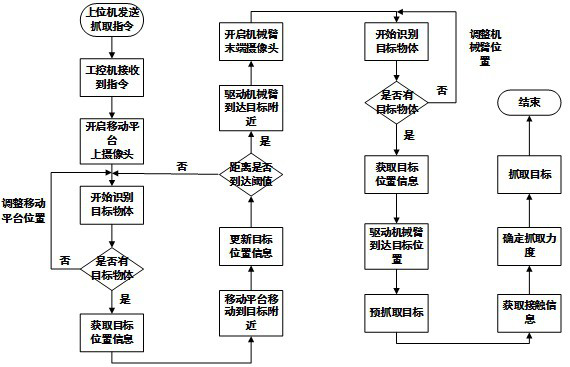

[0069] The present invention will be further explained below in conjunction with the accompanying drawings and specific embodiments. It should be understood that the following specific embodiments are only used to illustrate the present invention and are not intended to limit the scope of the present invention.

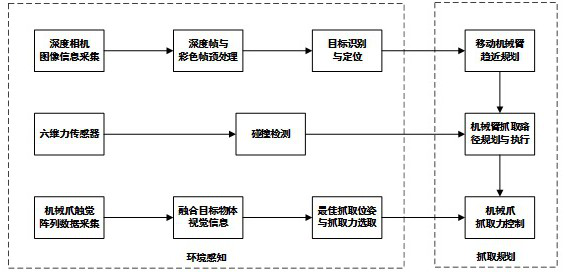

[0070] Embodiments of the present invention provide a method for autonomous moving and grasping by a robotic arm, based on visual-tactile fusion, under complex lighting conditions. The overall system block diagram is shown in Figure 1 and includes:

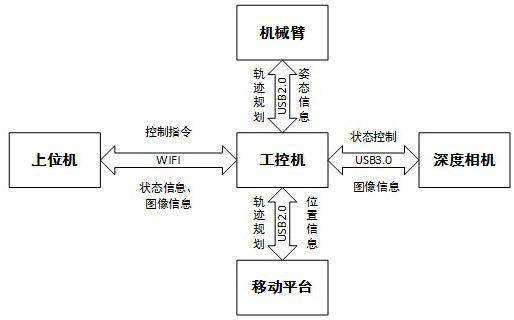

[0071] The communication module transmits control instructions, images, and pose information. It comprises the upper computer system installed on the remote console and the industrial computer system installed on the mobile platform. The two systems are connected over WiFi and communicate using the SSH protocol. The upper computer sends control instructions to the industrial computer, and the industrial computer...
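As an illustration of the upper-computer side of this link, the following minimal Python sketch packs a control instruction into JSON and builds the argument list for an `ssh` command-line call. It is a sketch under stated assumptions, not the patent's implementation: the message fields, the user and host values, and the remote program name `handle_instruction` are all hypothetical, and the source does not say how instructions are encoded.

```python
from __future__ import annotations

import json
import shlex
from dataclasses import asdict, dataclass


@dataclass
class ControlInstruction:
    """Hypothetical control message; the field names are illustrative only."""
    command: str   # e.g. "move_to" or "grasp" (assumed names)
    params: dict   # free-form parameters for the command


def build_ssh_argv(user: str, host: str, instruction: ControlInstruction,
                   remote_handler: str = "handle_instruction") -> list[str]:
    """Build an argument list that sends one instruction over SSH.

    `remote_handler` is an assumed program on the industrial computer that
    accepts the JSON payload as its single argument.
    """
    # Serialize the instruction deterministically.
    payload = json.dumps(asdict(instruction), sort_keys=True)
    # Quote the payload so the remote shell receives it as one argument.
    remote_cmd = f"{remote_handler} {shlex.quote(payload)}"
    return [
        "ssh",
        "-o", "BatchMode=yes",      # fail instead of prompting for a password
        "-o", "ConnectTimeout=5",   # give up quickly on a poor WiFi link
        f"{user}@{host}",
        remote_cmd,
    ]
```

On the upper computer, the returned list could be passed to `subprocess.run(argv, check=True)` to actually send the instruction. Building the argument list separately keeps the encoding step testable without a network connection.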
