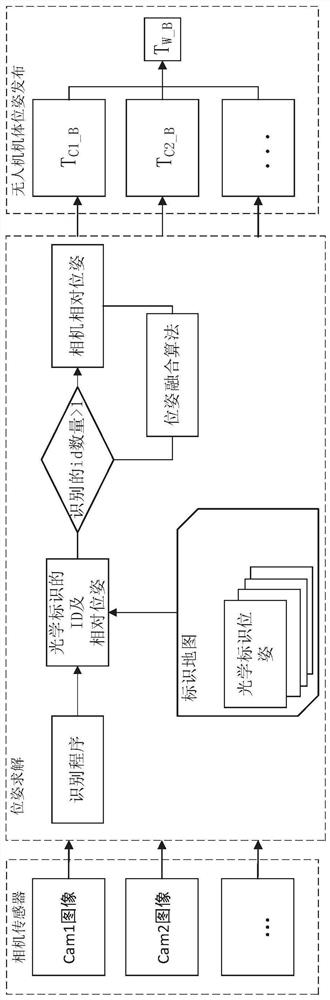

Multi-camera fusion unmanned aerial vehicle in-building navigation and positioning method

An in-building navigation and positioning technology, applied in navigation, mapping and navigation, navigation calculation tools, etc. It solves problems such as existing approaches being difficult to popularize and use and inadequate for positioning requirements, and achieves fewer positioning failures, faster data processing, and strong robustness.



Embodiment approach

[0088] According to a preferred embodiment of the present invention, after a camera obtains an observation of a passive optical marker, the observed value must be corrected.

[0089] Suppose camera $j$ observes optical marker $[i, T_{W\_\mathrm{Code}_i}, \Sigma_{\mathrm{Code}_i}]$ and its observed value is $\{T_{C_j\_\mathrm{Code}_i}, \Sigma_{C_j\_\mathrm{Code}_i}\}$, where $i \in [1, n]$.
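The notation in [0089] pairs each marker's known entry (index $i$, pose, uncertainty) with camera $j$'s measurement of that marker. The sketch below models both as plain Python records and shows one standard use of such a pair: recovering the camera's world pose as $T_{W\_C_j} = T_{W\_\mathrm{Code}_i} \, T_{C_j\_\mathrm{Code}_i}^{-1}$. It is a minimal illustration under stated assumptions, not the patent's implementation: the class and function names are hypothetical, poses are assumed to be 4x4 homogeneous rigid transforms in which $T_{A\_B}$ maps frame-$B$ coordinates into frame $A$, and the uncertainties are assumed to be 6x6 covariance matrices.

```python
from dataclasses import dataclass
from typing import List

Matrix = List[List[float]]  # row-major nested lists


@dataclass
class MarkerMapEntry:
    """Hypothetical record for a known marker [i, T_W_Code_i, Sigma_Code_i]."""
    marker_id: int
    T_W_code: Matrix    # 4x4 pose of marker i in the world frame W (assumed)
    sigma_code: Matrix  # 6x6 uncertainty of that pose (assumed shape)


@dataclass
class MarkerObservation:
    """Hypothetical record for camera j's measurement {T_Cj_Code_i, Sigma_Cj_Code_i}."""
    camera_id: int
    marker_id: int
    T_C_code: Matrix      # 4x4 pose of marker i in camera frame C_j (assumed)
    sigma_C_code: Matrix  # 6x6 uncertainty of the measurement (assumed shape)


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]


def invert_rigid(T: Matrix) -> Matrix:
    """Invert a rigid transform [R t; 0 1] as [R^T, -R^T t; 0 1]."""
    R_t = [[T[c][r] for c in range(3)] for r in range(3)]  # transpose of R
    t = [T[r][3] for r in range(3)]
    t_inv = [-sum(R_t[r][k] * t[k] for k in range(3)) for r in range(3)]
    return [R_t[r] + [t_inv[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def camera_pose_in_world(entry: MarkerMapEntry, obs: MarkerObservation) -> Matrix:
    """T_W_Cj = T_W_Code_i * inv(T_Cj_Code_i) for a matching marker (assumed convention)."""
    if entry.marker_id != obs.marker_id:
        raise ValueError("observation does not refer to this marker")
    return matmul4(entry.T_W_code, invert_rigid(obs.T_C_code))


def translation(x: float, y: float, z: float) -> Matrix:
    """Pure-translation pose, used only for the usage example below."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Usage: a marker at (1, 2, 0) in the world, seen 2 units along camera 0's z axis.
small_cov = [[0.01 if r == c else 0.0 for c in range(6)] for r in range(6)]
entry = MarkerMapEntry(3, translation(1.0, 2.0, 0.0), small_cov)
obs = MarkerObservation(0, 3, translation(0.0, 0.0, 2.0), small_cov)
T_W_C = camera_pose_in_world(entry, obs)
```

In this toy case both rotations are identity, so the recovered camera position is simply the marker position minus the observed offset, $(1, 2, -2)$. The covariance fields are carried along but not used here; how they are corrected is the subject of the following paragraphs.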

[0090] Correcting the observed value means correcting the uncertainty of the observed value.

[0091] The inventor found that in the prior art, the uncertainty assigned to an observation is usually either a fixed value or a value derived from the residuals during optimization. Neither accurately reflects the accuracy of the current measurement, so errors readily accumulate across observations; this makes the subsequent pose fusion unsatisfactory and significantly reduces the positioning accuracy of the drone. The present invention therefore redesigns the uncertainty of the observed value, which ...
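The redesigned uncertainty model itself is elided above, so it is not reproduced here. The toy sketch below only illustrates the problem described in [0091]. It assumes an inverse-variance weighted fusion (a common scheme, chosen for illustration rather than taken from the patent): with a fixed uncertainty every observation gets the same weight, so one poor measurement drags the fused result, whereas a per-measurement uncertainty down-weights it. The function name and all numbers are hypothetical.

```python
from typing import List, Tuple


def fuse_inverse_variance(estimates: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse scalar (value, variance) pairs by inverse-variance weighting.

    Each estimate is weighted by 1 / variance; the fused variance is
    1 / sum(1 / variance_k). All variances must be positive.
    """
    if not estimates or any(var <= 0 for _, var in estimates):
        raise ValueError("need at least one estimate, all with positive variance")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    fused = sum(w * x for w, (x, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical estimates of the same coordinate from three cameras;
# the third is a poor measurement.
values = [1.00, 1.02, 1.50]

# Fixed uncertainty: every observation gets the same variance.
fixed = fuse_inverse_variance([(x, 0.01) for x in values])

# Per-measurement uncertainty: the poor observation gets a larger variance.
adaptive = fuse_inverse_variance(list(zip(values, [0.01, 0.01, 0.25])))
```

With the fixed variance the fused value is the plain mean, about 1.173; giving the third estimate a variance of 0.25 pulls the fused value back to about 1.020. The same weighting logic carries over to full pose covariances, which is why an uncertainty that tracks the actual measurement quality matters for the fusion step.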

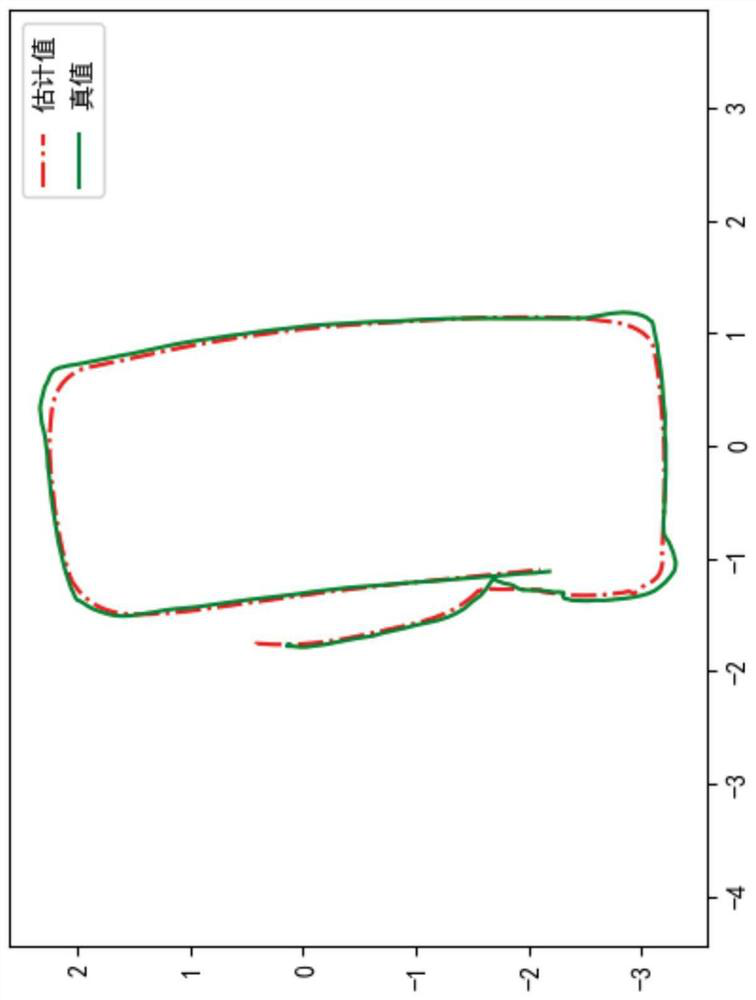

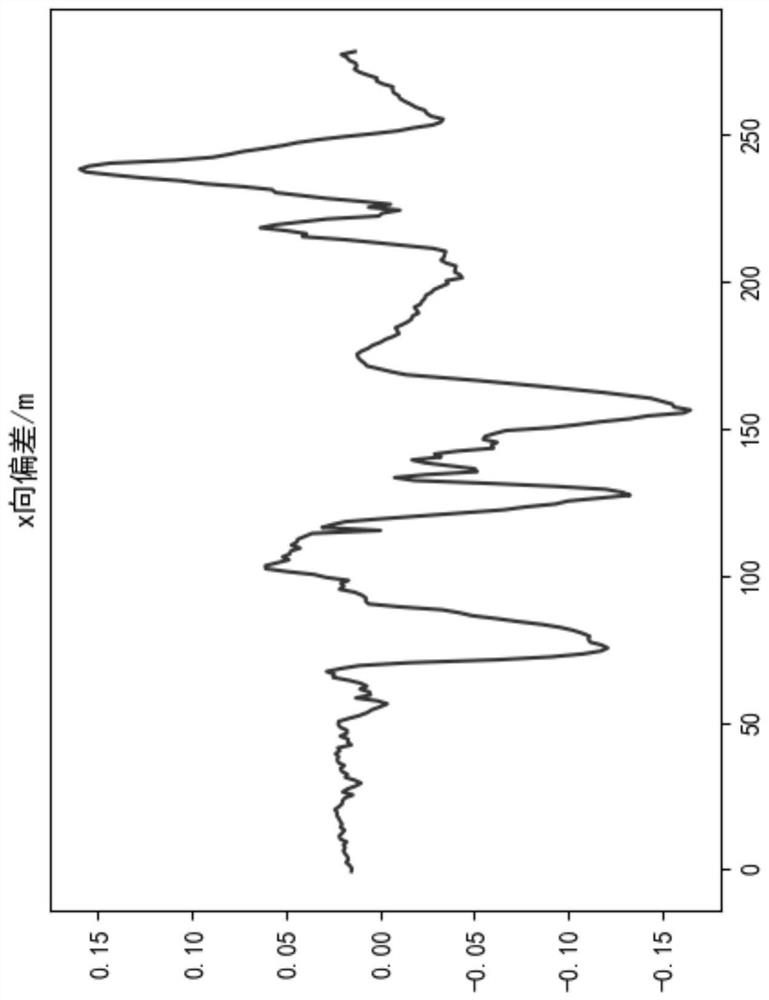

Embodiment 1

[0189] UAV type: quadrotor UAV;

[0190] Number of cameras: 5;

[0191] Camera installation position: cameras are installed around the drone at 60° intervals, and a camera is installed below the bottom bracket of the drone with its field of view pointing vertically downward;
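The layout in [0191] can be expressed as optical-axis directions in the drone's body frame. The helper below is hypothetical and rests on assumptions not stated in the source: a body frame with x forward and z up, side cameras looking horizontally outward, and, because five cameras are listed in total with one facing down, four side cameras spaced 60° apart in yaw. Only the 60° spacing and the vertically downward camera come from the text.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def camera_optical_axes(n_side: int, spacing_deg: float = 60.0) -> List[Vec3]:
    """Return unit optical-axis directions in an assumed body frame (x forward, z up).

    Side camera k is placed at yaw = k * spacing_deg and looks horizontally
    outward (assumption); one extra camera under the bottom bracket looks
    straight down along -z.
    """
    axes: List[Vec3] = []
    for k in range(n_side):
        yaw = math.radians(k * spacing_deg)
        axes.append((math.cos(yaw), math.sin(yaw), 0.0))
    axes.append((0.0, 0.0, -1.0))  # downward-facing camera
    return axes


# Usage: 4 side cameras (assumed) + 1 downward camera = 5 cameras in total.
axes = camera_optical_axes(n_side=4)
for idx, (x, y, z) in enumerate(axes):
    print(f"camera {idx}: ({x:+.3f}, {y:+.3f}, {z:+.3f})")
```

Adjacent side axes have a dot product of $\cos 60° = 0.5$, which is a quick check that the spacing matches the stated 60° interval.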
