Deep reinforcement learning training acceleration method for collision avoidance of multiple unmanned aerial vehicles

A deep reinforcement learning technique for collision avoidance, applied in the field of unmanned aerial vehicles (drones). It addresses the problems of tedious procedures and a low degree of automation, and achieves an accelerated training process, a good control strategy, and a simple principle.

Detailed Description of the Embodiments

[0049] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

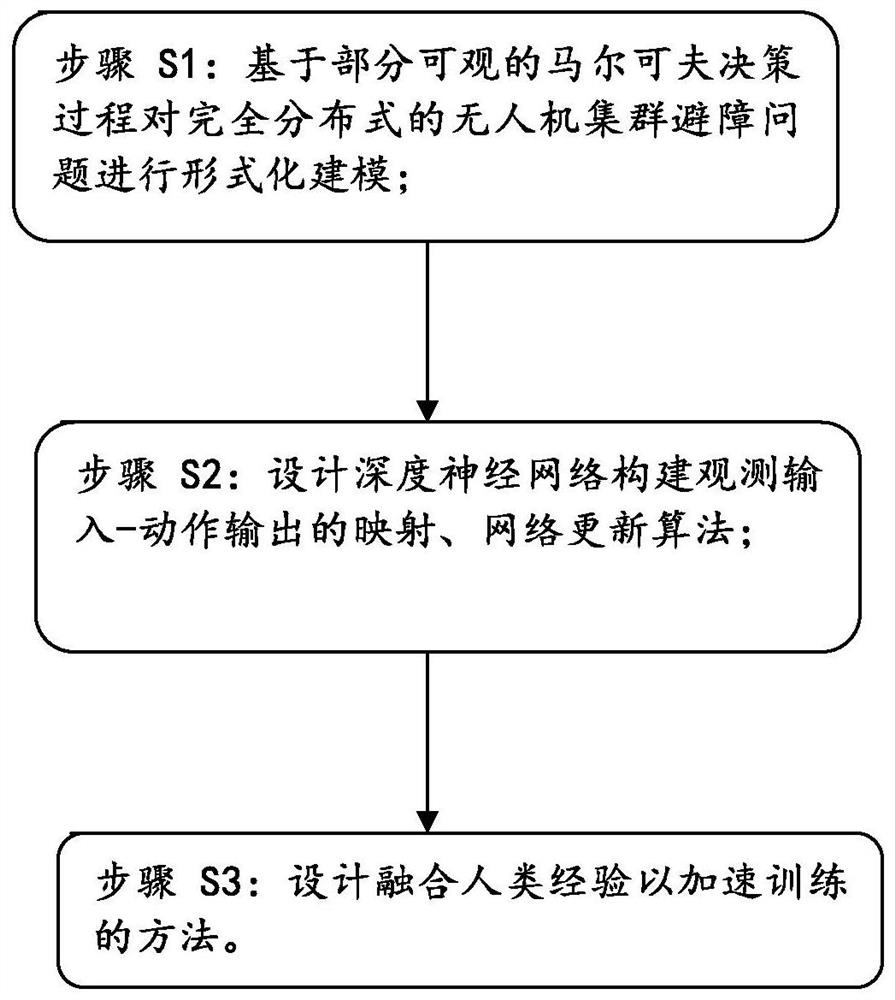

[0050] As shown in Figure 1 and Figure 2, the deep reinforcement learning training acceleration method for multi-UAV collision avoidance of the present invention is a deep reinforcement learning method assisted by human experience, which includes:

[0051] Step S1: Formally model the fully distributed UAV swarm obstacle avoidance problem based on a partially observable Markov decision process;

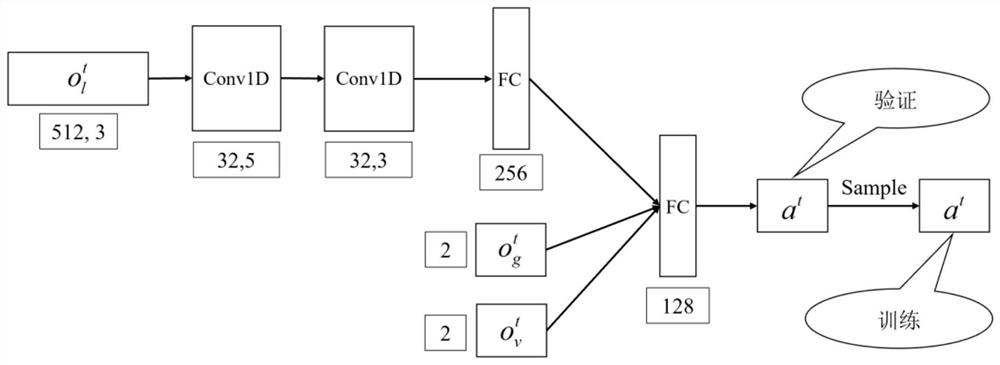

[0052] Step S2: Design a deep neural network that maps observation inputs to action outputs, together with a network update algorithm;

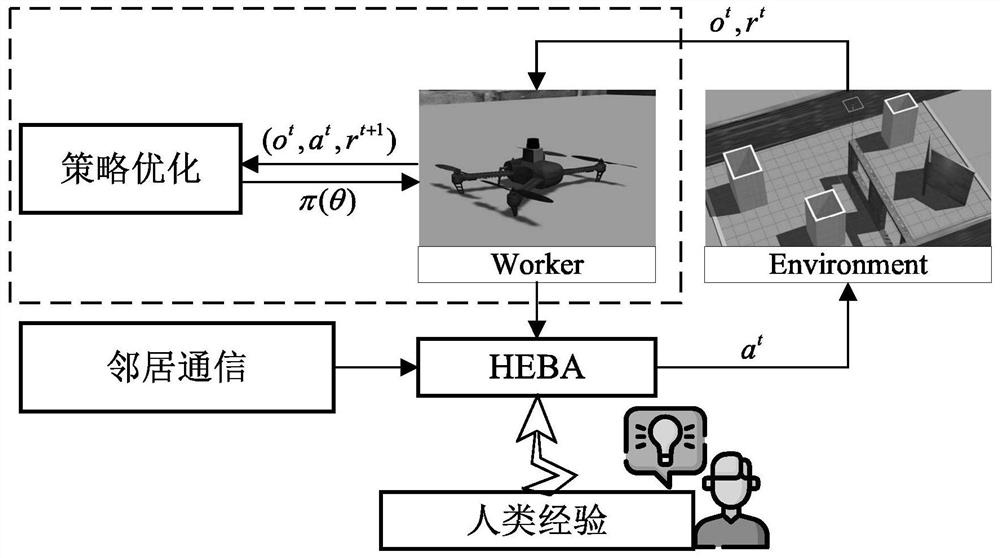

[0053] Step S3: Design methods to incorporate human experience to accelerate training.

[0054] In a specific application example, in step S1, the formal modeling process includes:

[0055] The problem of cooperative obstacle avoidance while multiple UAVs travel to their target locations...
