38 results about "Partially observable Markov decision process" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

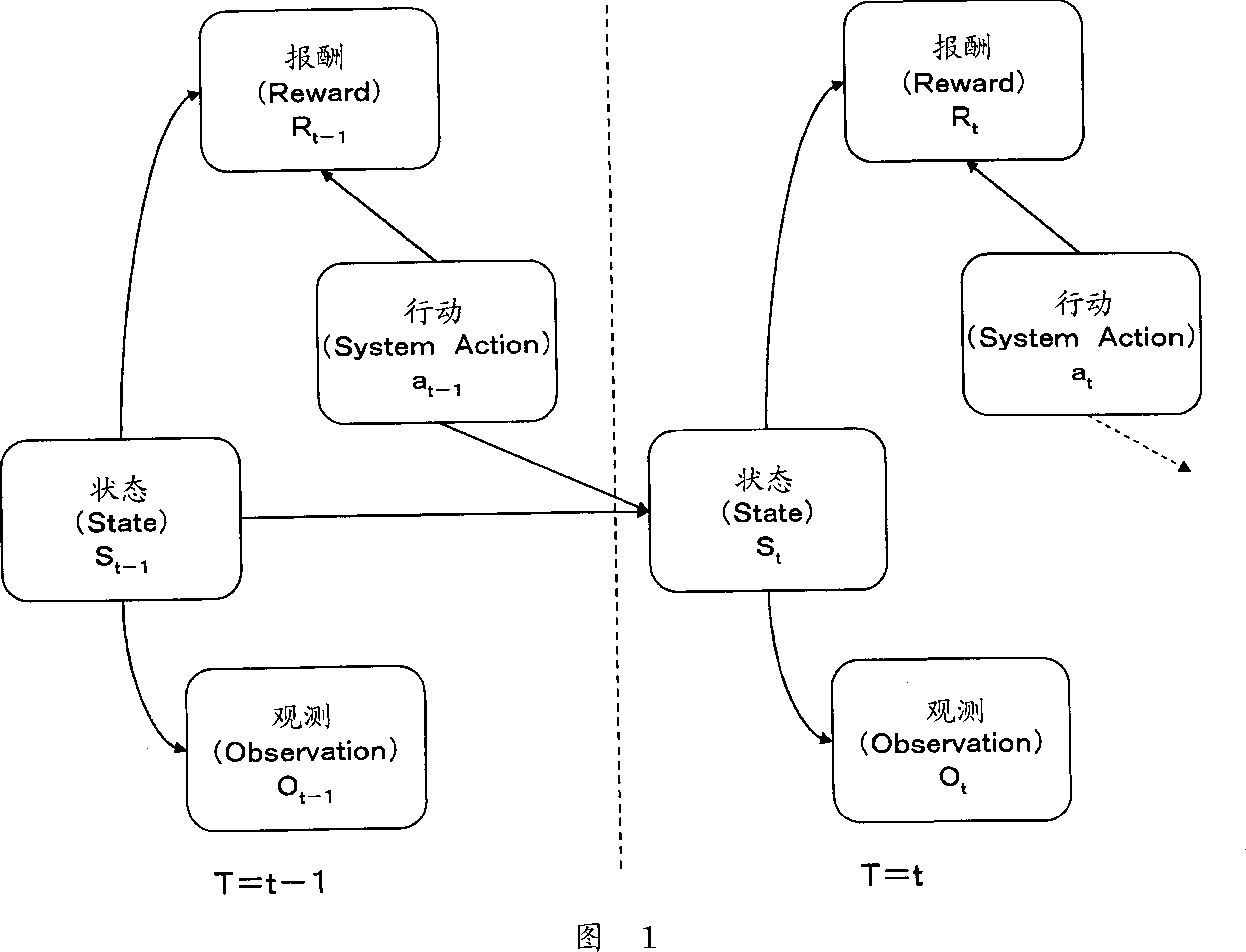

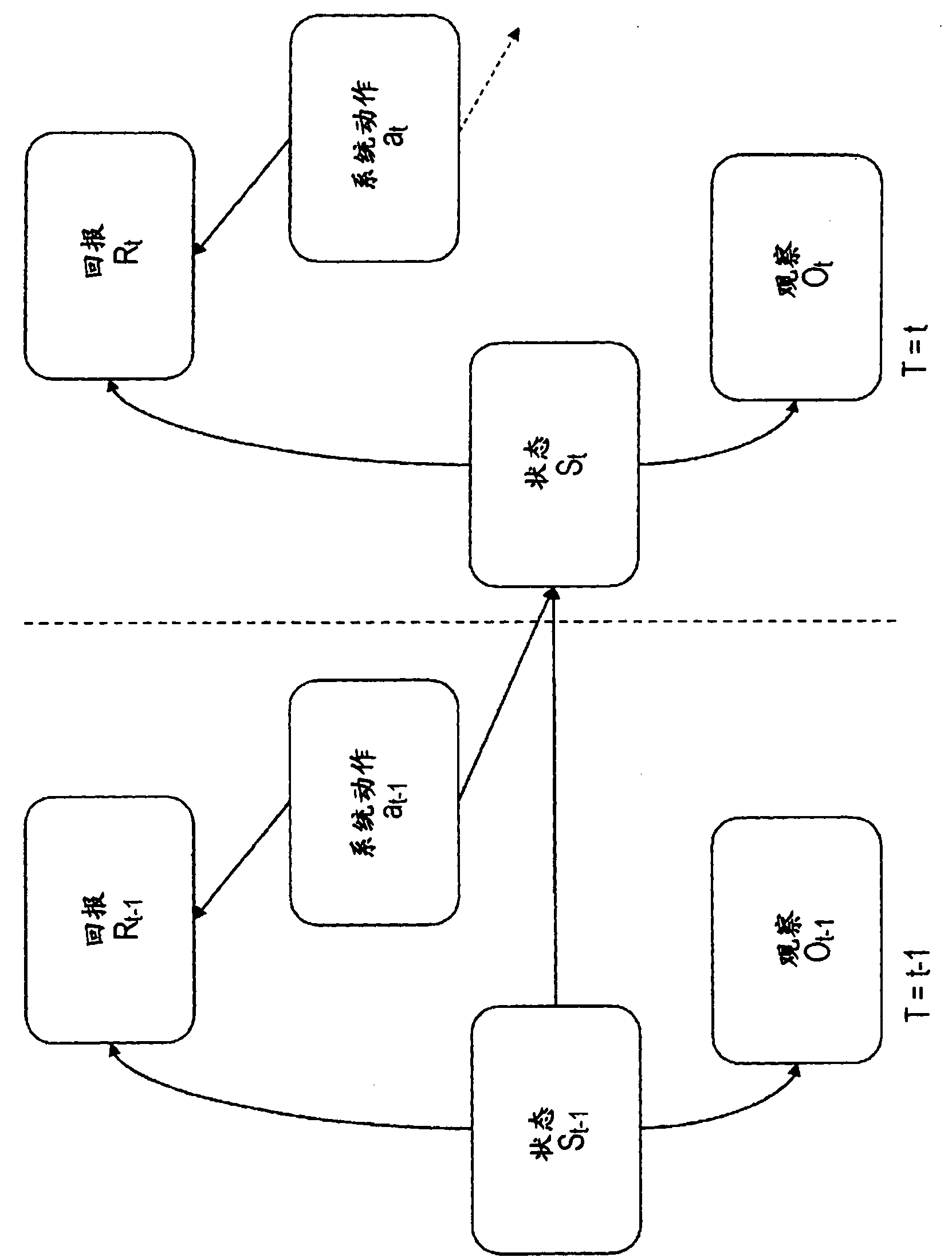

A partially observable Markov decision process (POMDP) is a generalization of a Markov decision process (MDP). A POMDP models an agent decision process in which it is assumed that the system dynamics are determined by an MDP, but the agent cannot directly observe the underlying state. Instead, it must maintain a probability distribution over the set of possible states, based on a set of observations and observation probabilities, and the underlying MDP.
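
The belief maintenance described above is a Bayes filter: b'(s') is proportional to O(o | s', a) · Σ_s T(s' | s, a) b(s). The following is a minimal sketch for finite state, action and observation sets. The nested-dictionary layouts `T[s][a][s2]` and `O[s2][a][o]` and the function name `update_belief` are illustrative assumptions, not a particular library's API. The usage lines model only the listening action of the classic "tiger" problem.

```python
from typing import Dict, Hashable

# Assumed (hypothetical) table layouts:
#   T[s][a][s2] = P(s2 | s, a)   transition model
#   O[s2][a][o] = P(o | s2, a)   observation model
Belief = Dict[Hashable, float]


def update_belief(belief: Belief, action, observation, T, O) -> Belief:
    """One Bayes-filter step: b'(s2) is proportional to O(o|s2,a) * sum_s T(s2|s,a) b(s)."""
    unnormalized = {}
    for s2 in belief:  # the belief's keys are taken to be the full state set
        predicted = sum(p * T[s][action].get(s2, 0.0) for s, p in belief.items())
        unnormalized[s2] = O[s2][action].get(observation, 0.0) * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s: p / total for s, p in unnormalized.items()}


# Usage: listening in the tiger problem is correct 85% of the time.
T = {s: {"listen": {s: 1.0}} for s in ("tiger-left", "tiger-right")}
O = {
    "tiger-left": {"listen": {"hear-left": 0.85, "hear-right": 0.15}},
    "tiger-right": {"listen": {"hear-left": 0.15, "hear-right": 0.85}},
}
b0 = {"tiger-left": 0.5, "tiger-right": 0.5}
b1 = update_belief(b0, "listen", "hear-left", T, O)  # about 0.85 / 0.15
b2 = update_belief(b1, "listen", "hear-left", T, O)  # about 0.97 / 0.03
```

Each consistent observation sharpens the belief, but the agent never observes the state directly.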

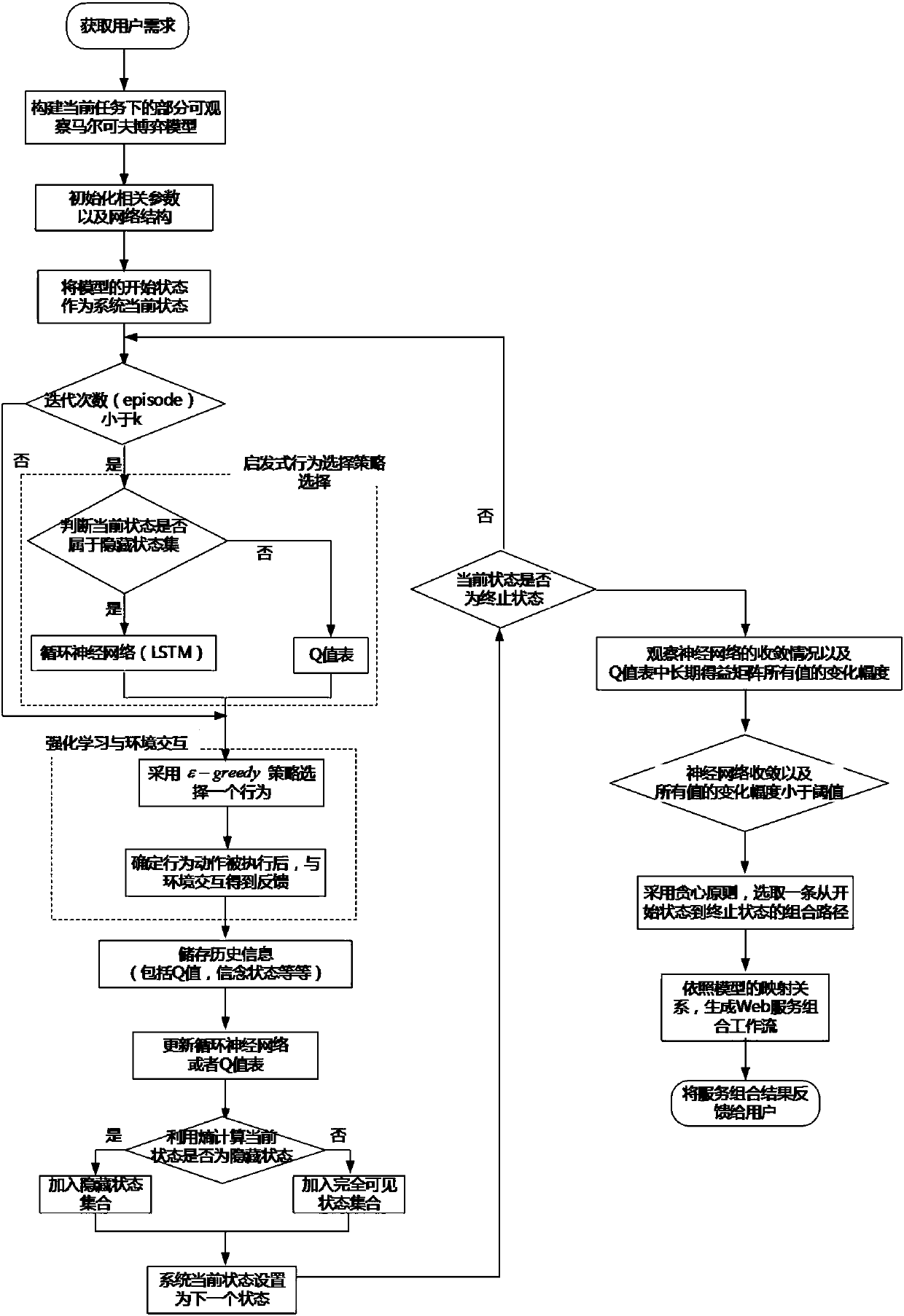

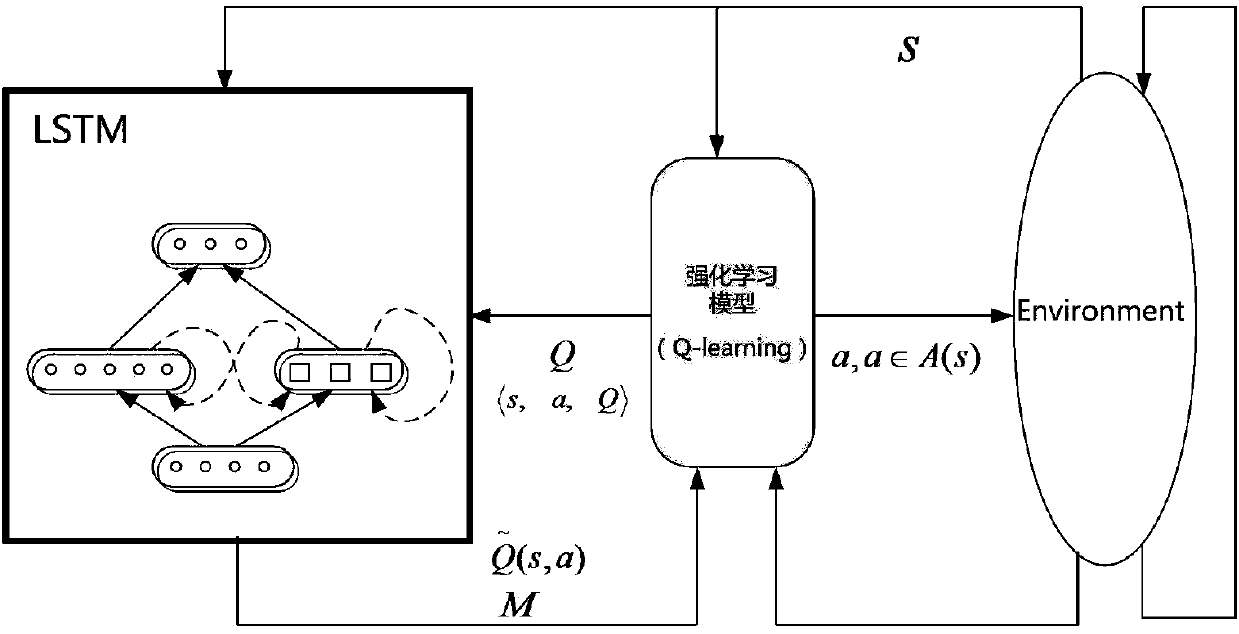

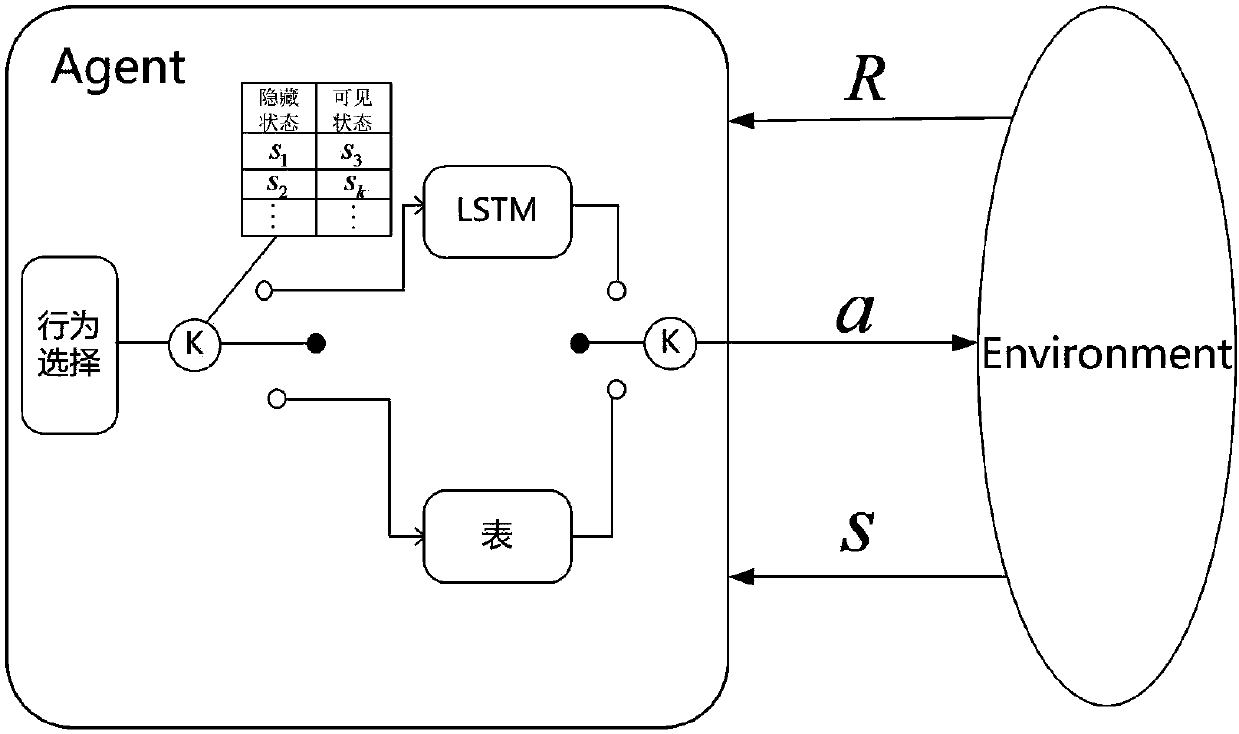

Web service composition method based on deep reinforcement learning

Status: Active | CN107241213A | Tags: Solving Partial Observability; Accurately combine results; Data switching networks; Neural learning methods; Service composition; Curse of dimensionality

The invention discloses a web service composition method based on deep reinforcement learning. It addresses the long running time, poor flexibility and unsatisfactory composition results of traditional service composition methods in large-scale service scenarios. Deep reinforcement learning and heuristic ideas are applied to the service composition problem. In addition, to account for the partial observability of real environments, the service composition process is modeled as a partially observable Markov decision process (POMDP). The POMDP is solved with a recurrent neural network, so the method remains efficient when facing the curse of dimensionality. The method effectively improves solution speed. It adapts automatically to dynamic service composition environments while preserving the quality of the composition scheme, and it improves the efficiency, adaptability and flexibility of service composition in large-scale dynamic scenarios.

Owner:SOUTHEAST UNIV

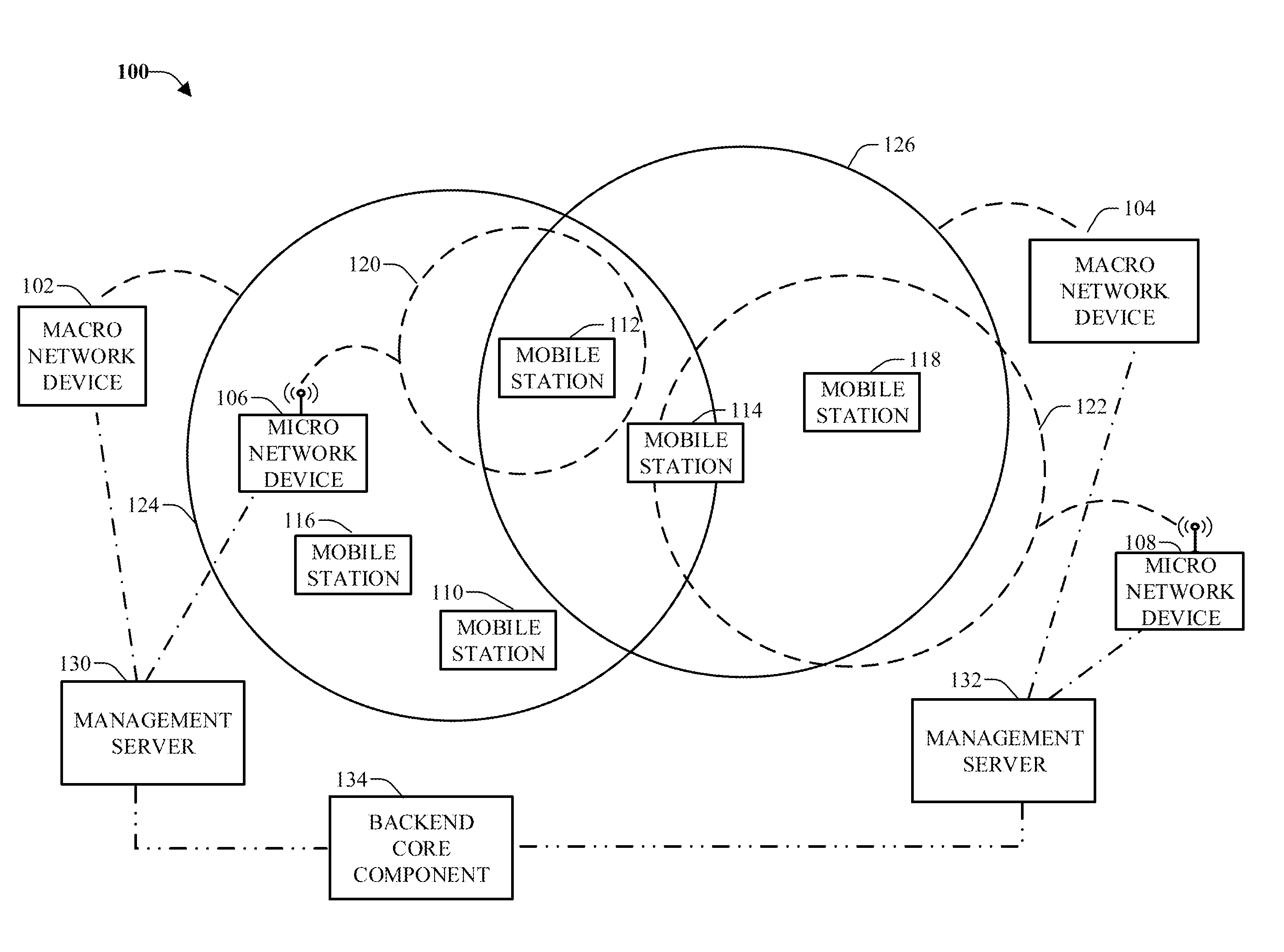

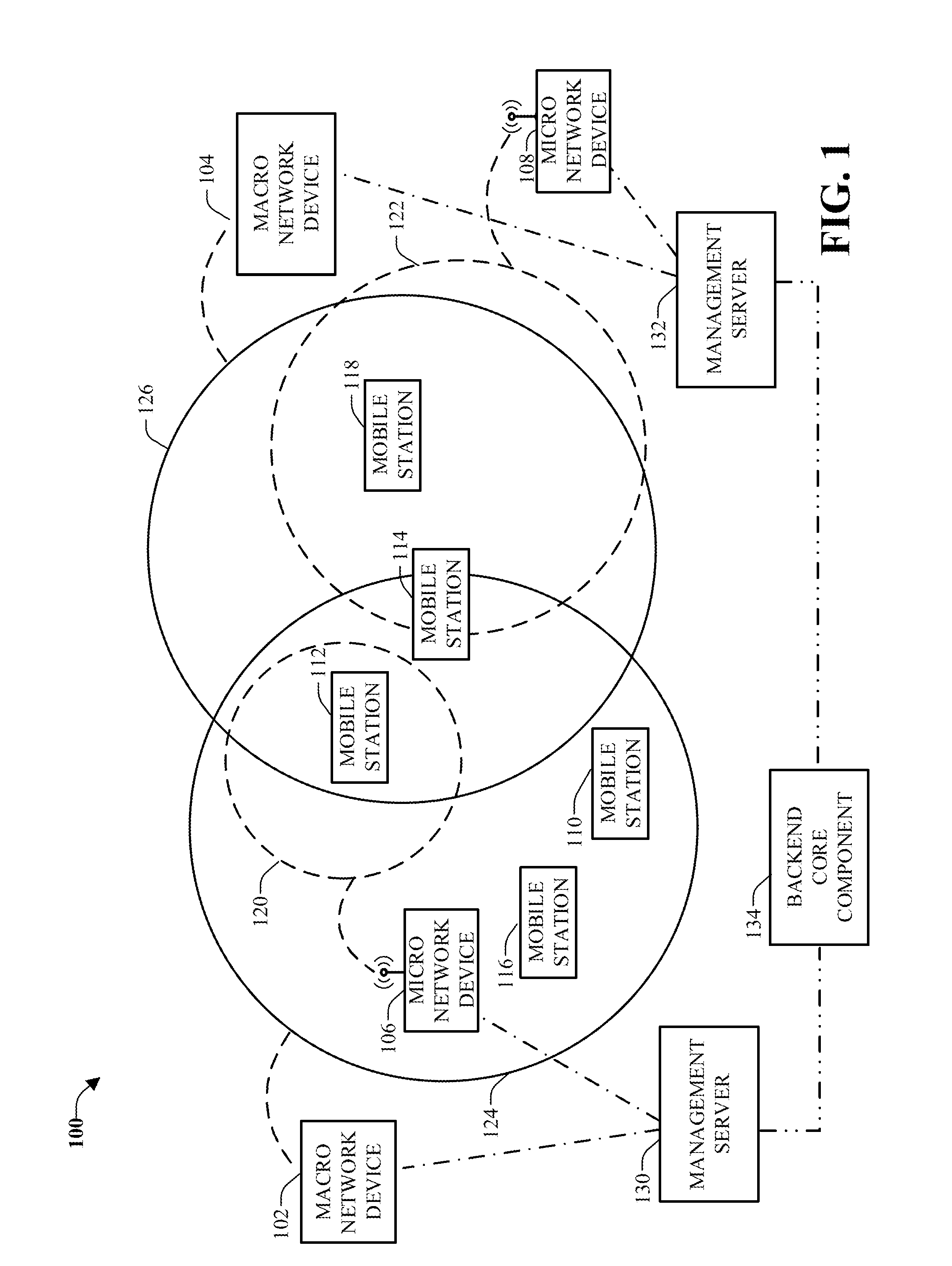

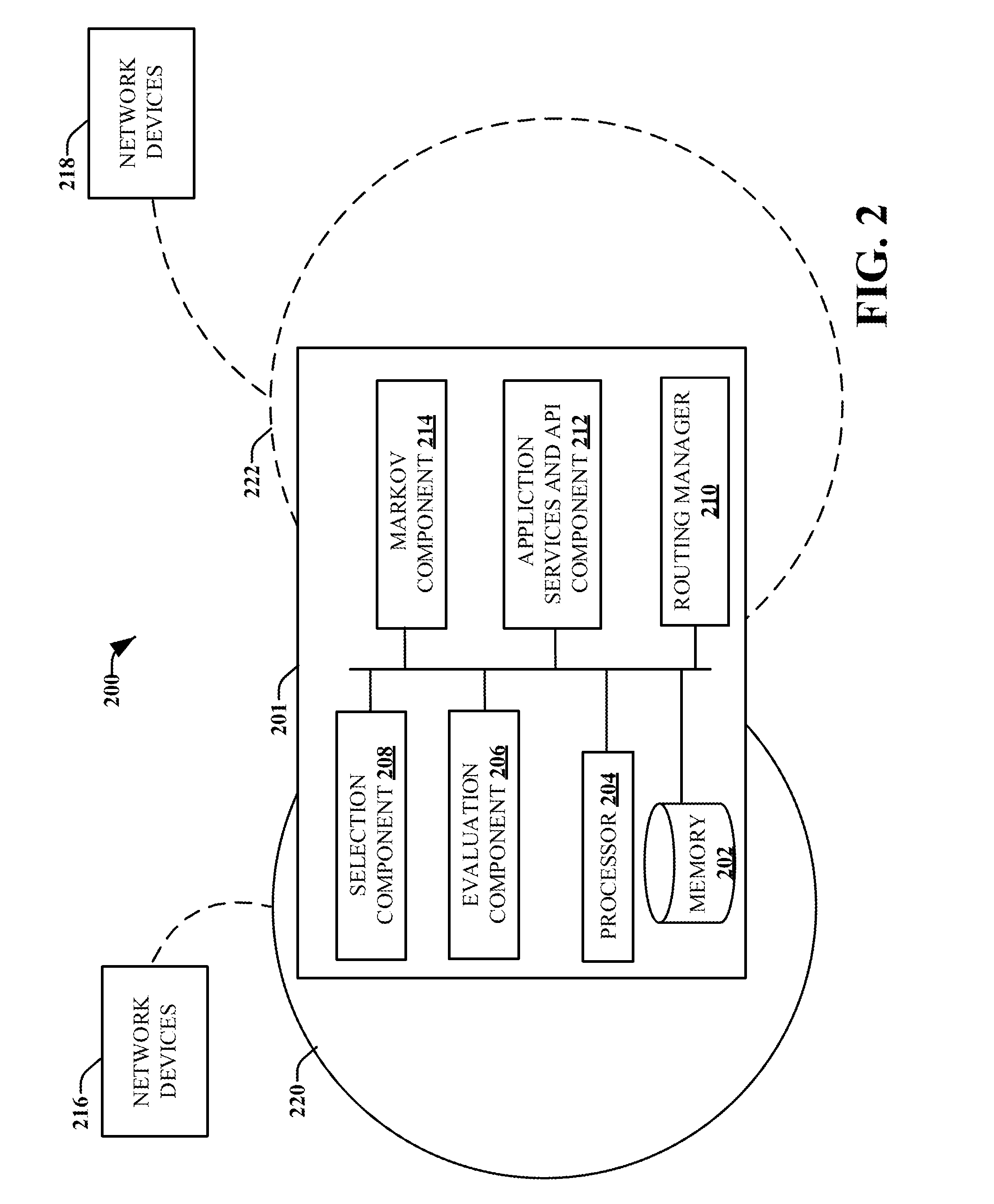

Cell selection or handover in wireless networks

Status: Active | US20150126193A1 | Tags: Easy to implement; Execute; Wireless communication; System capacity; Wireless mesh network

Network device selection or handover schemes based on partially observable Markov decision processes enable higher network capacity. Cell loading information that is unavailable from non-serving base stations is observed and/or predicted. Actions are then taken to maintain an active base station set, or candidate network device data, used to route a mobile device's communications during cell selection or handover. The selection considers a reward function based on parameters including system capacity, number of handovers, and mobility of the mobile device or mobile station.

Owner:TAIWAN SEMICON MFG CO LTD

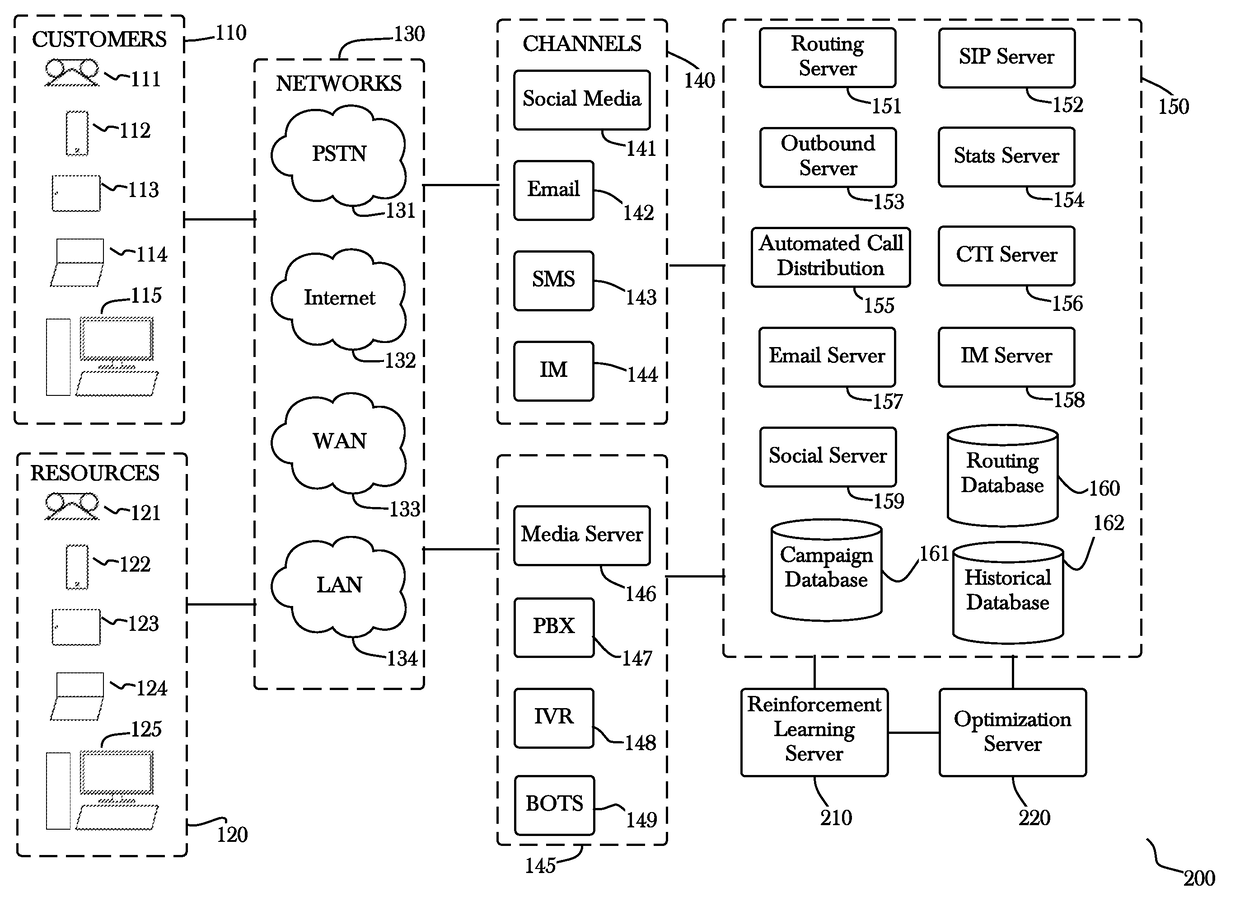

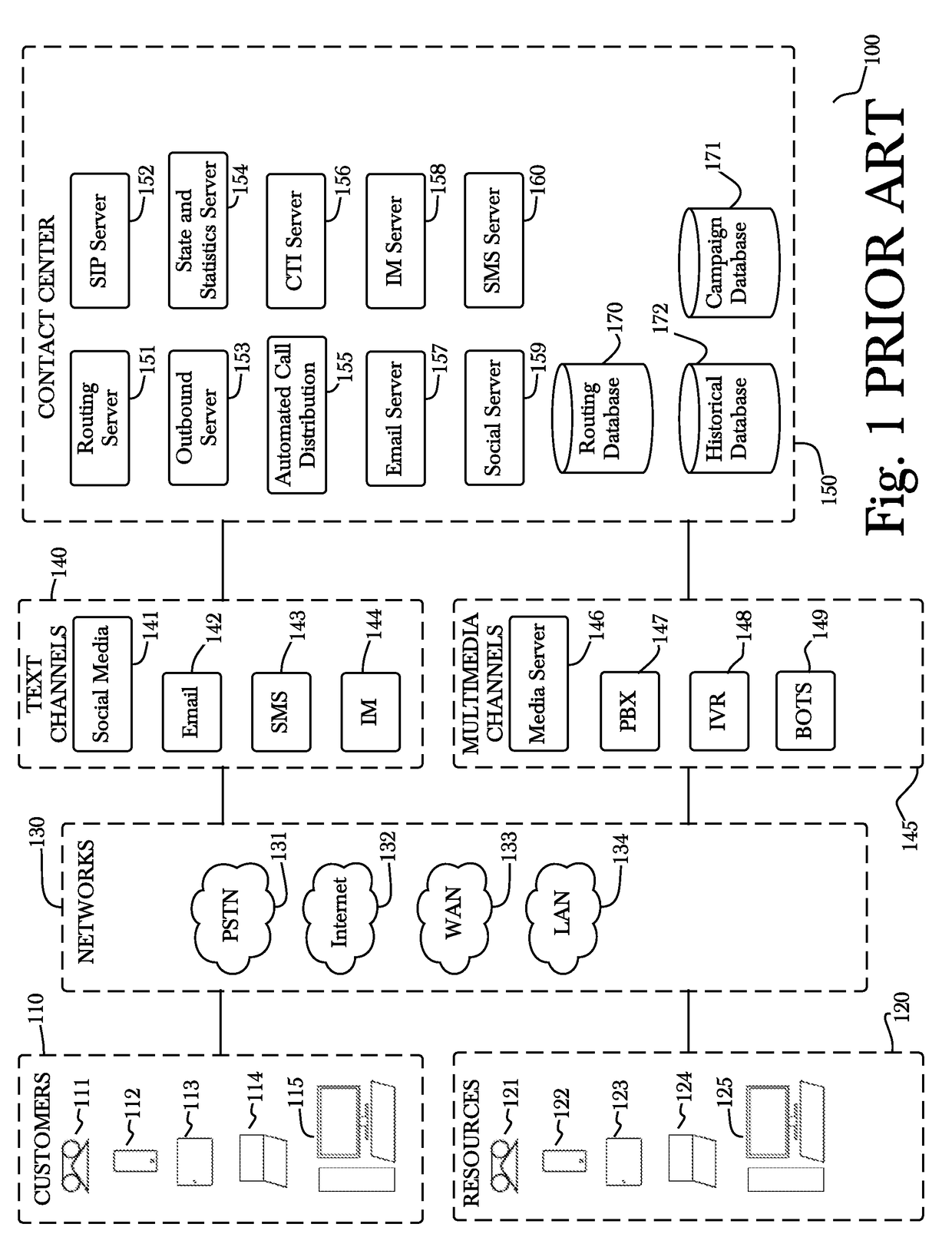

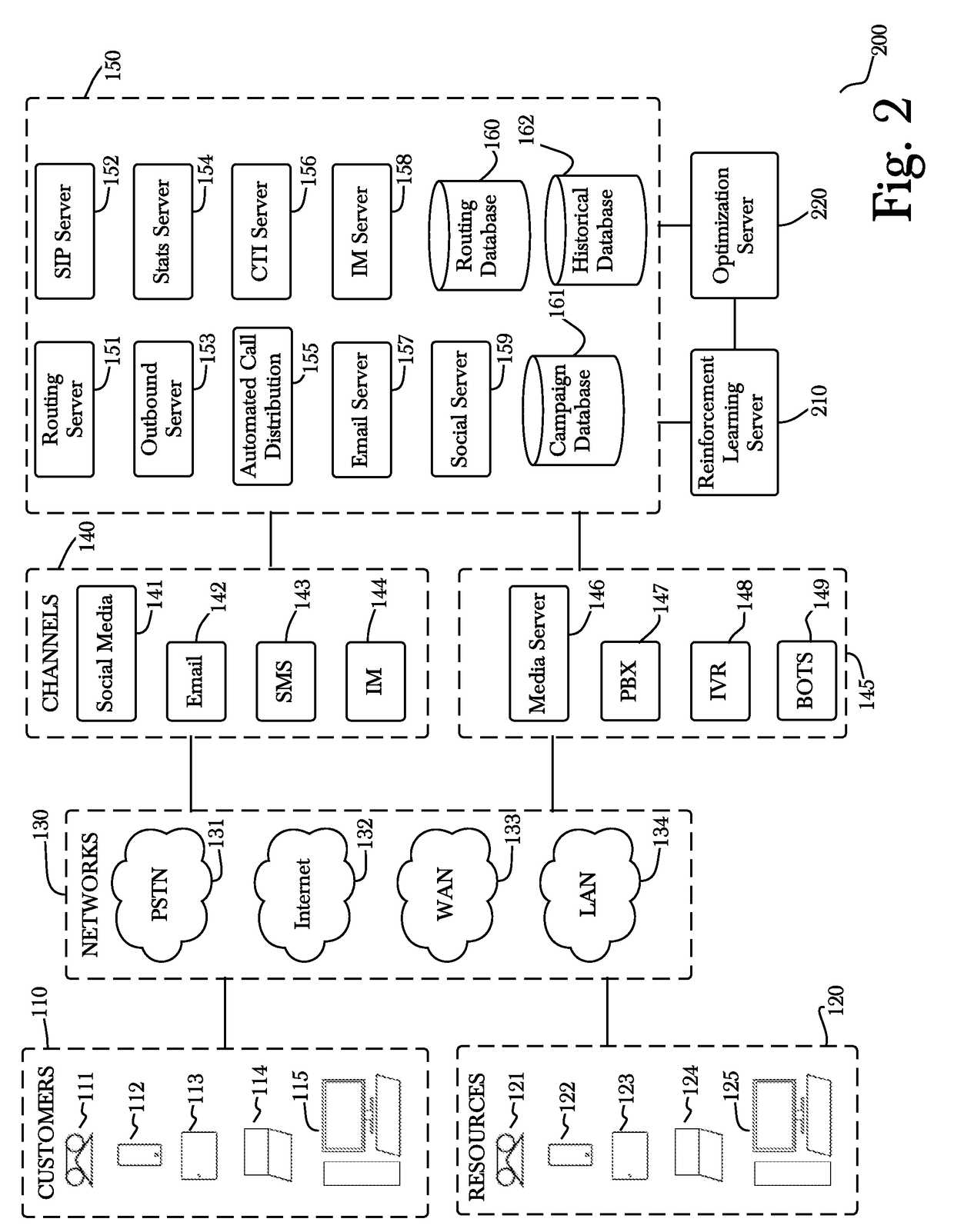

System and method for optimizing communications using reinforcement learning

Status: Inactive | US20180082210A1 | Tags: Special service for subscribers; Probabilistic networks; Markov chain; Contact center

A system and method for automatically optimizing the states of communications and operations in a contact center. A reinforcement learning module, comprising a reinforcement learning server and an optimization server, is added to the contact center's existing infrastructure. The module sets up a model as a partially observable Markov chain, uses the Baum-Welch algorithm to infer its parameters, and adds rewards to form a partially observable Markov decision process. Solving this process yields an optimal action policy for each state of the contact center, which optimizes the states of communications and operations for the overall return.

Owner:NEW VOICE MEDIA LIMITED
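
Baum-Welch is an expectation-maximization procedure. It re-estimates a hidden Markov chain's parameters from forward and backward probabilities. The following is a minimal sketch of only the scaled forward pass it builds on; the backward pass and the re-estimation step are omitted. The two hidden states ("satisfied", "frustrated"), the two observation symbols ("call resolved", "call escalated") and all numbers are hypothetical illustrations, not values from the patent.

```python
import math


def forward(pi, A, B, observations):
    """Scaled forward pass of a discrete hidden Markov model.

    pi[i]:   initial probability of hidden state i
    A[i][j]: probability of moving from hidden state i to j
    B[i][k]: probability of emitting observation symbol k in state i
    Returns the filtered distributions P(state_t | obs_0..t) and the log-likelihood.
    """
    n = len(pi)
    filtered, log_likelihood = [], 0.0
    alpha = [pi[i] * B[i][observations[0]] for i in range(n)]
    for t, obs in enumerate(observations):
        if t > 0:
            # Predict through A, then weight by the emission probability.
            alpha = [B[j][obs] * sum(alpha[i] * A[i][j] for i in range(n))
                     for j in range(n)]
        scale = sum(alpha)  # P(obs_t | obs_0..t-1)
        alpha = [a / scale for a in alpha]
        log_likelihood += math.log(scale)
        filtered.append(alpha)
    return filtered, log_likelihood


# Hypothetical contact-center chain: state 0 = satisfied, 1 = frustrated;
# symbol 0 = call resolved, 1 = call escalated.
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
obs = [0, 1, 0]
filtered, loglik = forward(pi, A, B, obs)
```

Normalizing at every step avoids numerical underflow on long call histories. The product of the scale factors equals the sequence likelihood.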

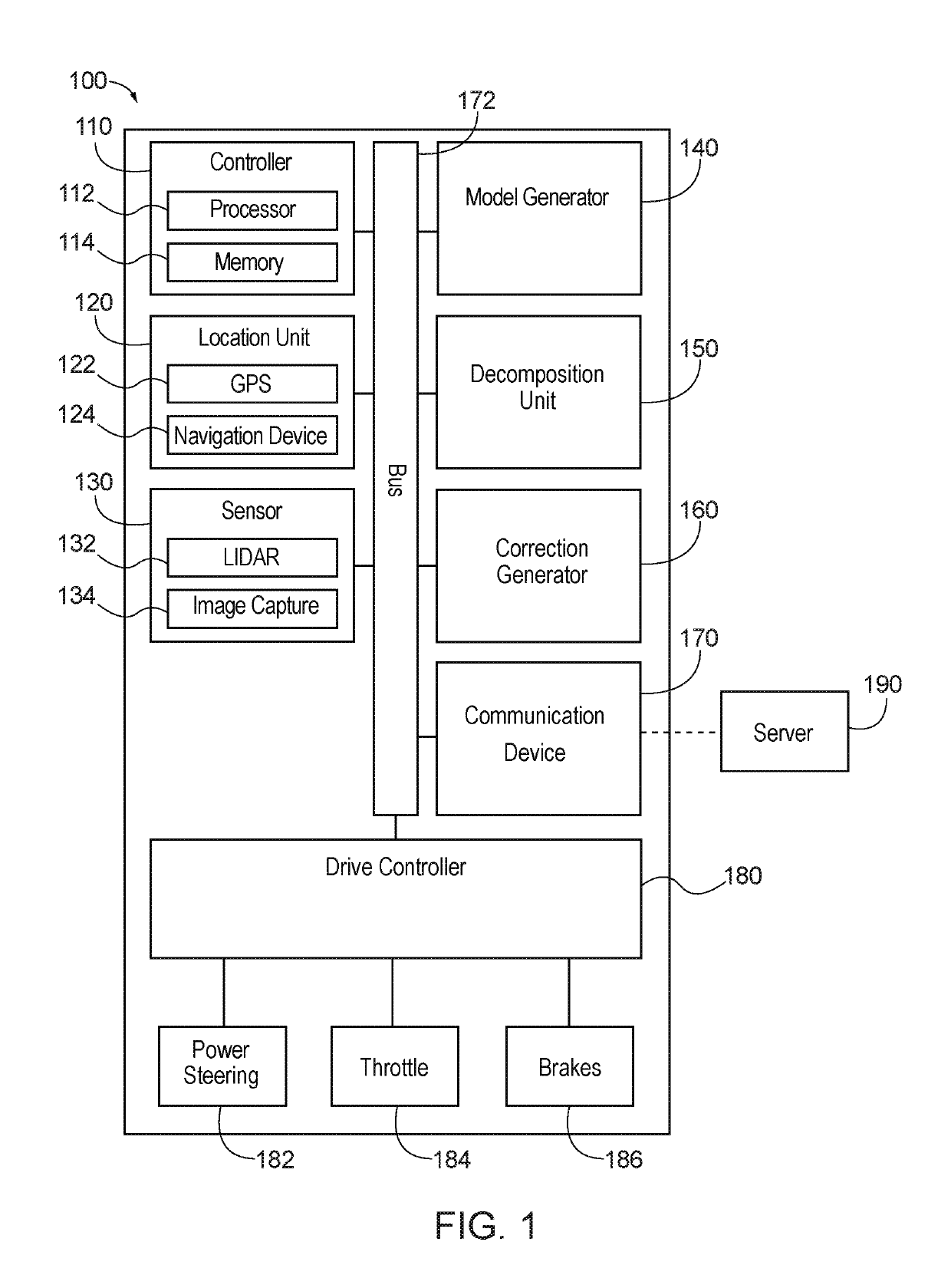

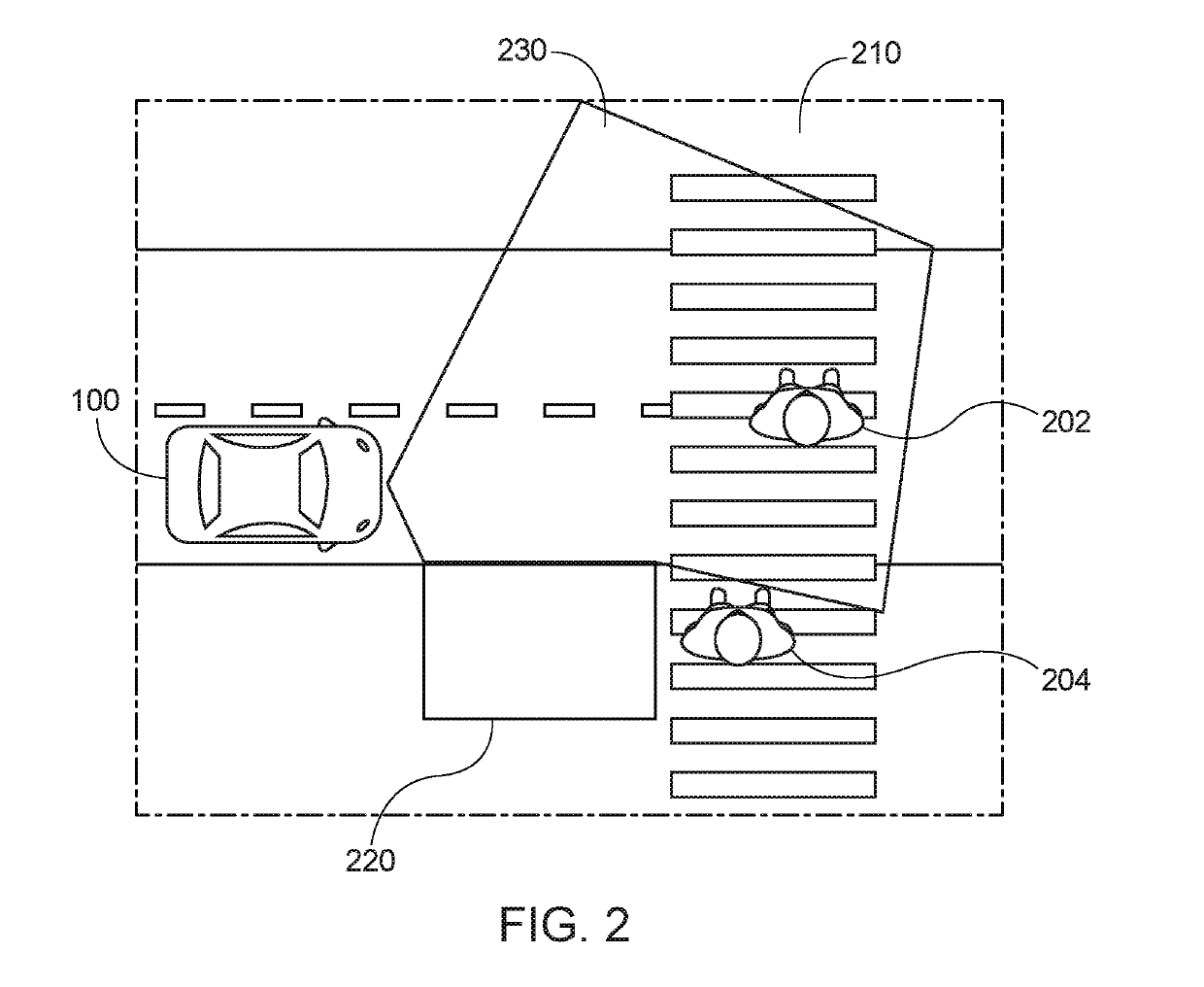

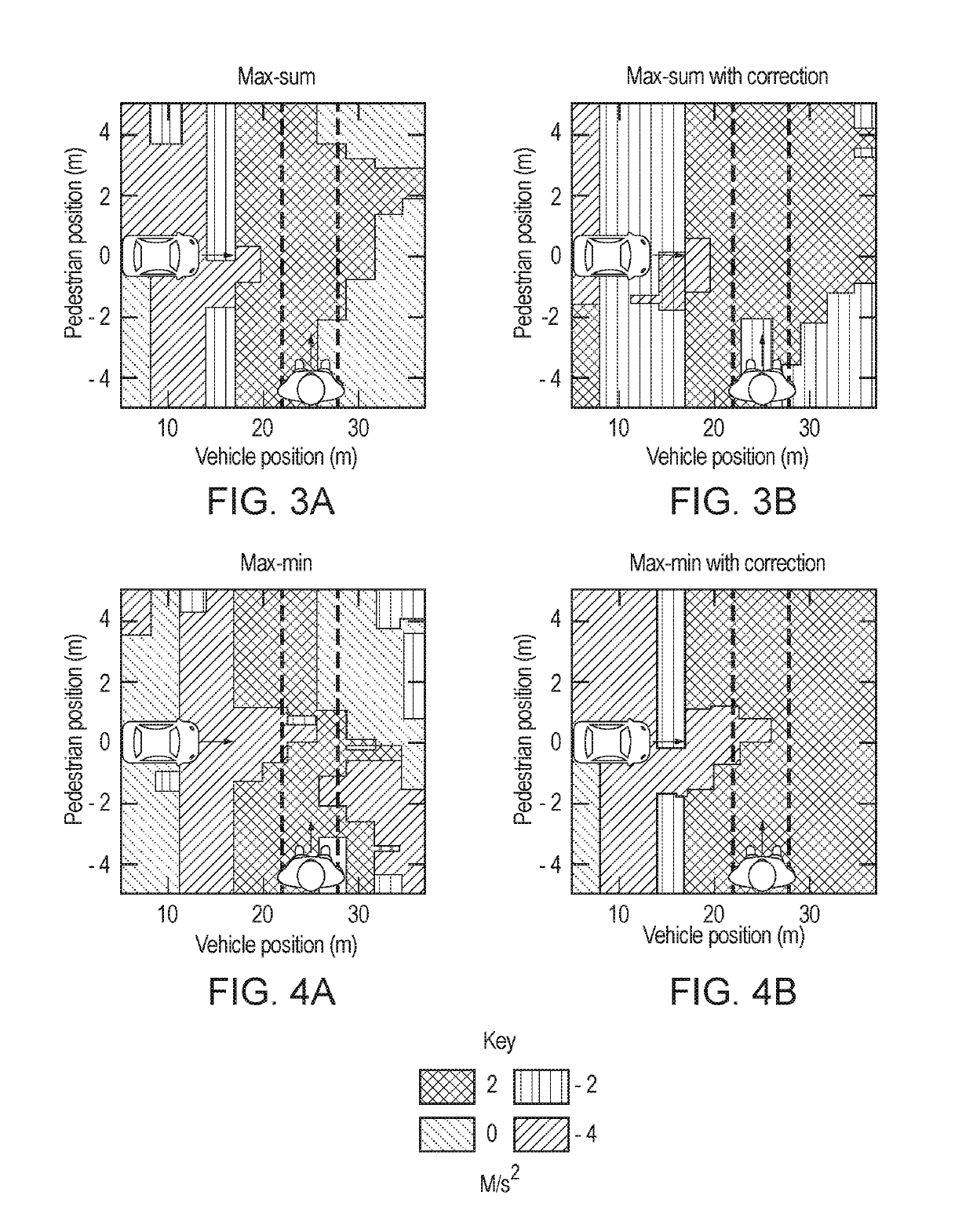

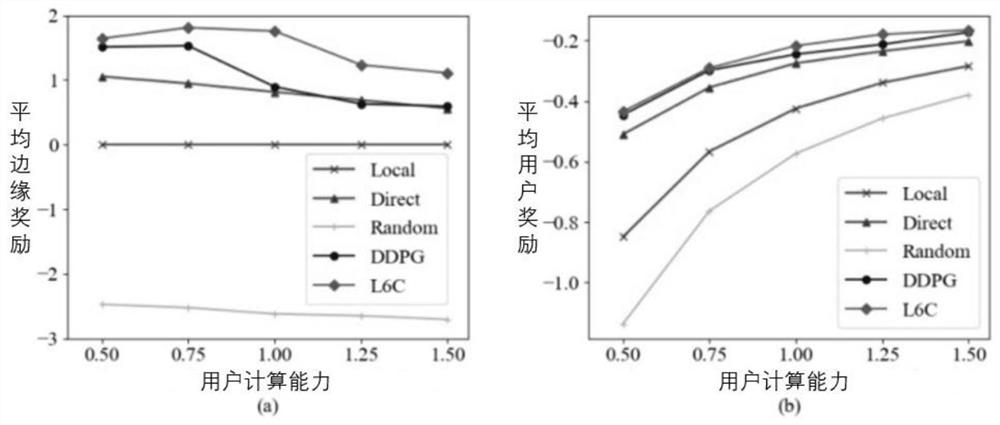

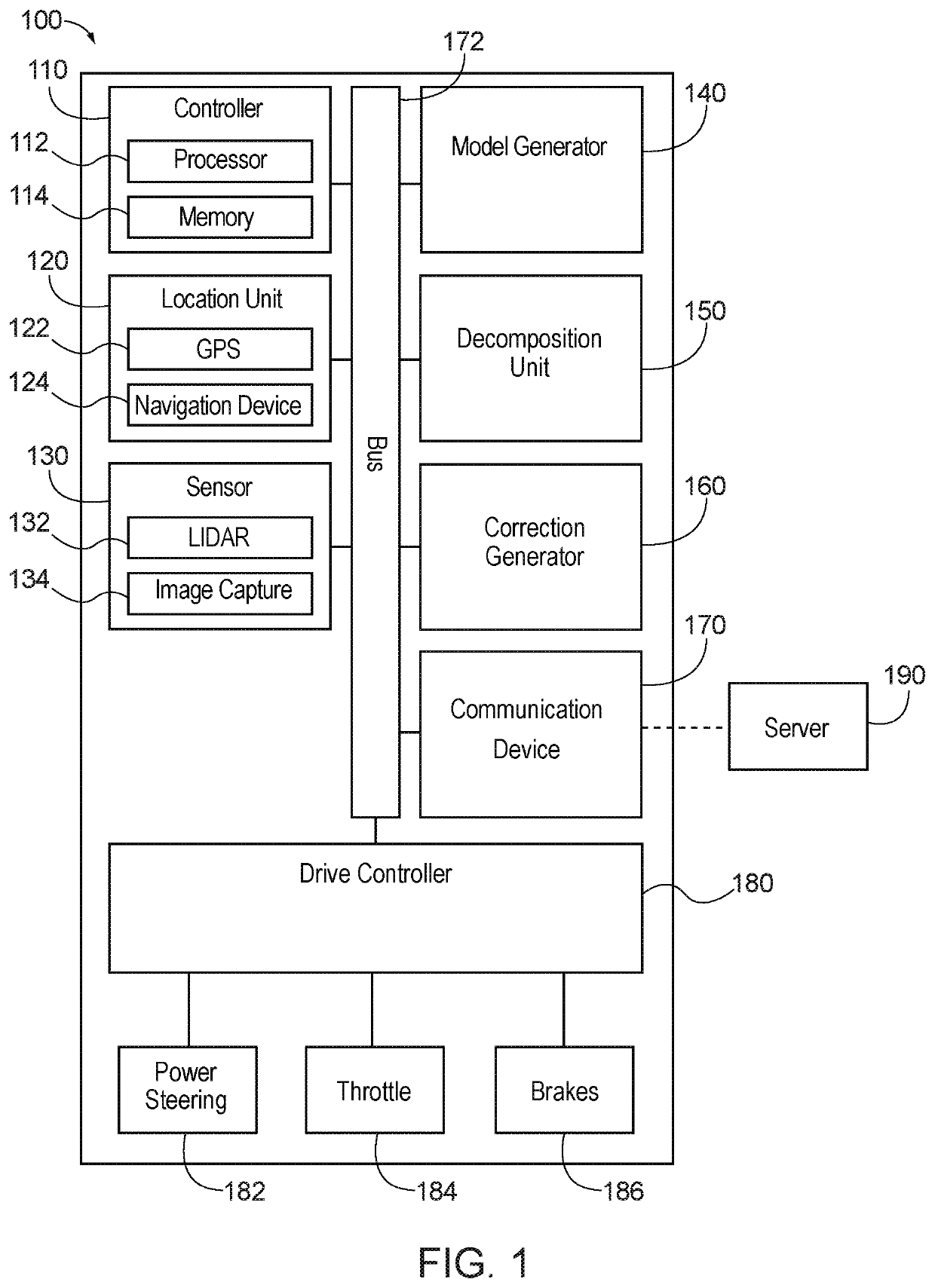

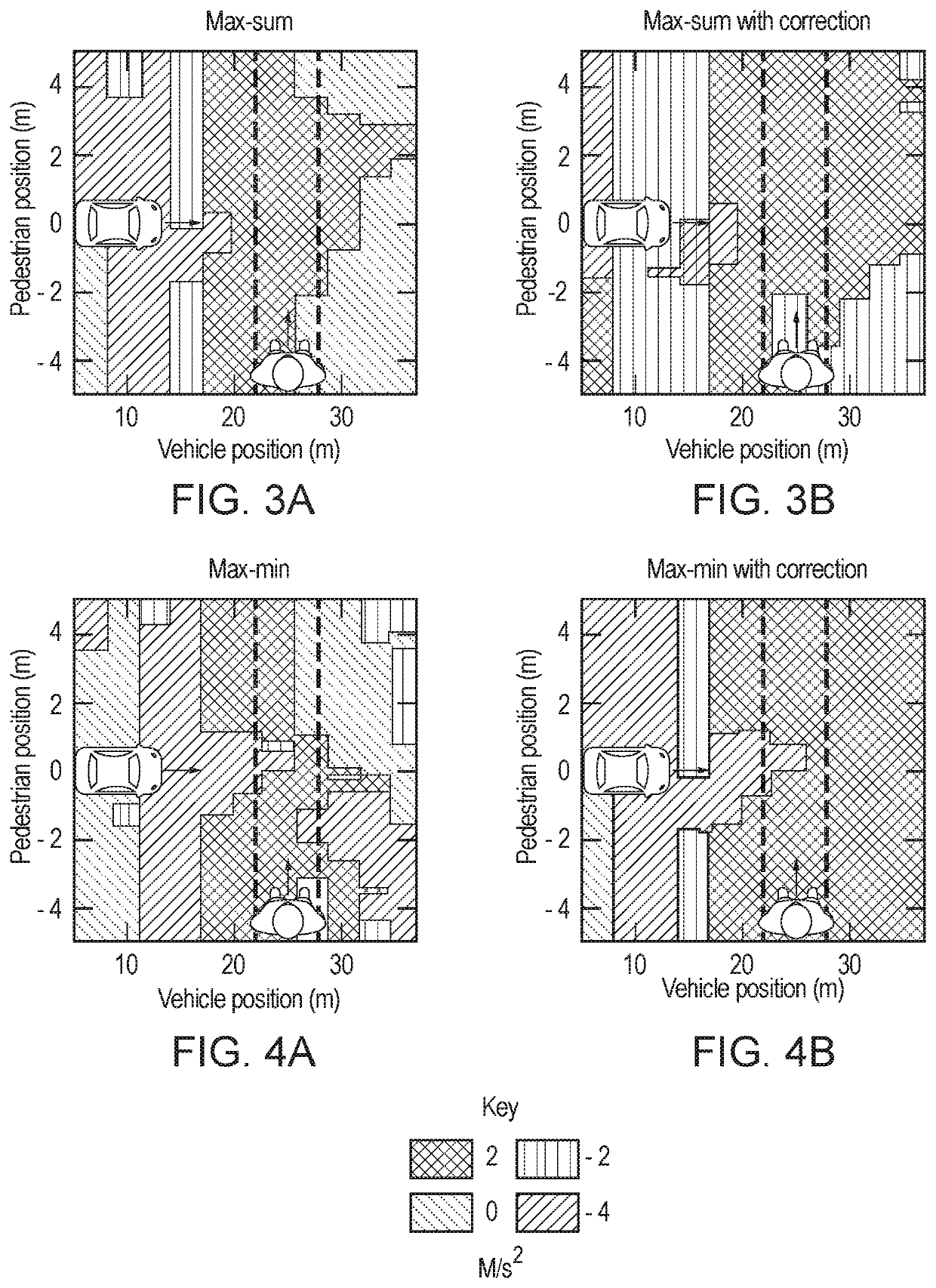

Utility decomposition with deep corrections

One or more aspects of utility decomposition with deep corrections are described herein. An entity may be detected within an environment through which an autonomous vehicle is travelling. The entity may be associated with a current velocity and a current position. The autonomous vehicle may be associated with a current position and a current velocity. Additionally, the autonomous vehicle may have a target position or desired destination. A Partially Observable Markov Decision Process (POMDP) model may be built based on the current velocities and current positions of different entities and the autonomous vehicle. Utility decomposition may be performed to break tasks or problems down into sub-tasks or sub-problems. A correction term may be generated using multi-fidelity modeling. A driving parameter may be implemented for a component of the autonomous vehicle based on the POMDP model and the correction term to operate the autonomous vehicle autonomously.

Owner:HONDA MOTOR CO LTD
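
Utility decomposition can be illustrated as follows. Each entity's sub-problem is solved on its own, the per-entity utilities are fused, and a correction term is added. This is a minimal sketch under stated assumptions. The fusion rule (minimum over entities), the additive correction and every number are hypothetical; the abstract does not specify them. Here the per-entity utilities are a plain lookup table rather than solved POMDP policies.

```python
def fused_q(entities, actions, q_single, correction):
    """Approximate Q(a) for a scene containing several entities.

    q_single(entity, action): utility of `action` when only `entity` is present
        (the decomposed sub-problem; here a plain lookup).
    correction(action): additive correction standing in for a learned model.
    Fusing with the minimum lets the most threatening entity dominate.
    """
    return {a: min(q_single(e, a) for e in entities) + correction(a)
            for a in actions}


def best_action(values):
    return max(values, key=values.get)


# Hypothetical per-entity utilities for three longitudinal actions.
Q_TABLE = {
    ("pedestrian", "accelerate"): -10.0, ("pedestrian", "maintain"): -2.0,
    ("pedestrian", "brake"): -1.0,
    ("car", "accelerate"): 1.0, ("car", "maintain"): 0.5, ("car", "brake"): -0.5,
}
ACTIONS = ["accelerate", "maintain", "brake"]
ENTITIES = ["pedestrian", "car"]


def lookup(entity, action):
    return Q_TABLE[(entity, action)]


no_correction = fused_q(ENTITIES, ACTIONS, lookup, lambda a: 0.0)
corrected = fused_q(ENTITIES, ACTIONS, lookup,
                    lambda a: {"maintain": 1.5}.get(a, 0.0))
```

Without the correction the fused values favour braking. A correction that captures interactions the decomposition misses can change the chosen action, which is the role the correction term plays in the abstract.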

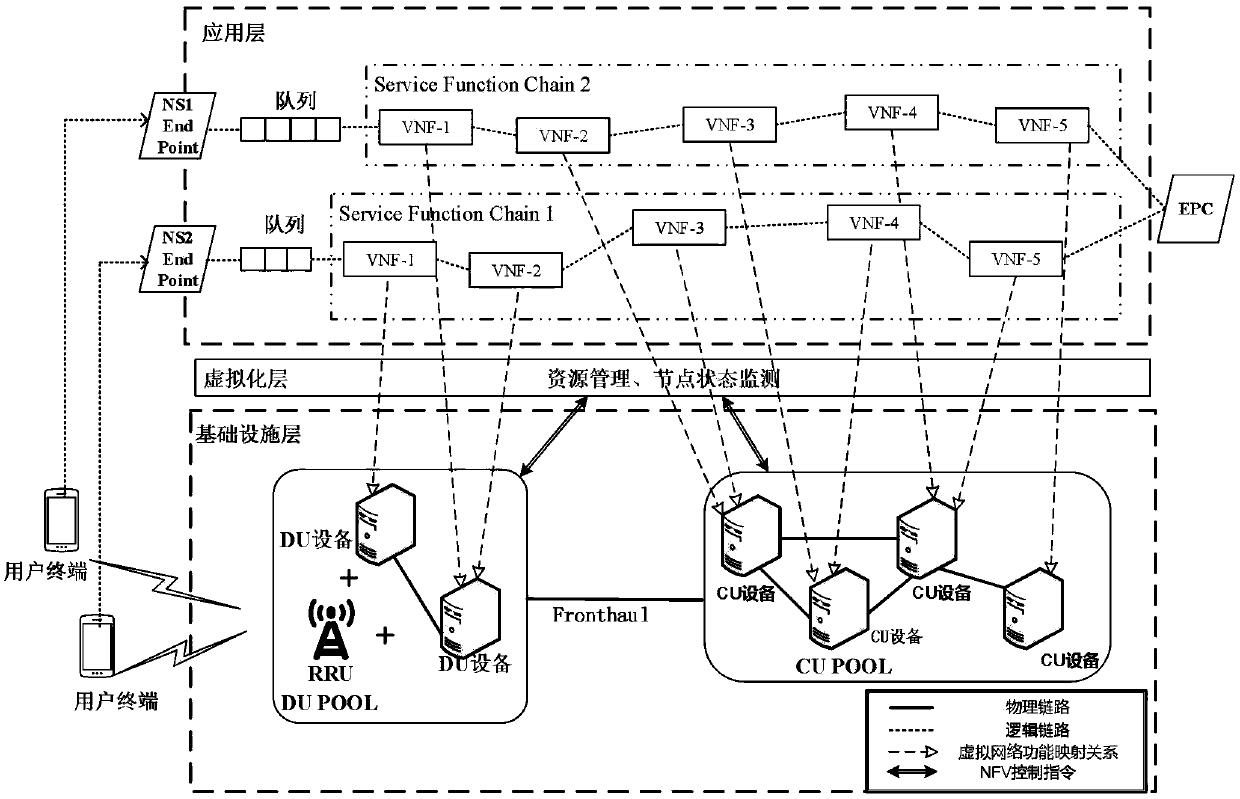

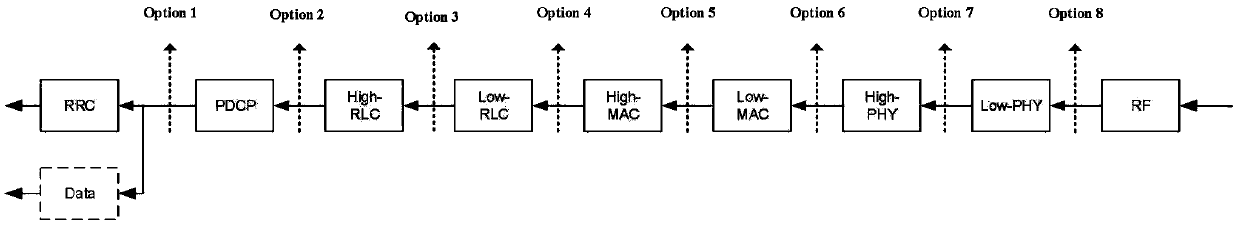

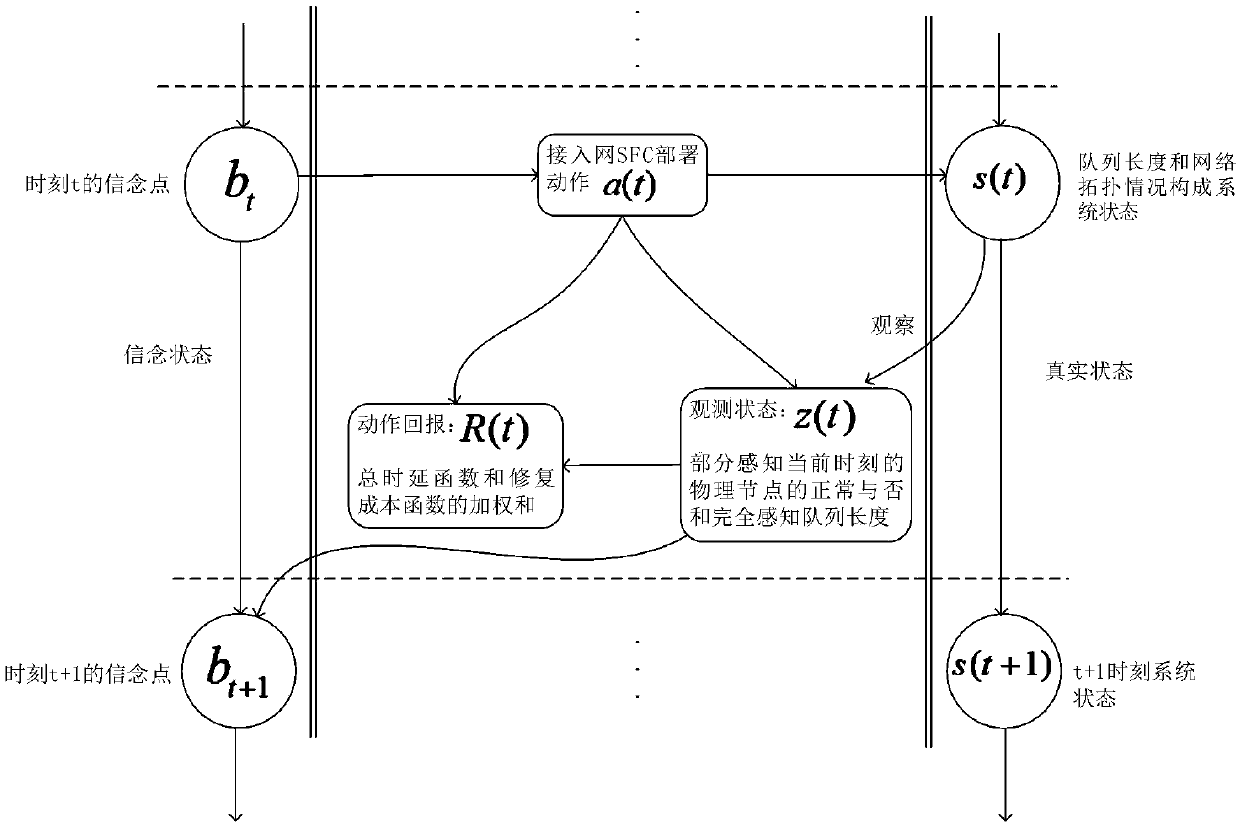

Access network service function chain deployment method based on random learning

Status: Active | CN108684046A | Tags: Improve latency; Improve resource utilization; Network planning; Access network; Learning based

The invention relates to an access network service function chain deployment method based on random learning, in the technical field of wireless communication. In 5G cloud access network scenarios, changes in the physical network topology cause high delay. To address this, the method establishes a service function chain deployment scheme that partially perceives the topology using a partially observable Markov decision process. Under 5G access network uplink conditions, changes in the underlying physical topology are perceived through a heartbeat-packet observation mechanism. Because observation errors prevent the complete true topology from being acquired, the service function chain deployment of the access network slice is adjusted adaptively and dynamically. This adjustment uses partial perception and random learning based on the partially observable Markov decision process, and it optimizes the delay of the slice on the access network side. Dynamic deployment is achieved by selecting the optimal service function chain deployment mode from partially perceived topology changes, which reduces delay and improves resource utilization.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

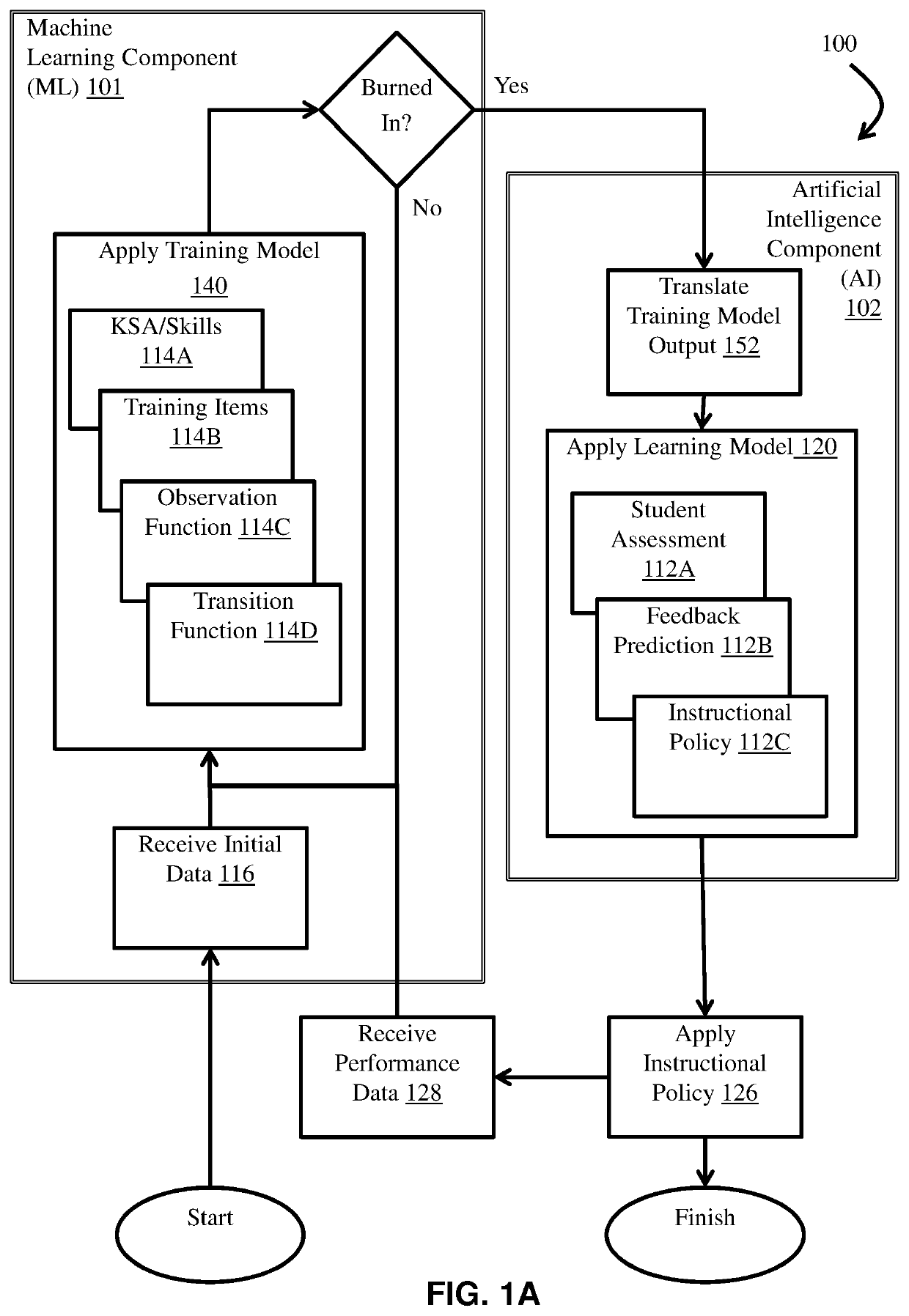

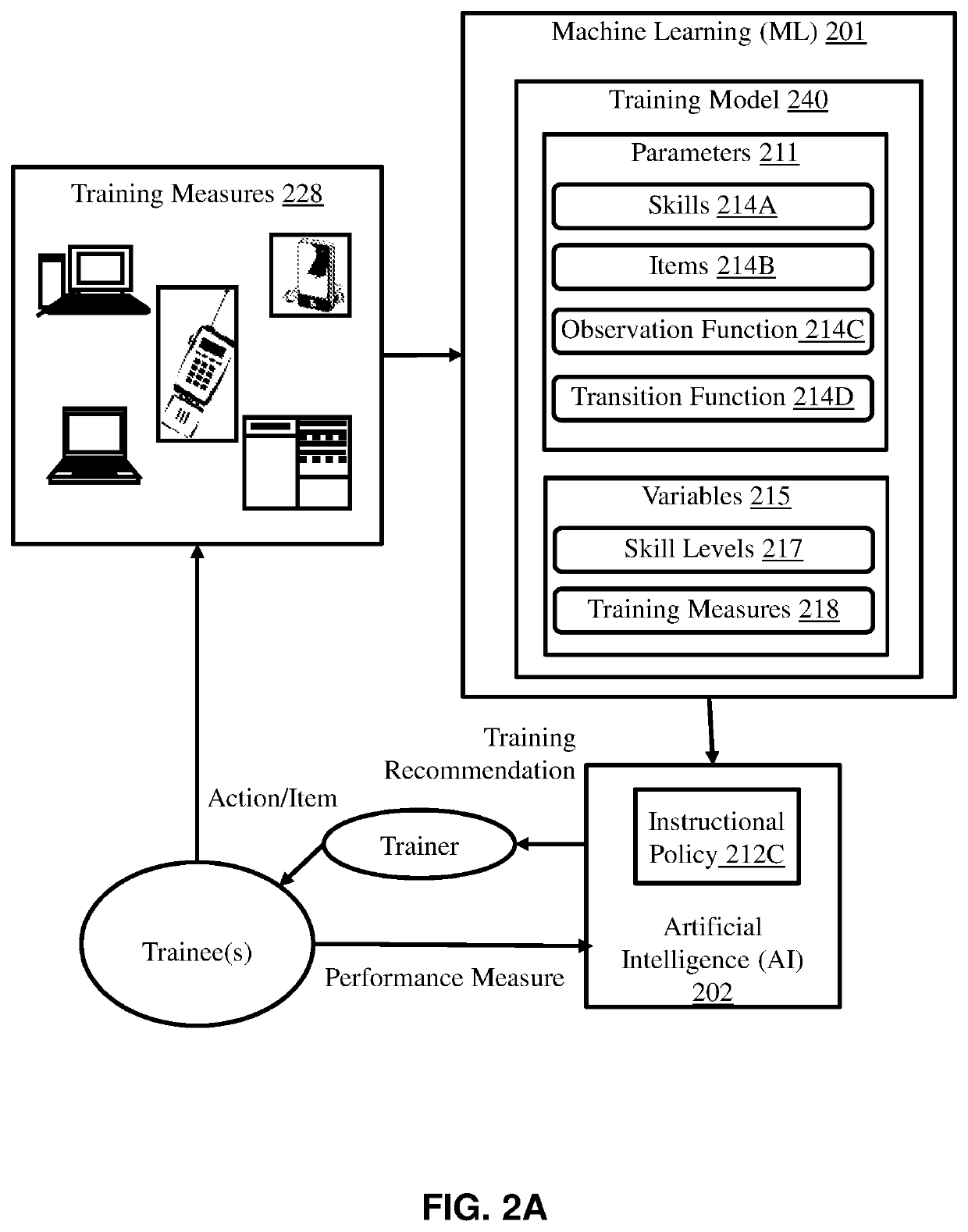

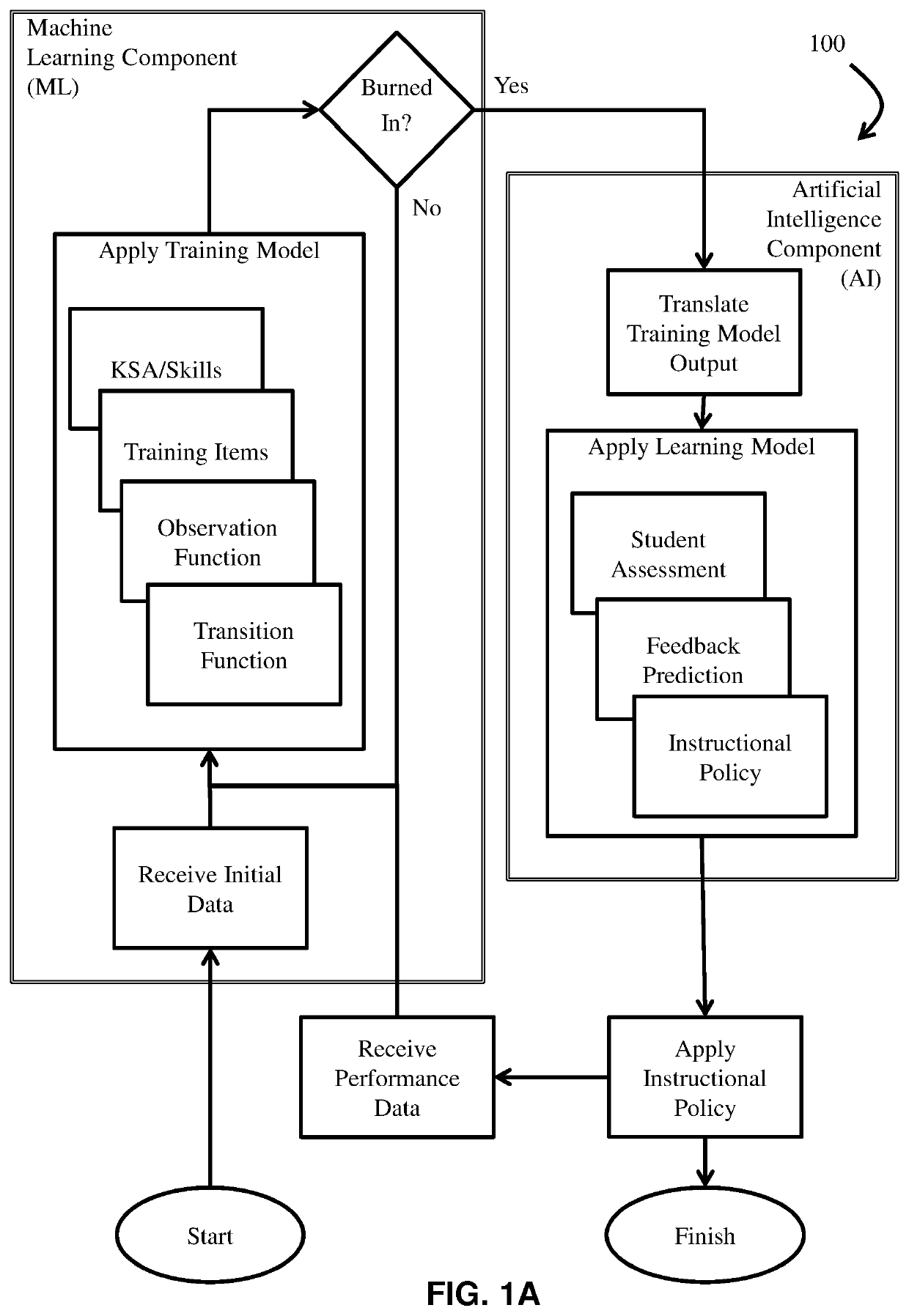

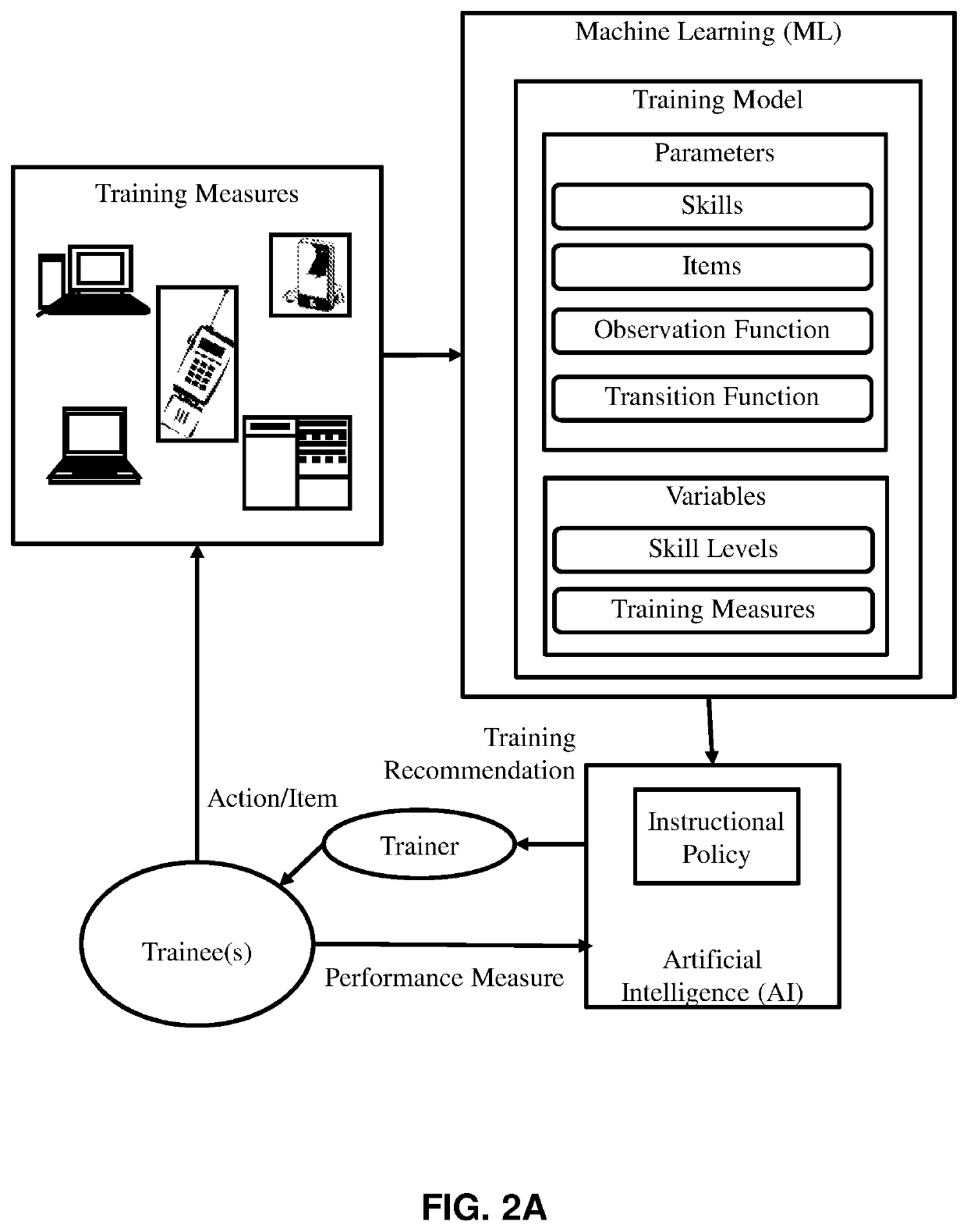

Machine learning system for a training model of an adaptive trainer

Status: Active | US10552764B1 | Tags: Low cost; Fast learning; Ensemble learning; Probabilistic networks; Self adaptive; Machine learning

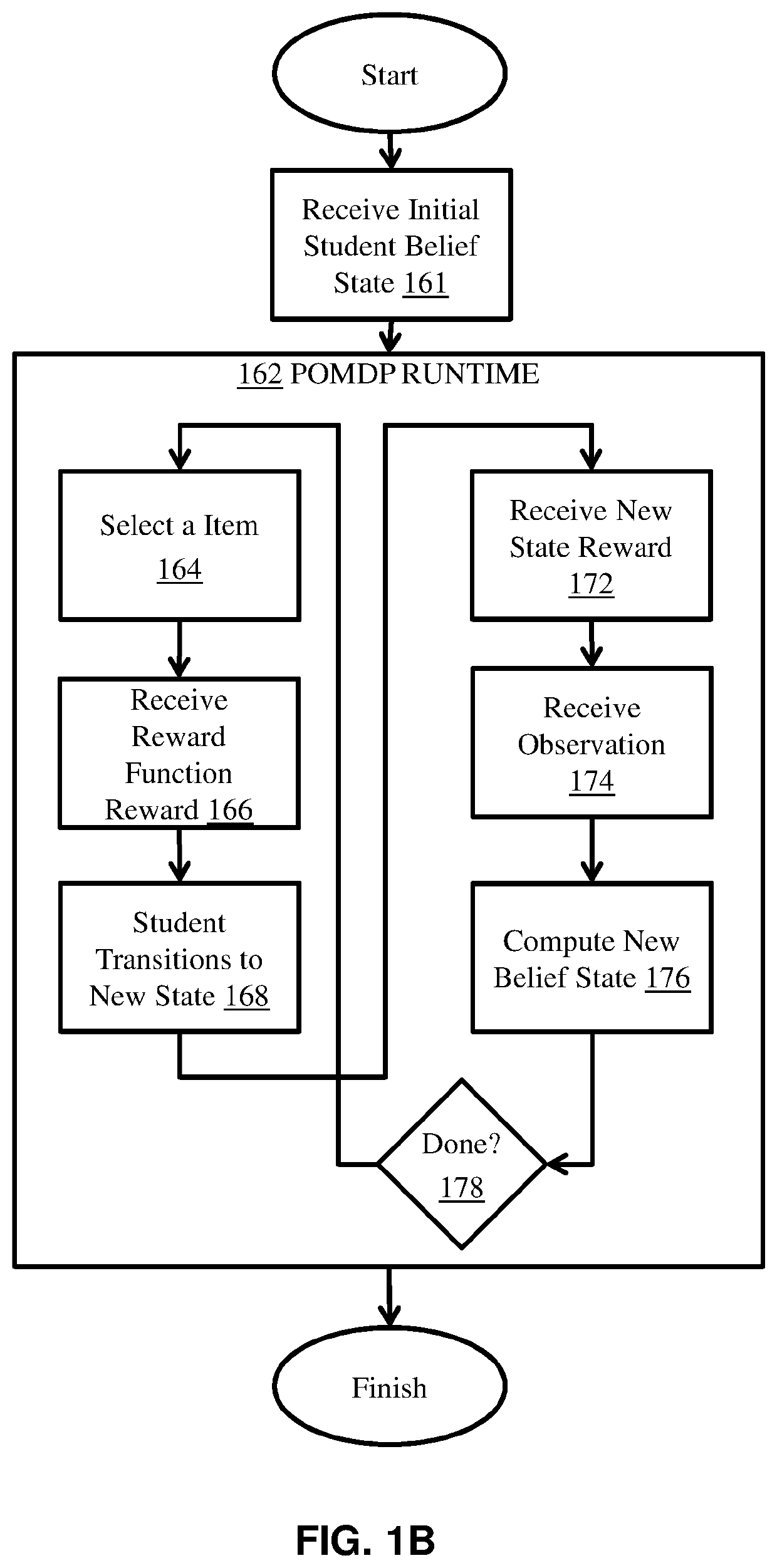

In one embodiment of the invention, a training model for students is provided that models how to present training items to students in a computer-based adaptive trainer. The training model receives student performance data and uses it to infer the students' underlying skill levels throughout the training sequence. Some embodiments of the training model also comprise machine learning techniques that allow the training model to adapt to changes in students' skills as the students perform on the training items it presents. Furthermore, the training model may also be used to inform a training optimization model, or learning model, in the form of a Partially Observable Markov Decision Process (POMDP).

Owner:APTIMA

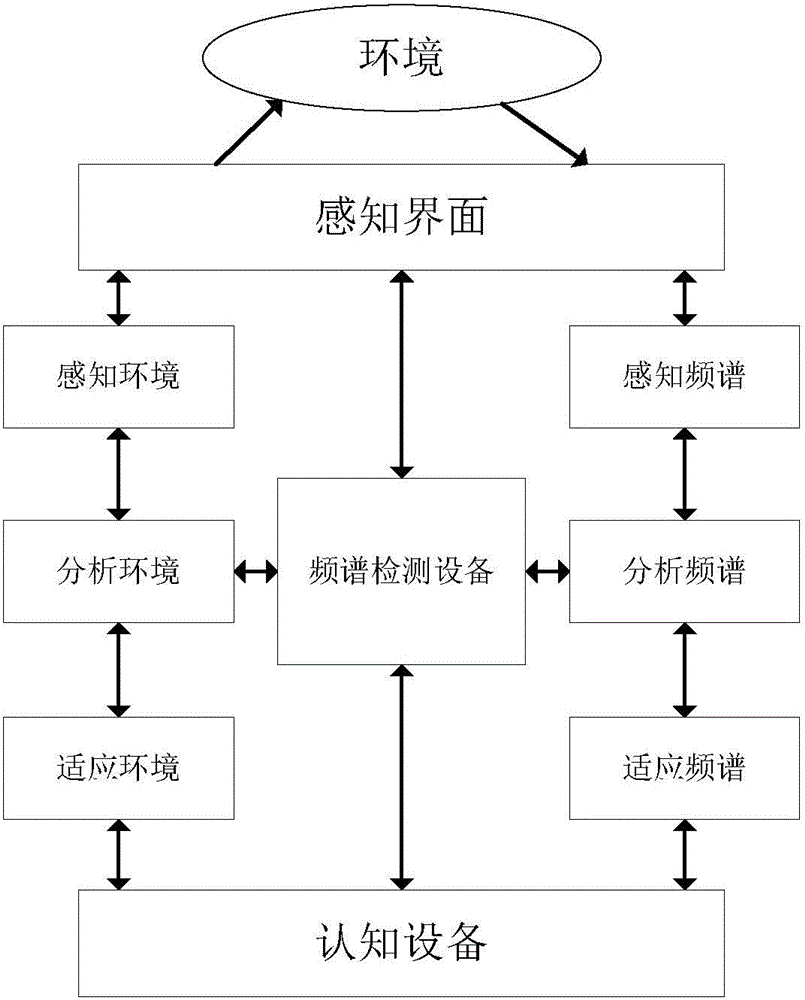

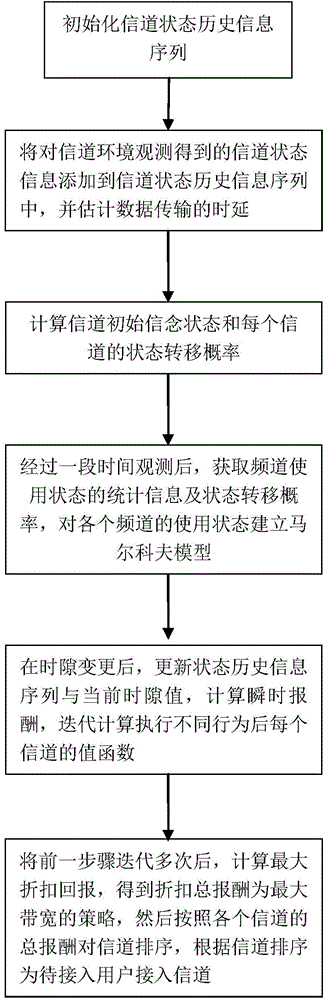

Frequency spectrum detection method based on partially observable Markov decision process model

Status: Inactive | CN104954088A | Tags: Good choice; Reduce the probability of collision; Transmission monitoring; Channel state information; Frequency spectrum

The invention relates to a frequency spectrum detection method based on a partially observable Markov decision process model. The method comprises the following steps:
- Channel state information is added to a historical channel-state sequence, and time delay is estimated to obtain the channel state information.
- The initial belief state and state transition probabilities of each channel are calculated.
- Statistics on channel usage and the state transition probabilities are gathered by observation over a period of time, and a Markov model of each channel's usage state is established.
- At each new time slot, the state history sequence and the current slot value are updated.
- The belief state is updated from the channels' state transition probabilities combined with response information, and the instantaneous reward is calculated.
- The value function of each channel under each possible action is calculated.
- The maximum discounted return obtainable by secondary users is calculated, yielding the policy that maximizes the total discounted reward in terms of bandwidth.

The channels are then sorted in decreasing order of total reward. When a user needs to transmit data, it is guided to attempt access to the channels in this new order.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
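
In this setting, each channel's usage is modeled as a two-state (idle/busy) Markov chain whose belief is propagated slot by slot. The following is a minimal sketch under stated assumptions:
- It ranks channels by the one-step expected reward (belief that the channel is idle × bandwidth). This is a myopic simplification, not the full discounted-return computation the abstract describes.
- Feedback after access is treated as error-free.
- The class name `Channel`, the transition probabilities and the bandwidths are hypothetical.

```python
from dataclasses import dataclass


def stationary_idle(p_ii: float, p_bi: float) -> float:
    """Long-run probability that a two-state (idle/busy) channel is idle."""
    return p_bi / (p_bi + 1.0 - p_ii)


@dataclass
class Channel:
    name: str
    p_ii: float         # P(idle next slot | idle now)
    p_bi: float         # P(idle next slot | busy now)
    bandwidth: float    # reward if a transmission on this channel succeeds
    belief_idle: float  # current belief that the channel is idle

    def predict(self) -> None:
        """Propagate the belief one time slot through the Markov chain."""
        b = self.belief_idle
        self.belief_idle = b * self.p_ii + (1.0 - b) * self.p_bi

    def observe(self, idle: bool) -> None:
        """Assumed error-free feedback: after access, the state is known."""
        self.belief_idle = 1.0 if idle else 0.0

    def expected_reward(self) -> float:
        return self.belief_idle * self.bandwidth


def rank_channels(channels):
    """Advance every belief one slot, then order channels by expected reward."""
    for ch in channels:
        ch.predict()
    return sorted(channels, key=Channel.expected_reward, reverse=True)


ch_a = Channel("A", 0.9, 0.2, 1.0, stationary_idle(0.9, 0.2))
ch_b = Channel("B", 0.5, 0.5, 2.0, stationary_idle(0.5, 0.5))
ch_c = Channel("C", 0.8, 0.1, 1.5, stationary_idle(0.8, 0.1))
ch_c.observe(idle=True)  # channel C was found idle in the last slot
order = [ch.name for ch in rank_channels([ch_a, ch_b, ch_c])]
```

The stationary distribution serves as the initial belief. Recent feedback (channel C) can move a narrower channel ahead of channels whose beliefs are still at their long-run averages.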

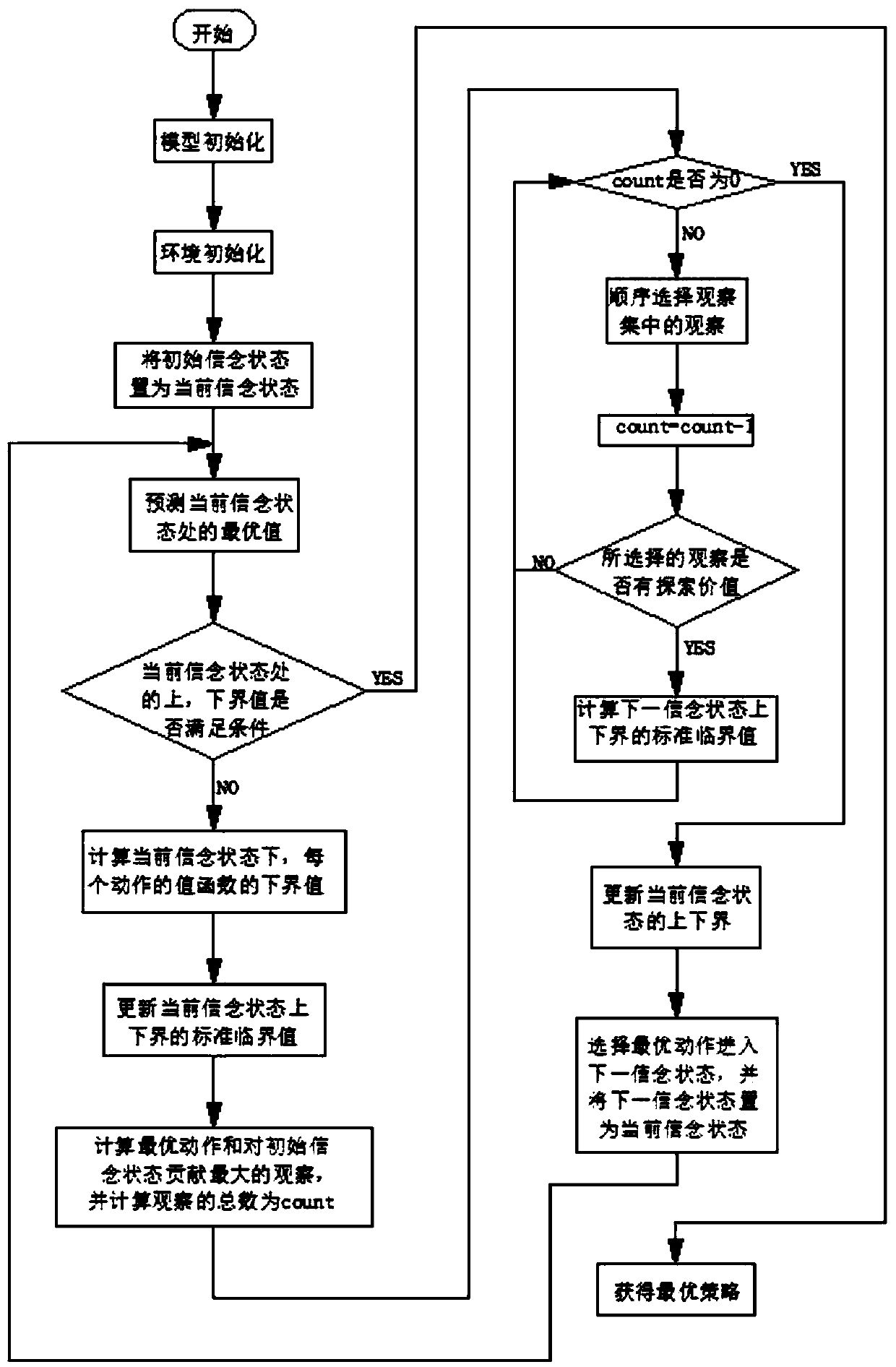

Robot optimal path planning method based on partially observable Markov decision process

Status: Active | CN108680155A | Tags: Improve efficiency; Excellent path; Navigational calculation instruments; Position/course control in two dimensions; Algorithm; Planning method

The invention discloses a robot optimal path planning method based on a partially observable Markov decision process. The robot searches for an optimal path to a target position. The method takes a POMDP model and the SARSOP algorithm as its basis and uses a GLS search method as the search heuristic. For large-scale problems with continuous state and observation spaces, earlier classical algorithms use a test as the heuristic condition and repeatedly update many similar paths. Compared with them, the method reduces the number of belief upper- and lower-bound updates without affecting the final optimal policy, which improves algorithm efficiency. At the same time, the robot obtains a better policy and finds a better path.

Owner:SUZHOU UNIV

Information processing apparatus, information processing method and computer program

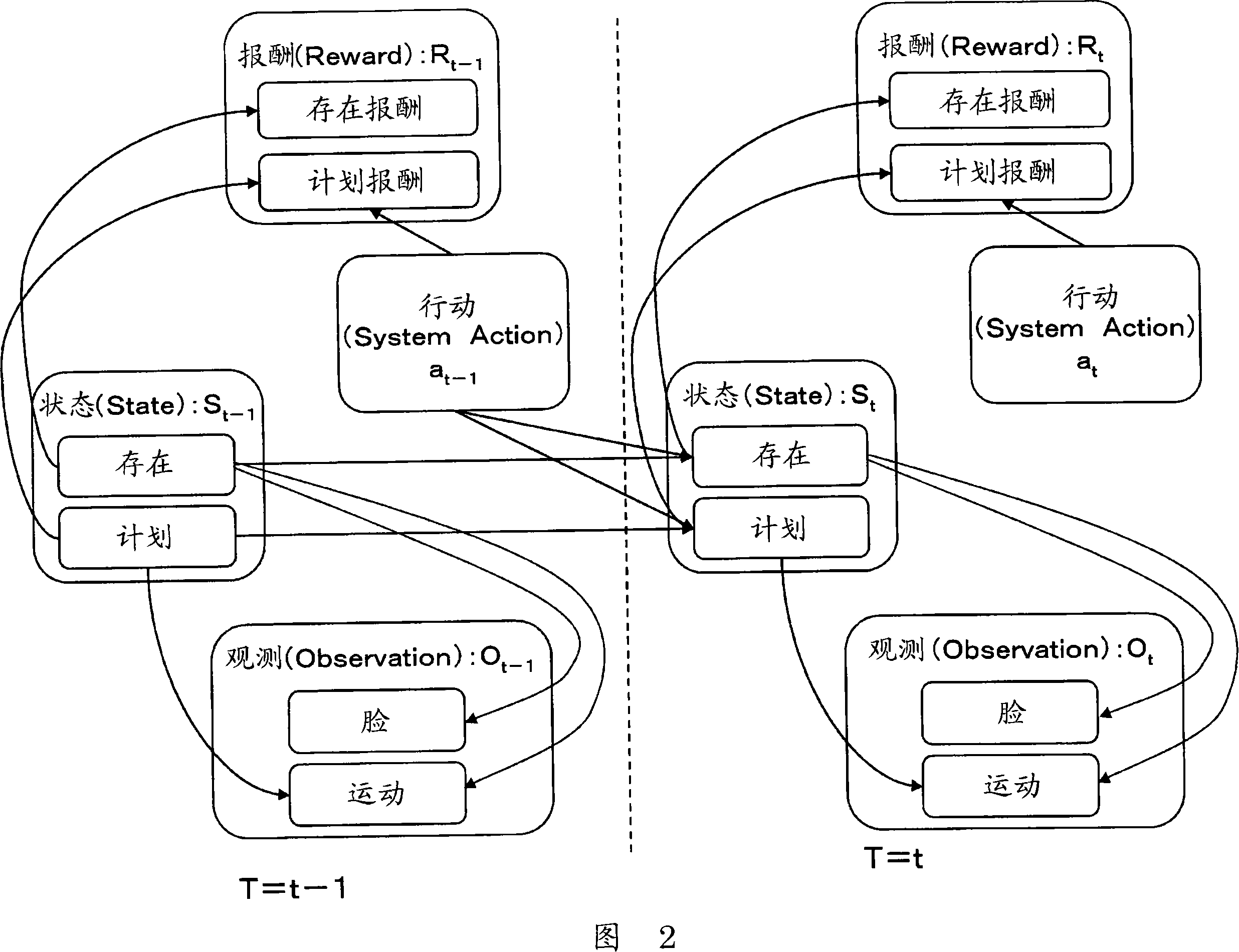

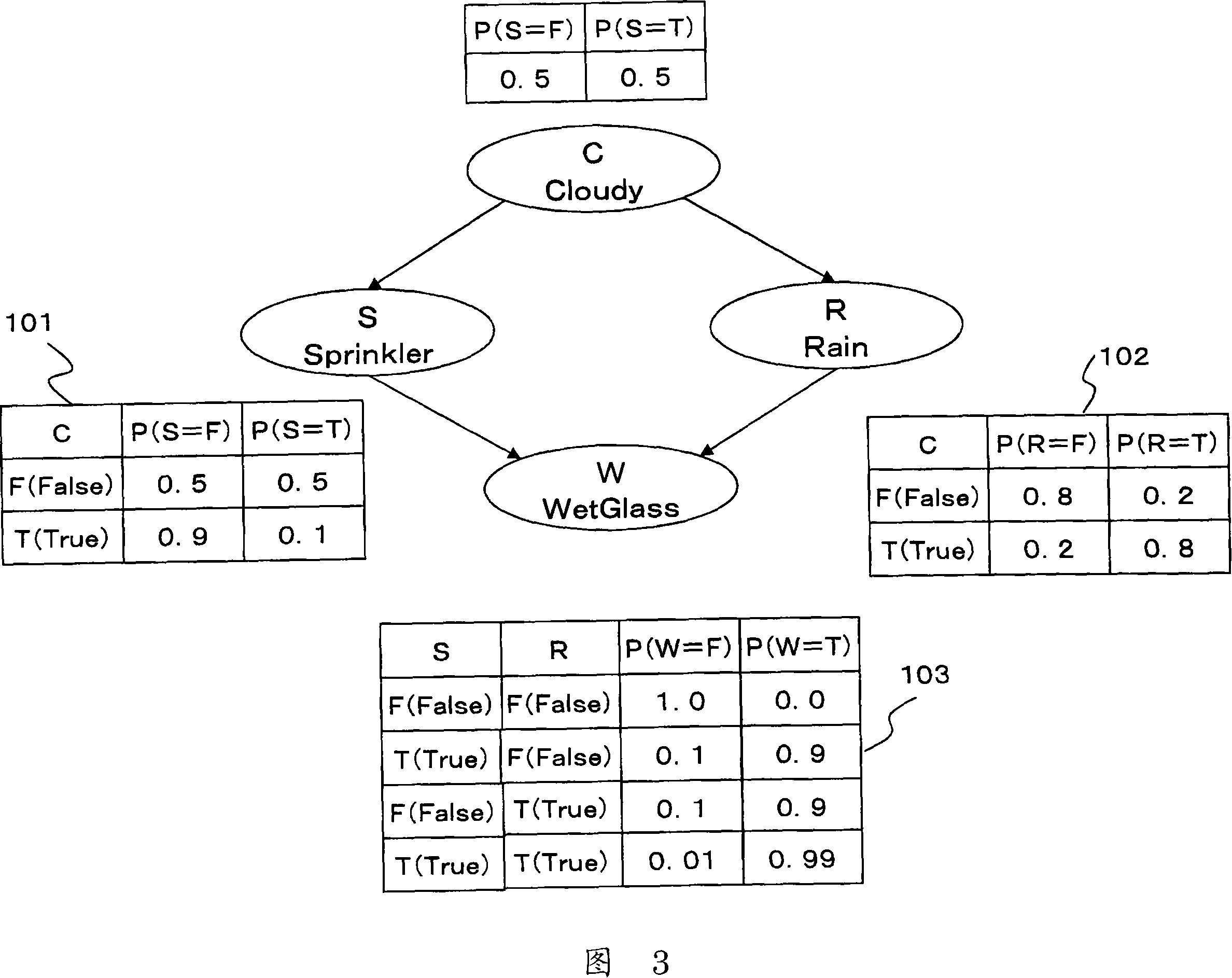

Status: Inactive | CN101105845A | Tags: Effective action decision processing; Program control using stored programs; Probabilistic networks; Information space; Information processing

Disclosed herein is an information-processing apparatus for constructing an information analysis processing configuration. The configuration is applied to information analysis processing in an observation domain that includes uncertainty. The apparatus includes a data processing unit that performs the following steps:
- takes a Partially Observable Markov Decision Process as the basic configuration;
- treats each element of every information space defined in the Partially Observable Markov Decision Process as a unit;
- analyzes the relations between the elements;
- on the basis of the analysis results, constructs a Factored Partially Observable Markov Decision Process, that is, a Partially Observable Markov Decision Process that includes the relations between the elements.

Owner:SONY CORP

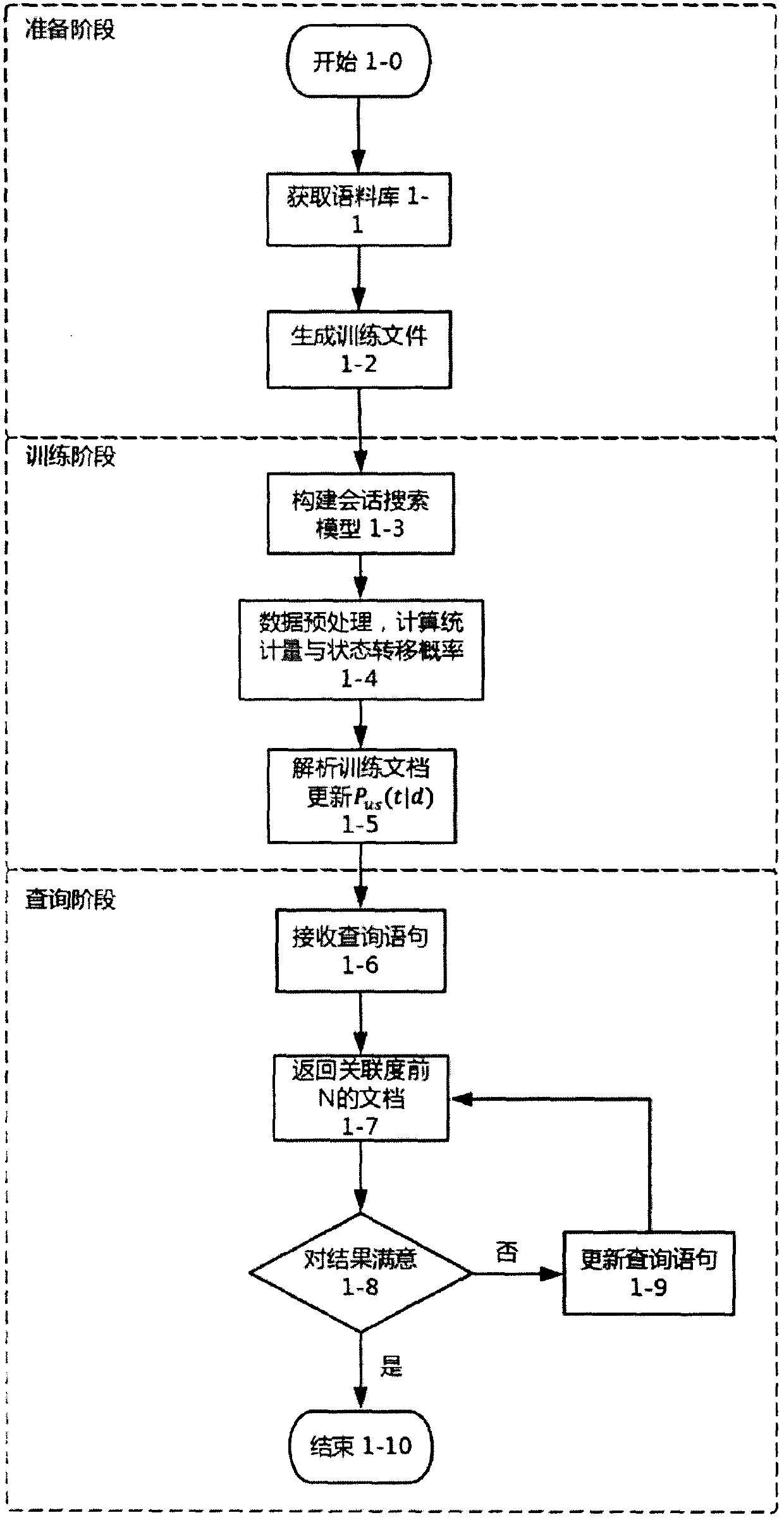

Session search method based on partially observable Markov decision process models

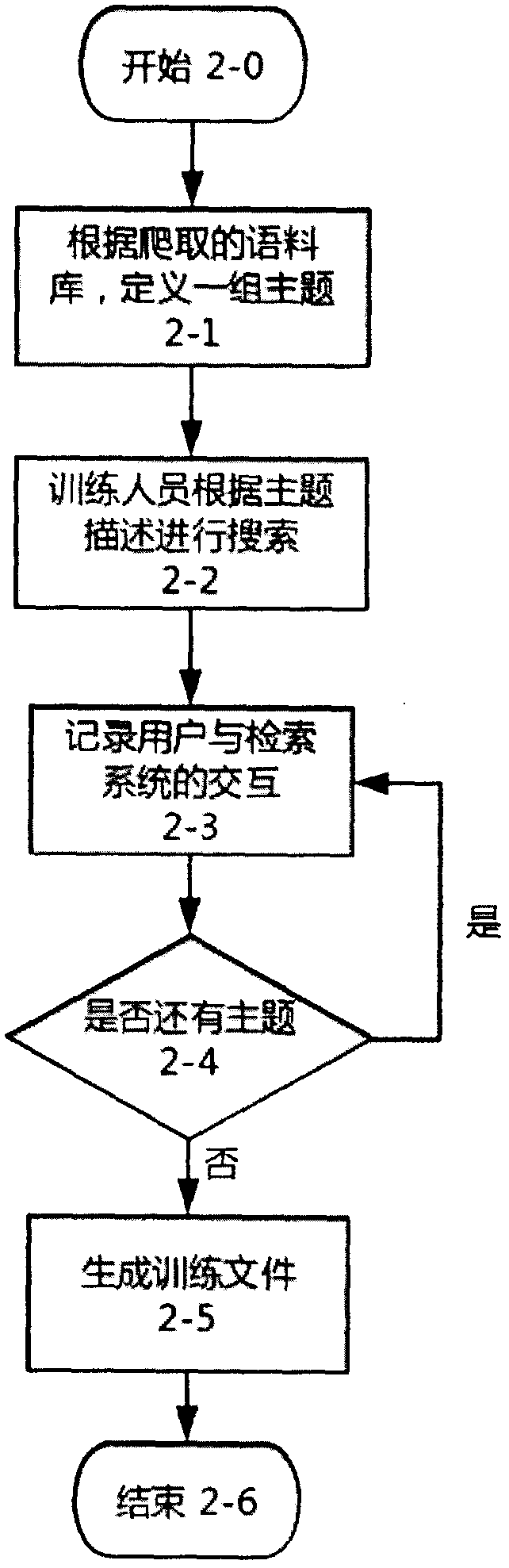

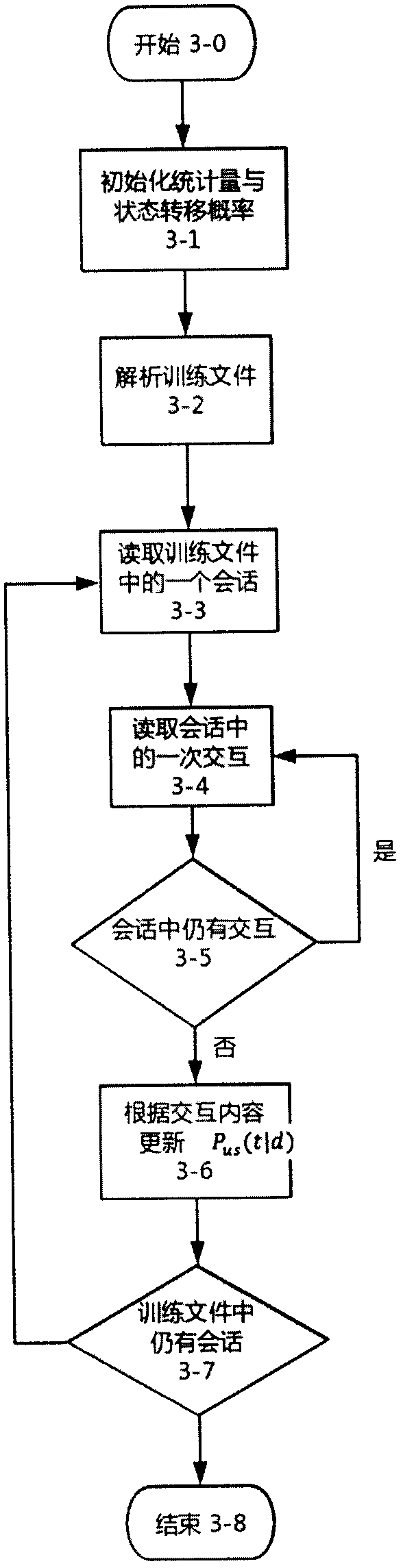

Status: Inactive | CN107729512A | Tags: Web data indexing; Special data processing applications; Training phase; Documentation

The invention discloses a session search method based on partially observable Markov decision process models. The method includes three phases:
1) Preparation phase: corpora are annotated by topic, the interaction process is recorded as users retrieve each topic, and training files are generated once all retrieval interactions are recorded.
2) Training phase: statistics are initialized, initial state transition functions are computed, the session interaction data are parsed from the training files, and the state transition functions are updated according to those data.
3) Retrieval phase: an initial query is received and then updated whenever the user is dissatisfied with the returned documents, until satisfactory document results are returned to the user.

Owner:NANJING UNIV

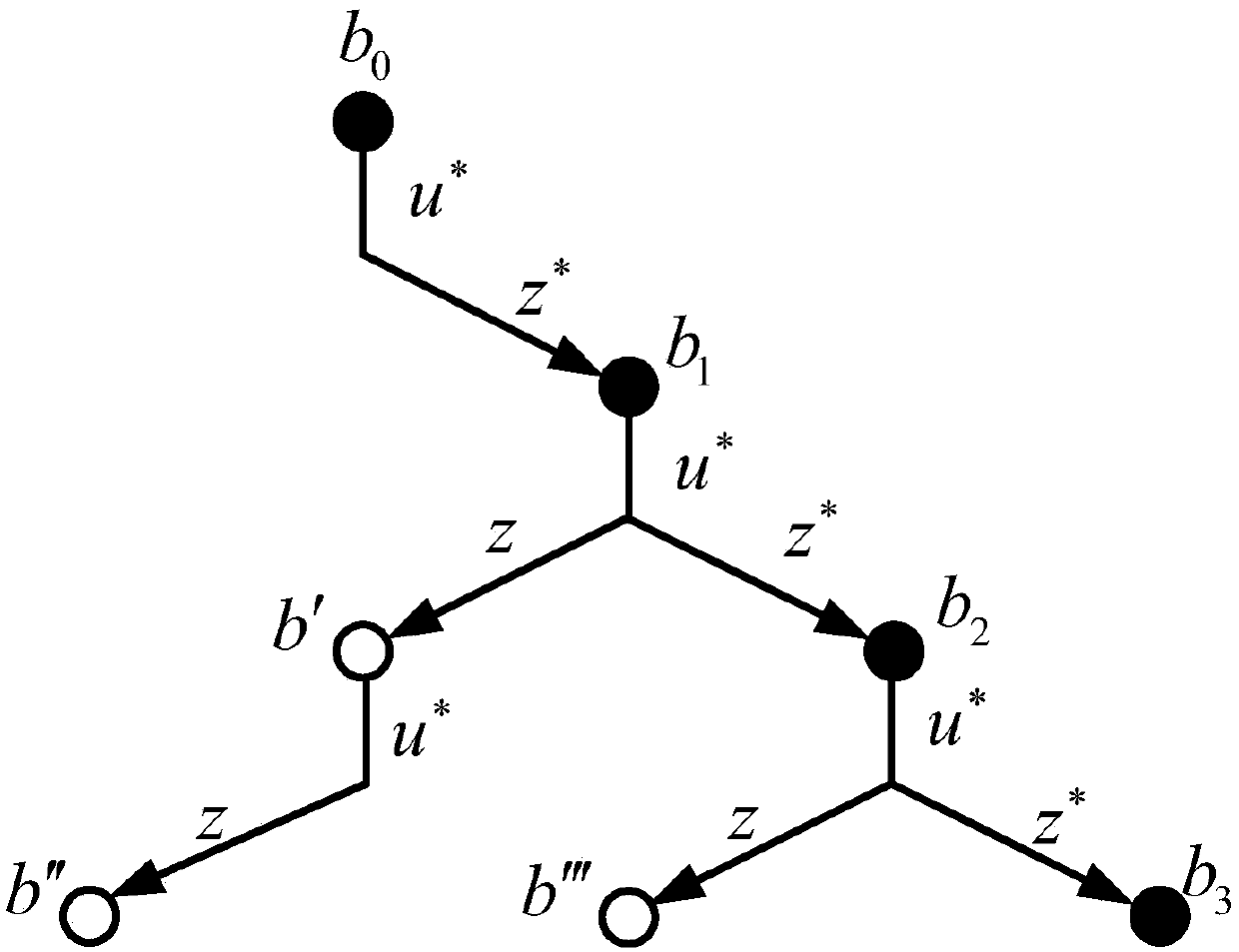

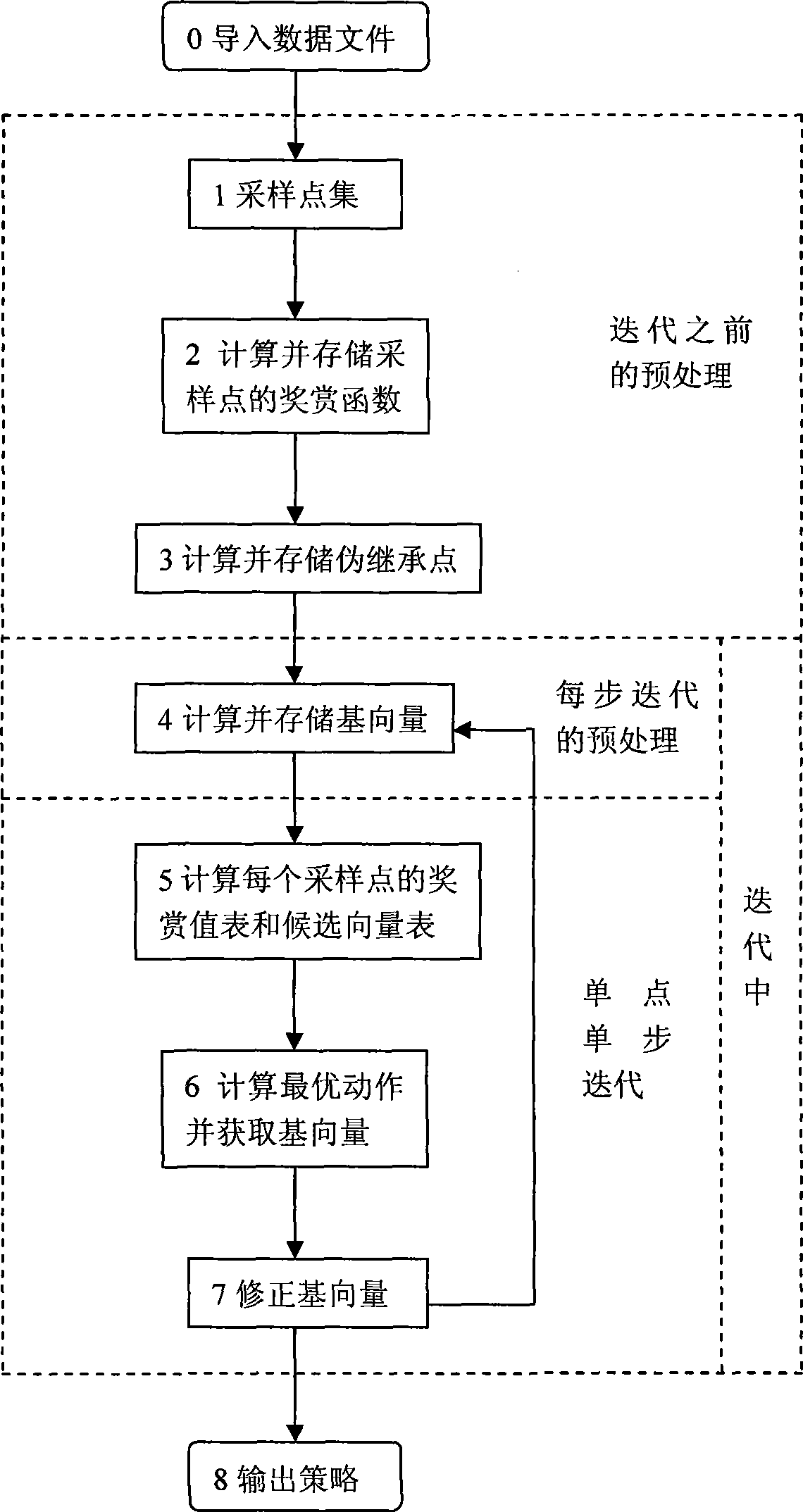

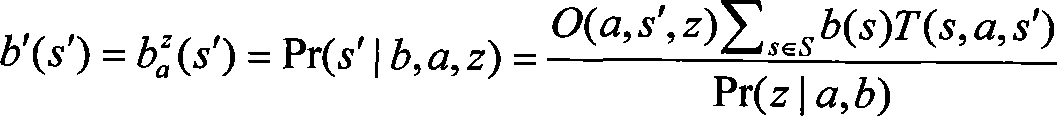

Preprocessing method for point-based partially observable Markov decision processes

Status: Inactive | CN101398914A | Tags: Fast convergence; Overcoming the problem of high computational complexity; Mathematical models; Pretreatment method; Reward value

The invention provides a preprocessing method for point-based partially observable Markov decision processes. The method comprises the following steps:
1. Pre-processing before iteration: a. a point set is sampled by random interaction with the environment; b. the reward function at each sampled point is computed and stored; c. pseudo-successor points are computed and stored; d. end.
2. Pre-processing at each iteration step: e. basis vectors are computed and stored; f. end.
3. Single-point, single-step iteration: g. a reward value table and a candidate vector table are computed for each sampled point; h. the optimal action is computed and its basis vector obtained; i. the basis vector is corrected by an error term; j. end.

The method pre-processes each sampled belief point and introduces the concept of a basis vector. This avoids a large amount of repeated and meaningless computation and speeds up the algorithm by a factor of 2 to 4.

Owner:NANJING UNIV
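
Point-based solvers spend most of their time on a Bellman backup at each sampled belief point. That is the computation this preprocessing aims to shortcut. For context, here is a minimal sketch of the standard point-based (PBVI-style) backup without any caching. It is not the patent's basis-vector method. The nested-list layouts `T[a][s][s2]`, `O[a][s2][o]`, `R[a][s]` and the toy problem are assumptions.

```python
def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


def point_backup(b, alphas, T, O, R, gamma):
    """Point-based Bellman backup at belief b (standard PBVI-style, no caching).

    T[a][s][s2] = P(s2 | s, a), O[a][s2][o] = P(o | s2, a), R[a][s] = reward.
    alphas: current alpha vectors, each a list indexed by state.
    Returns (new_alpha_vector, action_of_that_vector).
    """
    n_states, n_obs = len(b), len(O[0][0])
    best_vec, best_val, best_a = None, float("-inf"), None
    for a in range(len(R)):
        vec = list(R[a])
        for o in range(n_obs):
            # Back-project every alpha vector through action a and observation o.
            projections = [
                [gamma * sum(T[a][s][s2] * O[a][s2][o] * alpha[s2]
                             for s2 in range(n_states))
                 for s in range(n_states)]
                for alpha in alphas
            ]
            # Keep only the projection that is best at this belief point.
            best_proj = max(projections, key=lambda g: dot(b, g))
            vec = [v + g for v, g in zip(vec, best_proj)]
        value = dot(b, vec)
        if value > best_val:
            best_vec, best_val, best_a = vec, value, a
    return best_vec, best_a


# Toy problem: states never change, observations reveal the state,
# and action a pays 1 in state a.
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]
T = [IDENTITY, IDENTITY]
O = [IDENTITY, IDENTITY]
R = [[1.0, 0.0], [0.0, 1.0]]
b = [0.7, 0.3]

alpha1, a1 = point_backup(b, [[0.0, 0.0]], T, O, R, gamma=0.9)
alpha2, a2 = point_backup(b, [[1.0, 0.0], [0.0, 1.0]], T, O, R, gamma=0.9)
```

The projections for each (action, observation) pair depend only on the model and the current alpha set, not on the belief point. Quantities like these are natural candidates for caching across sampled points.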

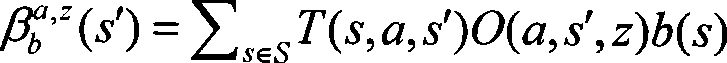

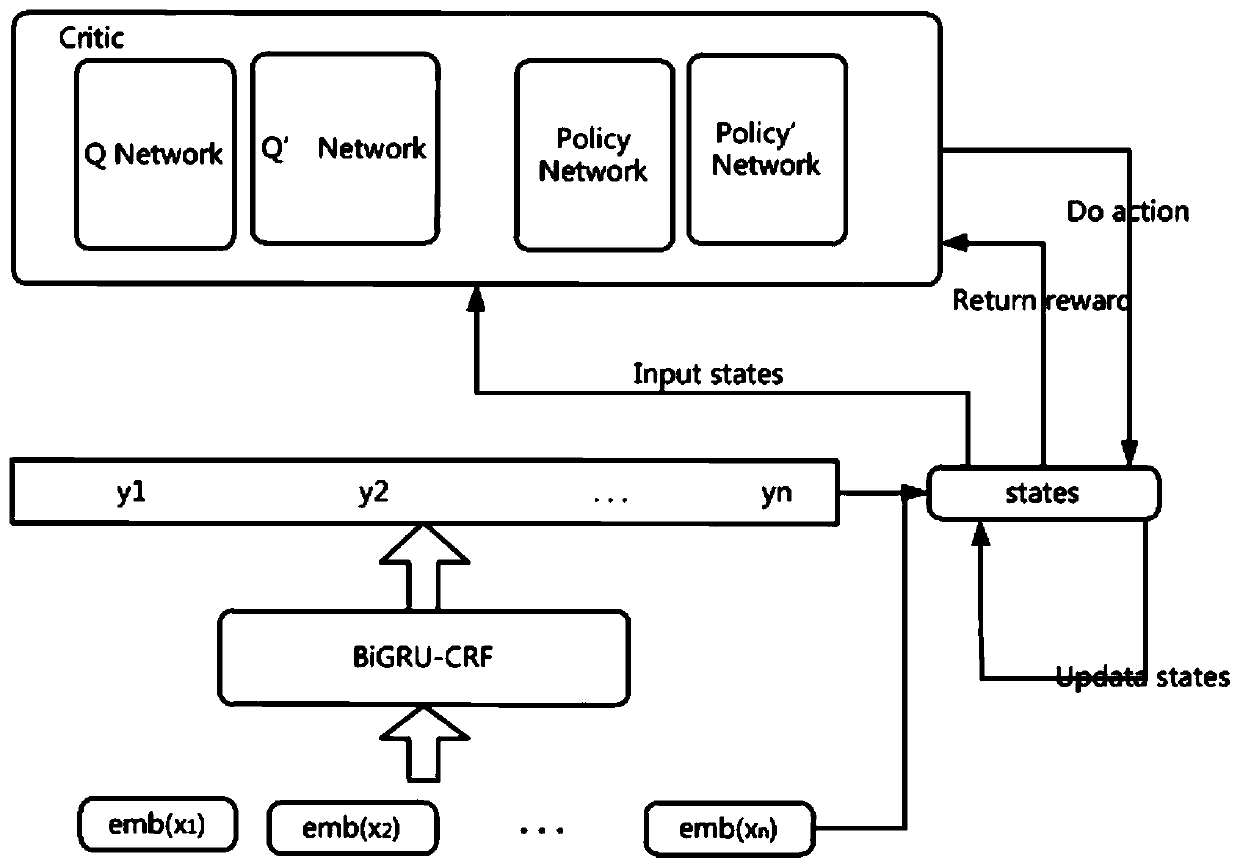

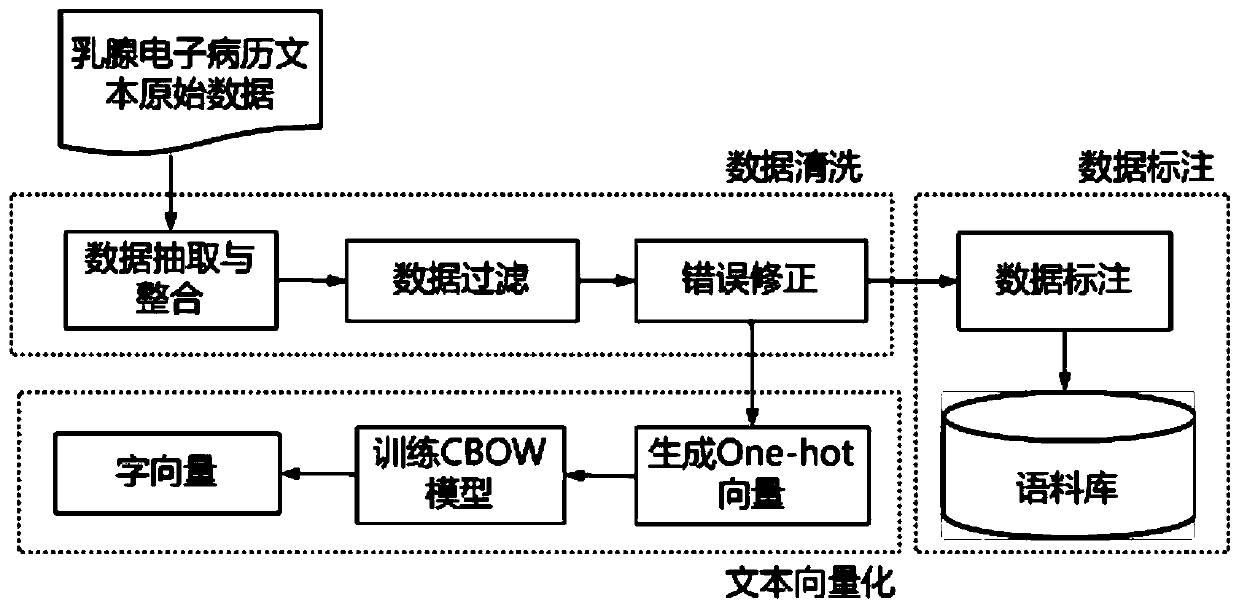

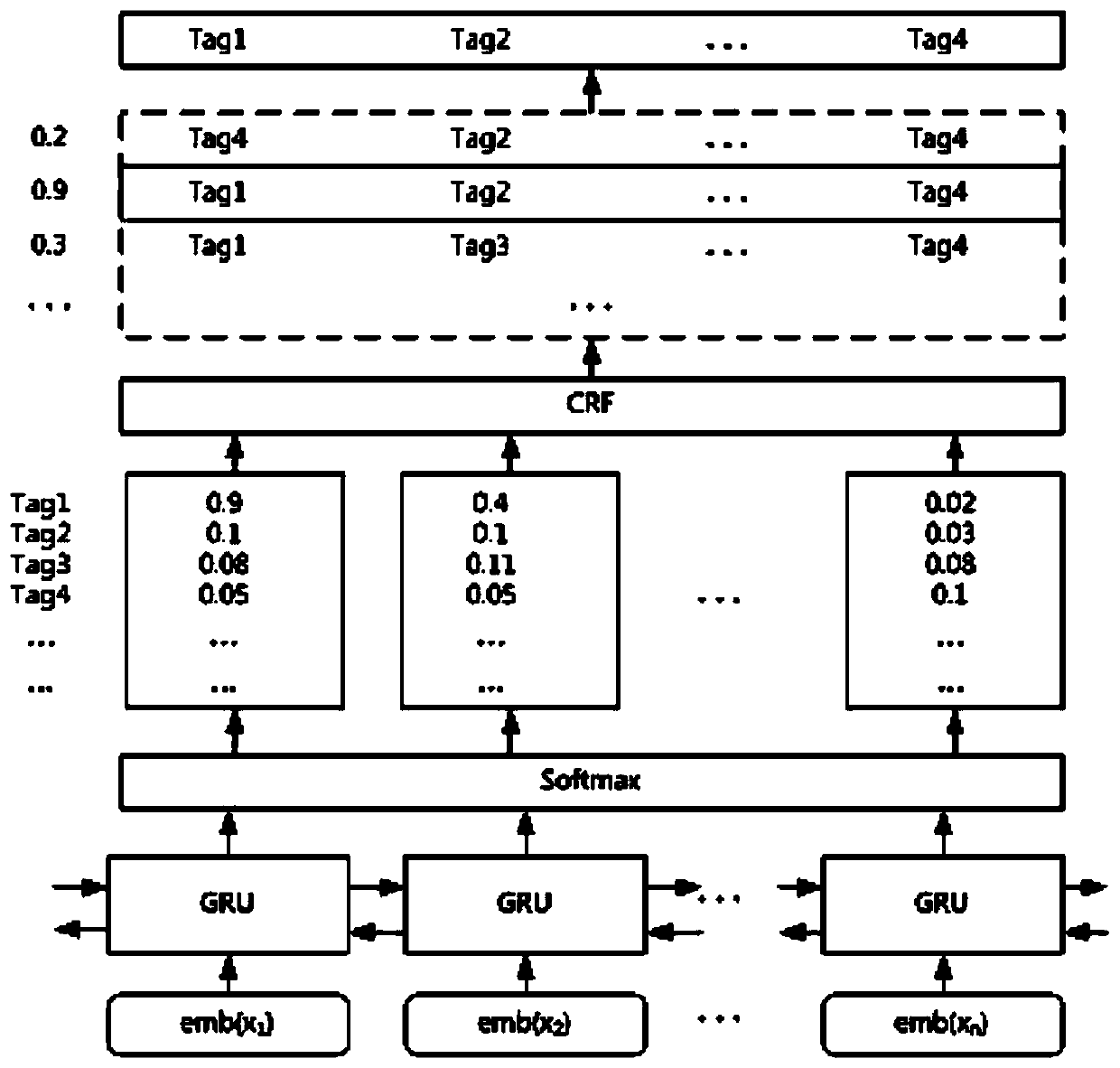

Mammary gland medical record entity recognition labeling enhancement system based on multi-agent reinforcement learning

Status: Pending | CN111312354A | Tags: Increase chance of cure; Improve entity recognition performance; Natural language data processing; Neural architectures; Medical record; Original data

The invention discloses a mammary gland medical record entity recognition labeling enhancement system based on multi-agent reinforcement learning. The system comprises three modules:
- A mammary gland clinical electronic medical record data preprocessing module, which converts raw data into a representation the system can identify and analyze, and analyzes the records in terms of content, structural features, linguistic features and semantic features.
- A medical clinical entity recognition module, which extracts medical concept entities from the text.
- A reinforcement learning labeling enhancement module, which corrects erroneous entity labels extracted from mammary gland electronic medical records.

The multi-agent reinforcement learning model for entity recognition sequence labeling is designed on the basis of a partially observable Markov decision process and corrects the labeling results. Compared with traditional deep learning entity recognition models, it effectively improves accuracy.

Owner:DONGHUA UNIV +1

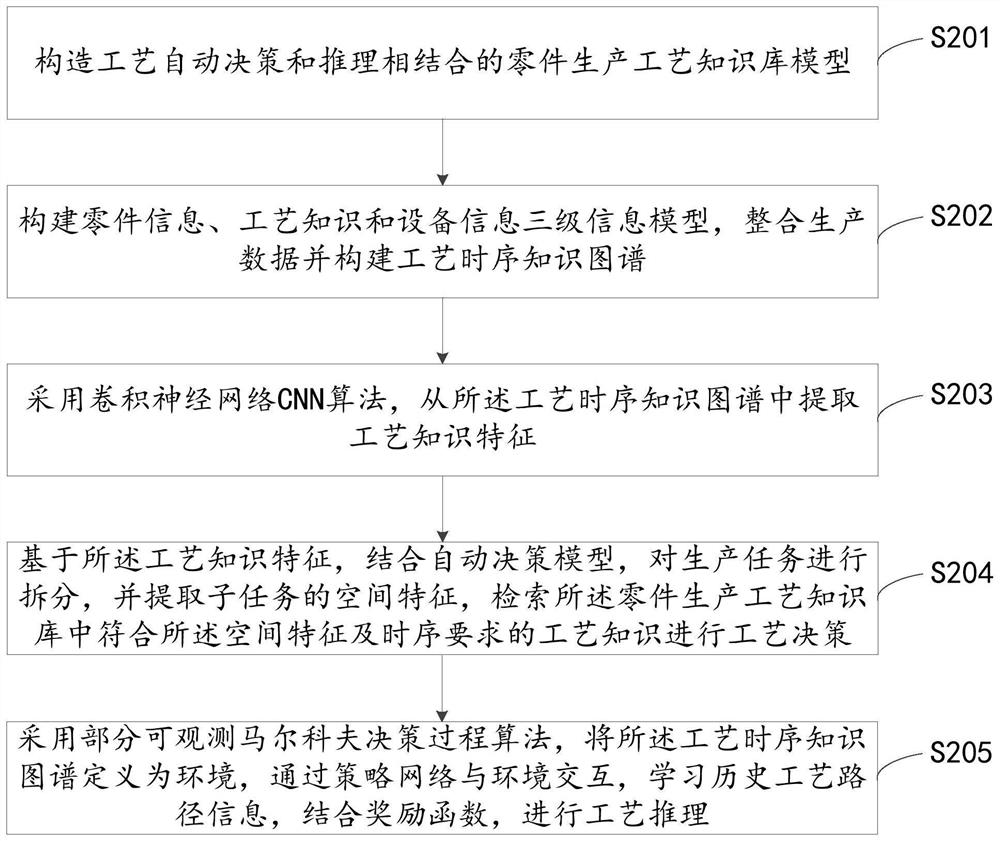

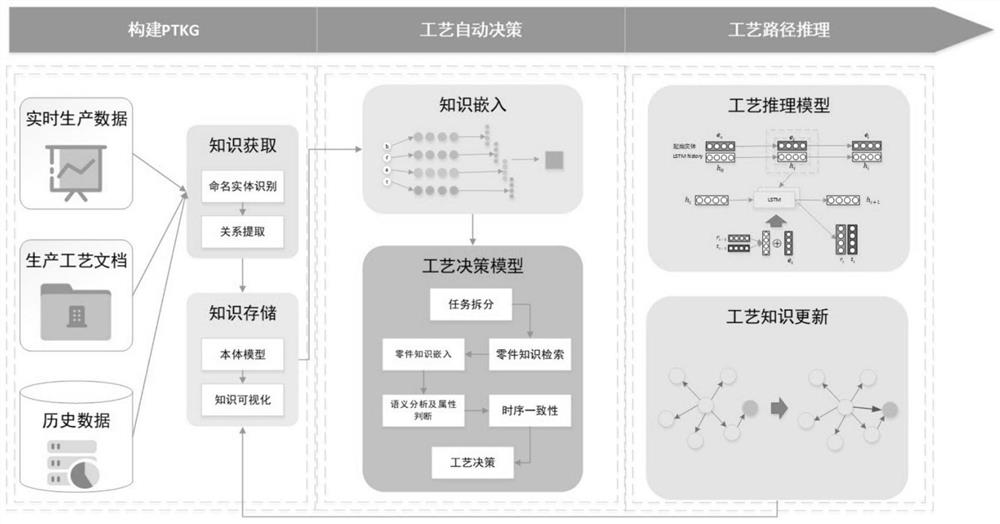

Process automatic decision-making and reasoning method and device, computer equipment and storage medium

Status: Pending | CN114647741A | Tags: Dynamic; Improve decision-making response speed; Data processing applications; Neural architectures; Decision model; Decision taking

The invention belongs to the field of deep learning and relates to a process automatic decision-making and reasoning method and device, computer equipment and a storage medium. The method comprises the following steps:
- constructing a knowledge base model of part production processes;
- constructing a three-level information model of part information, process knowledge and equipment information, and integrating production data to build a process time-sequence knowledge graph;
- extracting process knowledge features from the graph;
- splitting the production task with an automatic decision-making model based on those features, extracting the spatial features of the subtasks, and retrieving from the part production process knowledge base the process knowledge that satisfies the spatial features and time-sequence requirements, for use in process decision-making;
- carrying out process reasoning with a partially observable Markov decision process algorithm that treats the process time-sequence knowledge graph as the environment.

For an unknown production process, the reasoning path is manually verified once obtained and then added to the process time-sequence knowledge graph, making the process knowledge more complete.

Owner:GUANGDONG POLYTECHNIC NORMAL UNIV

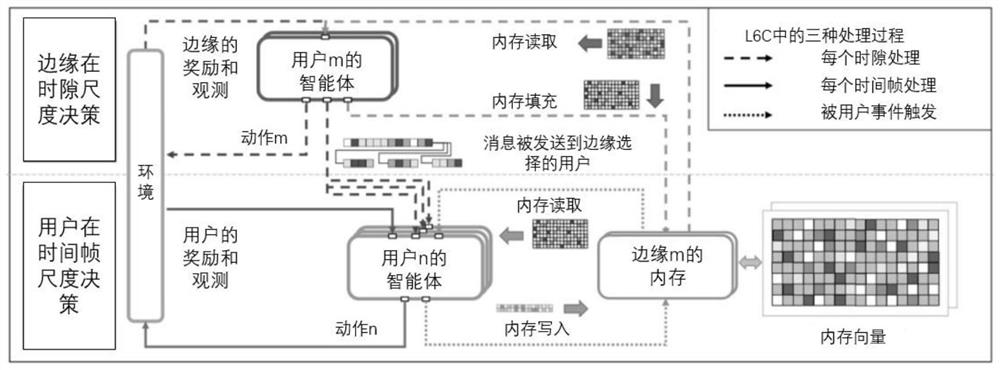

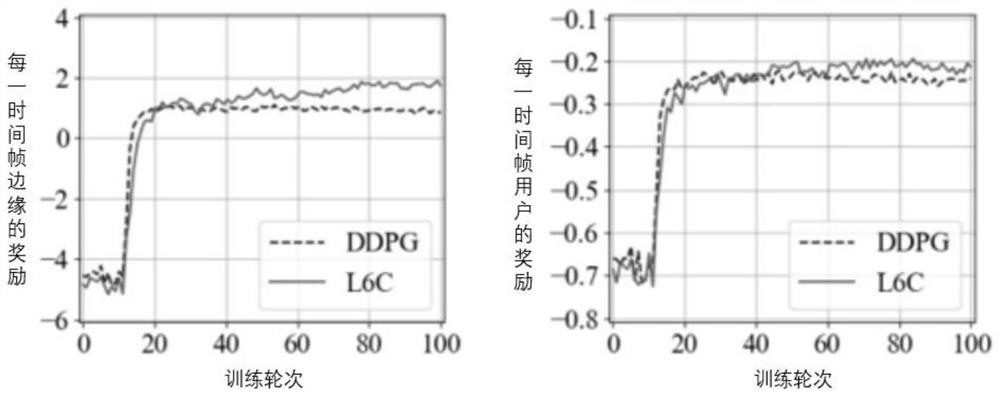

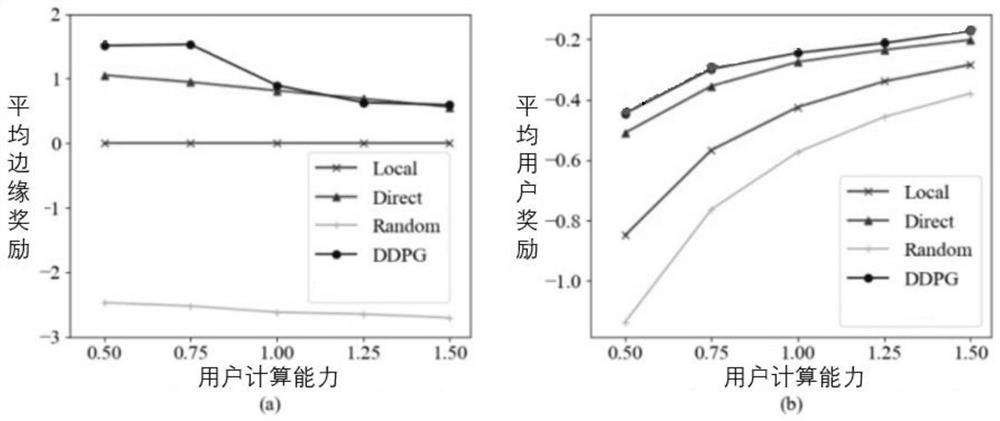

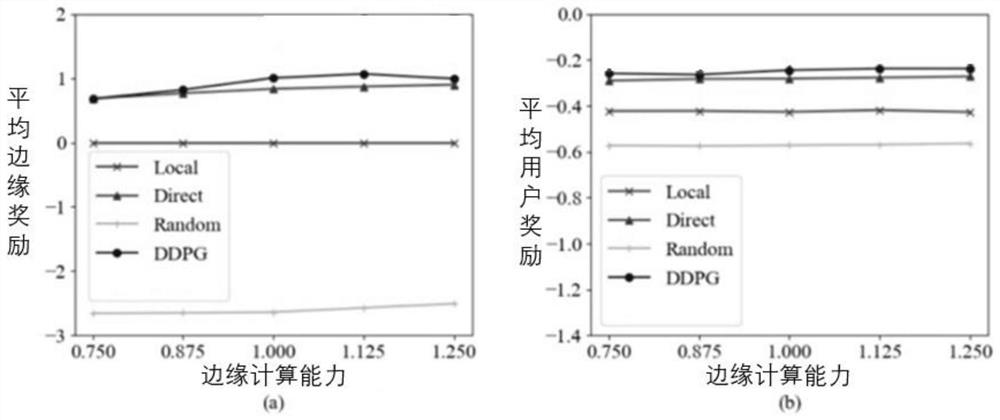

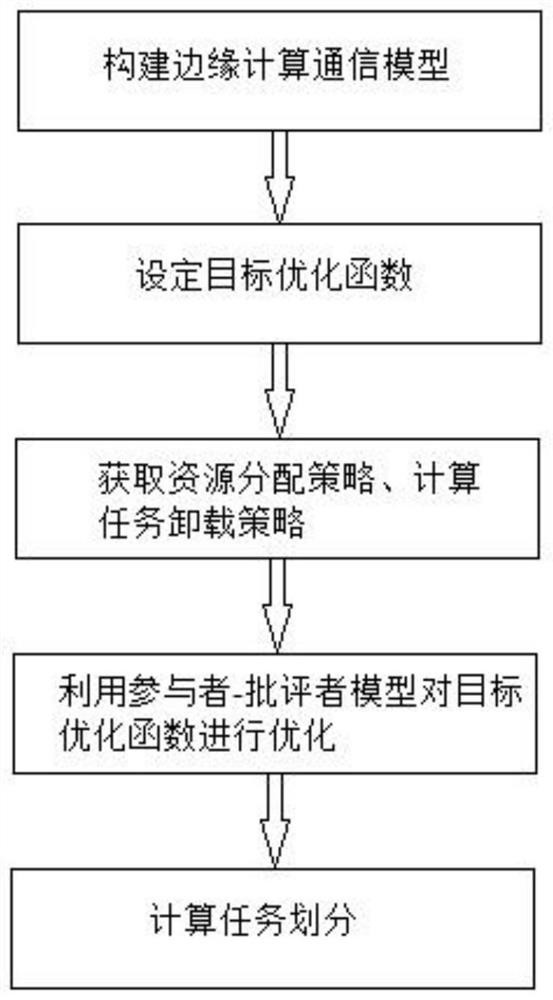

Information interaction method for improving the edge computing performance of multi-agent reinforcement learning

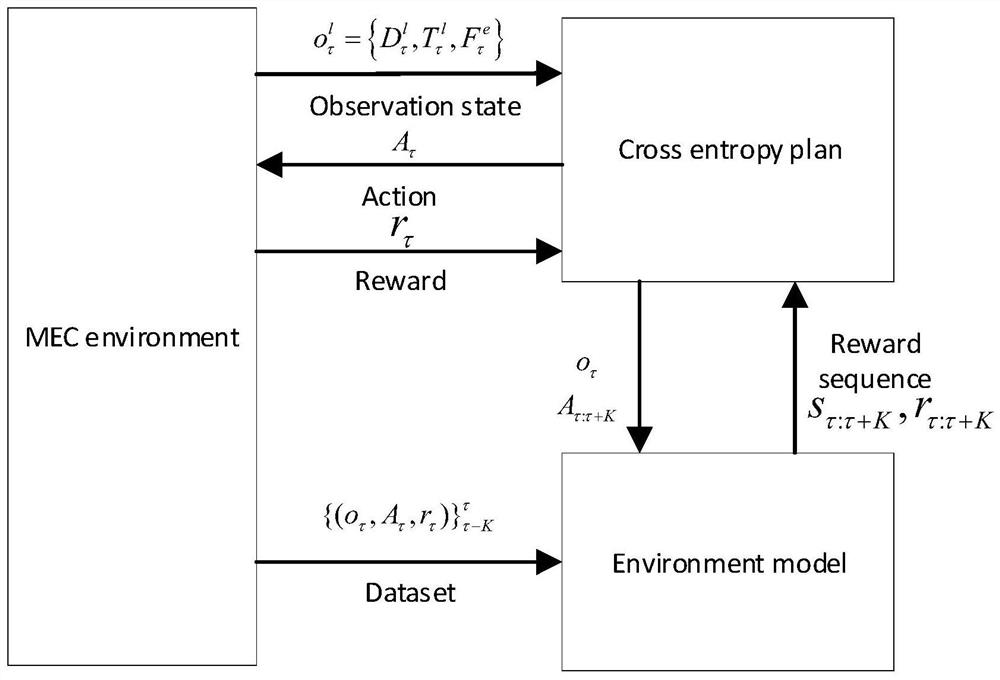

Status: Active | CN113641504A | Tags: Improve performance; Reduce energy consumption; Resource allocation; High level techniques; Algorithm; Edge node

The invention discloses an information interaction method for improving the edge computing performance of multi-agent reinforcement learning. The method comprises the following steps:
- constructing an edge computing communication model based on a partially observable Markov decision process;
- establishing a shared memory space on each edge node for memory read, memory fill and memory write operations;
- setting an objective function according to the goals of minimizing user cost and maximizing edge-node utility;
- setting the time slot length and time frame length, and initializing the time slot and time frame;
- obtaining the resource allocation policy of each edge node and performing the memory fill operation;
- having users perform memory read and write operations;
- obtaining each user's computing task, task data volume and computing capability at the same time, and obtaining the users' task offloading policy;
- optimizing the objective function with an actor-critic model;
- partitioning and processing the computing tasks.

The effectiveness of decisions made by the edge nodes and users can thus be maximized.

Owner:TIANJIN UNIV

Utility decomposition with deep corrections

One or more aspects of utility decomposition with deep corrections are described herein. An entity may be detected within an environment through which an autonomous vehicle is travelling. The entity may be associated with a current velocity and a current position. The autonomous vehicle may be associated with a current position and a current velocity. Additionally, the autonomous vehicle may have a target position or desired destination. A Partially Observable Markov Decision Process (POMDP) model may be built based on the current velocities and current positions of different entities and the autonomous vehicle. Utility decomposition may be performed to break tasks or problems down into sub-tasks or sub-problems. A correction term may be generated using multi-fidelity modeling. A driving parameter may be implemented for a component of the autonomous vehicle based on the POMDP model and the correction term to operate the autonomous vehicle autonomously.

Owner:HONDA MOTOR CO LTD

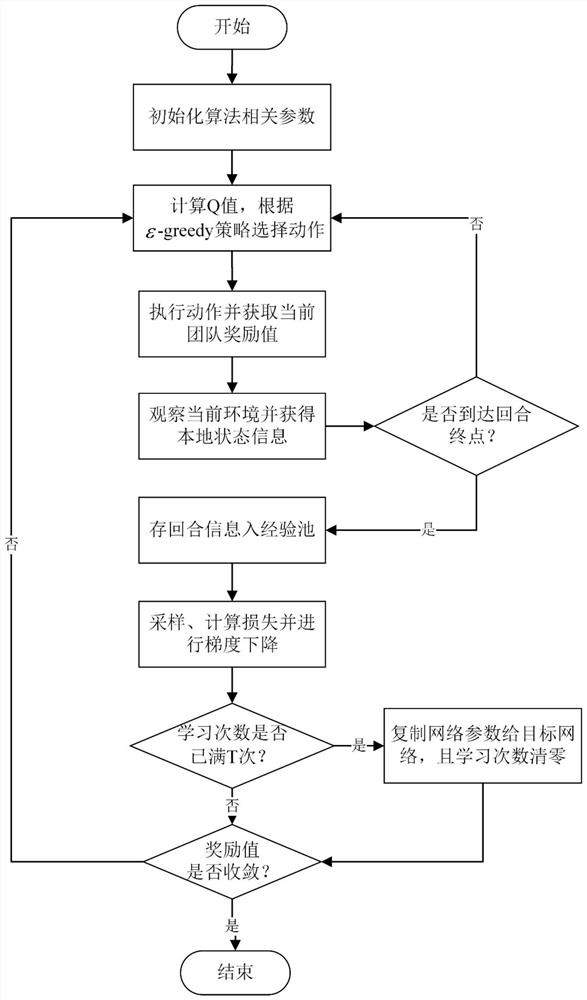

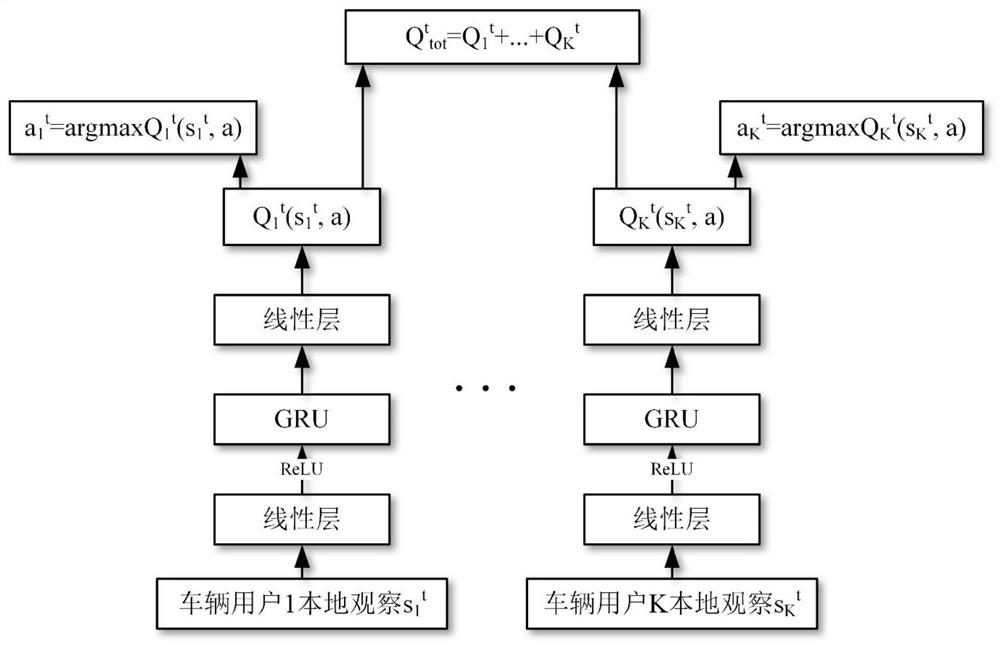

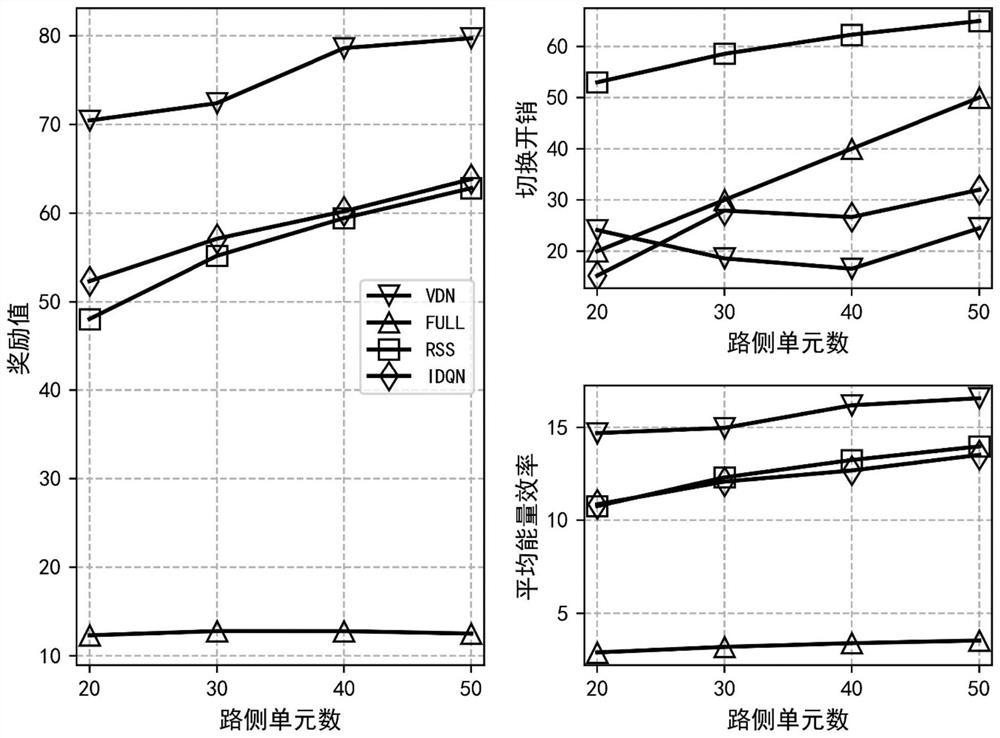

Heterogeneous Internet of Vehicles user association method based on multi-agent deep reinforcement learning

Status: Pending | CN114449482A | Tags: Save resources; Guaranteed continuity; Particular environment based services; Vehicle-to-vehicle communication; Engineering; Data mining

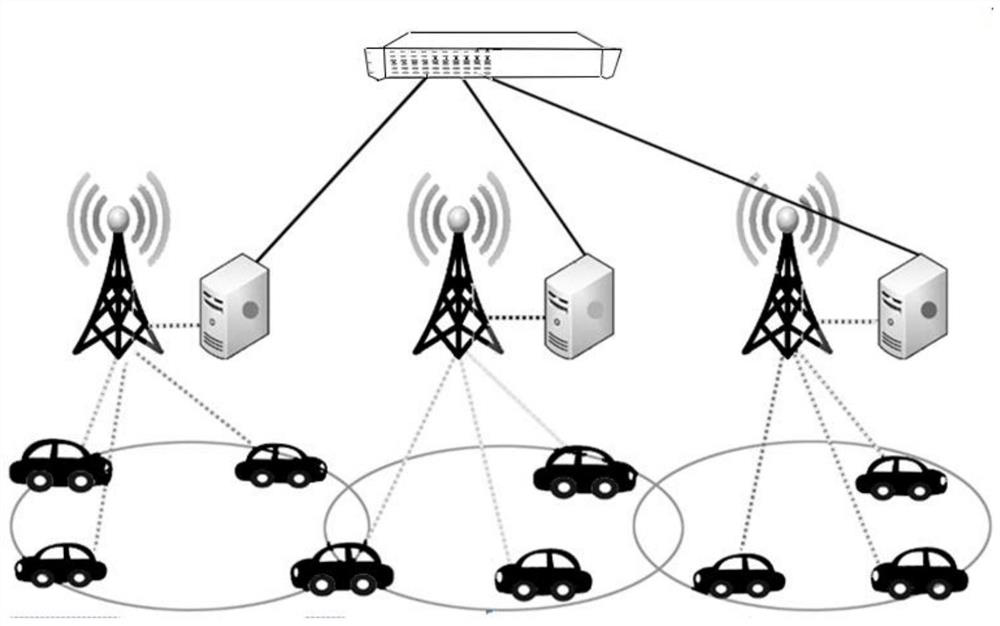

The invention discloses a heterogeneous Internet of Vehicles user association method based on multi-agent deep reinforcement learning. The method first models the problem as a partially observable Markov decision process and then applies the idea of decomposing a team value function. It builds a centralized-training, distributed-execution framework in which the team value function is connected to each user's value function by summation, so that the user value functions are trained implicitly. The method then:
- uses experience replay and a target network mechanism;
- explores and selects actions with an epsilon-greedy policy;
- stores historical information in a recurrent neural network;
- computes the loss with a Huber loss function and performs gradient descent.

Finally, it learns the association policy for heterogeneous Internet of Vehicles users. Compared with multi-agent independent deep Q-learning and other traditional algorithms, the method more effectively improves energy efficiency while reducing handover overhead in heterogeneous Internet of Vehicles environments.

Owner:NANJING UNIV OF SCI & TECH
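
The abstract combines three standard ingredients:
- a team value formed by summing per-user values (value decomposition);
- epsilon-greedy exploration;
- the Huber loss.

The following is a minimal sketch of those pieces in isolation. Plain lists stand in for the recurrent Q-networks and target network. Function names and numbers are illustrative assumptions, and terminal-state handling is left out.

```python
import random


def huber(error: float, delta: float = 1.0) -> float:
    """Huber loss: quadratic near zero, linear for large errors."""
    abs_err = abs(error)
    if abs_err <= delta:
        return 0.5 * error * error
    return delta * (abs_err - 0.5 * delta)


def epsilon_greedy(q_values, epsilon: float, rng: random.Random) -> int:
    """With probability epsilon explore uniformly, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def team_td_loss(user_q, actions, reward, next_user_q, gamma=0.99):
    """Huber TD loss on the summed team value Q_tot = sum_i Q_i(a_i).

    user_q[i]: user i's Q-values for the current step (online network).
    next_user_q[i]: user i's Q-values for the next step (target network).
    Because Q_tot is a sum, maximizing it means each user maximizes its own term.
    """
    q_tot = sum(q[a] for q, a in zip(user_q, actions))
    target = reward + gamma * sum(max(q) for q in next_user_q)
    return huber(target - q_tot)


rng = random.Random(0)
greedy = epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.0, rng=rng)  # always index 1
loss = team_td_loss([[1.0, 2.0], [0.5, 0.0]], [1, 0], 1.0,
                    [[1.0, 1.5], [0.2, 0.4]], gamma=0.5)
```

Because the team value is a plain sum, gradients of the single team loss flow into every user's Q-values. This is how the per-user functions can be trained "implicitly" from a shared reward.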

Multi-robot collaborative navigation and obstacle avoidance method

Status: Active | CN113821041A | Tags: Good local minima; Generalization strategy; Position/course control in two dimensions; Vehicles; Algorithm; Engineering

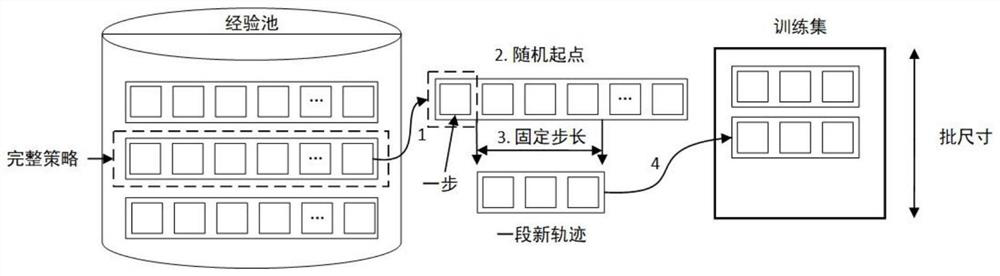

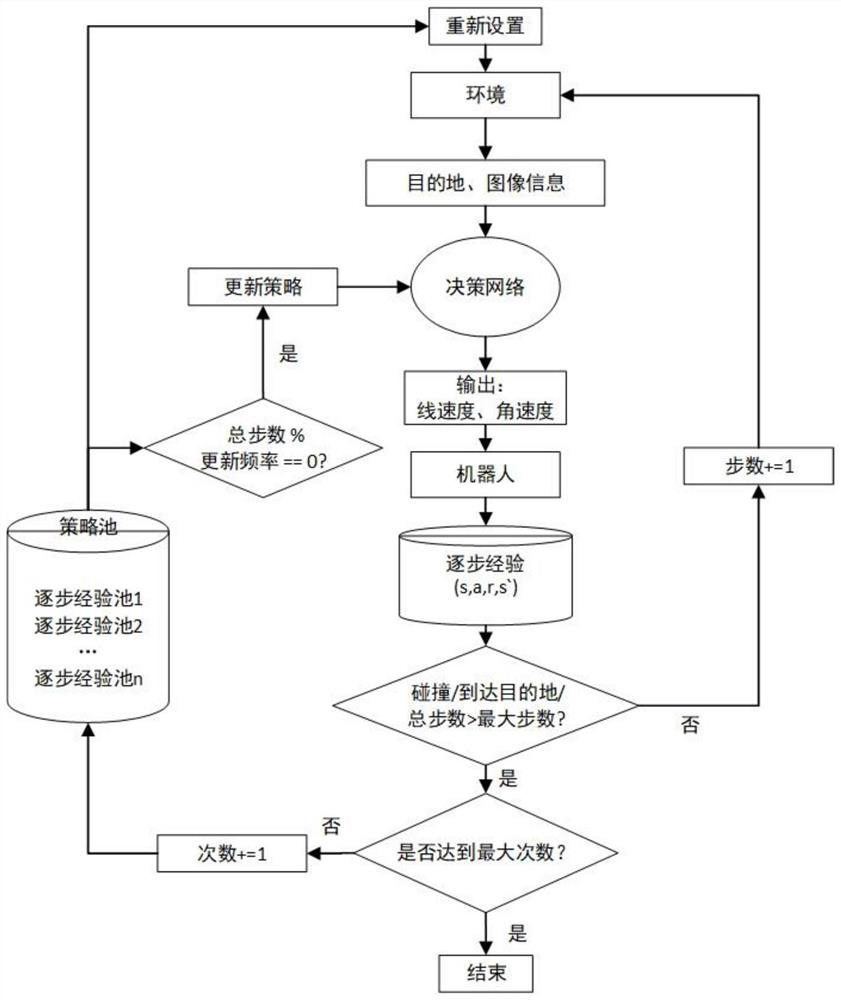

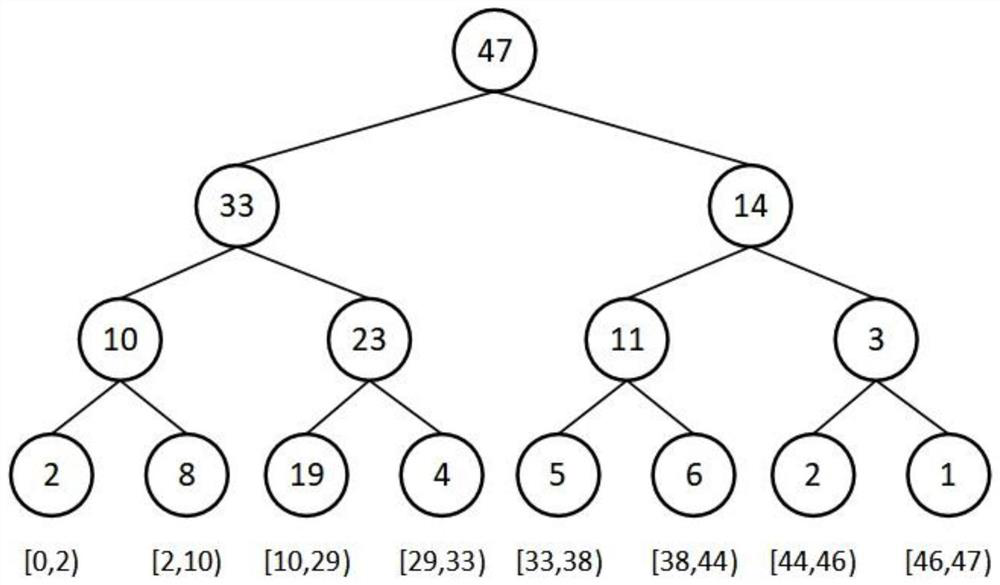

The invention discloses a multi-robot collaborative navigation and obstacle avoidance method. The method comprises the following steps:
- The decision process of a robot in an unknown environment is modeled as a partially observable Markov decision process.
- Based on the current robot's environment model, a deep deterministic policy gradient (DDPG) algorithm is introduced, and sampled image samples are fed into a convolutional neural network for feature extraction.
- DDPG is improved by introducing a long short-term memory (LSTM) network to give the network memory, and a frame-skipping mechanism makes the image data more accurate and stable.
- The experience replay mechanism is modified so that each stored experience sample is assigned a priority. The few important experiences are then used more often in learning, which improves learning efficiency.
- Finally, a multi-robot navigation and obstacle avoidance simulation system is built.

The method uses curriculum learning so that the robot learns navigation and obstacle avoidance from easy to difficult tasks, which accelerates training.

Owner:SUN YAT SEN UNIV
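
In the modified replay pool, each stored experience is assigned a priority. A common way to do this is proportional prioritization: sample i is drawn with probability p_i / Σ_k p_k, where p_i = (|TD error| + eps)^alpha. The following is a minimal sketch of that scheme. The abstract does not state how the patent's priorities are computed, so the class name, `alpha`, `eps` and the FIFO eviction rule are assumptions.

```python
import random


class PrioritizedReplay:
    """Proportional prioritized replay: P(i) = p_i / sum_k p_k, p_i = (|td_i| + eps)^alpha.

    List-based with O(n) sampling; larger buffers usually use a sum-tree.
    """

    def __init__(self, capacity: int, alpha: float = 0.6, eps: float = 1e-6):
        self.capacity = capacity
        self.alpha = alpha  # 0 = uniform sampling, 1 = fully proportional
        self.eps = eps      # keeps zero-error samples drawable
        self.items, self.priorities = [], []

    def _priority(self, td_error: float) -> float:
        return (abs(td_error) + self.eps) ** self.alpha

    def add(self, transition, td_error: float) -> None:
        if len(self.items) >= self.capacity:  # evict the oldest sample
            self.items.pop(0)
            self.priorities.pop(0)
        self.items.append(transition)
        self.priorities.append(self._priority(td_error))

    def probabilities(self):
        total = sum(self.priorities)
        return [p / total for p in self.priorities]

    def sample(self, k: int, rng: random.Random):
        idx = rng.choices(range(len(self.items)), weights=self.priorities, k=k)
        return [self.items[i] for i in idx], idx

    def update(self, indices, td_errors) -> None:
        """Refresh priorities after the sampled transitions are re-evaluated."""
        for i, err in zip(indices, td_errors):
            self.priorities[i] = self._priority(err)


buf = PrioritizedReplay(capacity=3, alpha=1.0, eps=0.0)
for name, err in [("a", 1.0), ("b", 3.0), ("c", 0.0), ("d", 4.0)]:
    buf.add(name, err)  # "a" is evicted when "d" arrives
batch, idx = buf.sample(100, random.Random(0))
```

With `eps=0.0`, as in the usage lines, the zero-error sample "c" is never drawn. A small positive `eps` avoids this, and an `alpha` below 1 softens the skew toward high-error samples.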

Computation offloading and resource management method in edge computing based on deep reinforcement learning

Status: Pending | CN113821346A | Tags: Maximize self-interest; Address different interest pursuits; Resource allocation; Program loading/initiating; Edge node; Engineering

The invention discloses a computation offloading and resource management method for edge computing based on deep reinforcement learning. It comprises the following steps:
- constructing an edge computing communication model based on a partially observable Markov decision process, the model comprising M + N agents, of which M are edge nodes and N are users;
- setting an objective function according to the goals of minimizing user cost and maximizing edge-node utility;
- setting the time slot length and time frame length, and initializing the time slot and time frame;
- having the edge nodes and the users each use the partially observable Markov decision process to obtain, respectively, a resource allocation policy and a task offloading policy;
- optimizing the objective function with an actor-critic model according to the task offloading and resource allocation policies;
- partitioning and processing the computing tasks according to the optimized objective function.

The invention reconciles the differing interests of edge devices and users and safeguards each party's interests to the greatest extent possible.

Owner:TIANJIN UNIV

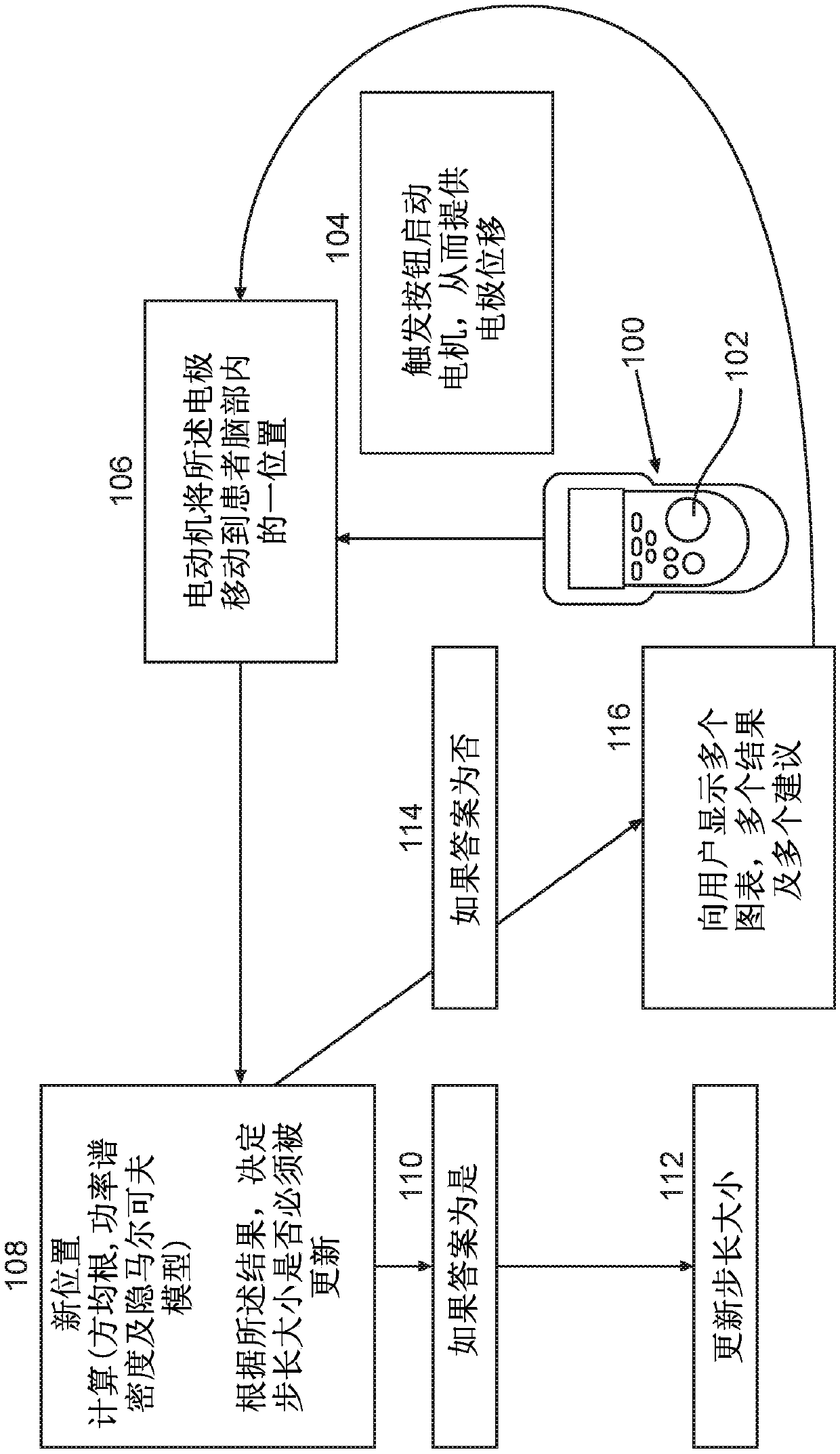

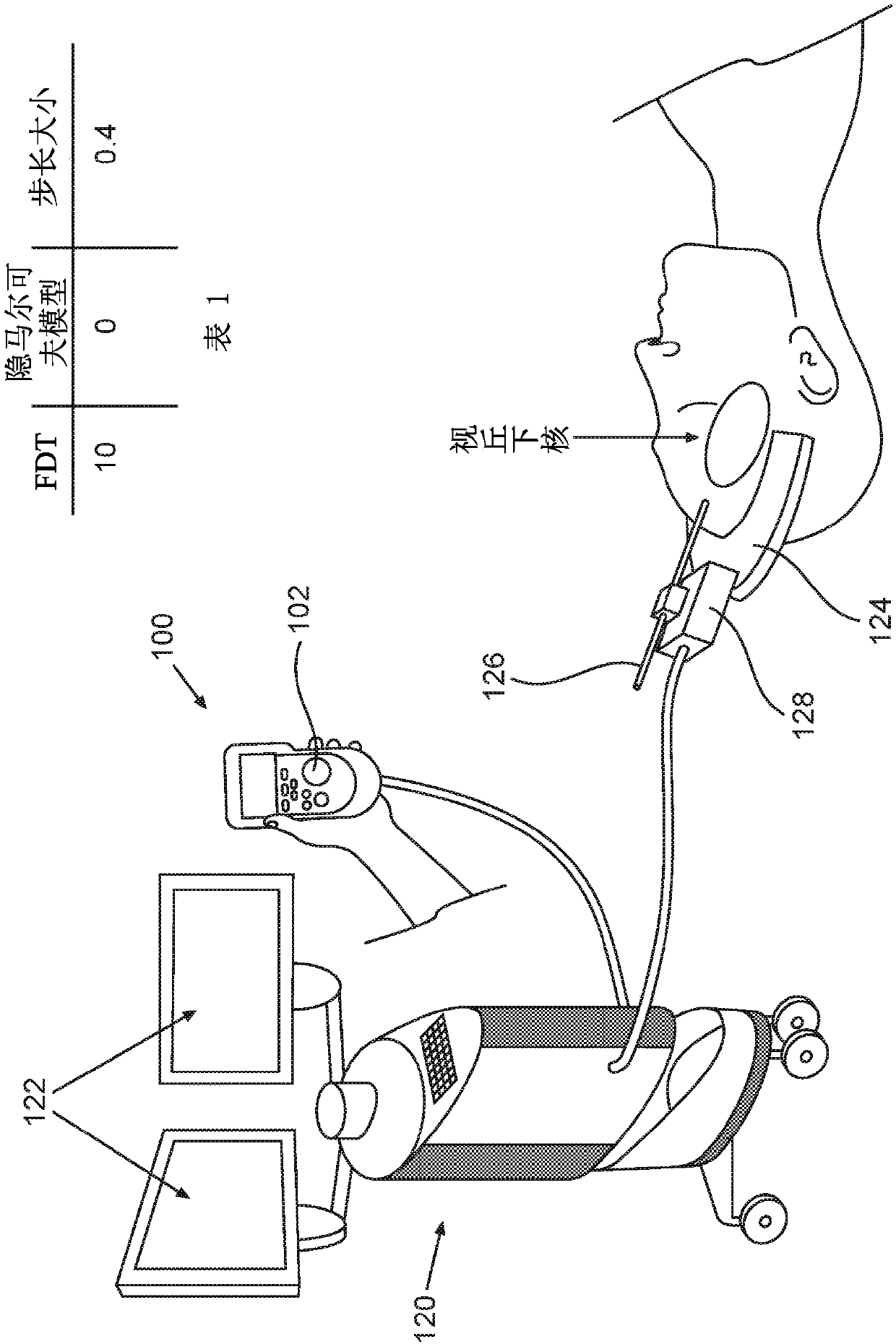

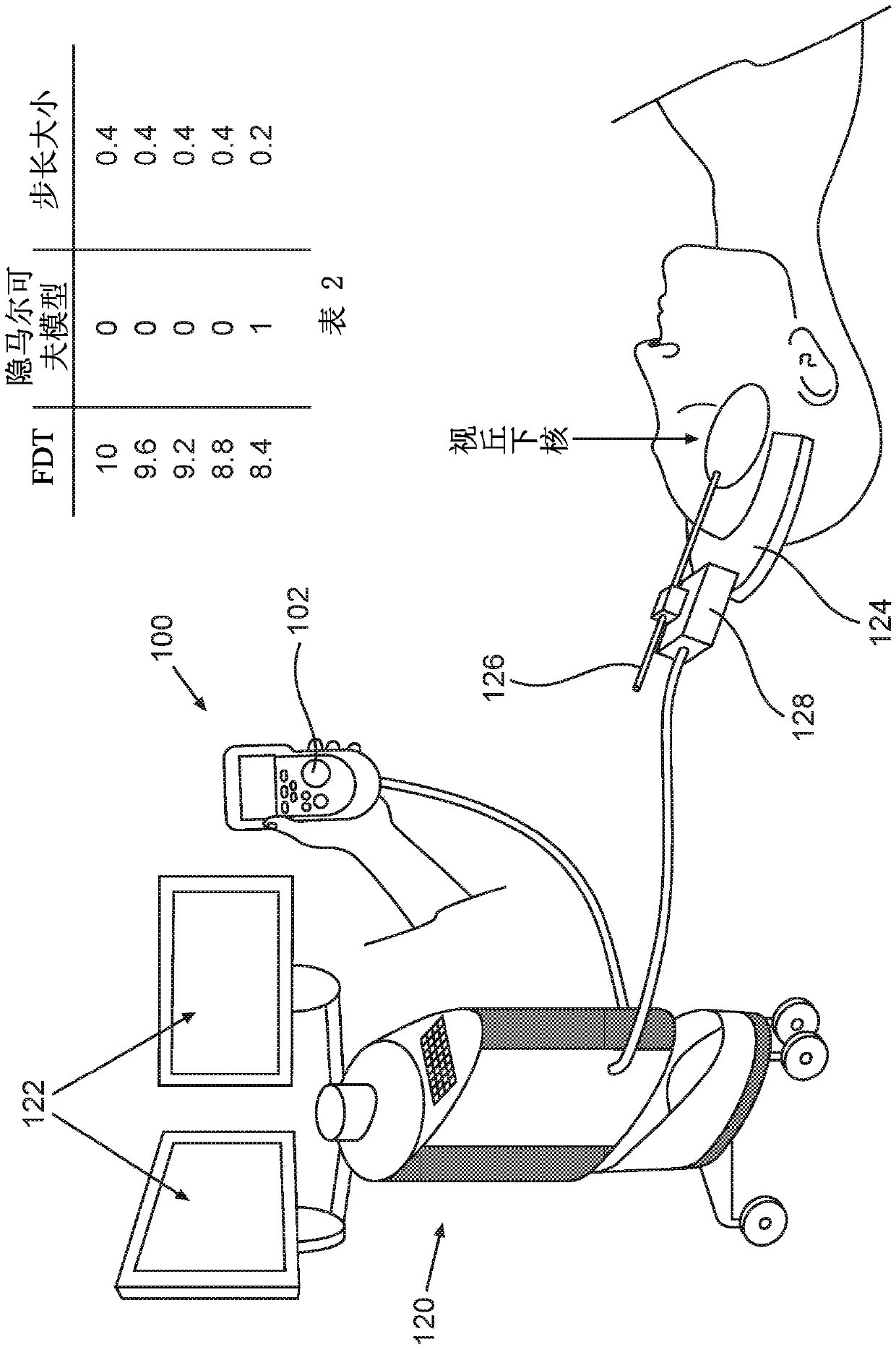

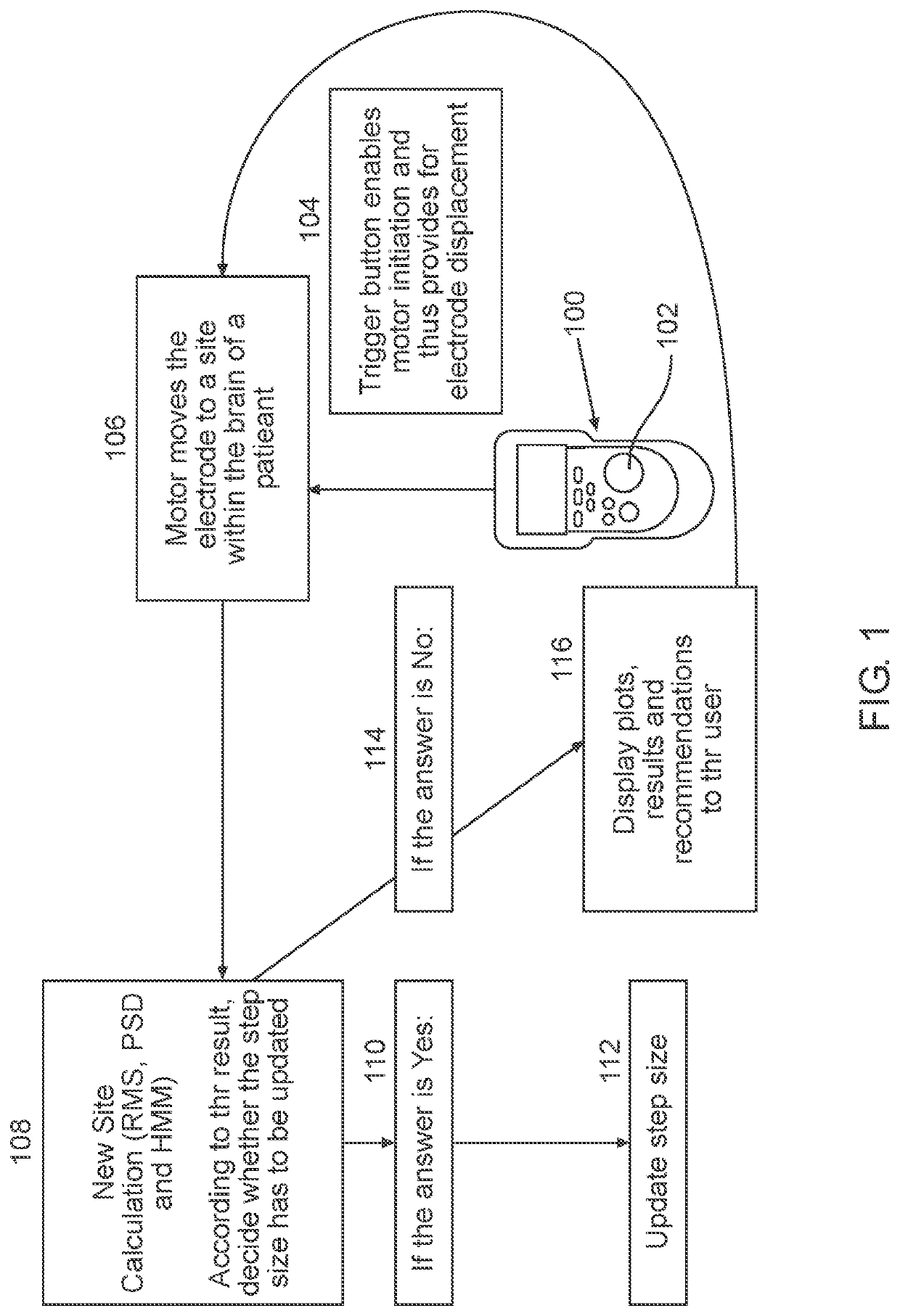

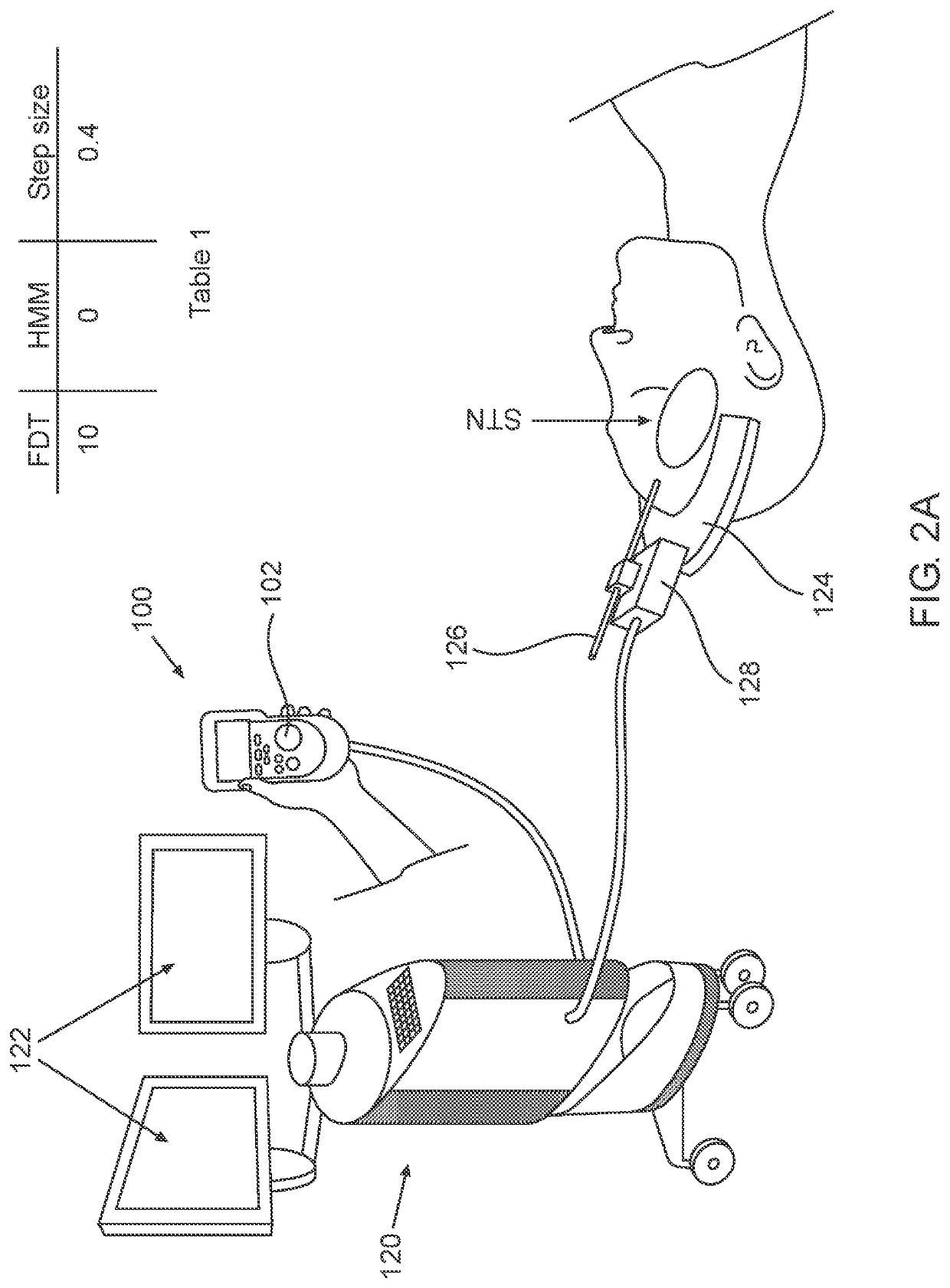

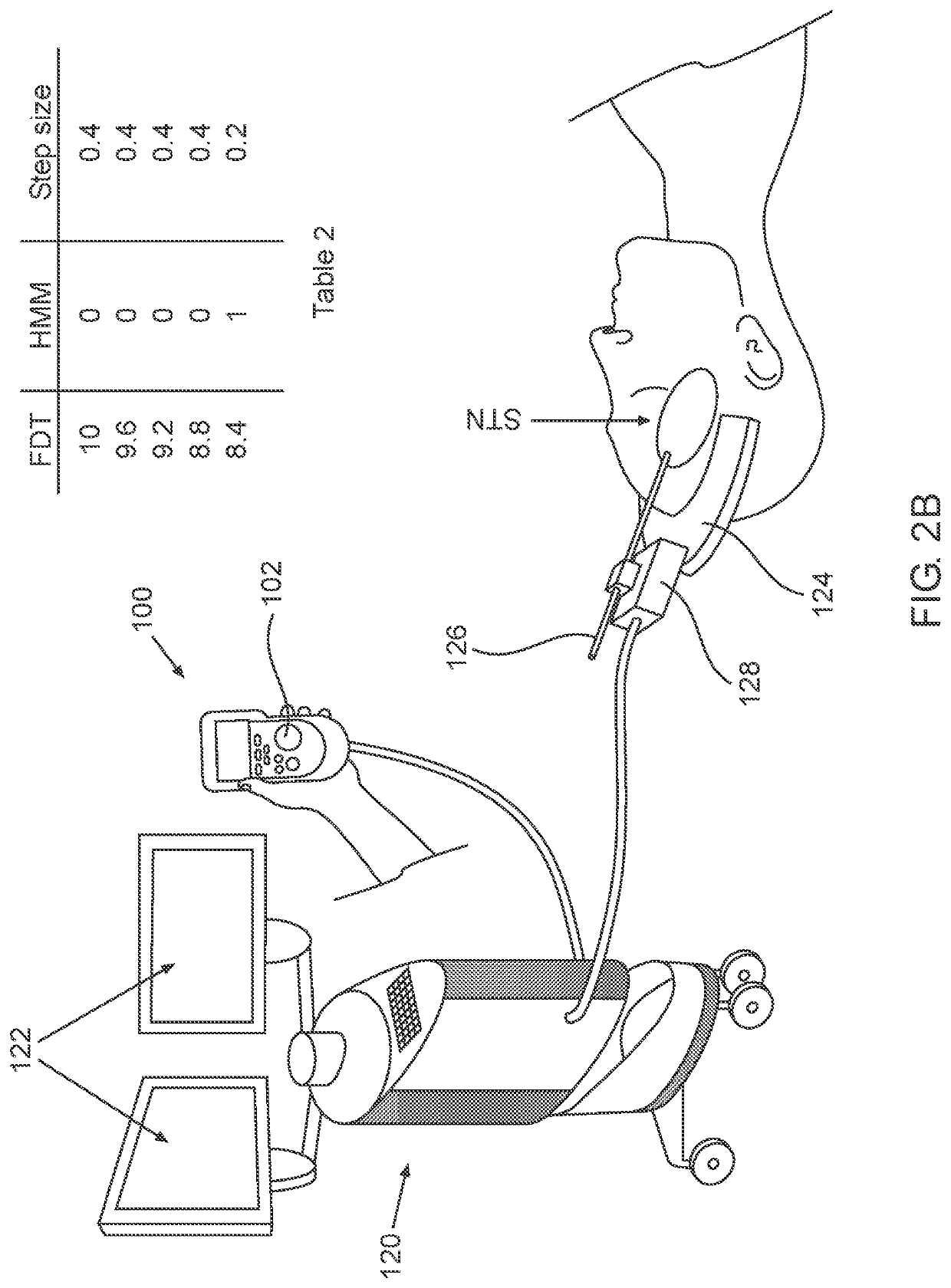

Automatic brain probe guidance system

Status: Active | CN107847138A | Tags: Input/output for user-computer interaction; Head electrodes; Guidance system; Hidden Markov model

Owner:阿尔法欧米伽医疗科技公司 (Alpha Omega Medical Technology Co.)

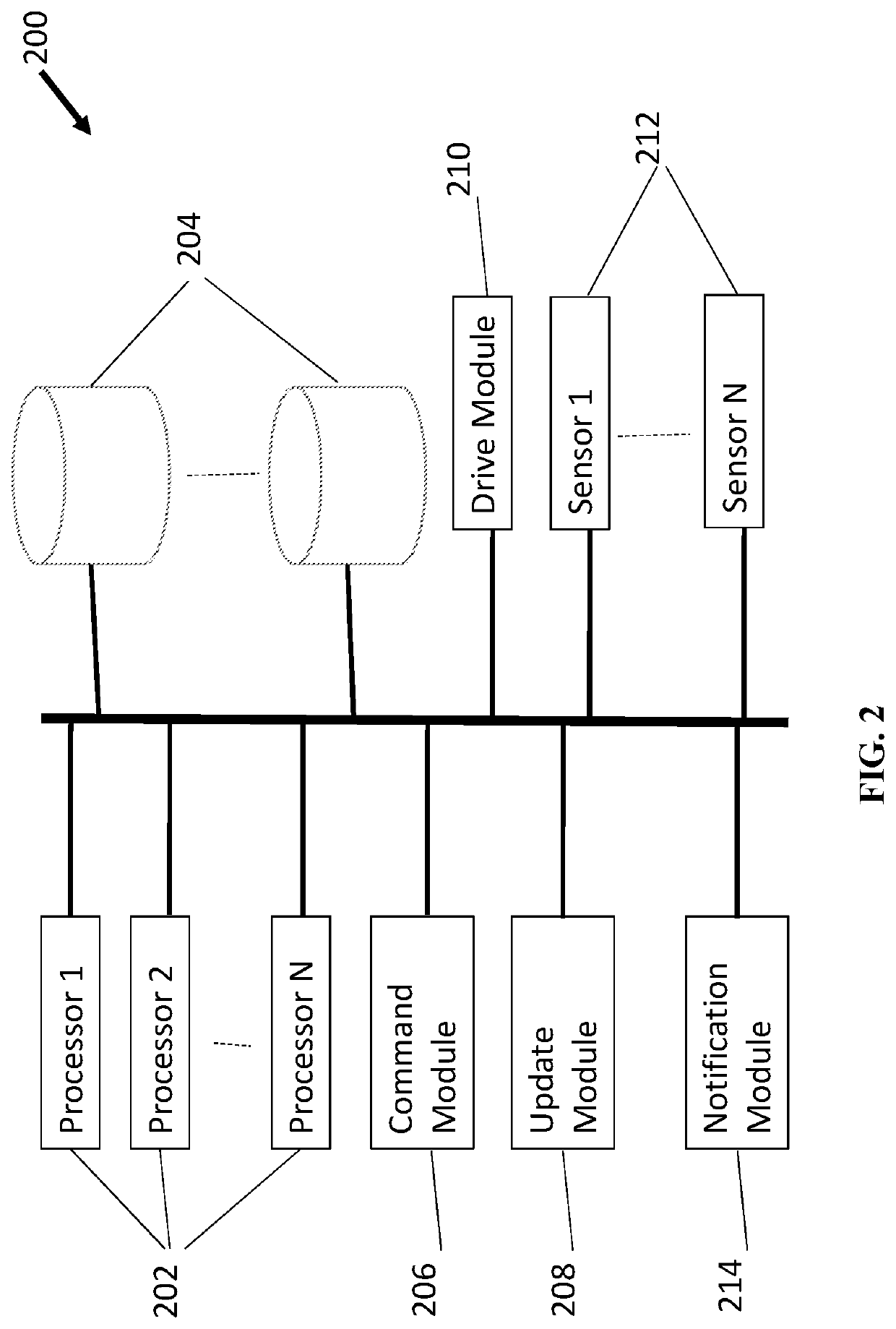

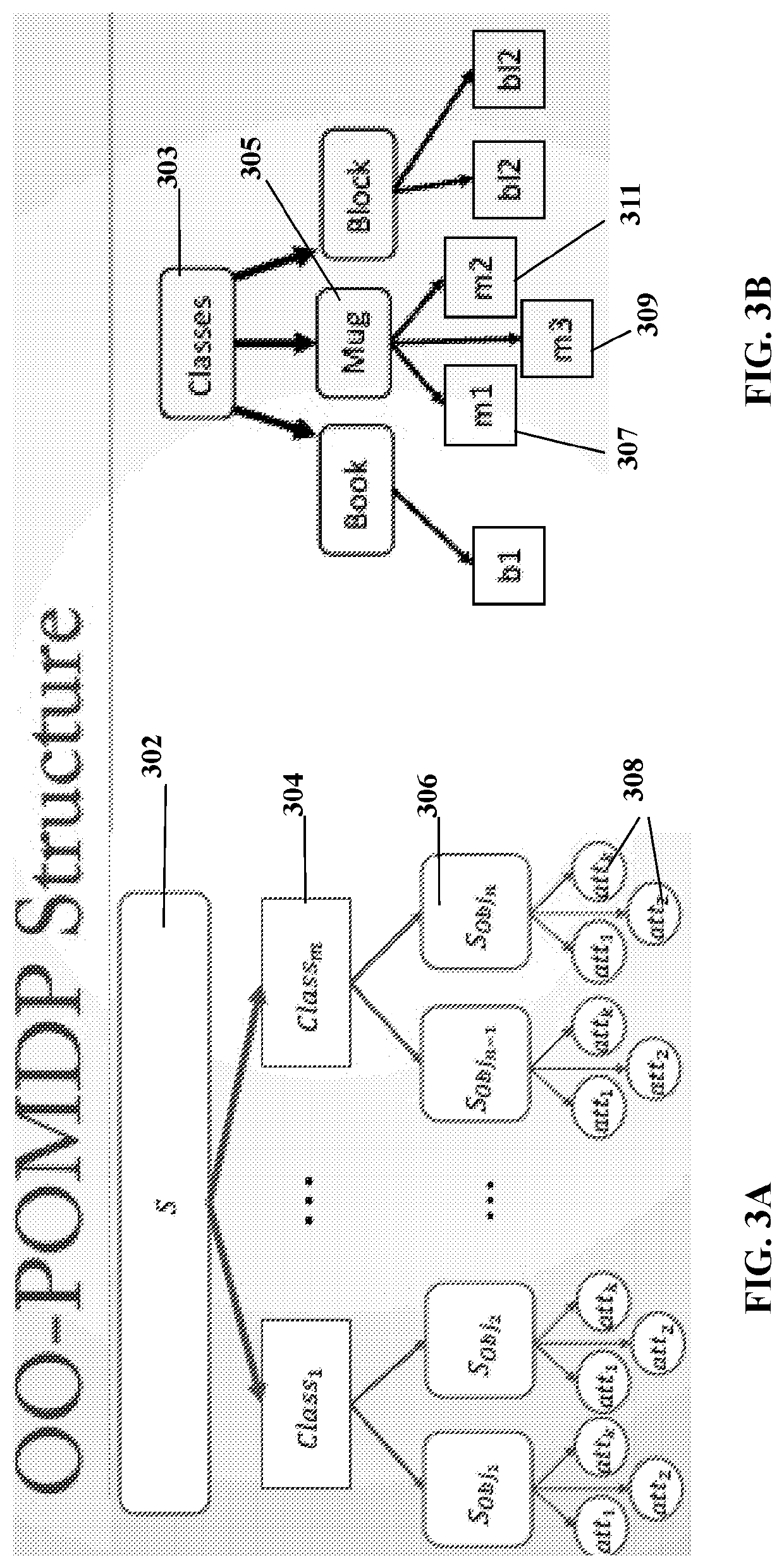

Systems and methods for operating robots using object-oriented partially observable Markov decision processes

Status: Pending | US20210347046A1 | Tags: Well formed; Programme-controlled manipulator; Probabilistic networks; Object based; Engineering

A system and method of operating a mobile robot to perform tasks includes representing a task in an Object-Oriented Partially Observable Markov Decision Process model having at least one belief pertaining to a state and at least one observation space within an environment, wherein the state is represented in terms of classes and objects and each object has at least one attribute and a semantic label. The method further includes receiving a language command identifying a target object and a location corresponding to the target object, updating the belief associated with the target object based on the language command, driving the mobile robot to the observation space identified in the updated belief, searching the updated observation space for each instance of the target object, and providing notification upon completing the task. In an embodiment, the task is a multi-object search task.

Owner:BROWN UNIVERSITY +1
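
A common simplification of the object-oriented factorization keeps an independent belief over each object's location. Under that assumption, a language command only reshapes the named object's belief, and each search is a Bayes update of that belief. The following is a minimal sketch of the idea, not the patented method:
- the location names and the boost factor applied to the location mentioned in the command are hypothetical;
- the detector's hit and false-alarm rates are hypothetical;
- the helper names are illustrative.

```python
def language_prior(locations, mentioned, boost=5.0):
    """Belief over one object's location, weighted toward the place named in the command."""
    weights = {loc: (boost if loc == mentioned else 1.0) for loc in locations}
    total = sum(weights.values())
    return {loc: w / total for loc, w in weights.items()}


def search_update(belief, searched, detected, p_hit=0.9, p_false=0.05):
    """Bayes update of one object's belief after searching a single location."""
    posterior = {}
    for loc, p in belief.items():
        if loc == searched:
            likelihood = p_hit if detected else 1.0 - p_hit
        else:
            likelihood = p_false if detected else 1.0 - p_false
        posterior[loc] = p * likelihood
    total = sum(posterior.values())
    return {loc: p / total for loc, p in posterior.items()}


def next_location(belief):
    return max(belief, key=belief.get)


LOCATIONS = ["kitchen", "office", "hallway"]
# One belief per target object: the object-oriented factorization.
beliefs = {
    "mug": language_prior(LOCATIONS, mentioned="kitchen"),  # "find the mug in the kitchen"
    "book": language_prior(LOCATIONS, mentioned=None),      # no hint: uniform
}
first = next_location(beliefs["mug"])  # search the kitchen first
beliefs["mug"] = search_update(beliefs["mug"], "kitchen", detected=False)
second = next_location(beliefs["mug"])
```

A failed search in the kitchen shifts the mug's belief toward the other locations. The book's belief is untouched because the factorization treats objects independently.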

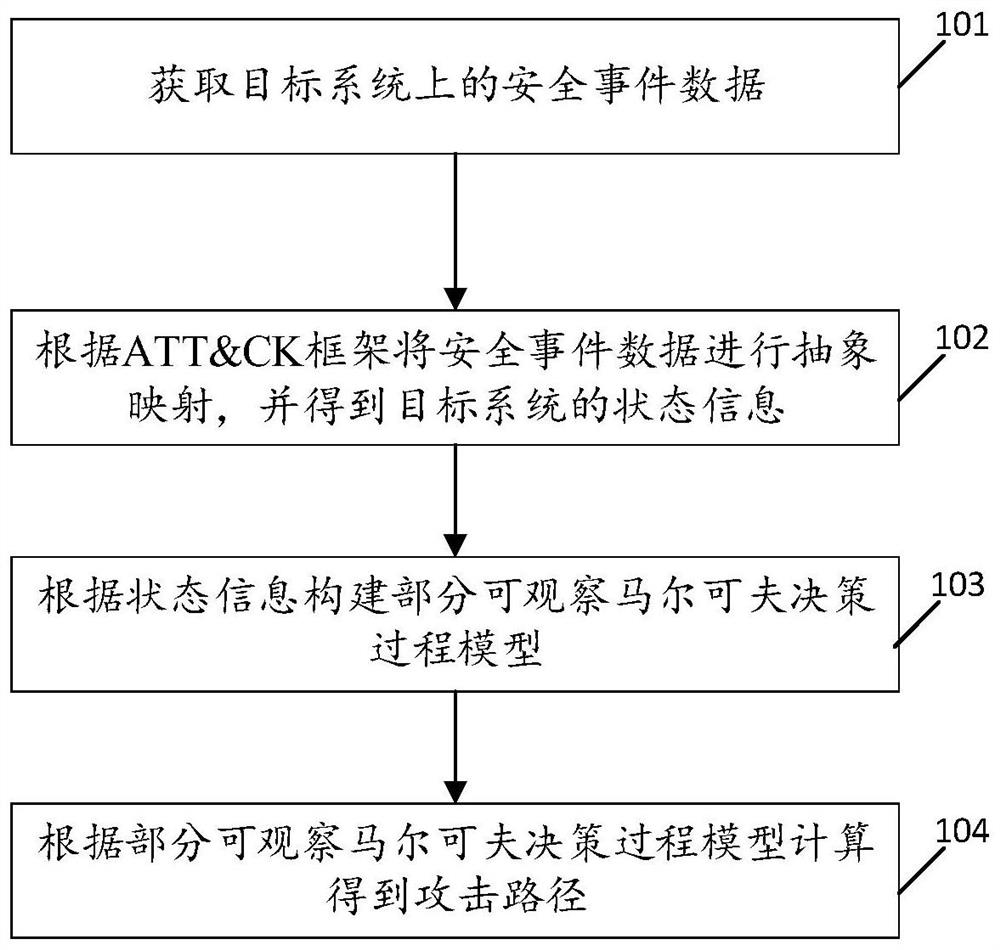

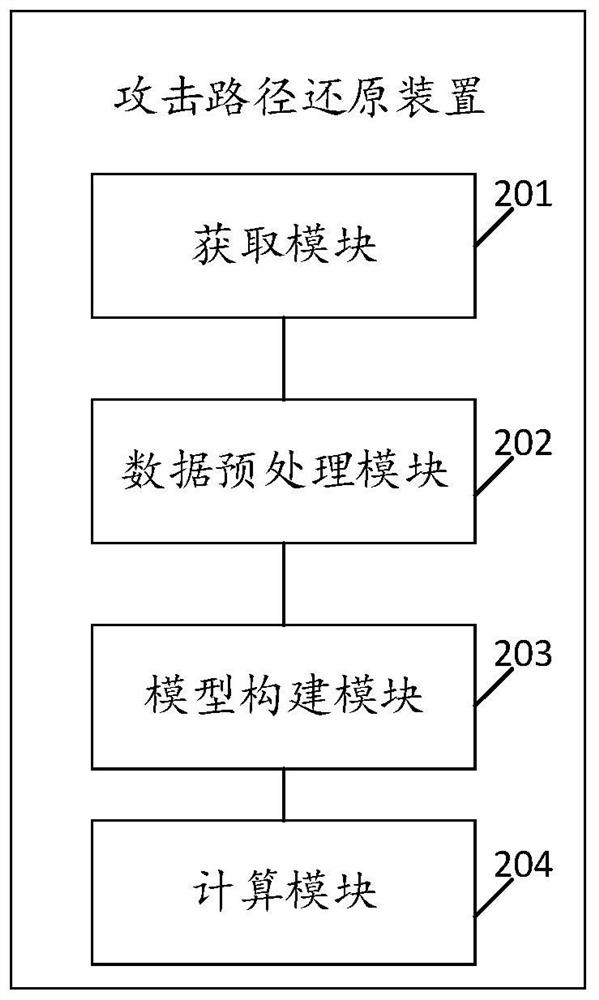

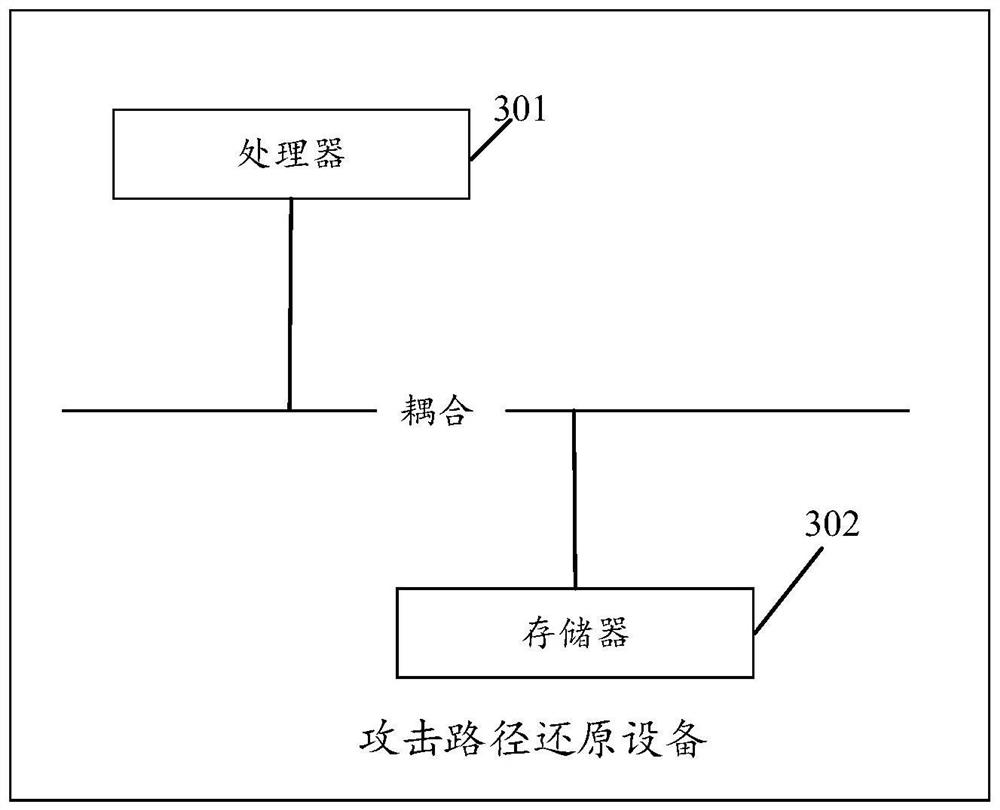

Attack path restoration method, device and apparatus and storage medium

Status: Active | CN112422573A | Tags: Optimize the efficiency of reduction analysis; Excellent generalization adaptability; Mathematical models; Transmission; Algorithm; Attack

The invention provides an attack path restoration method, device, apparatus and storage medium. The method comprises the following steps:
- obtaining security event data from a target system;
- abstractly mapping the security event data onto the ATT&CK framework to obtain state information of the target system;
- constructing a partially observable Markov decision process model from the state information;
- computing an attack path from the model.

The method offers better generalization adaptability and analysis efficiency.

Owner:BEIJING TOPSEC NETWORK SECURITY TECH +2

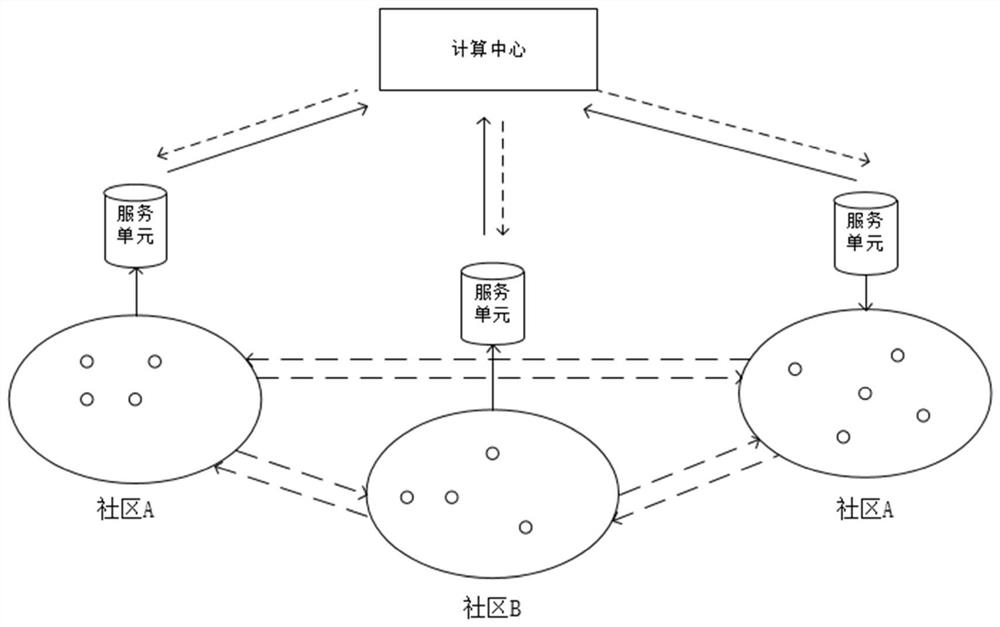

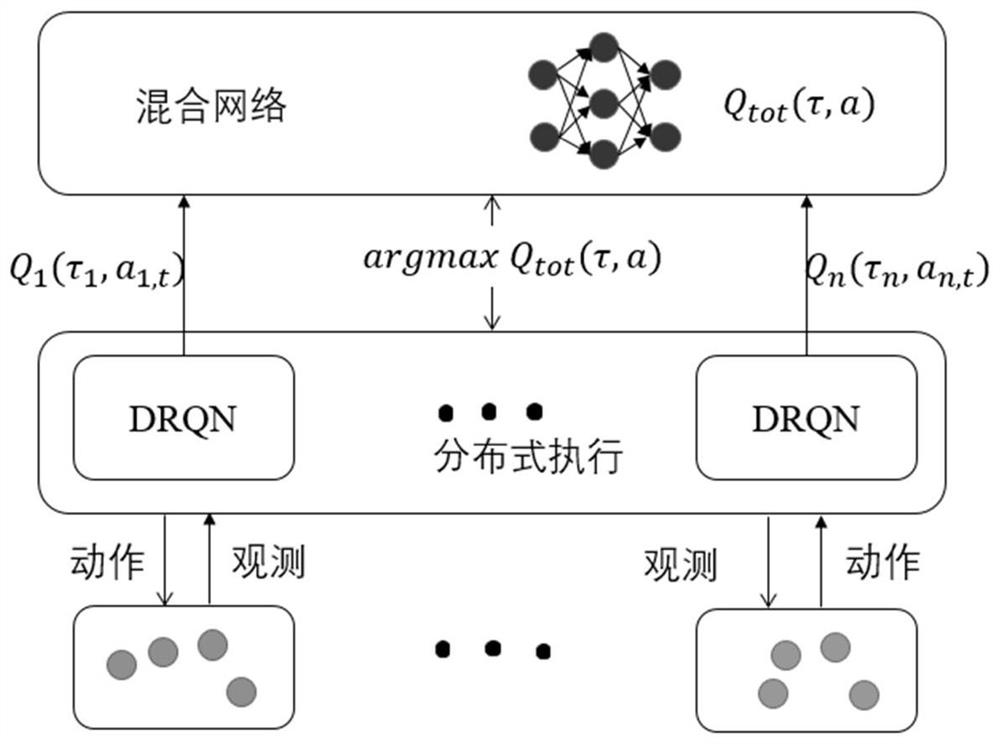

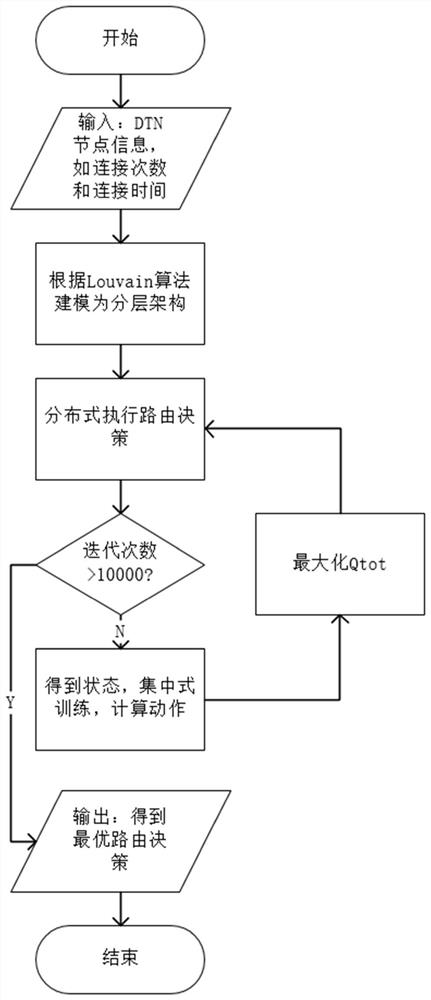

Delay tolerant network routing algorithm based on multi-agent reinforcement learning

Status: Inactive | CN112867083A | Tags: Easy to capture; Improve delivery rate; Network topologies; Data switching networks; Algorithm; Engineering

The invention discloses a delay-tolerant network (DTN) routing algorithm based on multi-agent reinforcement learning, comprising the following steps:
1. The Louvain clustering algorithm is applied to the DTN nodes, and a hierarchical architecture combining centralized and distributed components is provided.
2. The DTN node's next-hop selection problem is modeled as a distributed partially observable Markov decision process (De-POMDP) model that incorporates positive social characteristics.

Compared with existing social-attribute-based DTN routing schemes, the hierarchical architecture makes it easier to capture social information from edge devices. Routing decisions issued by the computing center are executed in a distributed manner. The routing algorithm is trained centrally at the computing center from the states reported by the service units. Routing and forwarding in the DTN can thus make effective use of social characteristics, increasing the delivery rate and reducing the average delay.

Owner:BEIJING UNIV OF POSTS & TELECOMM +1

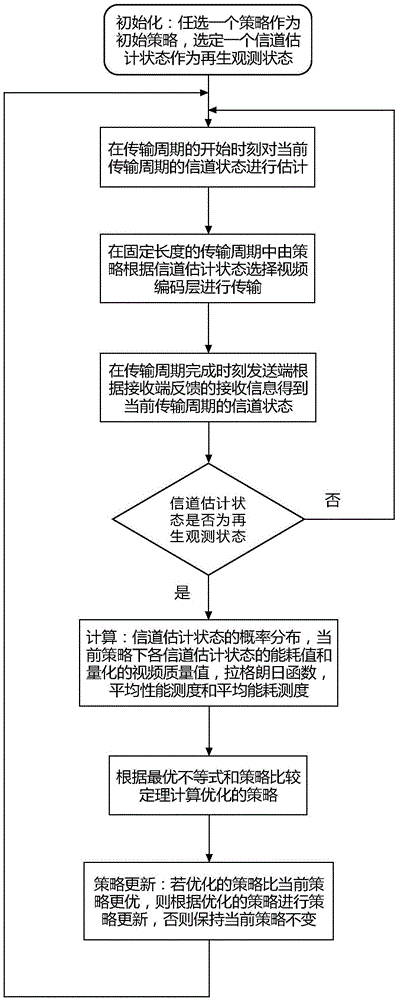

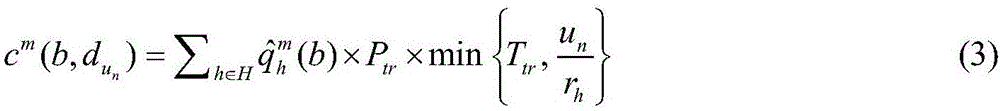

Energy-efficient wireless transmission method for scalable video coding real-time streaming media

Status: Active | CN106850643A | Tags: Improved transmission energy efficiency; Effective performance analysis; Digital video signal modification; Transmission; Wireless transmission; Adaptive optimization

The invention discloses an energy-efficient wireless transmission method for real-time streaming media using scalable video coding. The method comprises the following steps:
- selectively transmitting the stream's scalable coding layers based on channel state estimates that contain random errors;
- performing performance analysis and policy optimization based on partially observable Markov decision processes;
- adaptively optimizing the coding-layer transmission control policy with an online policy iteration algorithm.

Selective transmission of video coding layers adaptively regulates traffic and optimizes transmission energy efficiency. This guarantees the quality of service (QoS) of the real-time video stream while effectively increasing transmission energy efficiency.

Owner:HEFEI UNIV OF TECH

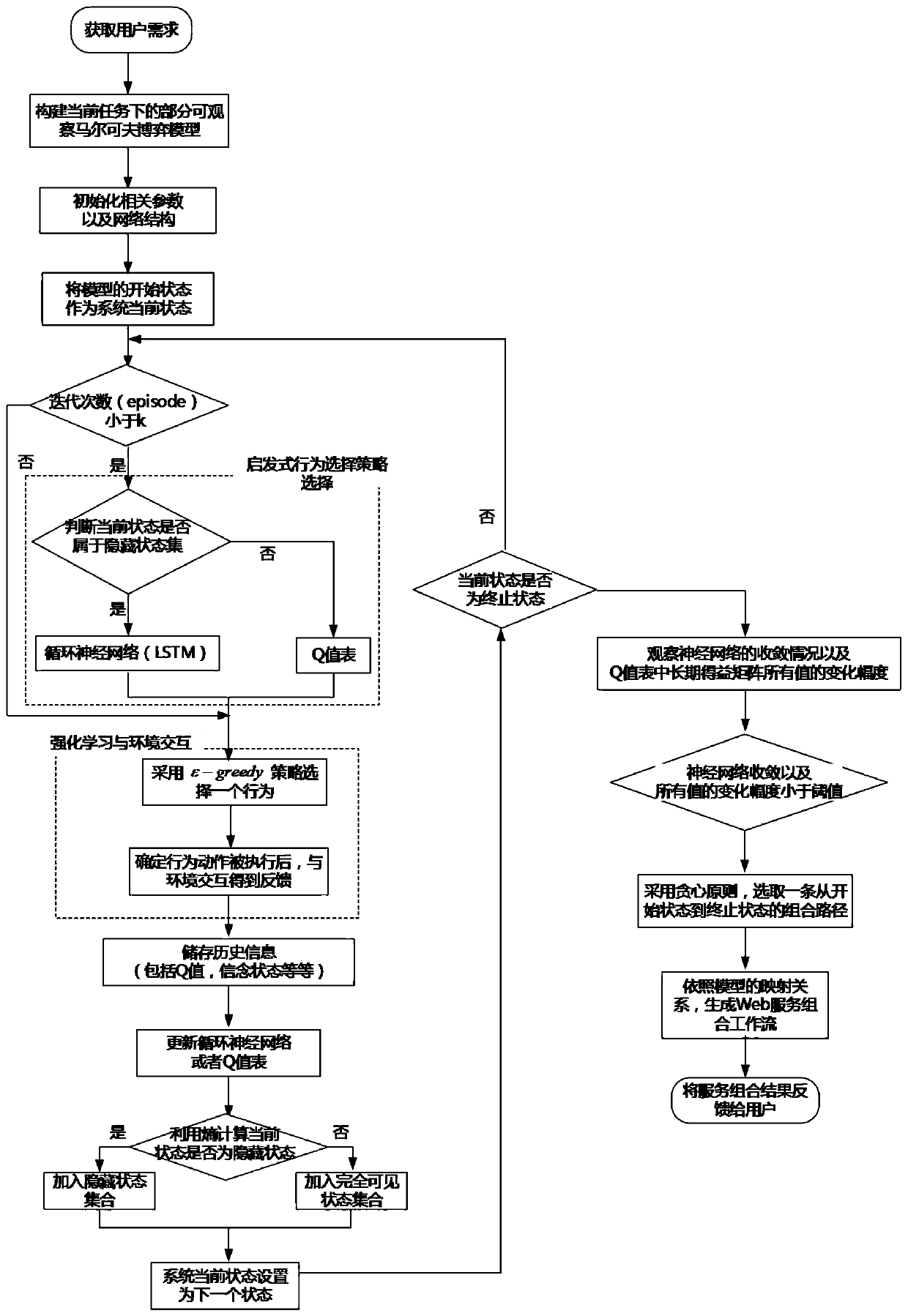

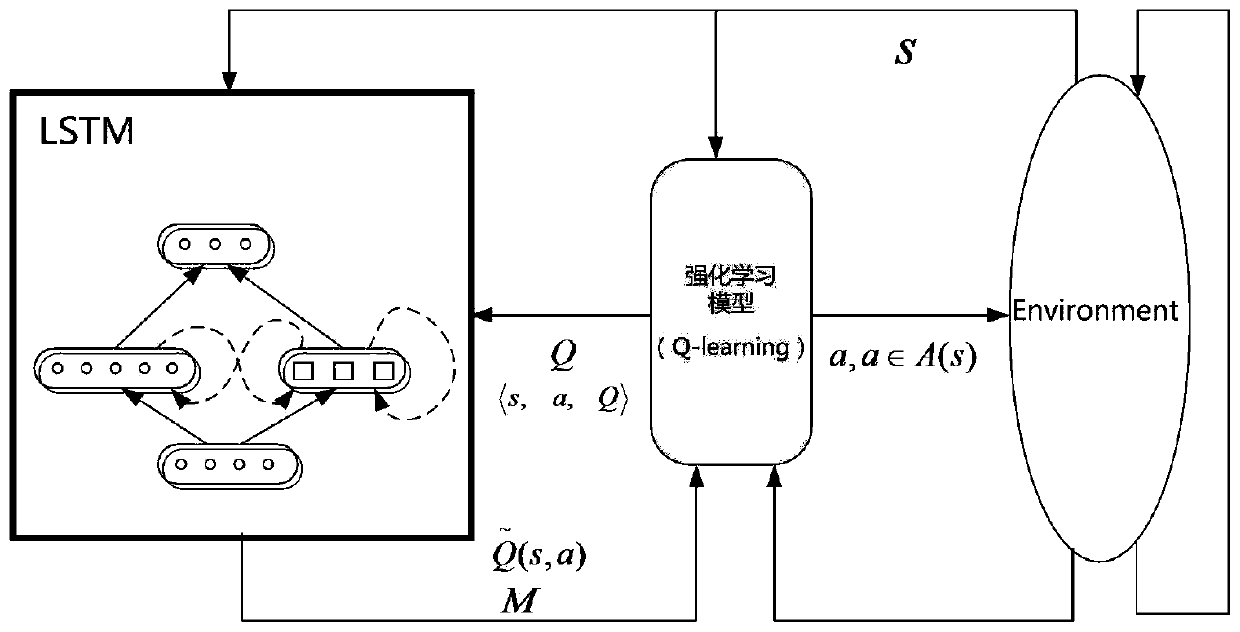

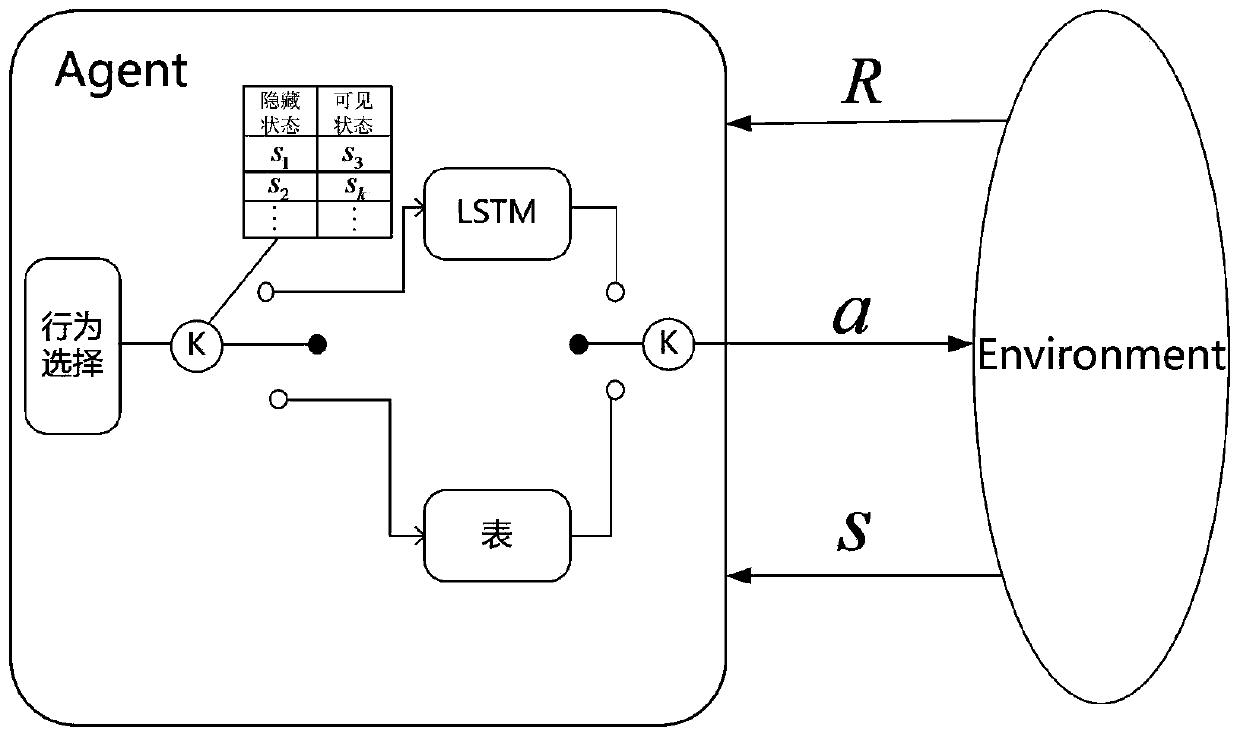

A Web Service Composition Method Based on Deep Reinforcement Learning

Status: Active | CN107241213B | Tags: Improve quality requirements; Improve accuracy; Data switching networks; Neural learning methods; Service composition; Heuristic

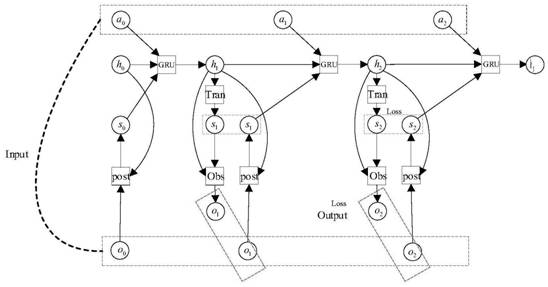

The invention discloses a web service composition method based on deep reinforcement learning. It addresses the long running time, poor flexibility and unsatisfactory composition results of traditional service composition methods in large-scale service scenarios, and applies deep reinforcement learning and heuristic ideas to the service composition problem. In addition, considering the partial observability of real environments, the invention transforms the service composition process into a partially observable Markov decision process (POMDP). It uses a recurrent neural network to solve the POMDP, so the method remains efficient in the face of the "curse of dimensionality". The method effectively improves solution speed and adapts independently to dynamic service composition environments while ensuring the quality of the composition scheme. It improves the efficiency, self-adaptability and flexibility of service composition in large-scale dynamic scenarios.

Owner:SOUTHEAST UNIV

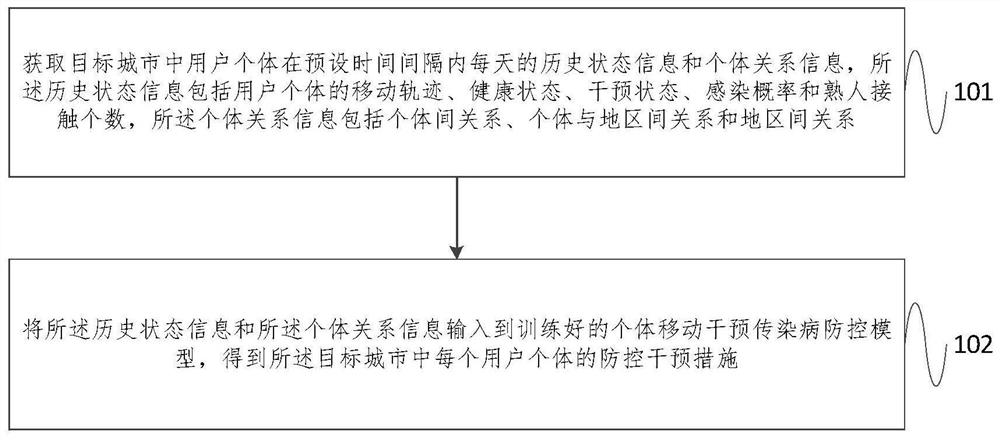

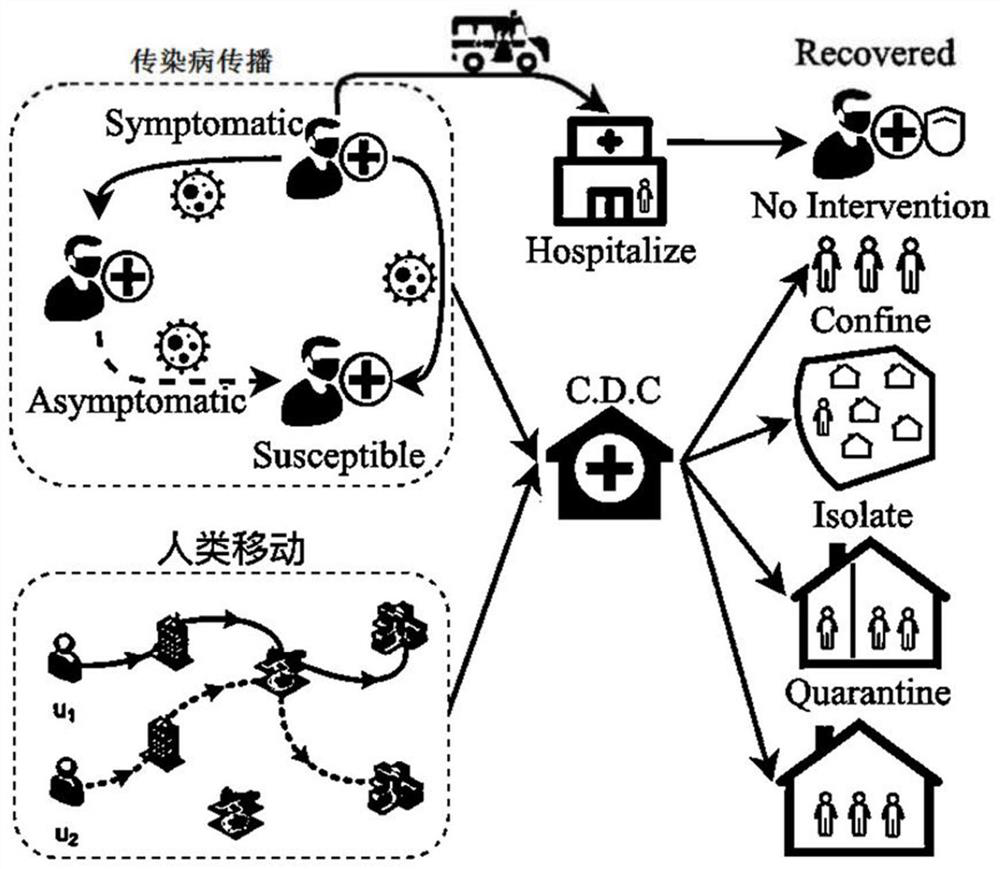

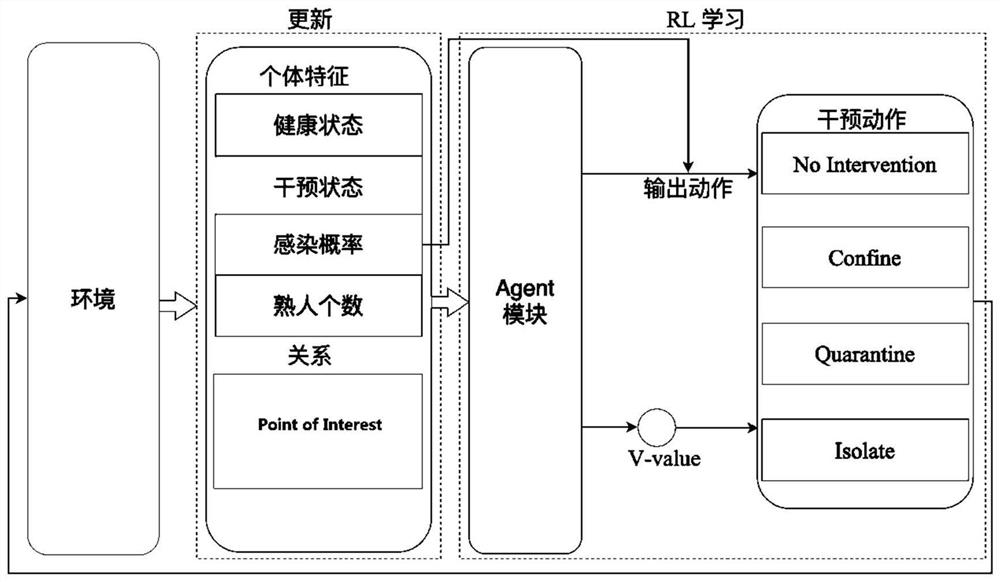

Infectious disease prevention and control method and system based on individual movement intervention

Pending | CN113889282A | Reduce the number of infections; Medical simulation; Mathematical models; Physical medicine and rehabilitation; Physical therapy

The invention provides a method and system for infectious disease prevention and control through individual movement intervention. The method comprises the following steps: acquiring daily historical state information and individual relationship information for individual users in a target city within a preset time interval; and inputting the historical state information and the individual relationship information into a trained individual movement intervention model for infectious disease prevention and control, to obtain prevention and control intervention measures for each individual user in the target city. The trained model is obtained by training a graph neural network, a long short-term memory (LSTM) network, and an agent on sample individual state information and sample individual relationship information. The agent is constructed based on a partially observable Markov decision process. The sample individual state information includes health state information on hidden infected persons who become overt infected persons. The invention aims to reduce the number of infections as far as possible while keeping travel interventions low.

Owner:TSINGHUA UNIV

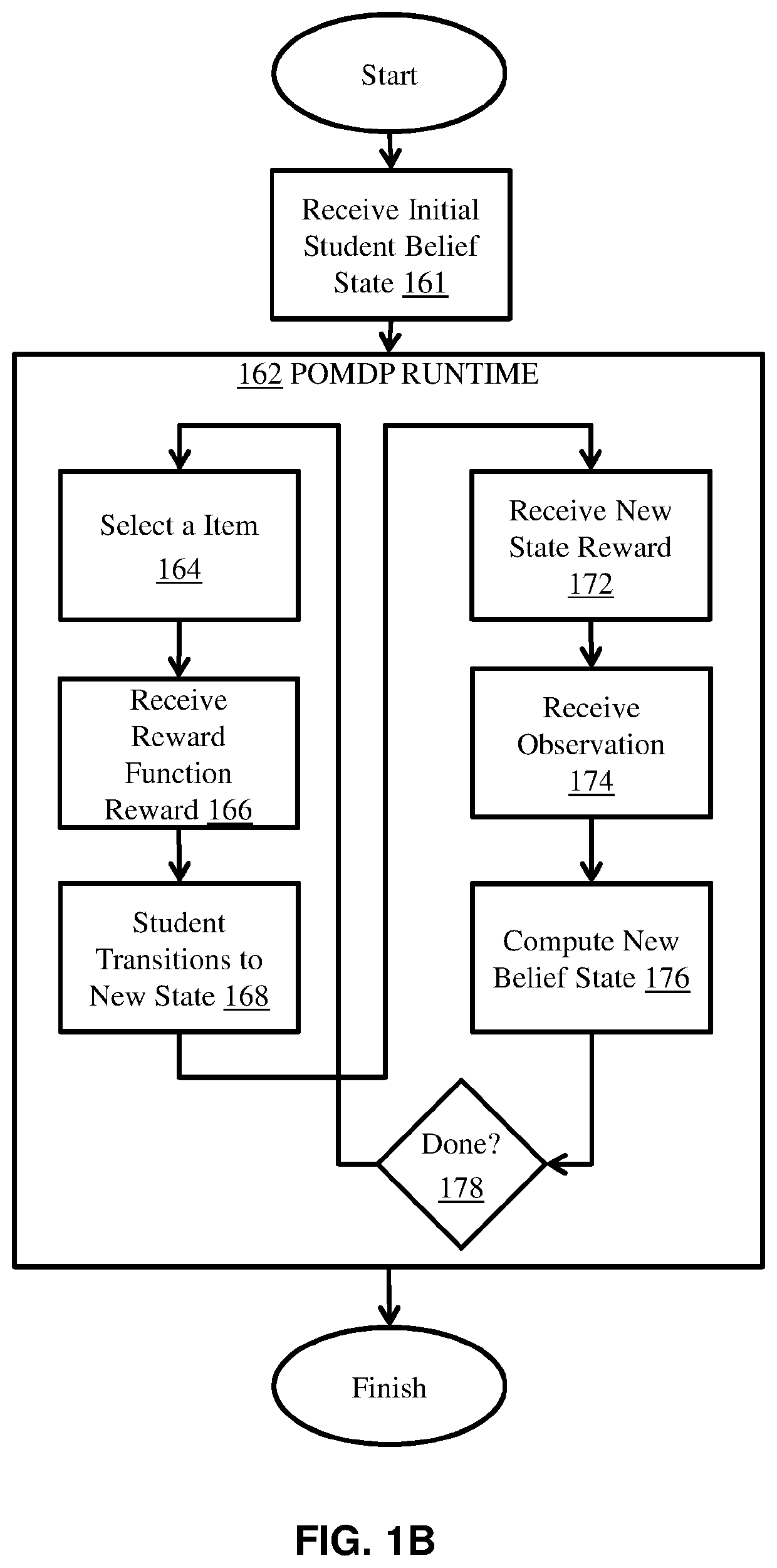

Systems and methods for automated learning

Active | US11188848B1 | Low cost; Fast learning; Probabilistic networks; Machine learning; Automatic learning; Data science

In one embodiment of the invention, a training model for students is provided that models how to present training items to students in a computer-based adaptive trainer. The training model receives student performance data and uses it to infer the students' underlying skill levels throughout the training sequence. Some embodiments of the training model also include machine learning techniques that allow it to adapt to changes in students' skills as they work through the training items it presents. The training model may also be used to inform a training optimization model, or learning model, formulated as a Partially Observable Markov Decision Process (POMDP).

Owner:APTIMA
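The abstract says the training model infers underlying skill levels from performance data but does not give the inference rule. One widely used way to do this is Bayesian Knowledge Tracing, a two-state hidden Markov model of skill mastery. The sketch below shows that belief update as an illustration only, not as APTIMA's model. The parameter names and values (`P_INIT`, `P_LEARN`, `P_GUESS`, `P_SLIP`) are hypothetical.

```python
# Bayesian Knowledge Tracing-style belief update over a hidden binary skill.
# Parameter values are hypothetical and chosen only for illustration.

P_INIT = 0.3    # prior probability that the skill is already mastered
P_LEARN = 0.15  # probability of moving from unmastered to mastered per item
P_GUESS = 0.2   # probability of a correct answer without mastery
P_SLIP = 0.1    # probability of an incorrect answer despite mastery


def update(p_mastered: float, correct: bool) -> float:
    """Condition on one observed response, then apply the learning transition."""
    if correct:
        num = p_mastered * (1 - P_SLIP)
        den = num + (1 - p_mastered) * P_GUESS
    else:
        num = p_mastered * P_SLIP
        den = num + (1 - p_mastered) * (1 - P_GUESS)
    posterior = num / den
    # The student may also acquire the skill while practising this item.
    return posterior + (1 - posterior) * P_LEARN


def trace(responses: list) -> list:
    """Return the belief that the skill is mastered after each response."""
    beliefs, p = [], P_INIT
    for correct in responses:
        p = update(p, correct)
        beliefs.append(p)
    return beliefs


print([round(b, 3) for b in trace([True, True, False, True])])
```

The per-step belief is the kind of quantity a POMDP-based training optimizer could condition on when choosing the next item. How the patent actually links the two models is not stated in the abstract.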

Automatic brain probe guidance system

The disclosure relates to automatic brain-probe guidance systems. Specifically, it is directed to a real-time method and system for guiding a probe to the dorsolateral oscillatory region of the subthalamic nucleus in the brain of a subject in need thereof. Guidance uses a factored Partially Observable Markov Decision Process (POMDP) via a Hidden Markov Model (HMM) represented as a dynamic Bayesian network (DBN).

Owner:ALPHA OMEGA NEURO TECH
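An HMM-based approach like the one described depends on filtering, which means maintaining a probability distribution over hidden states as observations arrive. The sketch below is a generic forward algorithm for a three-state left-to-right HMM. The state names, the "low"/"high" observation alphabet, and all probabilities are hypothetical placeholders, not values from the disclosure. The sketch also does not model the factored POMDP or DBN structure.

```python
# Forward (filtering) algorithm for a small left-to-right HMM.
# States, probabilities, and the observation alphabet are hypothetical.

STATES = ("pre_target", "target", "post_target")

# TRANS[s][s2]: the probe only advances, so the chain never moves backwards.
TRANS = {
    "pre_target": {"pre_target": 0.8, "target": 0.2, "post_target": 0.0},
    "target": {"pre_target": 0.0, "target": 0.8, "post_target": 0.2},
    "post_target": {"pre_target": 0.0, "target": 0.0, "post_target": 1.0},
}

# EMIT[s][o]: probability of a discretized recording feature o in state s.
EMIT = {
    "pre_target": {"low": 0.9, "high": 0.1},
    "target": {"low": 0.2, "high": 0.8},
    "post_target": {"low": 0.7, "high": 0.3},
}

PRIOR = {"pre_target": 1.0, "target": 0.0, "post_target": 0.0}


def forward_filter(observations):
    """Return the normalized belief over states after each observation."""
    belief, history = dict(PRIOR), []
    for t, obs in enumerate(observations):
        if t > 0:
            # Predict: push the previous belief through the transition model.
            belief = {s2: sum(belief[s] * TRANS[s][s2] for s in STATES)
                      for s2 in STATES}
        # Update: weight by the observation likelihood and renormalize.
        unnorm = {s: belief[s] * EMIT[s][obs] for s in STATES}
        z = sum(unnorm.values())
        belief = {s: p / z for s, p in unnorm.items()}
        history.append(belief)
    return history


beliefs = forward_filter(["low", "low", "high", "high", "high"])
print(max(beliefs[-1], key=beliefs[-1].get))  # target
```

The left-to-right transition matrix encodes the assumption that the probe only advances. A guidance policy could then act on the filtered belief, for example by stopping once the probability of the target state is high enough.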

Discontinuous large-bandwidth repeater frequency spectrum monitoring method

Active | CN114826453A | Improve monitoring effect; Easy access; Radio transmission; Transmission monitoring; Frequency spectrum; Digital conversion

The invention discloses a discontinuous large-bandwidth transponder spectrum monitoring method, applied when monitoring equipment observes multiple transponders on a satellite. The method comprises the following steps: S1, establishing a transponder Markov model based on a partially observable Markov decision process; S2, obtaining, by the monitoring equipment, an optimal transponder selection policy through reinforcement learning; S3, monitoring the satellite's transponders according to the optimal transponder selection policy; S4, acquiring the transponder spectrum with a robust spectrum acquisition method based on compressed sampling; and S5, completing, in the receiving and processing module, down-conversion, analog-to-digital conversion, and cognitive processing in the digital domain. The method optimally schedules spatial, temporal, and frequency resolution, improving the effectiveness of the monitoring data.

Owner:NAT INNOVATION INST OF DEFENSE TECH PLA ACAD OF MILITARY SCI
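Step S1 models the transponders within a POMDP. A common simplified version of that setup treats each transponder as a two-state (active/idle) Markov chain and keeps a belief that it is active, updated only when it is sensed. The sketch below assumes that model, hypothetical transition probabilities, and error-free sensing of one transponder per time slot. It also replaces the reinforcement-learned policy of step S2 with a simple myopic rule that senses the transponder most likely to be active. The `Transponder` class and `step` function are illustrative names, not part of the patent.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Transponder:
    """Belief state for one transponder modeled as a two-state Markov chain."""
    p_stay_active: float    # P(active -> active), hypothetical
    p_become_active: float  # P(idle -> active), hypothetical
    belief: float           # current P(transponder is active)

    def predict(self) -> None:
        """Propagate the belief one time slot through the Markov chain."""
        self.belief = (self.belief * self.p_stay_active
                       + (1 - self.belief) * self.p_become_active)


def step(transponders: List[Transponder], observe: Callable[[int], bool]) -> int:
    """Sense one transponder with a myopic rule, then advance every belief."""
    chosen = max(range(len(transponders)), key=lambda i: transponders[i].belief)
    # Assume the chosen transponder's activity is observed without error.
    transponders[chosen].belief = 1.0 if observe(chosen) else 0.0
    for t in transponders:
        t.predict()
    return chosen


bank = [Transponder(0.9, 0.1, b) for b in (0.2, 0.6, 0.4)]
print(step(bank, lambda i: False))  # senses transponder 1 and finds it idle
print(step(bank, lambda i: True))   # next senses transponder 2
```

A learned policy as in step S2 could improve on this rule by trading off exploiting likely-active transponders against refreshing stale beliefs. The myopic rule serves here only as a readable baseline.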

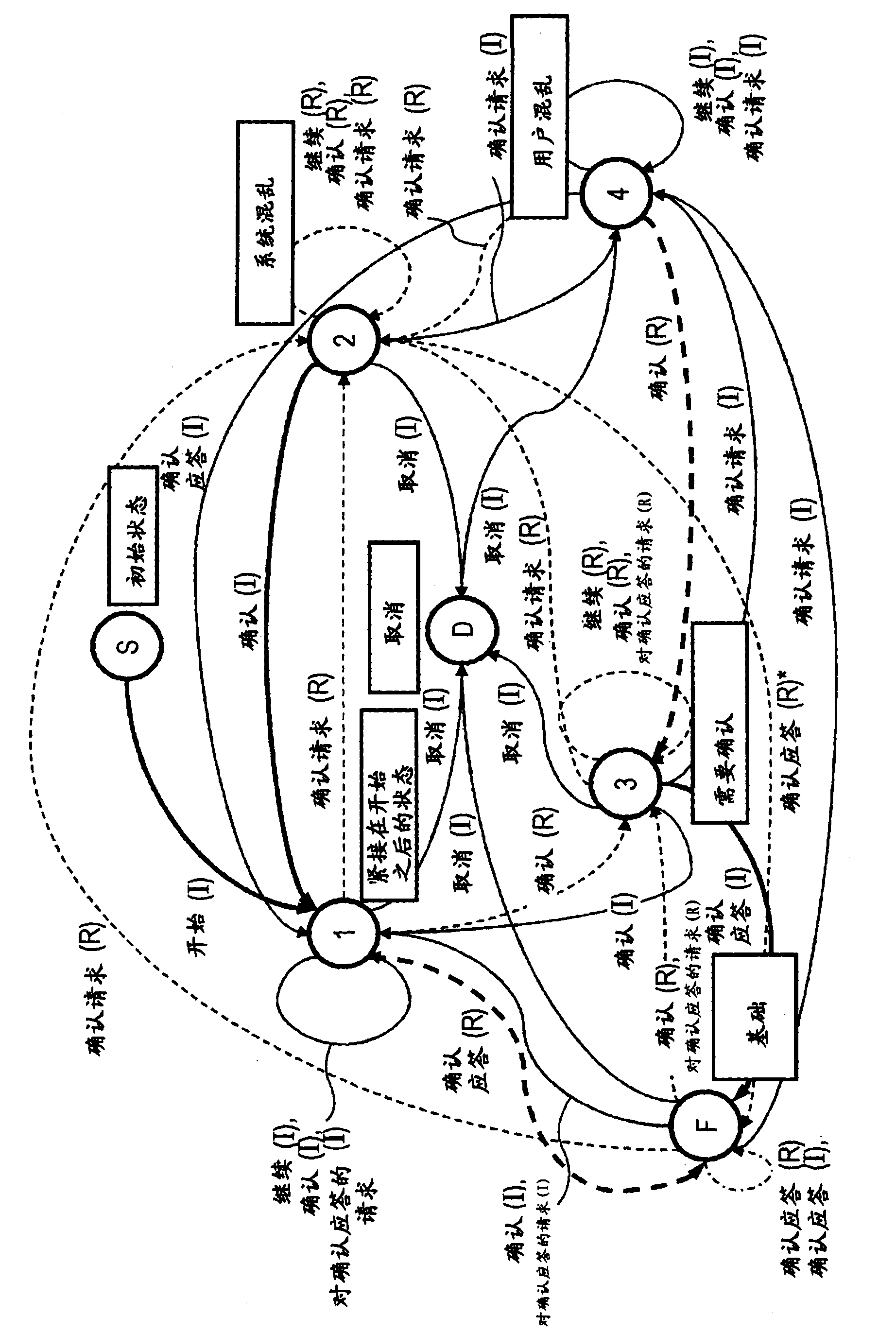

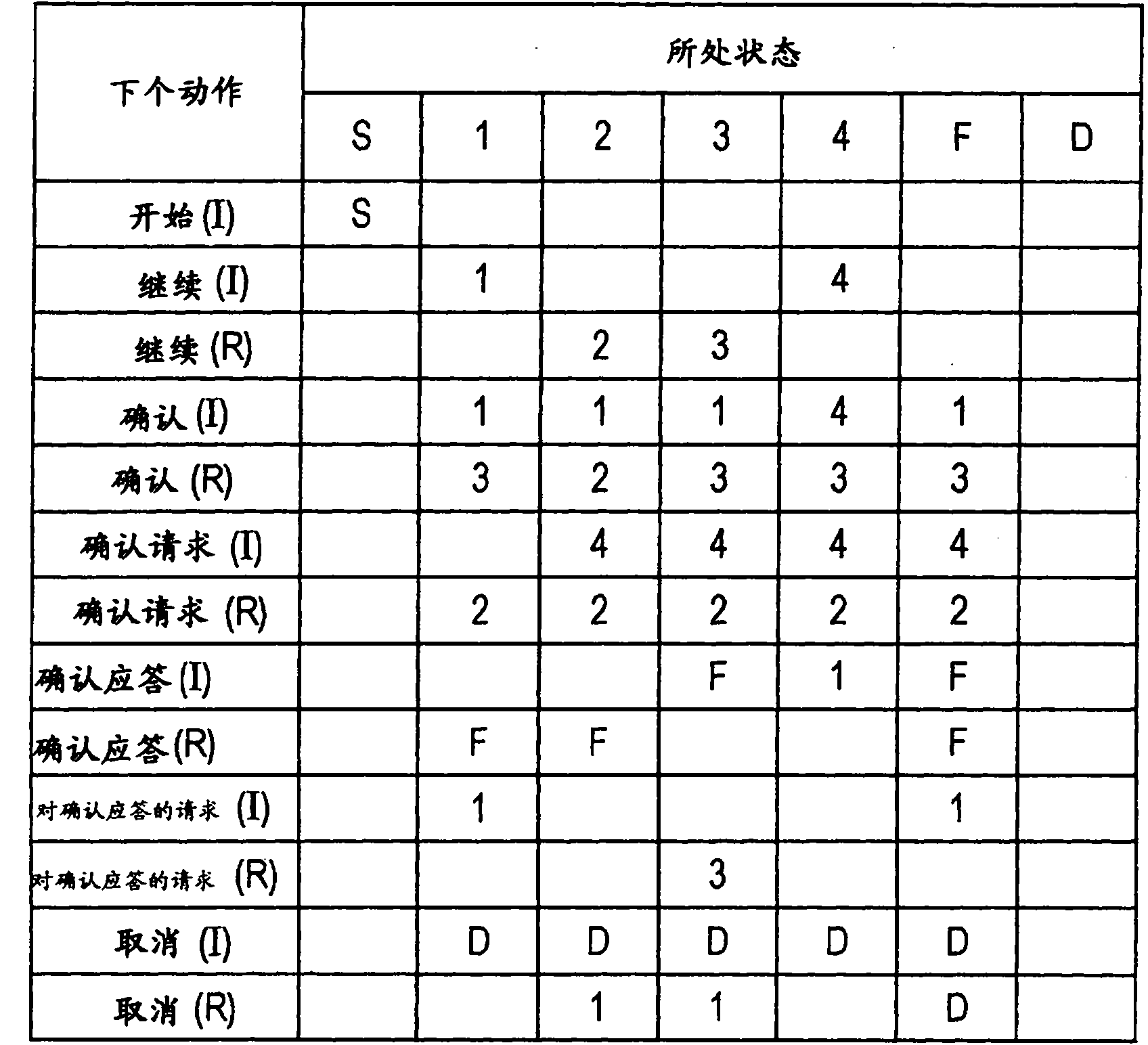

Information processing apparatus, information processing method, and computer program

Inactive | CN101884064A | Achieve understanding; Accurate identification; Speech recognition; Sound input/output; Information processing; Task management

The invention discloses an information processing apparatus, an information processing method, and a computer program that execute a grounding process using a POMDP (Partially Observable Markov Decision Process). The POMDP contains observation information of two kinds. The first is substantive information that includes analysis information from a language analysis unit, which receives a user utterance and performs language analysis. The second is task realization information from a task management unit, which executes tasks. The grounding process is the process of recognizing a user request from a user utterance. Because it is executed by applying the POMDP, the user request can be recognized effectively, rapidly, and accurately, and a task can be executed based on that request.

Owner:SONY CORP

Resource Allocation Method for Wireless Internet of Things Based on Probabilistic Transfer Deep Reinforcement Learning

Active | CN111586146B | Reduce the decision variable space; Reduce Decision Latency; Character and pattern recognition; Transmission; Edge server; Distributed decision

The invention discloses a wireless Internet of Things resource allocation method based on probabilistic transfer deep reinforcement learning. Decision-making agents are distributed across the edge servers so that each agent only needs to make decisions for the users it serves, which greatly reduces the decision variable space and the decision latency. In addition, a service migration model based on a distributed partially observable Markov decision process is proposed. Each agent can observe only limited state information, which would otherwise prevent its decisions from reaching the optimal solution, and the model overcomes this problem.

Owner:GUIZHOU POWER GRID CO LTD

© 2025 PatSnap. All rights reserved.