Emotion recognition method, system and device based on multi-modal feature fusion and medium

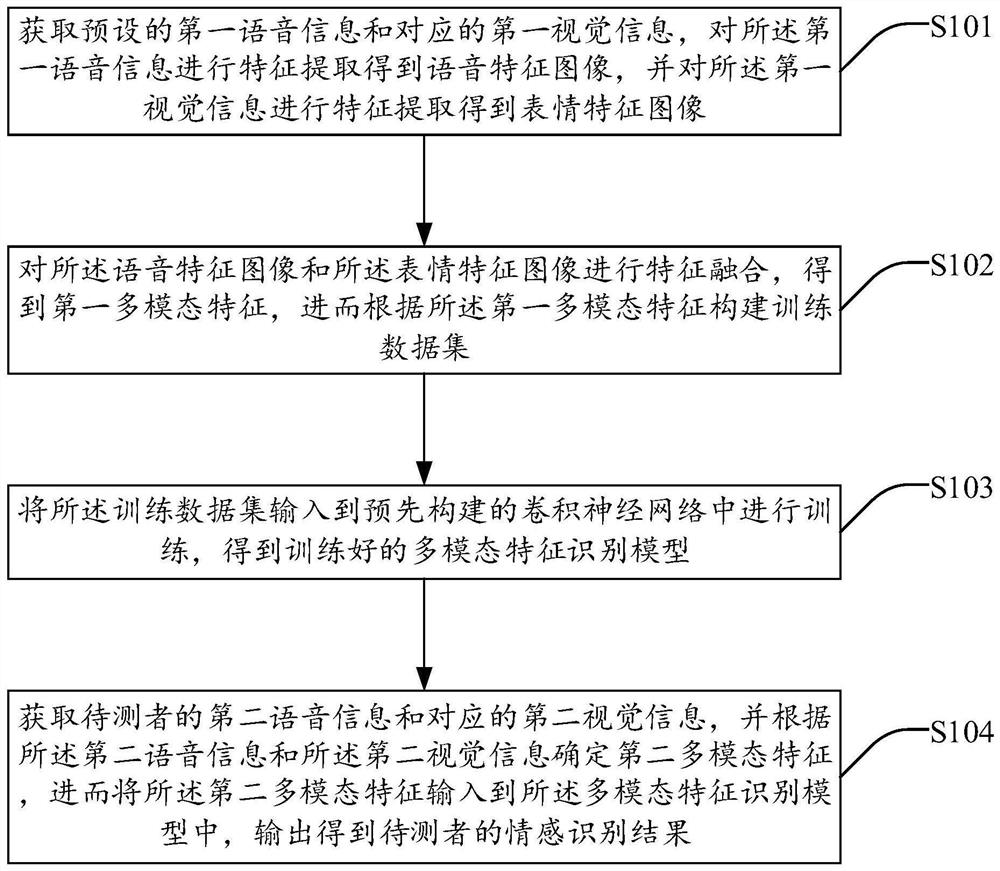

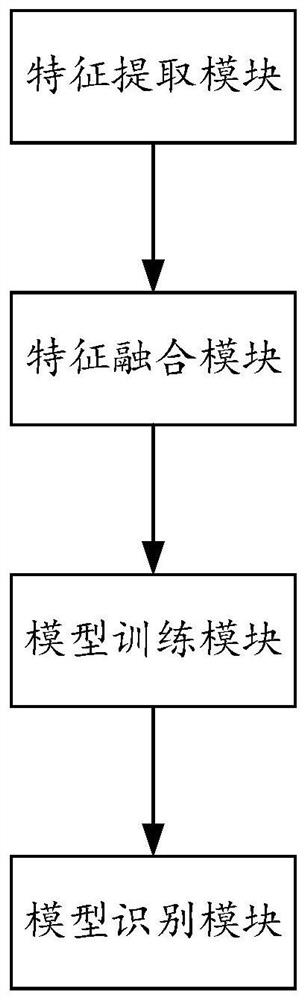

A feature fusion and emotion recognition technology, applied in the field of emotion recognition, can solve problems such as unconsidered, low recognition efficiency, high model complexity, etc., to achieve the effect of improving efficiency, improving accuracy, and reducing model complexity

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment Construction

[0048]Embodiments of the present invention are described in detail below, examples of which are shown in the drawings, wherein the same or similar reference numerals designate the same or similar elements or elements having the same or similar functions throughout. The embodiments described below by referring to the figures are exemplary only for explaining the present invention and should not be construed as limiting the present invention. For the step numbers in the following embodiments, it is only set for the convenience of illustration and description, and the order between the steps is not limited in any way. The execution order of each step in the embodiments can be adapted according to the understanding of those skilled in the art sexual adjustment.

[0049] In the description of the present invention, multiple means two or more. If the first and the second are described only for the purpose of distinguishing technical features, it cannot be understood as indicating or...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com