Natural image classification method combining self-knowledge distillation and unsupervised method

A natural image classification method, applied in character and pattern recognition, instruments, biological neural network models, etc., that addresses problems such as the large number of parameters in deep learning models and their inflexible deployment and use.

Embodiment Construction

[0028] To make the objectives, technical solutions, and advantages of the present invention clearer, the invention is described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

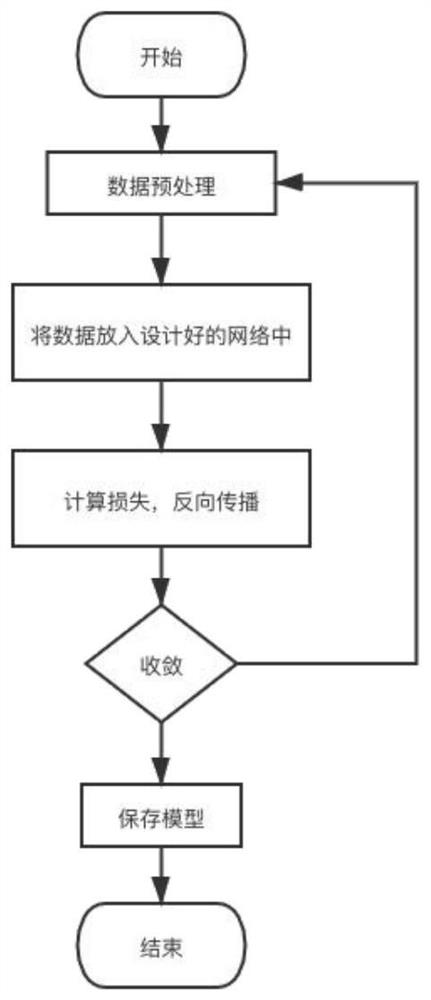

[0029] S1: Data part

[0030] This embodiment uses the training set of the Cifar100 image classification data set. The Cifar100 data set contains 60,000 images in total, of which 50,000 form the training set and 10,000 form the test set, covering 100 categories.

[0031] S1.1 The data are augmented using simple random cropping and horizontal flipping.

[0032] S1.2 The data are normalized, then randomly shuffled and divided into batches.
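
Steps S1.1 and S1.2 can be illustrated with a minimal pure-Python sketch. It is not the patent's implementation: the function names, the single-channel image layout (a list of rows), the padding of 4 pixels, the flip probability of 0.5, and the scalar mean and standard deviation are all assumptions made for illustration.

```python
import random

def random_crop(img, pad=4, rng=random):
    """Zero-pad an H x W image by `pad` pixels per side, then cut out a
    randomly positioned H x W window (assumed form of 'random cropping')."""
    h, w = len(img), len(img[0])
    padded = [[0.0] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for r in range(h):
        for c in range(w):
            padded[r + pad][c + pad] = img[r][c]
    top = rng.randint(0, 2 * pad)   # inclusive bounds
    left = rng.randint(0, 2 * pad)
    return [row[left:left + w] for row in padded[top:top + h]]

def random_hflip(img, p=0.5, rng=random):
    """Mirror the image left to right with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in img]
    return img

def normalize(img, mean, std):
    """Shift and scale every pixel: (value - mean) / std."""
    return [[(v - mean) / std for v in row] for row in img]

def make_batches(samples, batch_size, rng=random):
    """Shuffle the sample order, then split it into consecutive batches."""
    order = list(range(len(samples)))
    rng.shuffle(order)
    return [[samples[i] for i in order[s:s + batch_size]]
            for s in range(0, len(order), batch_size)]
```

In practice a three-channel image would apply the same crop offsets and flip decision to every channel and use per-channel statistics for normalization; the single-channel form here only keeps the sketch short.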

[0033] S2: Training part

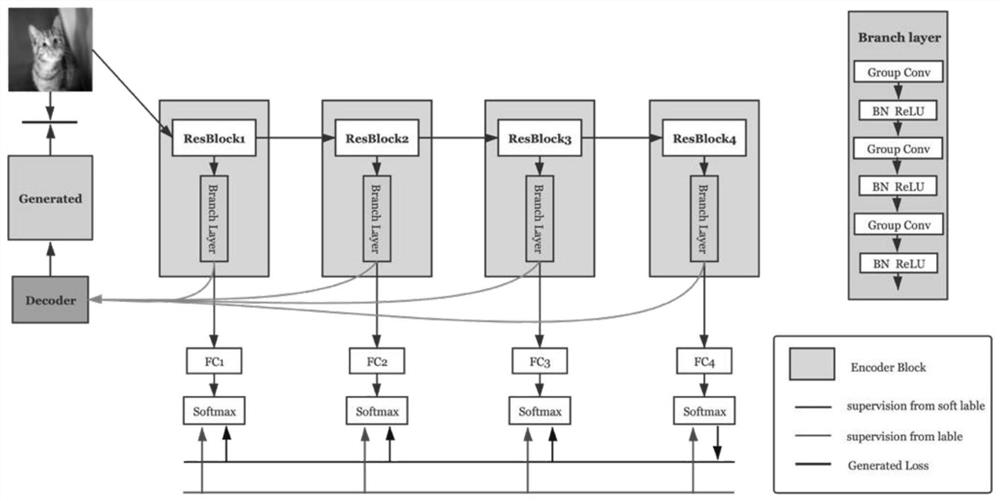

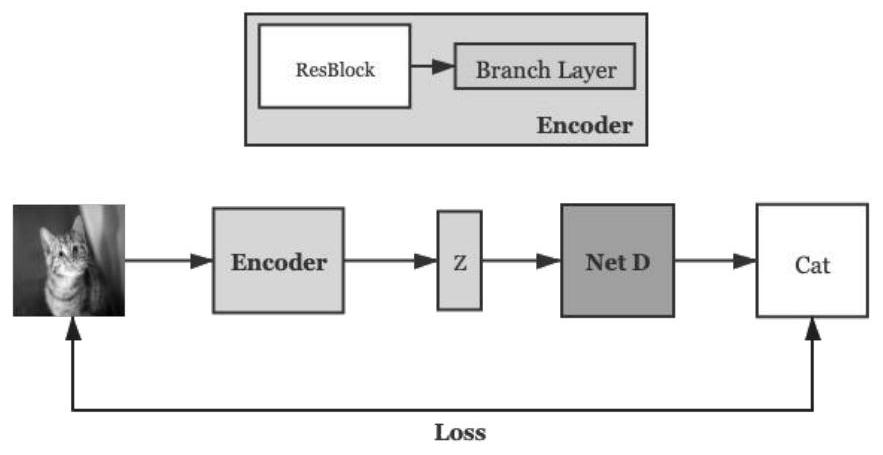

[0034] S2.1 The model is divided into sections by depth, and the deepest section serves as the teacher network, supervising the shallower branches by distilling the knowledge in its output.
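
The supervision in S2.1 can be sketched as a distillation loss between the deepest branch and each shallower branch. The source does not give the loss formula, so everything here is an assumption: the use of KL divergence on temperature-softened outputs, the temperature value of 3.0, the conventional T² scaling, averaging over branches, and all function names.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, computed stably by subtracting the max."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))

def self_distillation_loss(branch_logits, temperature=3.0):
    """branch_logits is ordered from shallowest to deepest branch.
    The last entry plays the teacher; every earlier entry is a student.
    Returns the mean KL(teacher || student), scaled by temperature**2."""
    if len(branch_logits) < 2:
        raise ValueError("need at least one shallow branch and the deepest branch")
    teacher = softmax(branch_logits[-1], temperature)
    losses = [kl_divergence(teacher, softmax(student, temperature))
              for student in branch_logits[:-1]]
    return (temperature ** 2) * sum(losses) / len(losses)
```

During training, a term like this would typically be added to the ordinary classification loss of each branch, with the teacher's output treated as a fixed target so that gradients flow only into the shallower branches; whether the patent does so is not stated in the text available here.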

[0035] S2.2 designed joined together, the entire network is const...
