Device-free personnel action recognition and position estimation method based on multi-task learning

A multi-task learning and action recognition technology, applied in the field of device-free personnel action recognition and position estimation, that addresses the problem of increasing positioning errors and improves estimation performance, practicability, and convenience.

Detailed Description of Embodiments

[0040] Objects, advantages and features of the present invention will be illustrated and explained by the following non-limiting description of preferred embodiments. These embodiments are only typical examples of applying the technical solutions of the present invention, and all technical solutions formed by adopting equivalent replacements or equivalent transformations fall within the protection scope of the present invention.

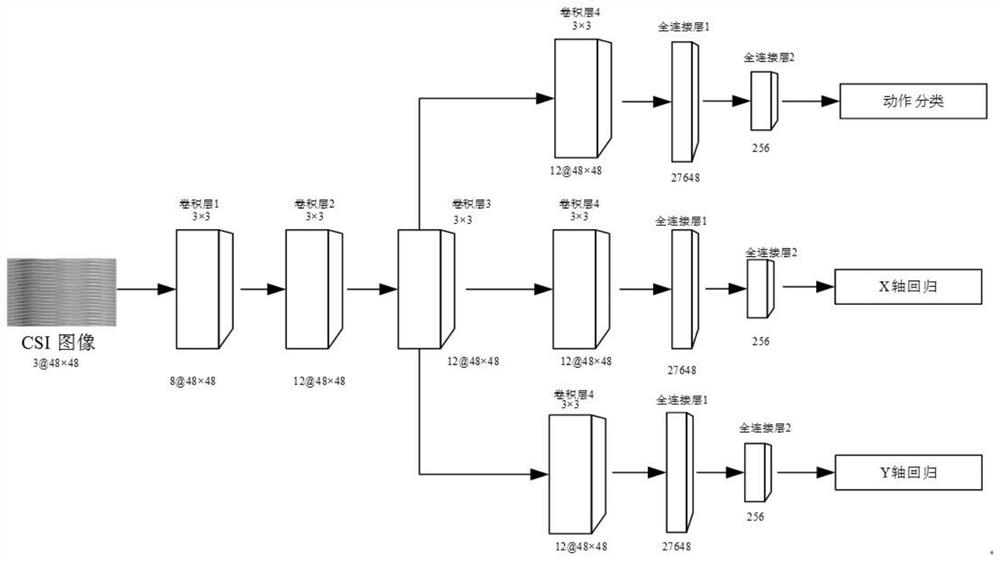

[0041] The present invention discloses a device-free personnel action recognition and position estimation method based on multi-task learning, proposed to address the defects of the prior art. The method achieves high positioning accuracy and a high action recognition rate, while its structure is simple and its implementation cost is low.

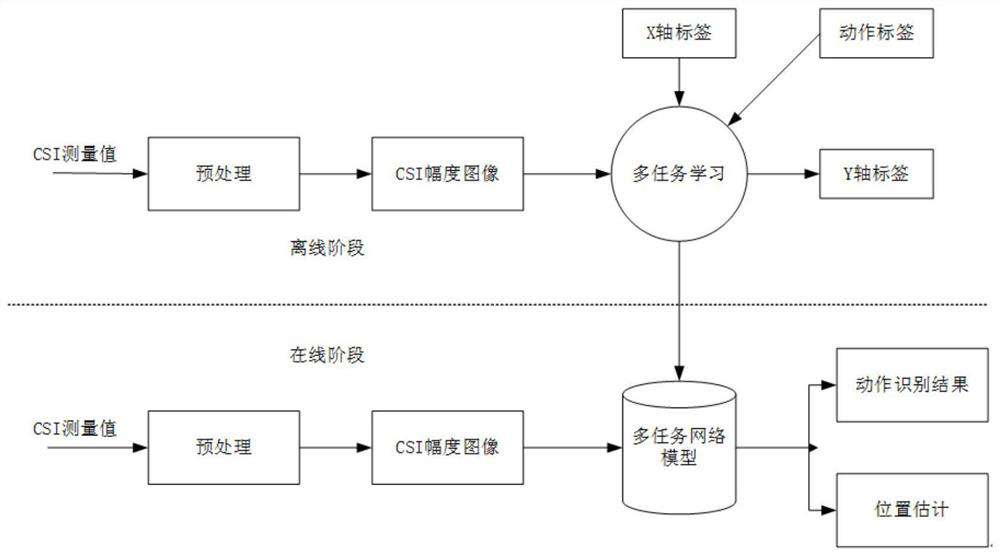

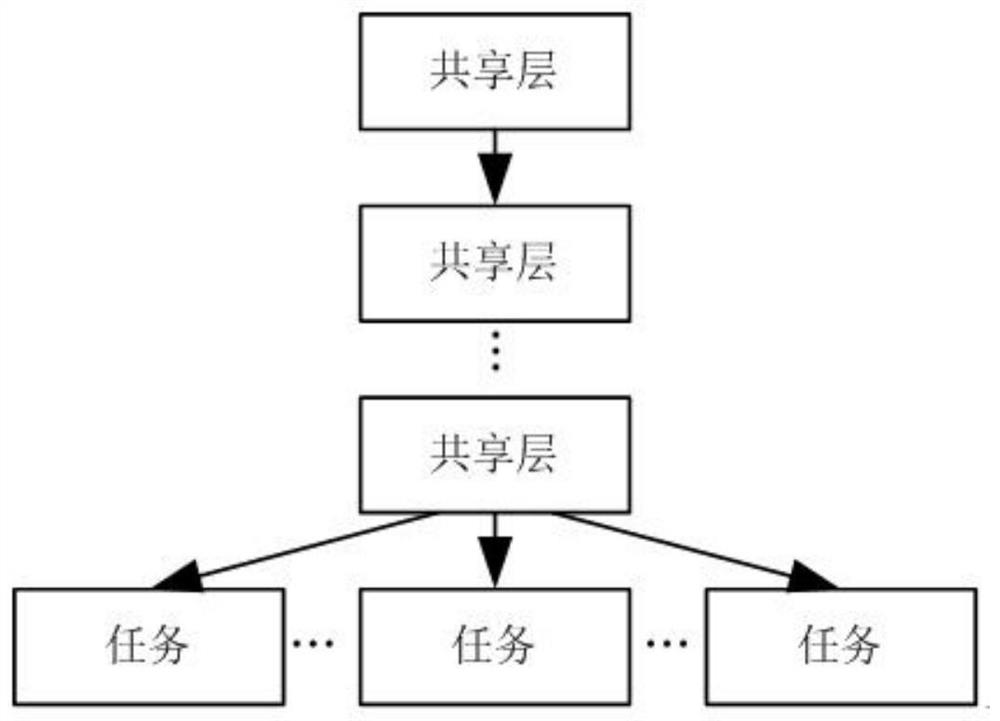

[0042] A device-free personnel action recognition and position estimation method bas...
