Multi-exposure image fusion method based on depth perception enhancement

An image fusion and depth perception technology, applied in the fields of HDR image generation and image fusion, that achieves rich detail information, good global structural consistency, and good computational efficiency and scalability.

Embodiment Construction

[0076] The present invention is further described below with reference to the accompanying drawings and specific embodiments, but the following embodiments do not limit the present invention in any way.

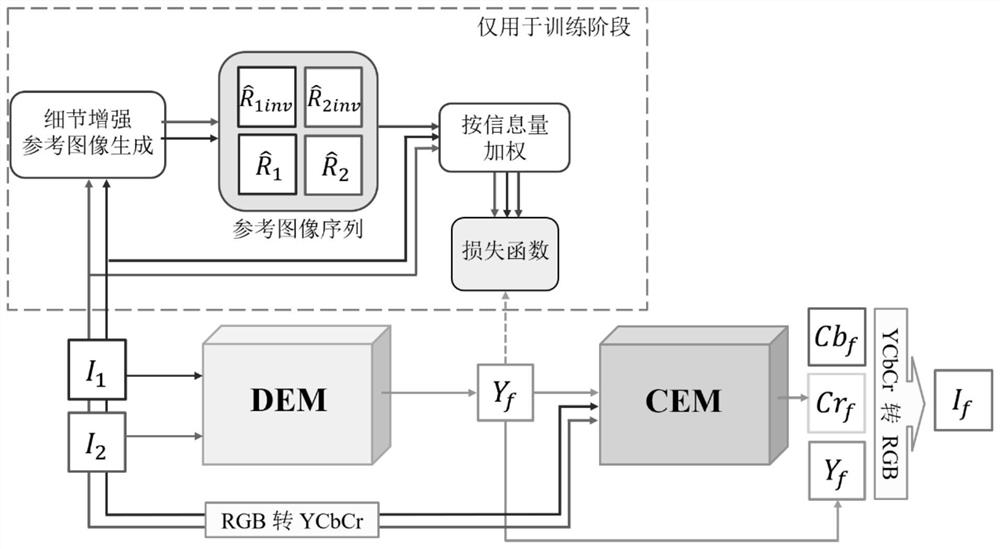

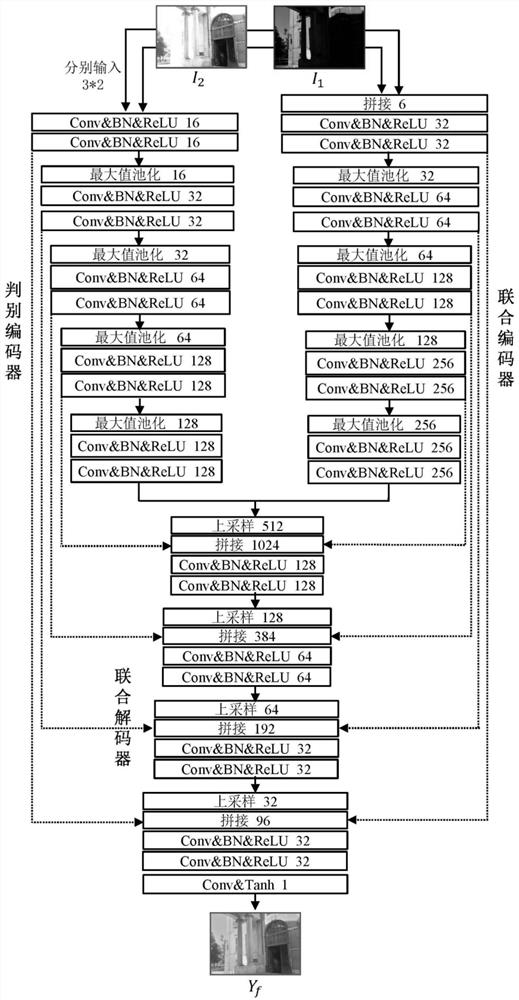

[0077] The multi-exposure image fusion method based on depth perception enhancement proposed by the present invention, as shown in Figure 1, includes the following steps:

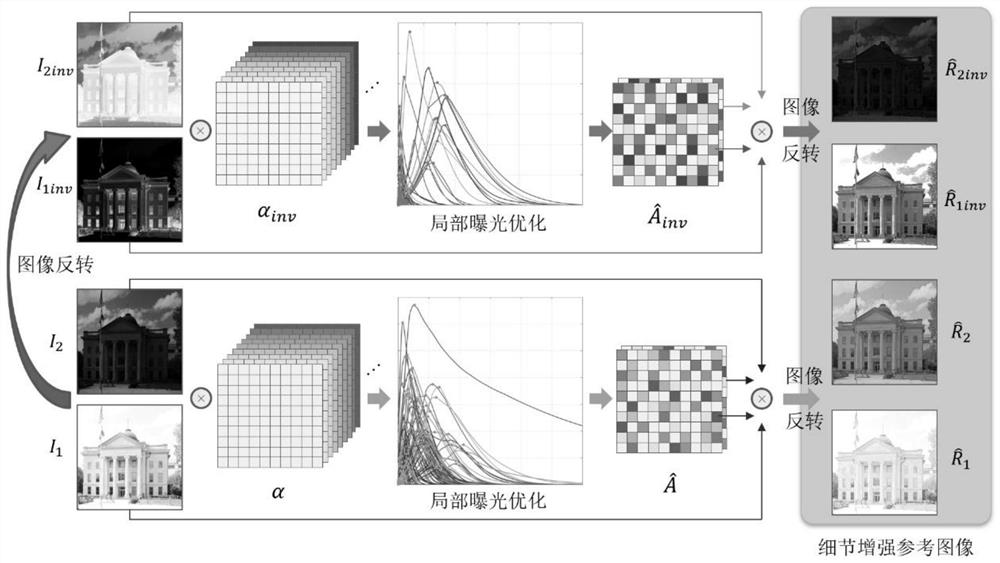

[0078] Step 1. For the two source images $I_1$ and $I_2$, a two-way detail enhancement method is designed to enhance them, obtaining multiple detail-enhanced reference images for each source image and forming a sequence of detail-enhanced reference images. As shown in Figure 2, the process is as follows:

[0079] 1.1 Forward enhancement: Decompose the source image $I$ as $I = R \odot E$, where $R$ and $E$ represent the scene detail component and the exposure component, respectively, and the operator $\odot$ denotes element-wise multiplication. A simple transformation yields $R = I \odot E^{-1}$, in which $E^{-1}$ is obtained by taking the reciprocal of $E$ element by element....
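The decomposition in step 1.1 can be illustrated with a minimal NumPy sketch. It assumes the source image is the element-wise product of a detail component R and an exposure component E, so that R is recovered by multiplying the image by the element-wise reciprocal of E. The text available here does not say how E is estimated, so `estimate_exposure` below is a hypothetical stand-in (a 3x3 box filter), and the function names and the `eps` clamp are likewise assumptions made for illustration only.

```python
import numpy as np


def estimate_exposure(image, eps=1e-3):
    """Hypothetical stand-in for the exposure component E.

    Assumption: E is approximated by a 3x3 box-filtered copy of the image.
    The source does not specify the estimator actually used.
    """
    padded = np.pad(image, 1, mode="edge")
    h, w = image.shape
    acc = np.zeros_like(image, dtype=np.float64)
    # Sum the nine shifted copies of the image to form a 3x3 box filter.
    for dy in range(3):
        for dx in range(3):
            acc += padded[dy:dy + h, dx:dx + w]
    # Clamp away from zero so the element-wise reciprocal stays finite.
    return np.maximum(acc / 9.0, eps)


def decompose(image, eps=1e-3):
    """Split a single-channel image into detail R and exposure E, with I = R * E."""
    image = np.asarray(image, dtype=np.float64)
    E = estimate_exposure(image, eps)
    E_inv = 1.0 / E        # element-wise reciprocal of E
    R = image * E_inv      # element-wise product gives the detail component
    return R, E


# Synthetic single-channel image with values in (0, 1].
rng = np.random.default_rng(0)
I = rng.uniform(0.05, 1.0, size=(8, 8))
R, E = decompose(I)

# Multiplying the two components back together should reproduce the input.
reconstruction_error = np.max(np.abs(R * E - I))
```

Whatever estimator is used for E, the identity holds by construction: R is defined as the image divided element-wise by E, so multiplying R and E reproduces the image up to floating-point rounding.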
