Knowledge distillation-based confidential text recognition model training method, system and device

A text recognition and model training technology in the field of text recognition. It addresses the large size and slow prediction speed of pre-trained models, and achieves higher classification accuracy with fast prediction speed.


Examples

Embodiment 1

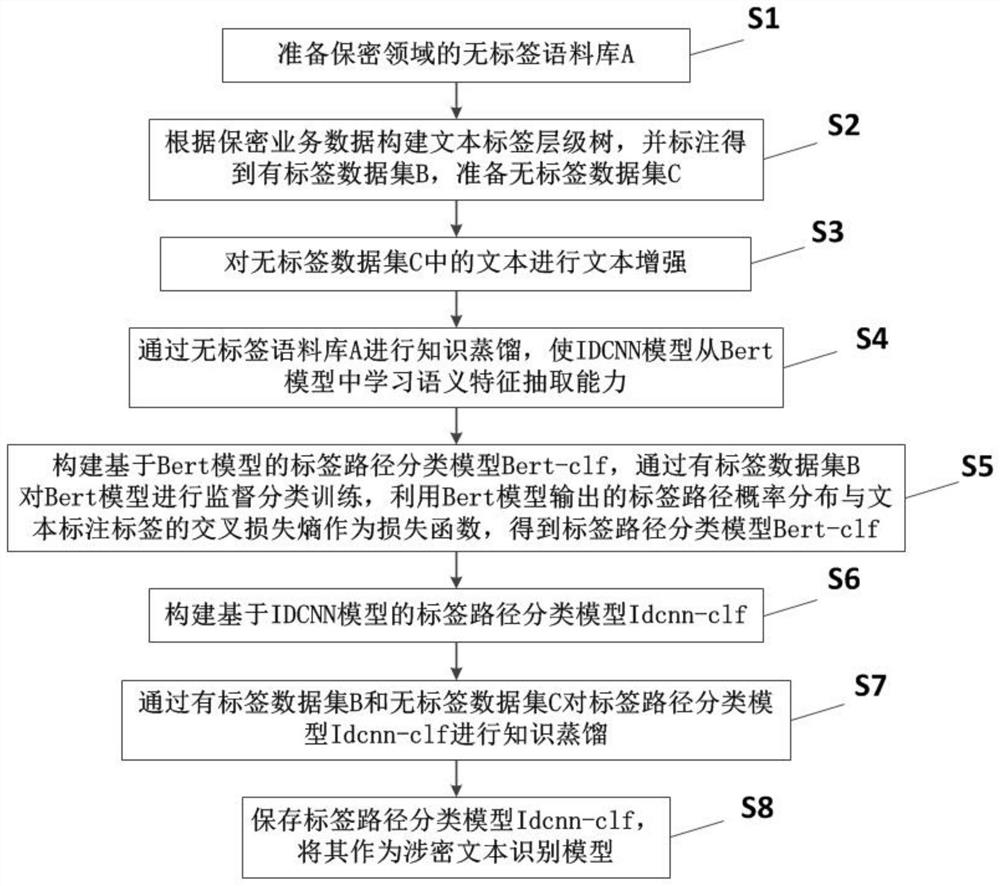

[0065] As shown in Figure 1, this embodiment provides a knowledge distillation-based method for training a confidential text recognition model, including the following steps:

[0066] S1: Prepare an unlabeled corpus A in the confidential domain.

[0067] The purpose of this step is to prepare a large-scale, unlabeled confidential-domain corpus for the subsequent knowledge distillation.

[0068] S2: Construct a hierarchical text label tree from the confidential business data, annotate texts to build the labeled data set B, and prepare the unlabeled data set C.

[0069] Each node of the label hierarchy tree has a label; the label of each child node belongs to (is a sub-category of) the label of its parent node; and the category label of each text in the labeled data set B is a leaf-node label of the tree.
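To make these constraints concrete, here is a minimal Python sketch, not the patent's implementation, of a label hierarchy tree and of the root-to-leaf label path for each leaf label (presumably the kind of path a label-path classifier such as Bert-clf predicts). The class `LabelNode`, the functions `leaf_paths` and `is_valid_category`, and all category names are hypothetical; the sketch also assumes leaf labels are unique.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LabelNode:
    """A node of the label hierarchy tree; every node carries one label."""

    label: str
    children: list[LabelNode] = field(default_factory=list)

    def add(self, label: str) -> LabelNode:
        """Attach a child node whose label is a sub-category of this node's label."""
        child = LabelNode(label)
        self.children.append(child)
        return child


def leaf_paths(node: LabelNode, prefix: tuple[str, ...] = ()) -> dict[str, tuple[str, ...]]:
    """Map every leaf label to its root-to-leaf label path (assumes unique leaf labels)."""
    path = prefix + (node.label,)
    if not node.children:
        return {node.label: path}
    paths: dict[str, tuple[str, ...]] = {}
    for child in node.children:
        paths.update(leaf_paths(child, path))
    return paths


def is_valid_category(label: str, root: LabelNode) -> bool:
    """A category label for a text in data set B must be a leaf label of the tree."""
    return label in leaf_paths(root)


# Hypothetical example tree; real categories would come from confidential business data.
root = LabelNode("root")
classified = root.add("classified")
classified.add("military")
classified.add("economic")
root.add("unclassified").add("public")

paths = leaf_paths(root)
print(paths["economic"])  # ('root', 'classified', 'economic')
print(is_valid_category("classified", root))  # False: internal node, not a leaf
```

Storing the full path rather than only the leaf means the parent labels of a prediction come for free, which is what the child-belongs-to-parent rule implies.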

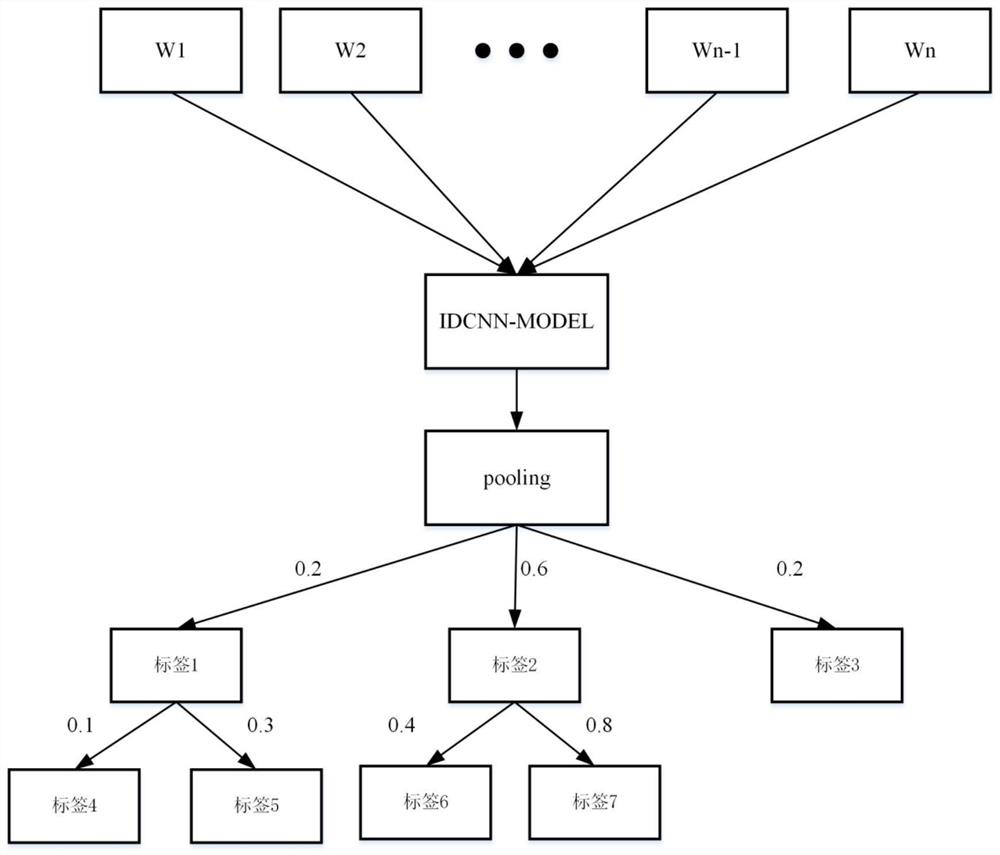

[0070] Specifically, this step constructs a label hierarchy tree based on confidential business knowledge; each node of the tree has a corr...

Embodiment 2

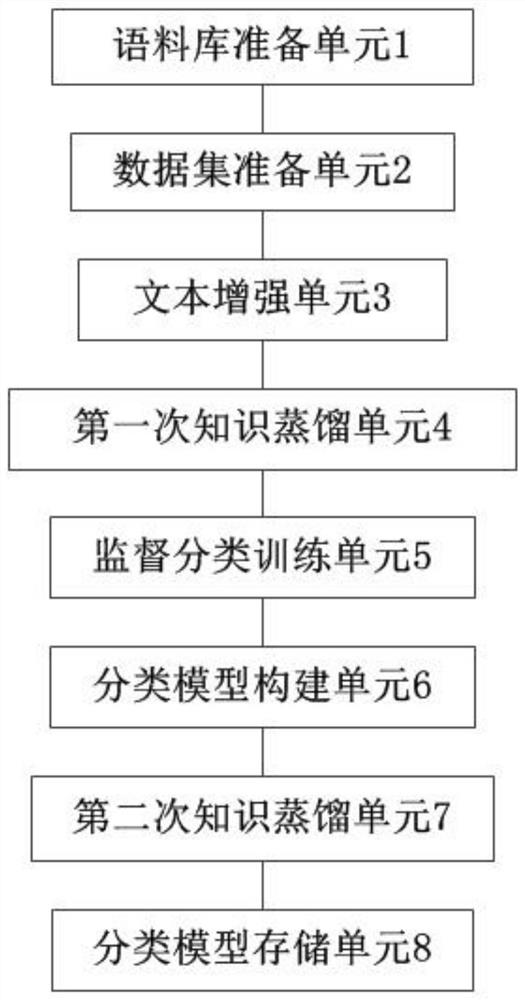

[0097] Based on Embodiment 1, and as shown in Figure 3, the present invention also discloses a knowledge distillation-based confidential text recognition model training system, including: a corpus preparation unit 1, a data set preparation unit 2, a text enhancement unit 3, a first knowledge distillation unit 4, a supervised classification training unit 5, a classification model construction unit 6, a second knowledge distillation unit 7, and a classification model storage unit 8.

[0098] The corpus preparation unit 1 is used to prepare the unlabeled corpus A in the confidential domain.

[0099] The data set preparation unit 2 is used to construct a hierarchical text label tree from the confidential business data, annotate texts to build the labeled data set B, and prepare the unlabeled data set C.

[0100] The text enhancement unit 3 is used to perform text enhancement on the texts in the unlabeled data set C.

[0101] The first knowledge distillation unit 4 is used to perform kn...
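This excerpt does not say which operations the text enhancement unit 3 applies to data set C. As a hypothetical illustration only, the sketch below applies two common token-level augmentations in the style of EDA (random swap and random deletion) to a tokenized text. The functions `random_swap`, `random_deletion`, and `augment`, their parameters, and the choice of operations are all assumptions.

```python
from __future__ import annotations

import random


def random_swap(tokens: list[str], n_swaps: int, rng: random.Random) -> list[str]:
    """Swap the tokens at two random positions, n_swaps times (EDA-style)."""
    out = list(tokens)
    if len(out) < 2:
        return out
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out


def random_deletion(tokens: list[str], p: float, rng: random.Random) -> list[str]:
    """Drop each token independently with probability p, keeping at least one token."""
    if not tokens:
        return []
    kept = [t for t in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]


def augment(tokens: list[str], n_variants: int = 4, p_delete: float = 0.1,
            n_swaps: int = 1, seed: int = 0) -> list[list[str]]:
    """Produce n_variants augmented copies, alternating swap and deletion."""
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    variants = []
    for k in range(n_variants):
        if k % 2 == 0:
            variants.append(random_swap(tokens, n_swaps, rng))
        else:
            variants.append(random_deletion(tokens, p_delete, rng))
    return variants


# Hypothetical tokenized text from the unlabeled data set C.
tokens = ["the", "contract", "value", "must", "not", "be", "disclosed"]
for variant in augment(tokens, seed=42):
    print(" ".join(variant))
```

Both operations only perturb surface form, so each variant stays close to its source text; whether that suits the patent's purpose for data set C is not stated in this excerpt.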

Embodiment 3

[0107] This embodiment discloses a knowledge distillation-based confidential text recognition model training device, including a processor and a memory, wherein the processor, when executing the knowledge distillation-based confidential text recognition model training program stored in the memory, implements the following steps:

[0108] 1. Prepare the unlabeled corpus A in the confidential domain.

[0109] 2. Construct a hierarchical text label tree from the confidential business data, annotate texts to build the labeled data set B, and prepare the unlabeled data set C.

[0110] 3. Perform text enhancement on the texts in the unlabeled data set C.

[0111] 4. Perform knowledge distillation on the unlabeled corpus A so that the IDCNN model learns semantic feature extraction from the Bert model.

[0112] 5. Build Bert-clf, a label-path classification model based on the Bert model, and conduct supervised classification training on the Bert model through the labeled data se...
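Step 4 states only that the IDCNN model (student) learns semantic feature extraction from the Bert model (teacher) on corpus A; the loss function is not given in this excerpt. The plain-Python sketch below shows two common distillation objectives: feature matching by mean squared error between student and teacher sentence vectors, and temperature-softened KL divergence between output distributions (the T² scaling follows the usual Hinton et al. convention). All function names are hypothetical, and `feature_mse` assumes the IDCNN output has already been projected to the teacher's hidden size (768 for BERT-base).

```python
import math


def feature_mse(student: list[float], teacher: list[float]) -> float:
    """Mean squared error between a student feature vector and a teacher feature vector."""
    if len(student) != len(teacher):
        raise ValueError("student and teacher vectors must have the same dimension")
    return sum((s - t) ** 2 for s, t in zip(student, teacher)) / len(student)


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_target_kl(student_logits: list[float], teacher_logits: list[float],
                   temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def distillation_batch_loss(student_feats: list[list[float]],
                            teacher_feats: list[list[float]]) -> float:
    """Average feature-matching loss over a batch of unlabeled sentences."""
    losses = [feature_mse(s, t) for s, t in zip(student_feats, teacher_feats)]
    return sum(losses) / len(losses)


# Hypothetical 3-dimensional vectors standing in for real sentence representations.
teacher_vec = [0.2, -0.1, 0.4]
student_vec = [0.1, 0.0, 0.4]
print(feature_mse(student_vec, teacher_vec))
print(soft_target_kl([1.0, 0.5, -0.2], [2.0, 0.1, -1.0]))
```

In an actual training loop these losses would typically be computed on tensors, with gradients flowing only into the IDCNN student while the Bert teacher stays frozen.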

© 2025 PatSnap. All rights reserved.