Mixed reality interactive guidance system and method based on adaptive visual differences

A mixed reality technology based on adaptive visual differences, applied in the fields of user/computer input/output interaction, navigation, and mapping. It addresses two problems: existing guidance is unfriendly to people with vision defects, and differentiated presentation for people with different vision states is not considered. Its effects are an improved user experience, improved indoor positioning accuracy, and a more immersive experience.


Examples

Embodiment 1

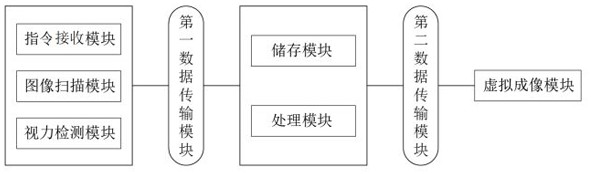

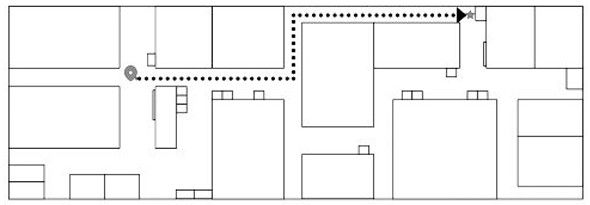

[0063] The mixed reality interactive guidance system of this embodiment can perform indoor wayfinding and navigation in public places. As shown in Figure 1, the system includes an instruction receiving module, an image scanning module, a vision detection module, a storage module, a processing module, a virtual imaging module, a first data transmission module, and a second data transmission module. The instruction receiving module, the image scanning module, and the vision detection module send data to the processing module through the first data transmission module, and the processing module sends control instructions to the virtual imaging module through the second data transmission module. The processing module is also connected to the storage module and can retrieve data from it. The physical form of the system in this embodiment is a pair of head-mounted MR glasses, with each module embedded in a corresponding position of the frame of the MR glasses...
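The data flow described in [0063] can be sketched roughly as follows. This is only an illustration of the routing between modules, not the patent's implementation: the class names, the `Message` type, the `Channel` stand-in for the two data transmission modules, and the dictionary used as the storage module are all hypothetical, and the excerpt does not say what the processing module computes, so the sketch simply forwards the input together with whatever the storage module holds for that source.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Message:
    """Data sent by one of the three input modules (hypothetical type)."""
    source: str   # e.g. "instruction", "image_scan", "vision_detection"
    payload: Any


class Channel:
    """Hypothetical stand-in for a data transmission module."""

    def __init__(self, handler: Callable[[Any], None]) -> None:
        self.handler = handler
        self.count = 0  # number of items forwarded so far

    def send(self, data: Any) -> None:
        self.count += 1
        self.handler(data)


class VirtualImagingModule:
    """Collects the control instructions it is asked to render."""

    def __init__(self) -> None:
        self.rendered: list[dict] = []

    def render(self, instruction: dict) -> None:
        self.rendered.append(instruction)


class ProcessingModule:
    """Receives input data, consults storage, emits a control instruction."""

    def __init__(self, storage: dict, send_to_imaging: Callable[[dict], None]) -> None:
        self.storage = storage                  # stands in for the storage module
        self.send_to_imaging = send_to_imaging  # second data transmission module

    def receive(self, msg: Message) -> None:
        # Assumption: the real processing logic is not given in the excerpt.
        stored = self.storage.get(msg.source)
        self.send_to_imaging(
            {"source": msg.source, "payload": msg.payload, "stored": stored}
        )


def build_system(storage: dict):
    """Wire the modules: inputs -> first channel -> processing -> second channel -> imaging."""
    imaging = VirtualImagingModule()
    second = Channel(imaging.render)
    processing = ProcessingModule(storage, second.send)
    first = Channel(processing.receive)
    return first, second, imaging


if __name__ == "__main__":
    first, second, imaging = build_system({"vision_detection": "layout-A"})
    first.send(Message("vision_detection", -2))
    print(imaging.rendered)
```

Keeping the two channels as separate objects mirrors the text, where input data and control instructions travel through different transmission modules.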

Embodiment 2

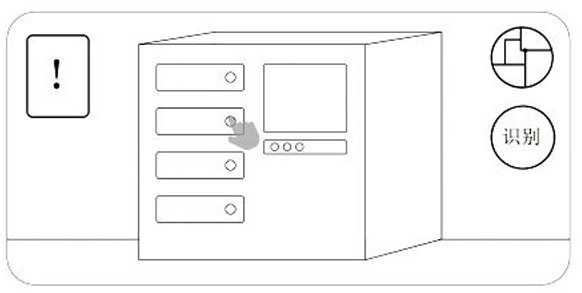

[0073] On the basis of Embodiment 1, the system of this embodiment also provides facility identification: it identifies the target facility and presents the operation method of that facility. The target facility is the facility selected by the wearer. Facilities are generally smart facilities deployed in public places, such as ticket vending machines, vending machines, and shared equipment.

[0074] In this embodiment, the storage module also stores three-dimensional object models and operation methods of indoor facilities in public places; the processing module also includes a facility identification sub-module. When the image scanning module receives the facility identification instruction, it scans the target facility and extracts facility feature information, where the facility feature information refers to the depth image of the target facility, and transmits the facility feature information to the processing module through the first data transmissi...

Embodiment 3

[0077] This embodiment provides a method for constructing the correspondence between vision states and interface presentation schemes, where an interface presentation scheme includes a layout and a color scheme.

[0078] The specific construction process is as follows:

[0079] (1) Predefine several different vision states and the diopters corresponding to each vision state.

[0080] In this embodiment, the diopter is x D (-3 ≤ x ≤ 2), where D is the diopter unit. Vision is divided into six states with diopters of -3D, -2D, -1D, 0D, 1D, and 2D: 0D means normal vision; 1D and 2D represent presbyopia of 100 and 200 degrees, respectively; and -1D, -2D, and -3D represent myopia of 100, 200, and 300 degrees, respectively.
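As a minimal sketch of step (1), the six predefined vision states can be held in a small table and a measured diopter mapped onto one of them. The function names `classify` and `describe` are hypothetical, and snapping a measured value to the nearest predefined state is an assumption made here for illustration; the excerpt only lists the six states and the range -3 ≤ x ≤ 2.

```python
# The six predefined vision states from step (1), in diopters (D).
VISION_STATES_D = (-3, -2, -1, 0, 1, 2)


def describe(state: int) -> str:
    """Return the description the text gives for a predefined state."""
    if state not in VISION_STATES_D:
        raise ValueError(f"{state}D is not one of the predefined states")
    if state == 0:
        return "normal vision"
    kind = "myopia" if state < 0 else "presbyopia"
    # 1D corresponds to 100 degrees in the convention used by the text.
    return f"{kind}, {abs(state) * 100} degrees"


def classify(measured: float) -> int:
    """Map a measured diopter to a predefined state.

    Assumption: values are snapped to the nearest state, and values
    outside the stated range -3 <= x <= 2 are rejected.
    """
    if not -3 <= measured <= 2:
        raise ValueError(f"{measured}D is outside the supported range")
    return min(VISION_STATES_D, key=lambda s: abs(s - measured))


if __name__ == "__main__":
    state = classify(-2.2)
    print(state, describe(state))
```

The resulting state would then serve as the key for looking up the layout and color scheme constructed in the later steps.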

[0081] (2) Determine the interface layout suitable for people with various visual conditions.

[0082] In this embodiment, the interface is divided into three areas: Guidance Area 1, Prompt Area 2, and Selection Area 3; see Figure 4 for details. The guida...
