Method for implementing a simplified many-core Cache protocol without transverse (horizontal) consistency

A protocol implementation and consistency technique for high-performance computing. It addresses problems such as false sharing in Cache structures that share main memory, and its stated effects are reduced hardware overhead, improved write-back efficiency, and elimination of false sharing.
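To make the false-sharing problem concrete, the following minimal Python sketch models two cores that each hold a private cached copy of the same line and update disjoint bytes. It is an illustration under stated assumptions, not the patented hardware: the 8-byte line size and the names `write_back_full`, `write_back_masked` and `simulate` are hypothetical. Without horizontal (inter-core) consistency, writing back the whole line lets the second write-back overwrite the first core's update. Writing back only the bytes selected by a mask preserves both updates.

```python
LINE_SIZE = 8  # hypothetical line size in bytes, chosen only for illustration


def write_back_full(memory: bytearray, base: int, line: bytes) -> None:
    """Copy the entire cached line back to main memory."""
    memory[base:base + len(line)] = line


def write_back_masked(memory: bytearray, base: int, line: bytes, mask: list) -> None:
    """Copy back only the bytes whose mask entry is True."""
    for i, dirty in enumerate(mask):
        if dirty:
            memory[base + i] = line[i]


def simulate(masked: bool) -> bytes:
    memory = bytearray(LINE_SIZE)             # main memory, initially all zeros
    core_a = bytearray(memory[0:LINE_SIZE])   # each core loads its own private copy
    core_b = bytearray(memory[0:LINE_SIZE])
    core_a[0] = 0xAA                          # core A updates byte 0
    core_b[7] = 0xBB                          # core B updates byte 7 of the same line
    mask_a = [i == 0 for i in range(LINE_SIZE)]
    mask_b = [i == 7 for i in range(LINE_SIZE)]
    for line, mask in ((core_a, mask_a), (core_b, mask_b)):
        if masked:
            write_back_masked(memory, 0, line, mask)
        else:
            write_back_full(memory, 0, line)
    return bytes(memory)


print(simulate(masked=False).hex())  # 00000000000000bb (core A's update is lost)
print(simulate(masked=True).hex())   # aa000000000000bb (both updates survive)
```

Because no core ever invalidates or snoops another core's copy in this model, correctness rests entirely on each write-back touching only the data that core actually changed.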

Embodiment

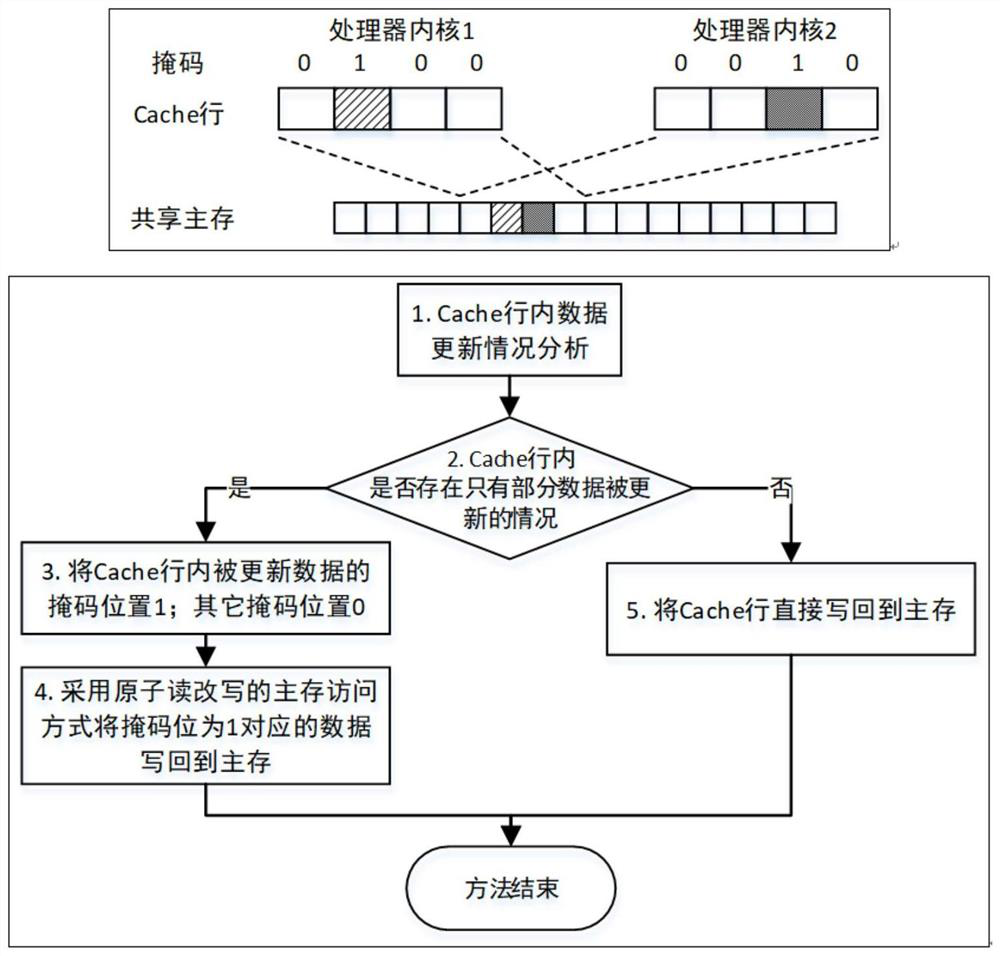

[0020] Embodiment: The present invention provides a method for implementing the many-core streamlined Cache protocol without horizontal consistency, specifically comprising the following steps:

[0021] S1. Read the status bits of the Cache line from the hardware Cache, determine which data in the Cache line have been updated, and mark the updated data;

[0022] S2. If none of the data in the Cache line has been updated, or all of it has been updated, jump to S5; if only part of the data in the Cache line has been updated, jump to S3;

[0023] S3. When only part of the data in a Cache line needs to be written back, determine the unit size and the number of units of that partial data, set the mask bits corresponding to that data to 1, and set all other mask bits to 0;

[0024] S4. According to the mask granularity and the mask settings, update in main memory the data whose mask bits are 1, as follows:

[0025] S4.1 According to the physical addr...
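The decision flow of steps S1 to S4 can be sketched in Python as below. This is a minimal software model under stated assumptions, not the patented hardware: the line is assumed to be 64 bytes, a per-unit dirty flag stands in for the Cache line status bits of S1, and the mask granularity (unit size) is a parameter. Because the excerpt does not describe S5 and truncates S4.1, the sketch only reports which branch applies and does not model address translation. All names (`CacheLine`, `classify`, `build_mask`, `masked_update`) are hypothetical.

```python
from dataclasses import dataclass, field

LINE_SIZE = 64  # assumed Cache line size in bytes (hypothetical)


@dataclass
class CacheLine:
    """Software model of one Cache line with one dirty flag per unit (assumption)."""
    base: int                      # starting address of the line in main memory
    unit: int = 4                  # mask granularity in bytes (assumption)
    data: bytearray = field(default_factory=lambda: bytearray(LINE_SIZE))
    dirty: list = field(init=False, default_factory=list)

    def __post_init__(self) -> None:
        if LINE_SIZE % self.unit:
            raise ValueError("unit size must divide the line size")
        self.dirty = [False] * (LINE_SIZE // self.unit)

    def write(self, offset: int, payload: bytes) -> None:
        """Store bytes into the line and mark every unit they touch (S1)."""
        if not payload:
            return
        if offset < 0 or offset + len(payload) > LINE_SIZE:
            raise ValueError("write crosses the line boundary")
        self.data[offset:offset + len(payload)] = payload
        first = offset // self.unit
        last = (offset + len(payload) - 1) // self.unit
        for u in range(first, last + 1):
            self.dirty[u] = True


def classify(line: CacheLine) -> str:
    """S2: choose the next step from the update state of the line."""
    if not any(line.dirty):
        return "S5 (no data updated)"
    if all(line.dirty):
        return "S5 (all data updated)"
    return "S3 (partial update)"


def build_mask(line: CacheLine) -> int:
    """S3: bit i is 1 if unit i must be written back, otherwise 0."""
    mask = 0
    for i, is_dirty in enumerate(line.dirty):
        if is_dirty:
            mask |= 1 << i
    return mask


def masked_update(memory: bytearray, line: CacheLine, mask: int) -> None:
    """S4: update in main memory only the units whose mask bit is 1."""
    for i in range(LINE_SIZE // line.unit):
        if (mask >> i) & 1:
            lo = i * line.unit
            memory[line.base + lo:line.base + lo + line.unit] = line.data[lo:lo + line.unit]


memory = bytearray(2 * LINE_SIZE)
line = CacheLine(base=LINE_SIZE, unit=4)
line.data[:] = memory[LINE_SIZE:2 * LINE_SIZE]  # load the line from main memory
line.write(5, b"\x11\x22")                      # bytes 5-6 fall inside unit 1
print(classify(line))                           # S3 (partial update)
mask = build_mask(line)
print(bin(mask))                                # 0b10
masked_update(memory, line, mask)
```

An integer bitmask is used here because it mirrors a hardware mask register: the number of mask bits is the line size divided by the unit size, so a finer granularity needs more mask bits but writes back fewer unchanged bytes.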
