39 patent results for "False sharing"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

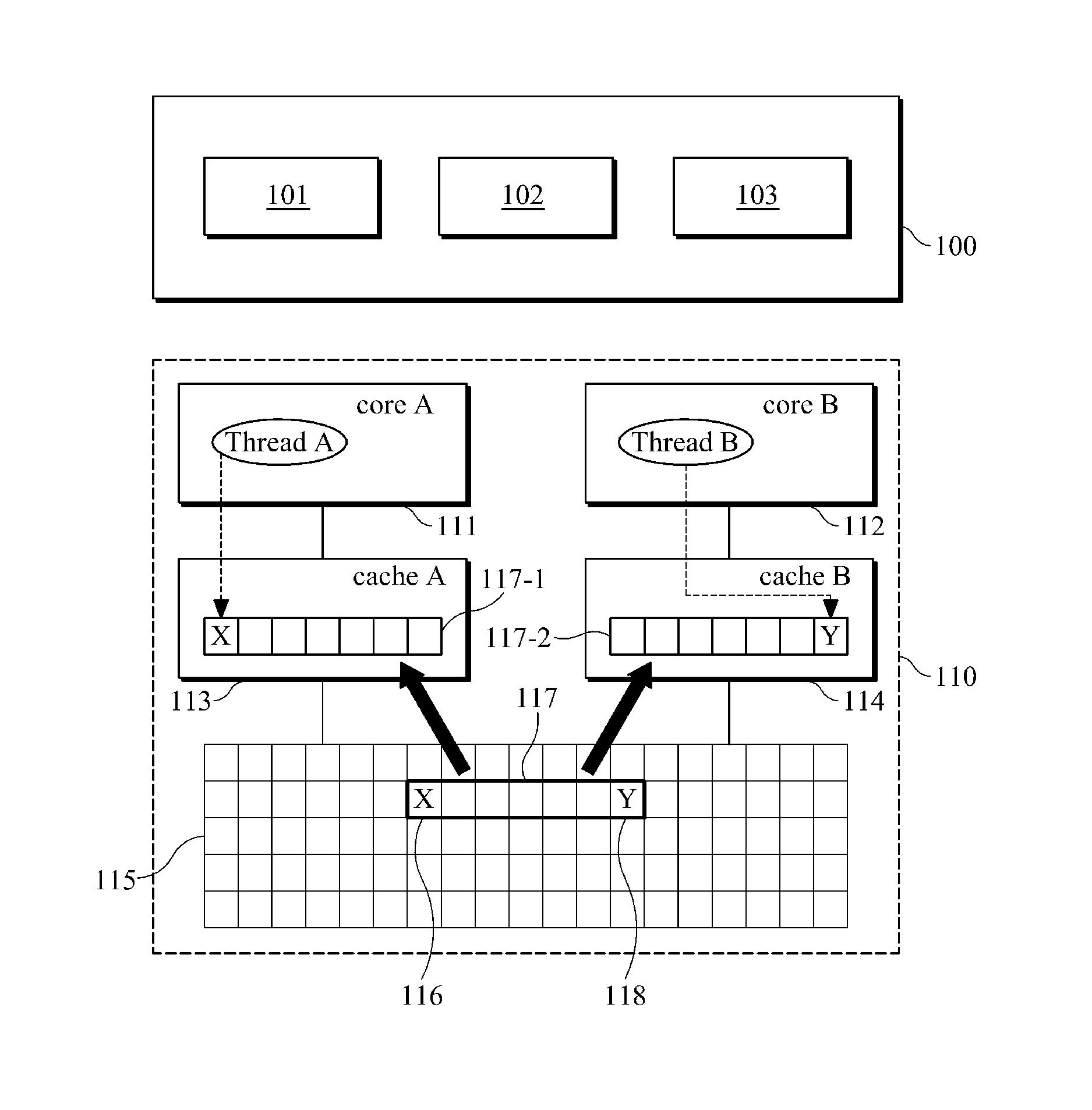

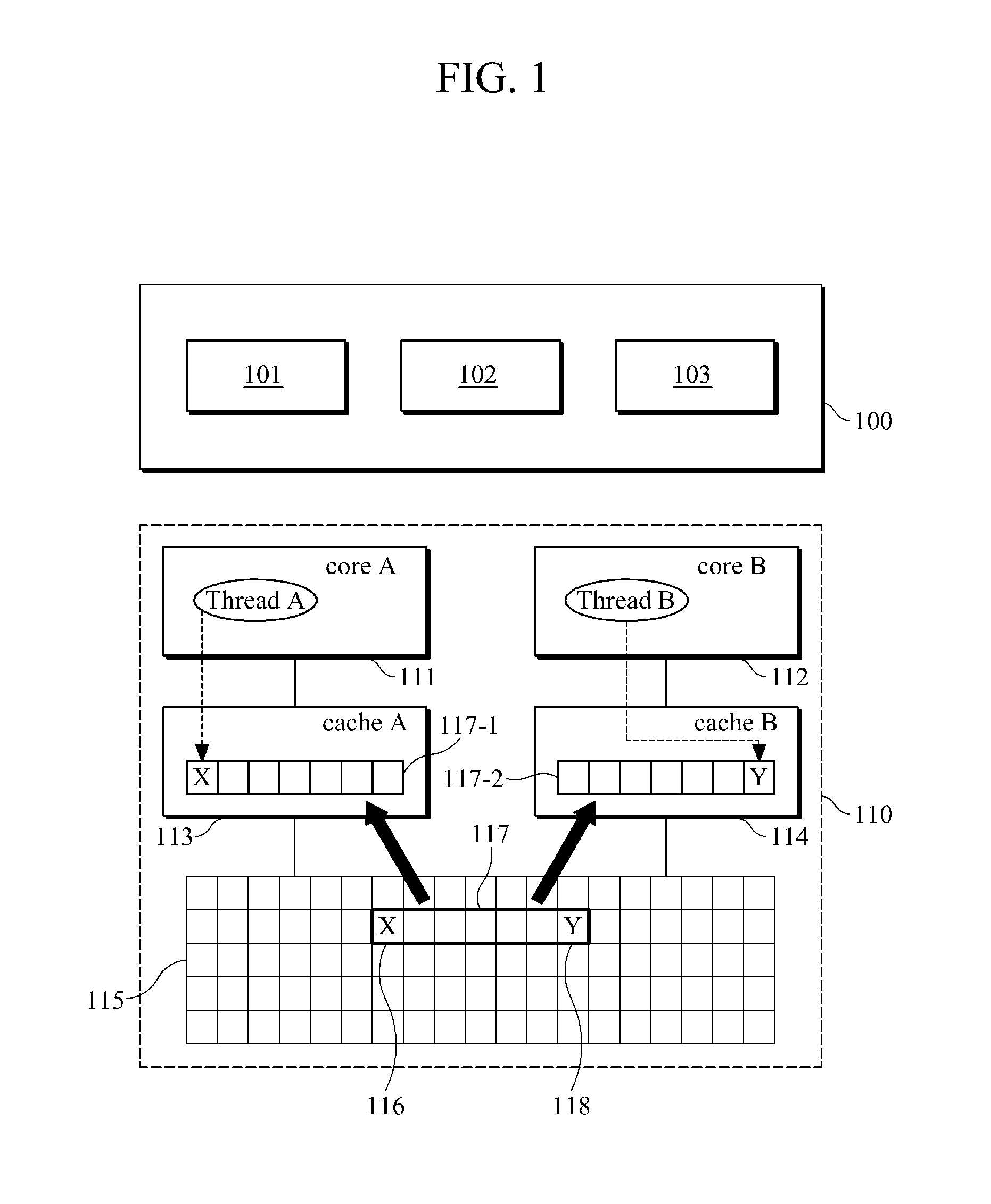

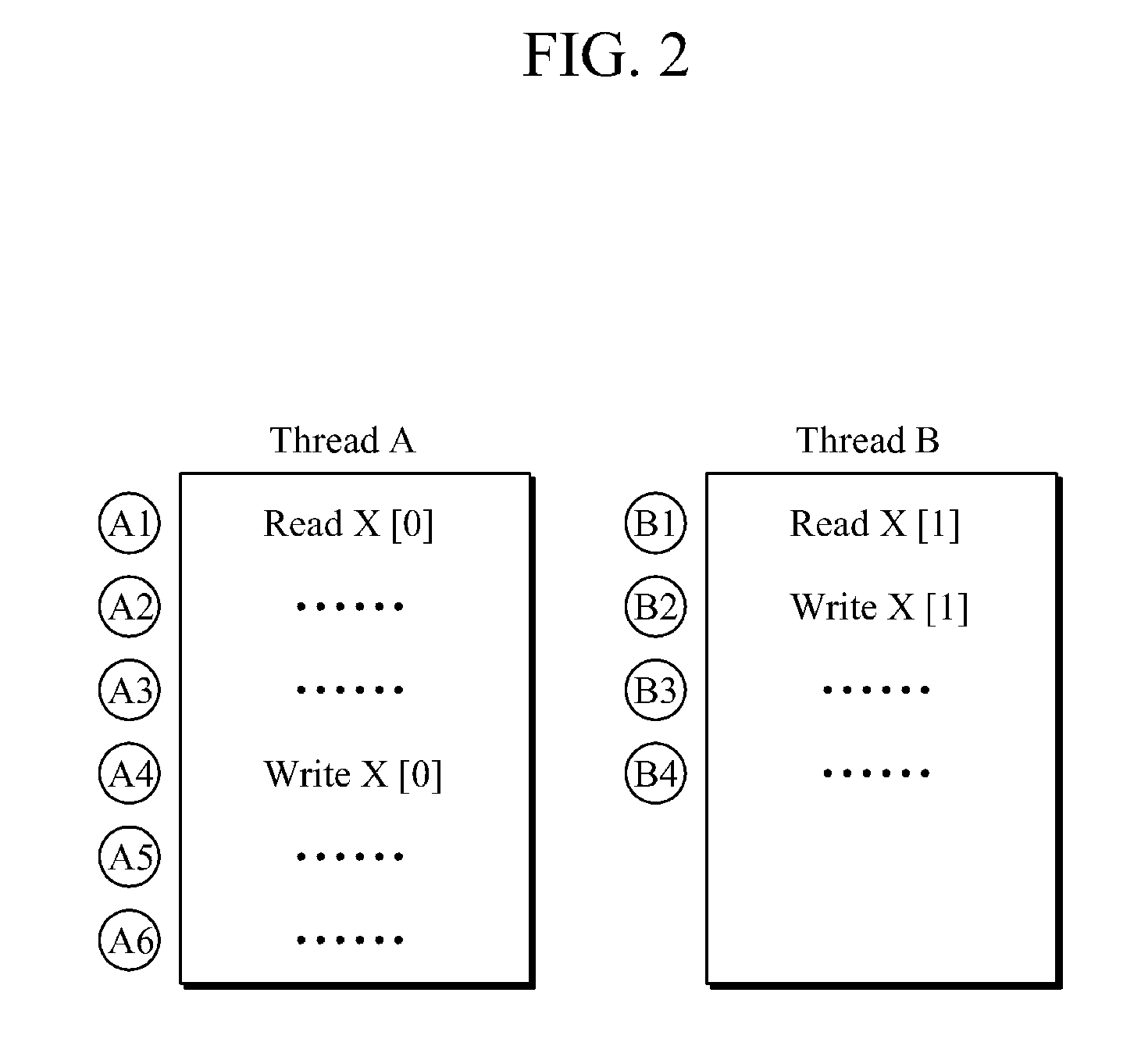

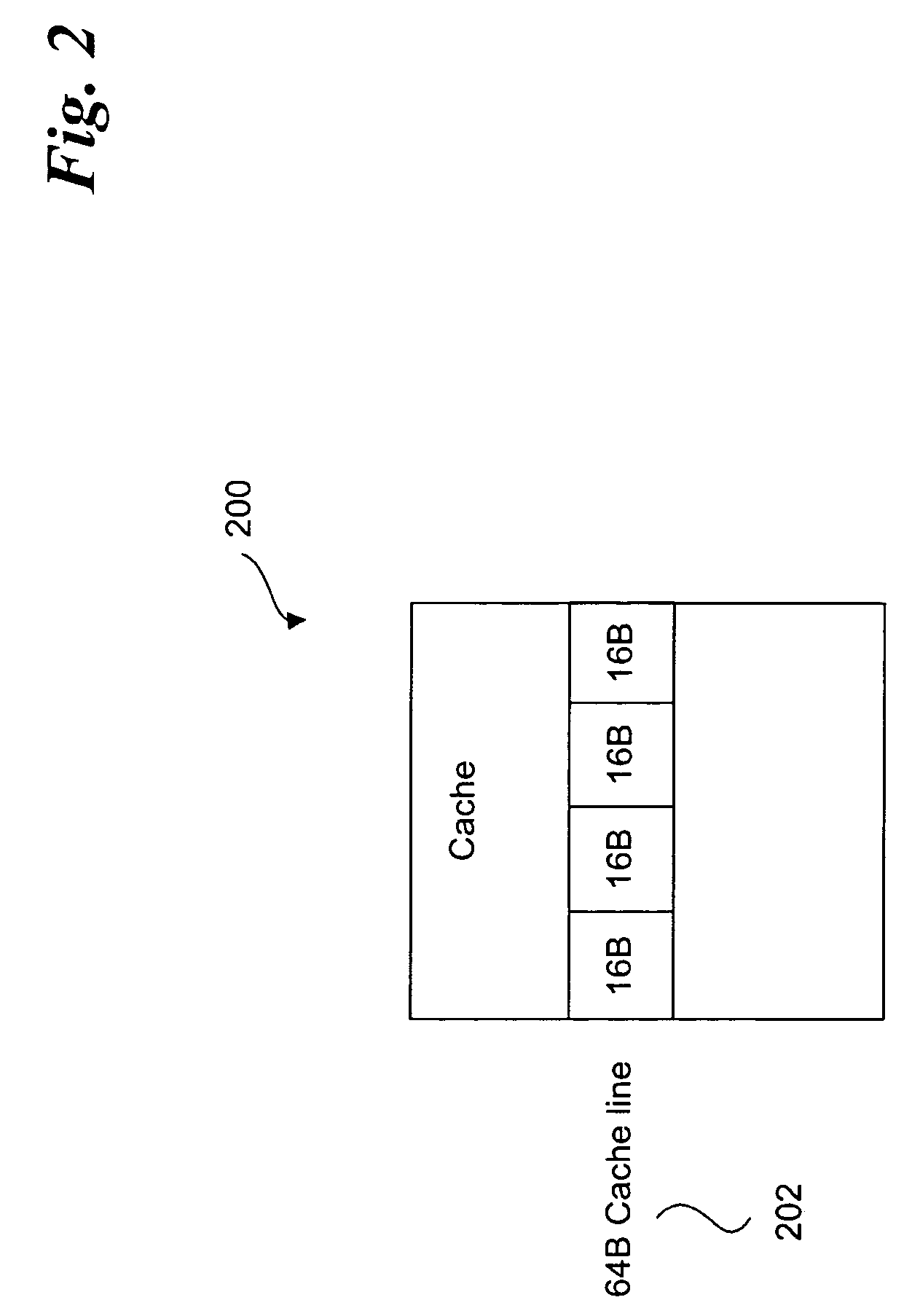

In computer science, false sharing is a performance-degrading usage pattern that can arise in systems with distributed, coherent caches at the size of the smallest resource block managed by the caching mechanism. When a system participant attempts to periodically access data that will never be altered by another party, but those data share a cache block with data that are altered, the caching protocol may force the first participant to reload the whole unit despite a lack of logical necessity. The caching system is unaware of activity within this block and forces the first participant to bear the caching system overhead required by true shared access of a resource.
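
As a concrete illustration of this definition, the sketch below uses Python's `ctypes` module to lay out two 8-byte counters the way a C compiler would, then reports which cache line each field lands on. The 64-byte line size is an assumption (common on current x86-64 and many ARM cores, but not universal), and the type and helper names are hypothetical.

```python
import ctypes

CACHE_LINE = 64  # assumed line size in bytes; verify for the target CPU

class Counters(ctypes.Structure):
    # Two 8-byte counters, intended to be updated by different threads,
    # laid out back to back as a C compiler would place them.
    _fields_ = [("a", ctypes.c_uint64), ("b", ctypes.c_uint64)]

class PaddedCounters(ctypes.Structure):
    # Same counters with filler bytes so that "b" starts one line after "a".
    _fields_ = [
        ("a", ctypes.c_uint64),
        ("_pad", ctypes.c_uint8 * (CACHE_LINE - ctypes.sizeof(ctypes.c_uint64))),
        ("b", ctypes.c_uint64),
    ]

def line_of(struct_type, field):
    """Cache-line index of a field, assuming the struct starts on a line boundary."""
    return getattr(struct_type, field).offset // CACHE_LINE

def share_cache_line(struct_type, f1, f2):
    """True if two fields occupy the same cache line (a false-sharing risk
    when different threads write them)."""
    return line_of(struct_type, f1) == line_of(struct_type, f2)

print(share_cache_line(Counters, "a", "b"))        # True: offsets 0 and 8
print(share_cache_line(PaddedCounters, "a", "b"))  # False: offsets 0 and 64
```

In C11 or C++11 code the same separation is usually expressed with an alignment specifier (`_Alignas(64)` or `alignas(64)`) on each independently written field rather than a hand-sized pad; C++17 also offers `std::hardware_destructive_interference_size` as a portable hint for the padding size.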

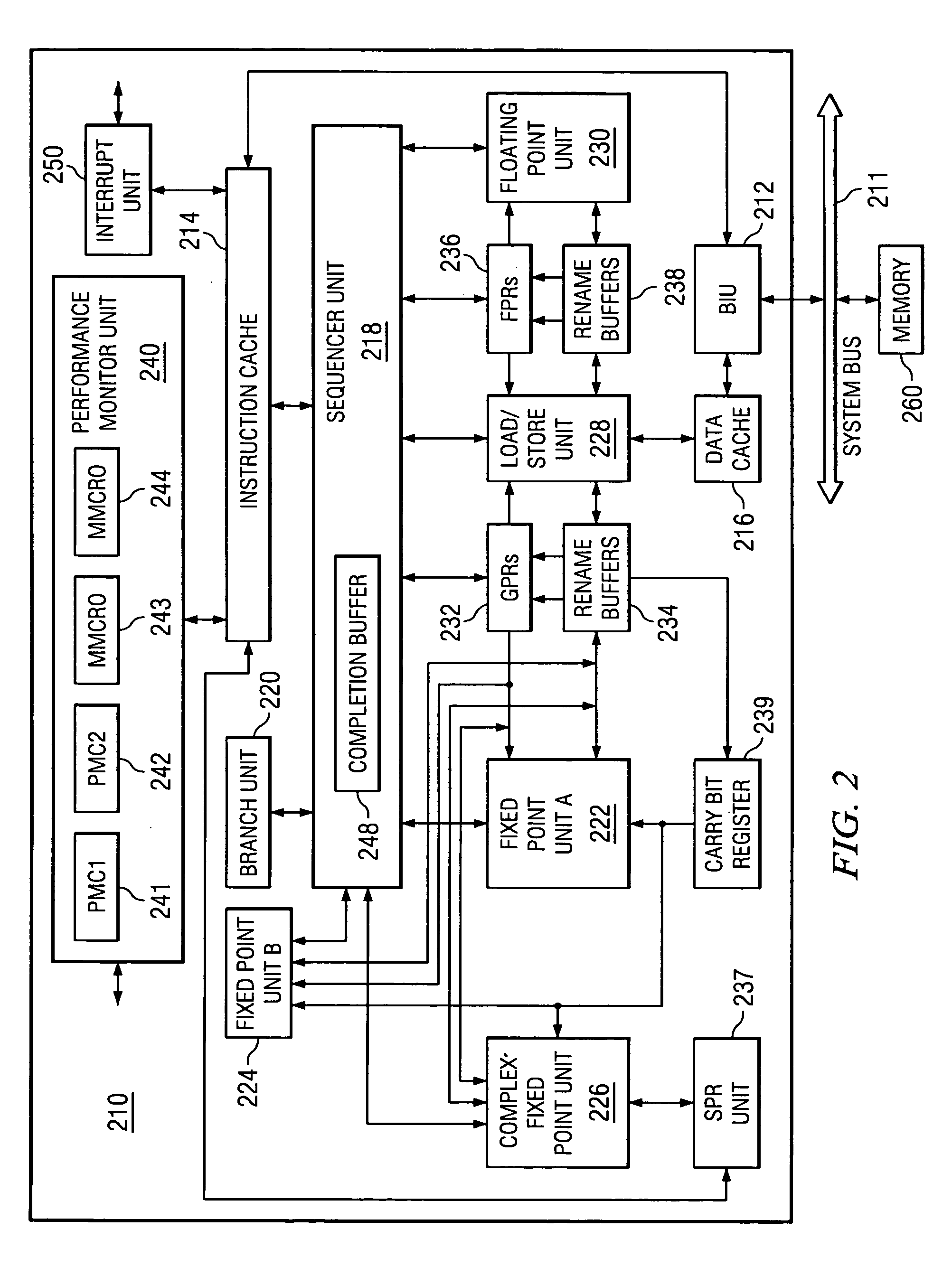

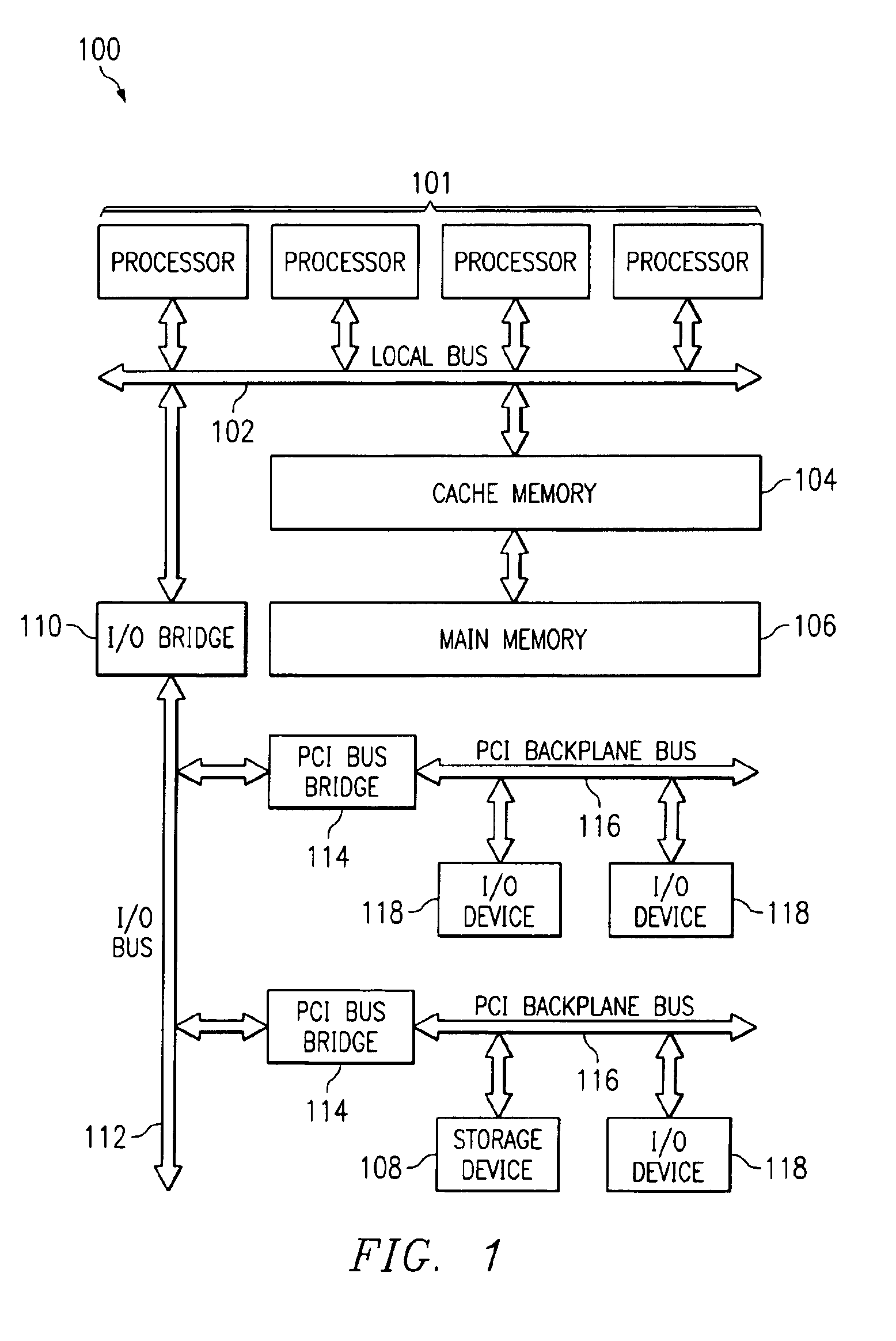

Method and apparatus for autonomically moving cache entries to dedicated storage when false cache line sharing is detected

Inactive | US7114036B2 | Improve performance; Memory addressing/allocation/relocation; Digital computer details; Data processing system; Processing Instruction

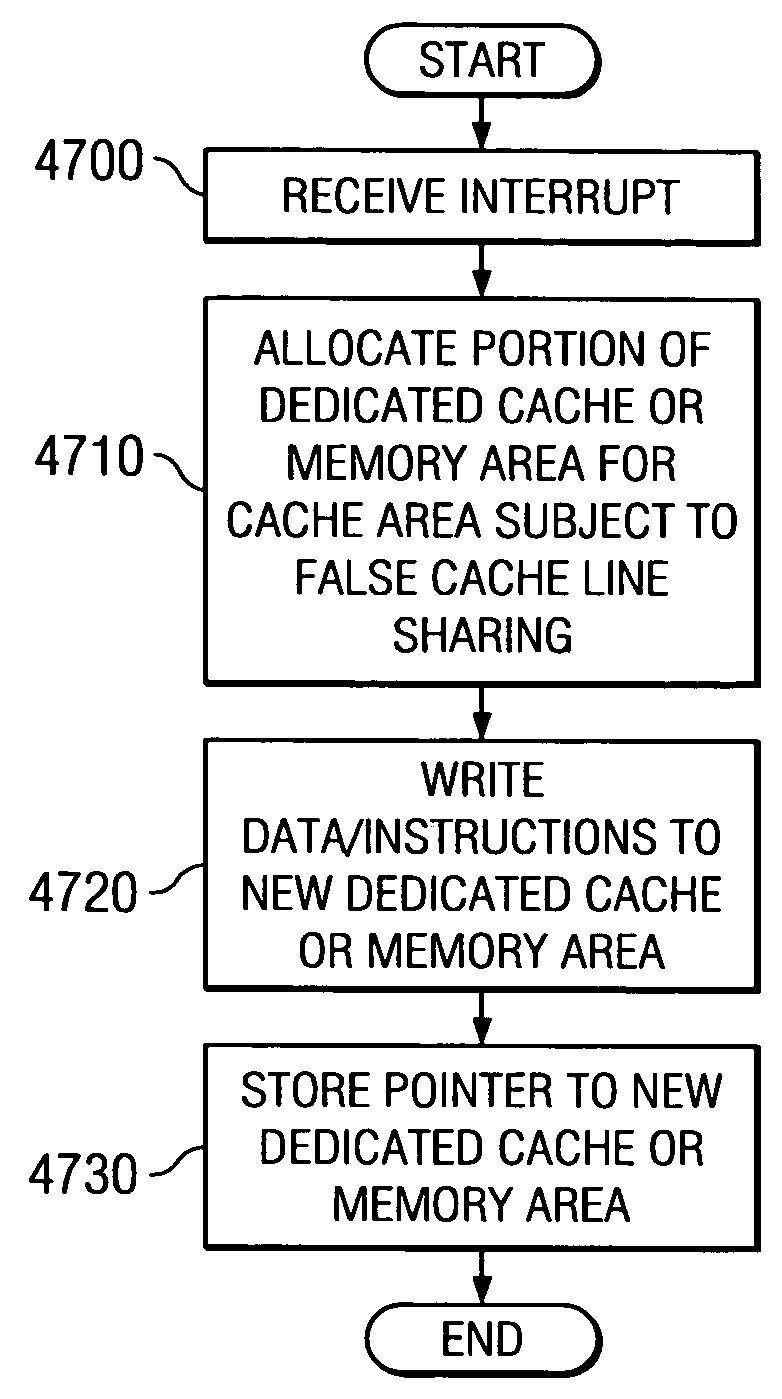

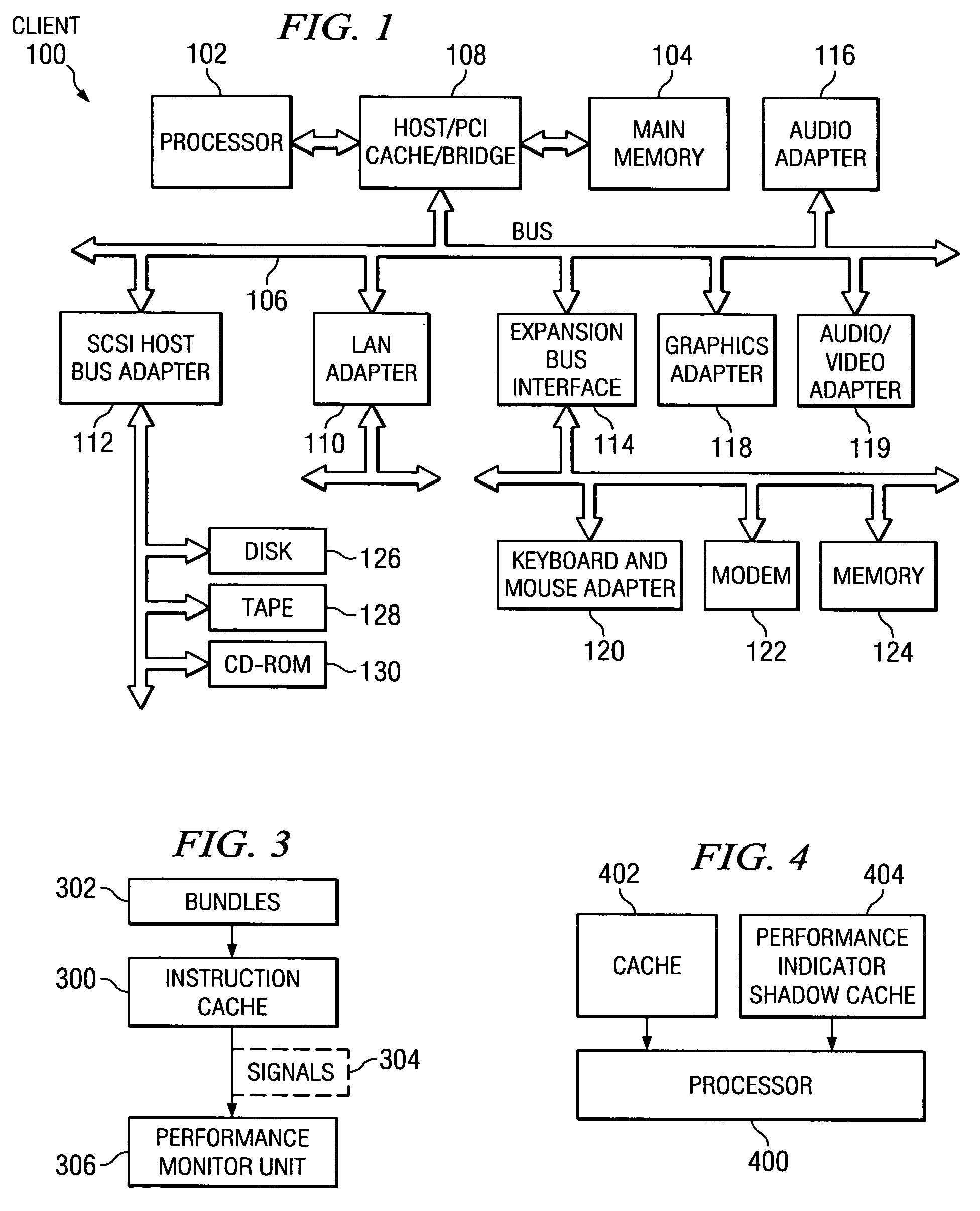

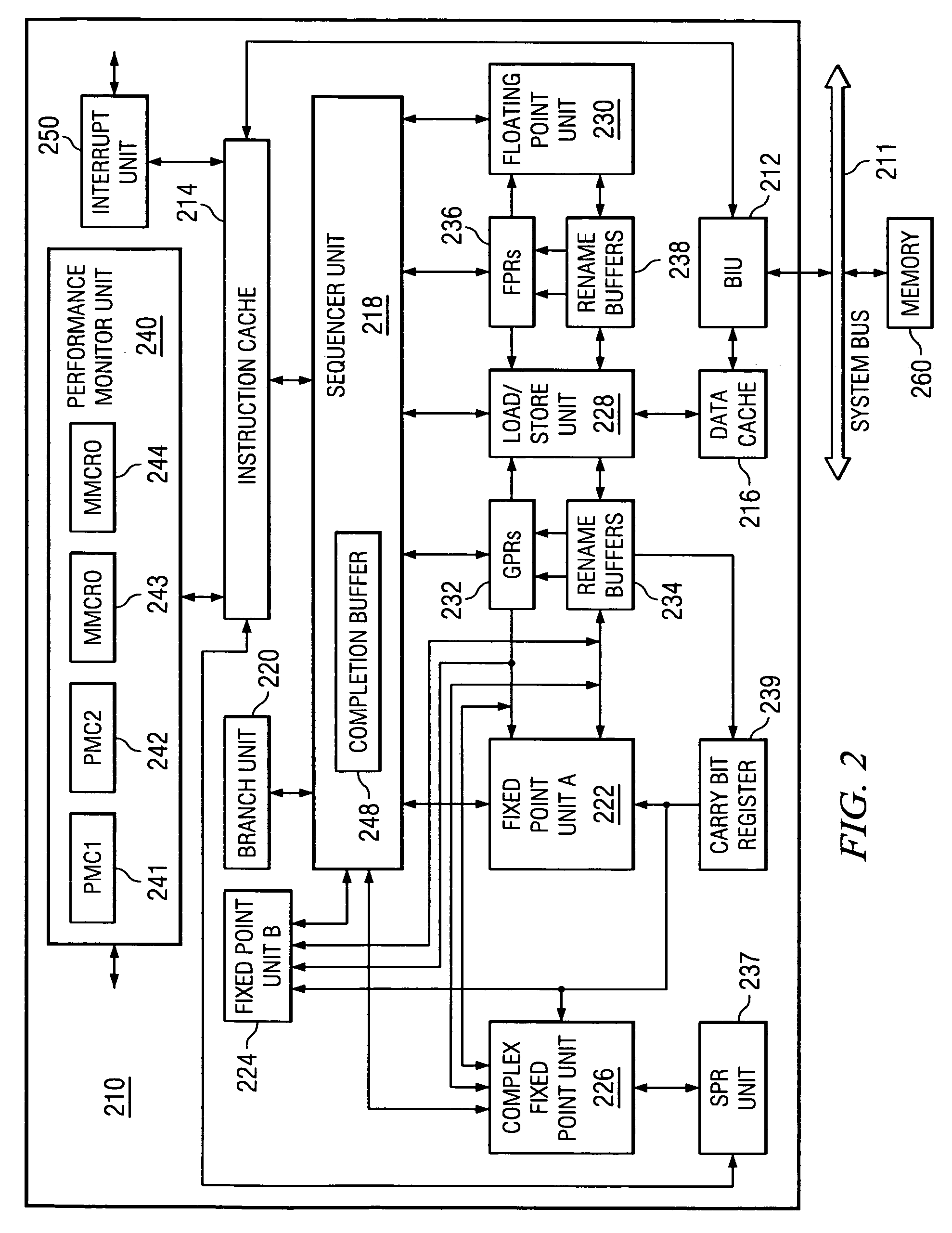

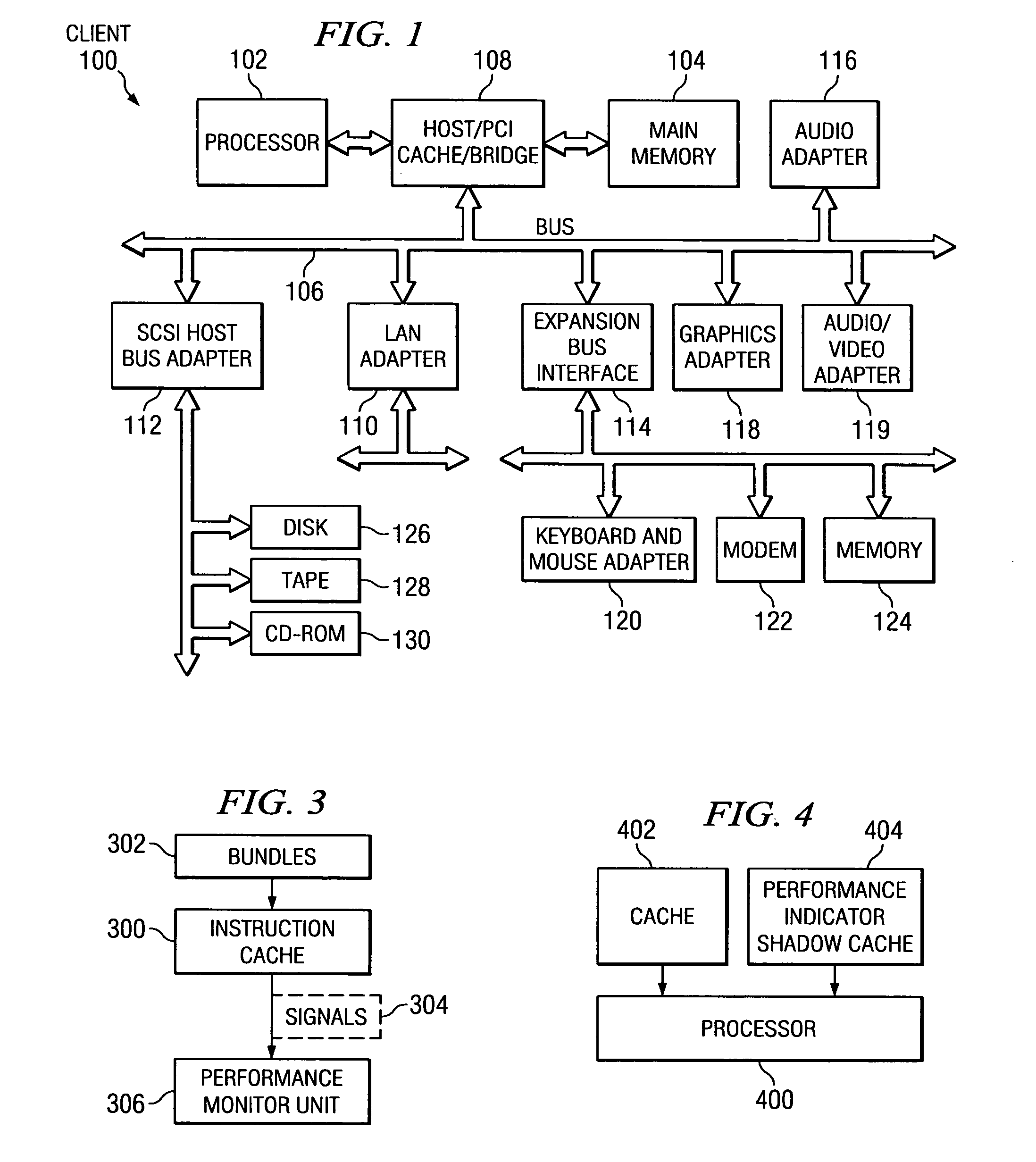

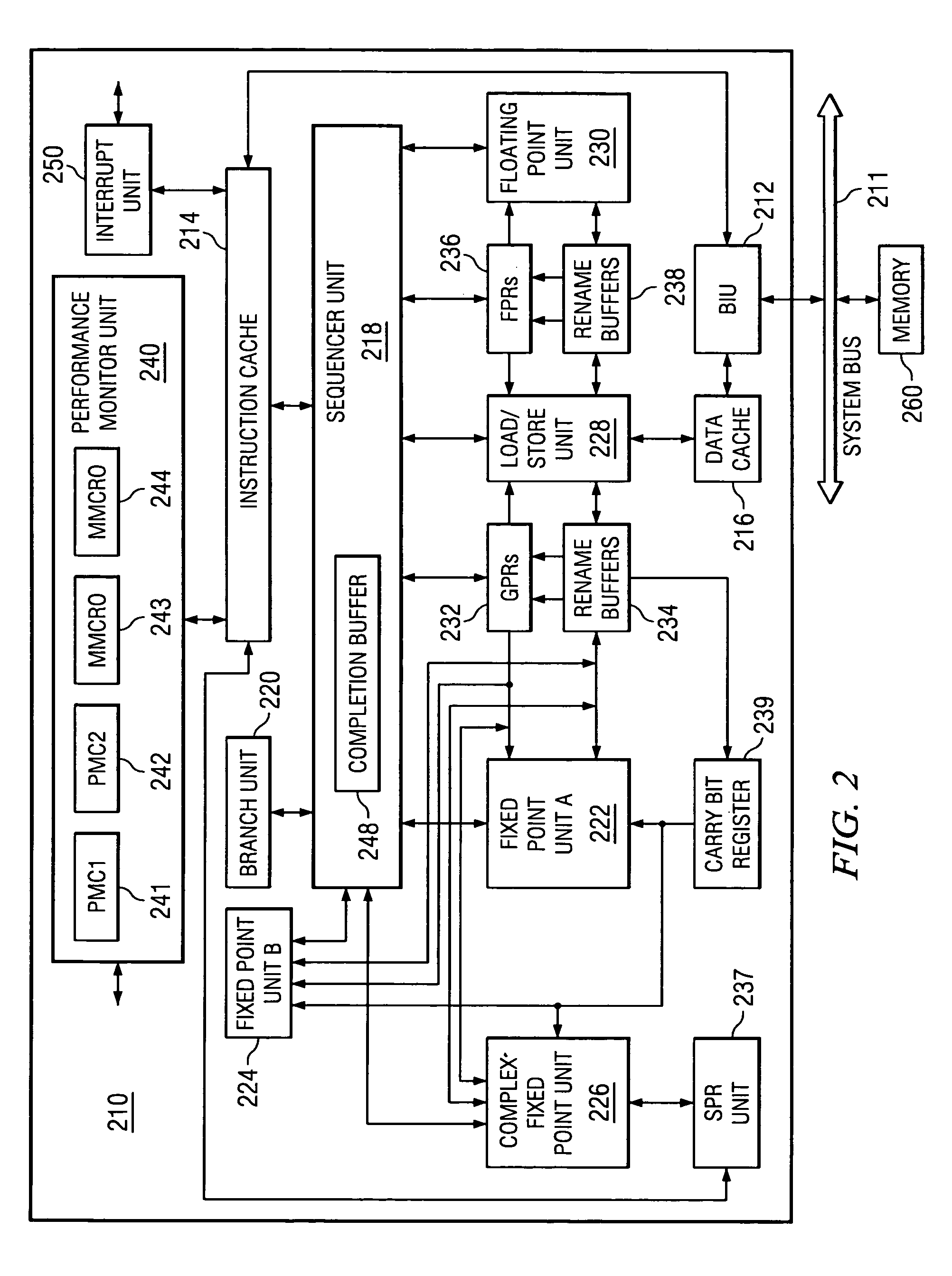

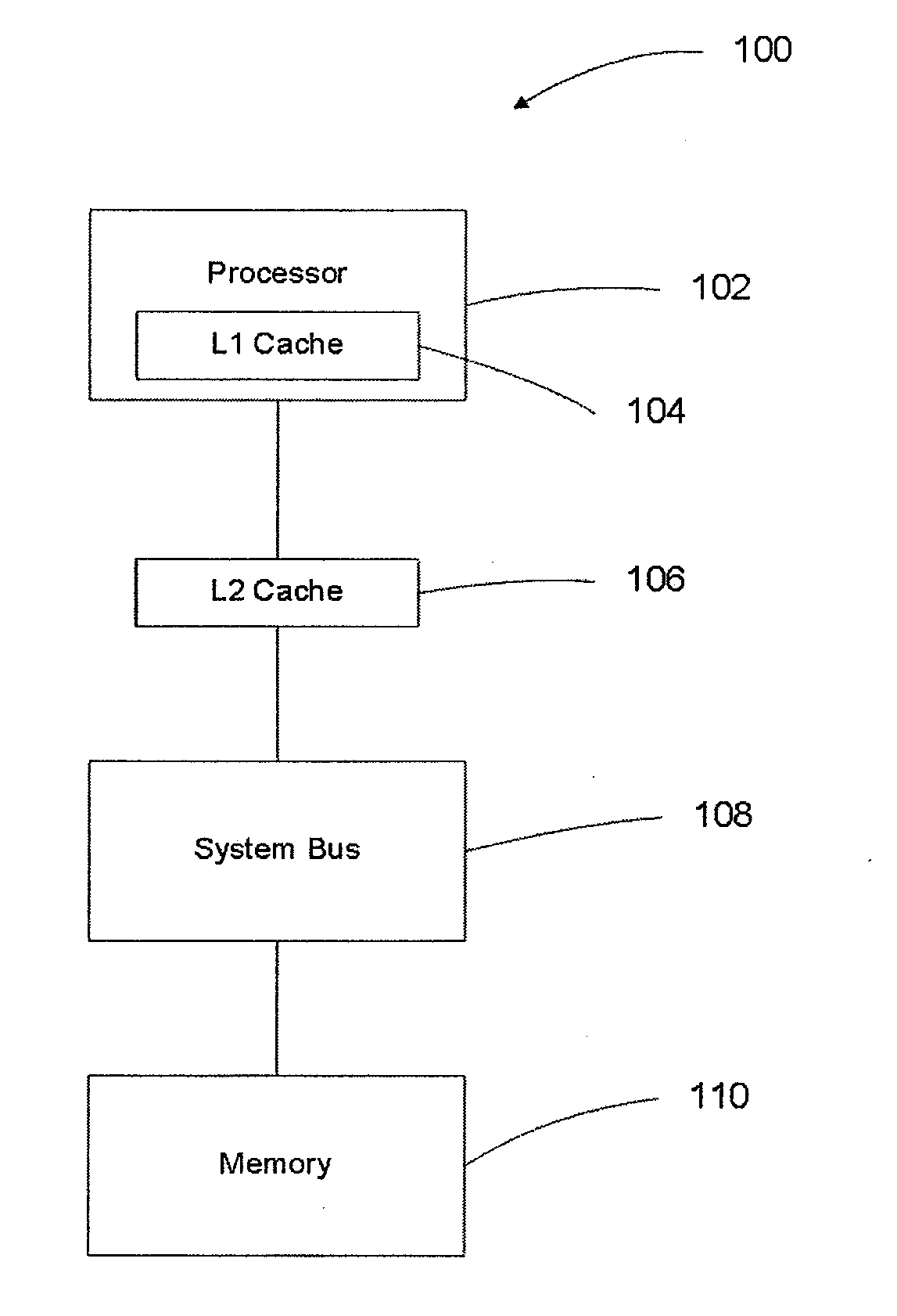

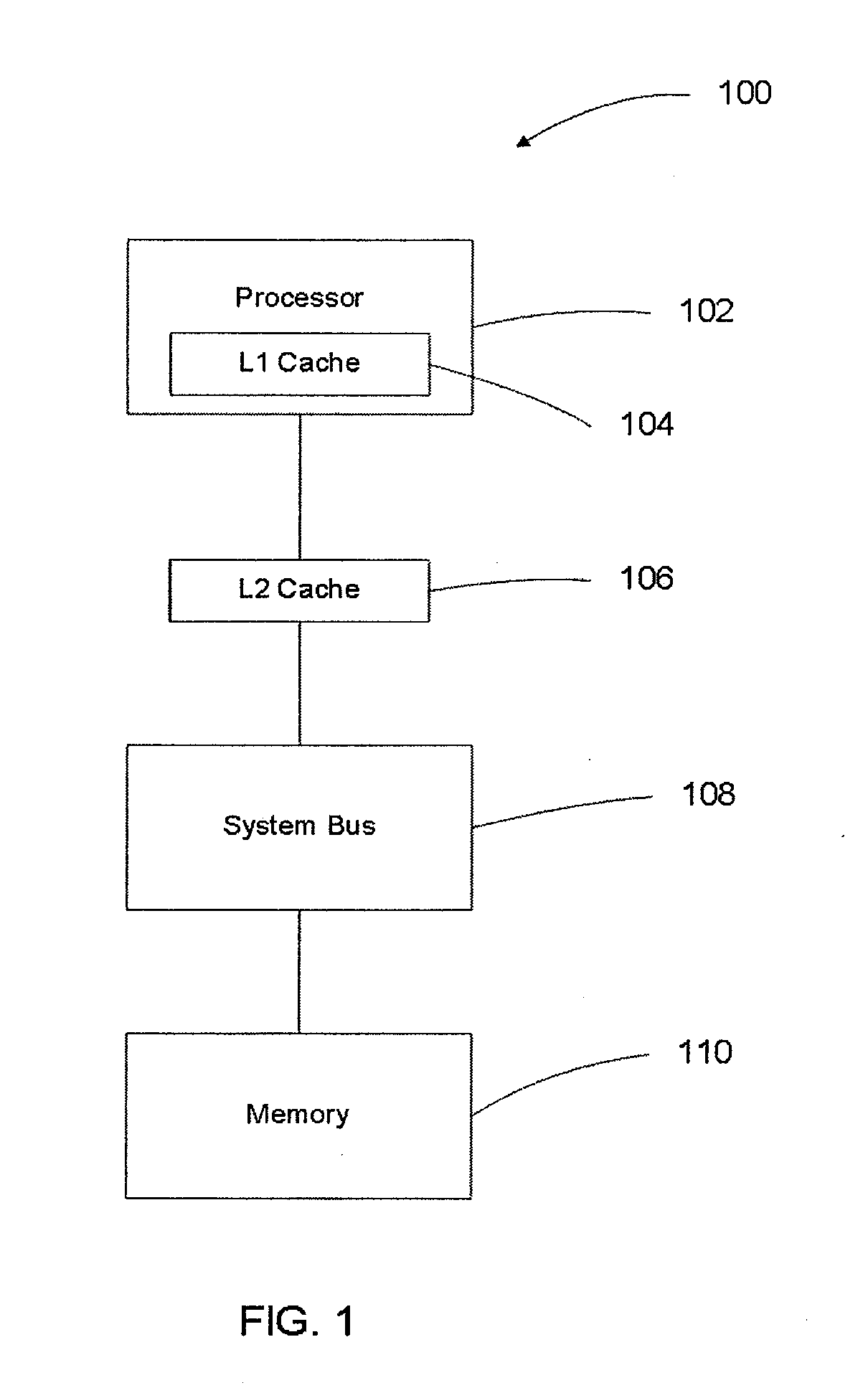

A method, apparatus, and computer instructions in a data processing system for processing instructions are provided. Instructions are received at a processor in the data processing system. If a selected indicator is associated with the instruction, counting of each event associated with the execution of the instruction is enabled. In some embodiments, when it is determined that a cache line is being falsely shared using the performance indicators and counters, an interrupt may be generated and sent to a performance monitoring application. An interrupt handler of the performance monitoring application will recognize this interrupt as indicating false sharing of a cache line. Rather than reloading the cache line in a normal fashion, the data or instructions being accessed may be written to a separate area of cache or memory area dedicated to false cache line sharing data. The code may then be modified by inserting a pointer to this new area of cache or memory. Thus, when the code again attempts to access this area of the cache, the access is redirected to the new cache or memory area rather than to the previous area of the cache that was subject to false sharing. In this way, reloads of the cache line may be avoided.

Owner:IBM CORP

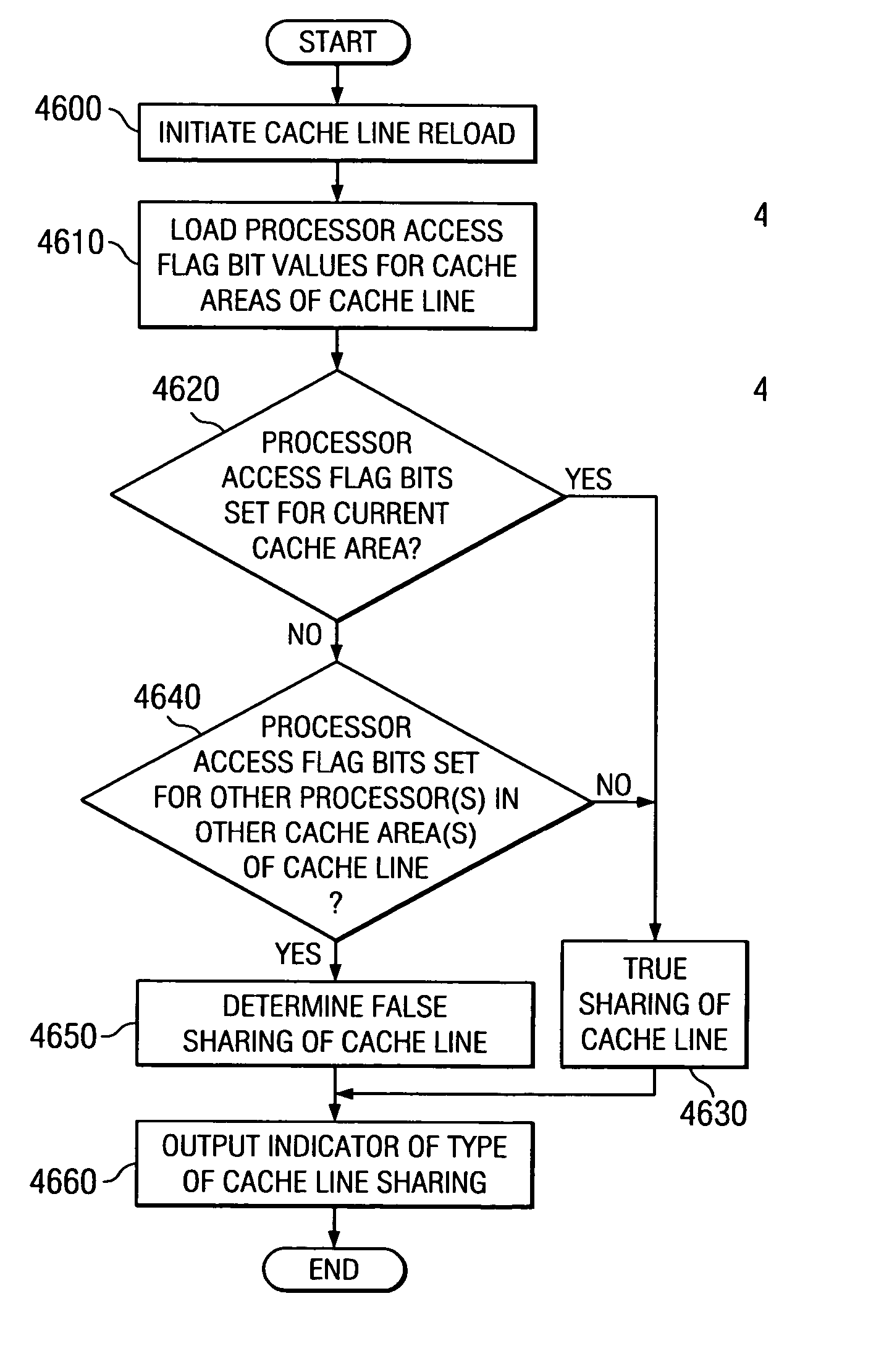

Method and apparatus for identifying false cache line sharing

Inactive | US7093081B2 | Improve performance; Memory addressing/allocation/relocation; Data processing system; Processing Instruction

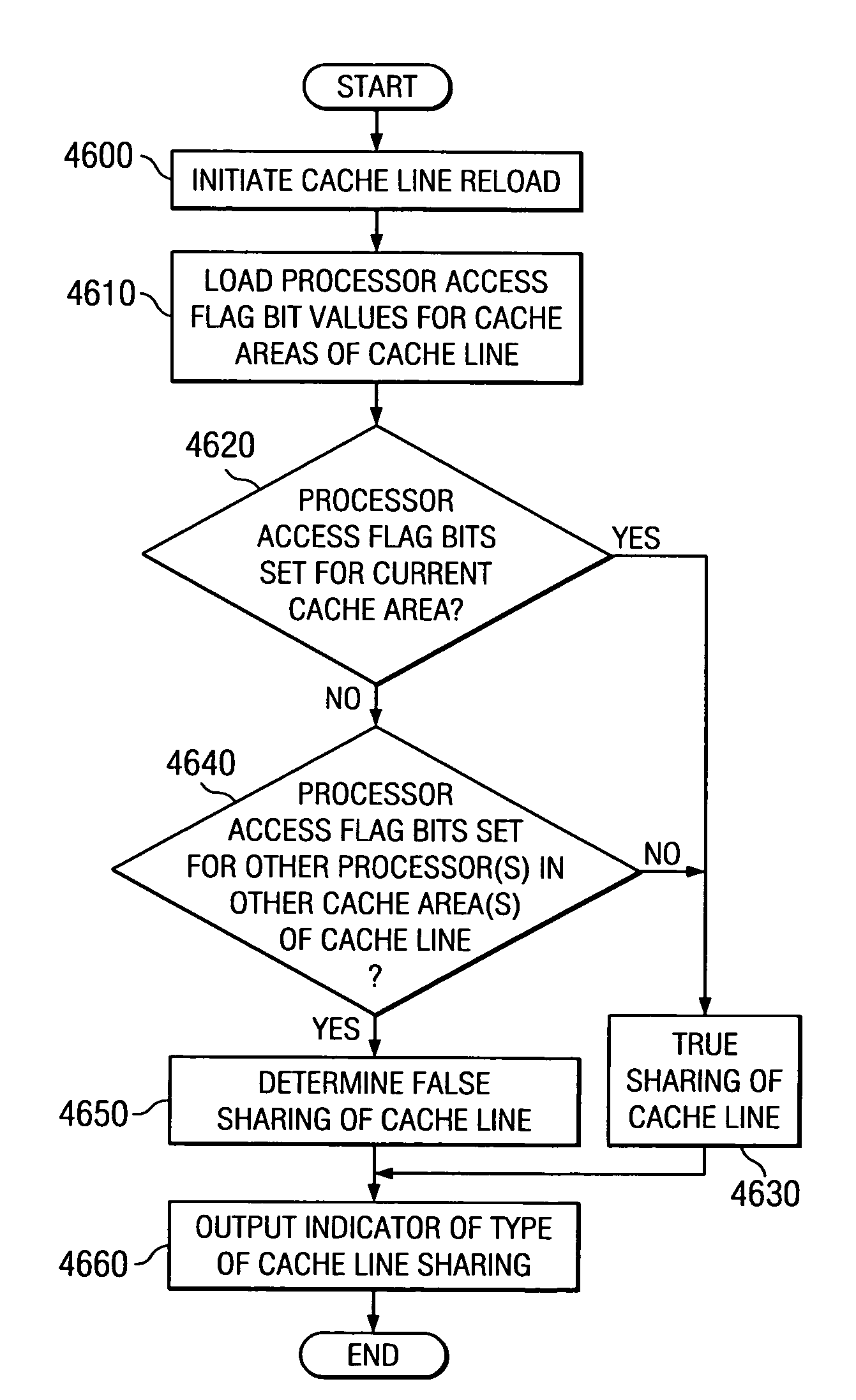

A method, apparatus, and computer instructions in a data processing system for processing instructions are provided. Instructions are received at a processor in the data processing system. If a selected indicator is associated with the instruction, counting of each event associated with the execution of the instruction is enabled. In some embodiments, the performance indicators may be utilized to obtain information regarding the nature of the cache hits and reloads of cache lines within the instruction or data cache. These embodiments may be used to determine whether processors of a multiprocessor system, such as a symmetric multiprocessor (SMP) system, are truly sharing a cache line or if there is false sharing of a cache line. This determination may then be used as a means for determining how to better store the instructions / data of the cache line to prevent false sharing of the cache line.

Owner:IBM CORP
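
The true-versus-false classification described in this abstract can be illustrated offline. The sketch below is not IBM's hardware mechanism, which relies on performance indicators and counters; it replays a recorded access trace under a simplified invalidation-based coherence model and labels each coherence miss as true or false sharing, depending on whether the reloading processor touches any byte that another processor actually stored. `Access`, `classify_misses`, and the 64-byte line size are assumptions for illustration.

```python
from dataclasses import dataclass

LINE = 64  # assumed cache-line size in bytes

@dataclass(frozen=True)
class Access:
    cpu: int      # processor issuing the access
    addr: int     # starting byte address
    size: int     # number of bytes touched
    write: bool   # True for stores

def classify_misses(trace):
    """Label each coherence miss in a trace as true or false sharing.

    Simplified model: a store by one CPU invalidates the line in every other
    CPU holding it. The invalidated CPU's next access to that line is a
    coherence miss; it is "true" sharing if it touches a byte stored since the
    invalidation, and "false" sharing otherwise.
    """
    holders = {}  # line -> CPUs holding a valid copy
    stale = {}    # line -> {cpu: bytes stored by others since cpu lost the line}
    labels = []
    for i, acc in enumerate(trace):
        line = acc.addr // LINE
        touched = set(range(acc.addr, acc.addr + acc.size))
        pending = stale.setdefault(line, {})
        if acc.cpu in pending:
            changed = pending.pop(acc.cpu)
            labels.append((i, "true" if touched & changed else "false"))
        cpus = holders.setdefault(line, set())
        cpus.add(acc.cpu)
        if acc.write:
            for other in cpus - {acc.cpu}:
                pending.setdefault(other, set())  # other CPUs lose their copy
            for changed in pending.values():
                changed.update(touched)           # bytes they will refetch
            holders[line] = {acc.cpu}
    return labels

trace = [
    Access(cpu=0, addr=0, size=8, write=False),
    Access(cpu=1, addr=8, size=8, write=True),   # stores to the other half of line 0
    Access(cpu=0, addr=0, size=8, write=False),  # miss, but bytes 0-7 unchanged
    Access(cpu=1, addr=0, size=8, write=True),
    Access(cpu=0, addr=0, size=8, write=False),  # miss on bytes cpu 1 changed
]
print(classify_misses(trace))  # [(2, 'false'), (4, 'true')]
```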

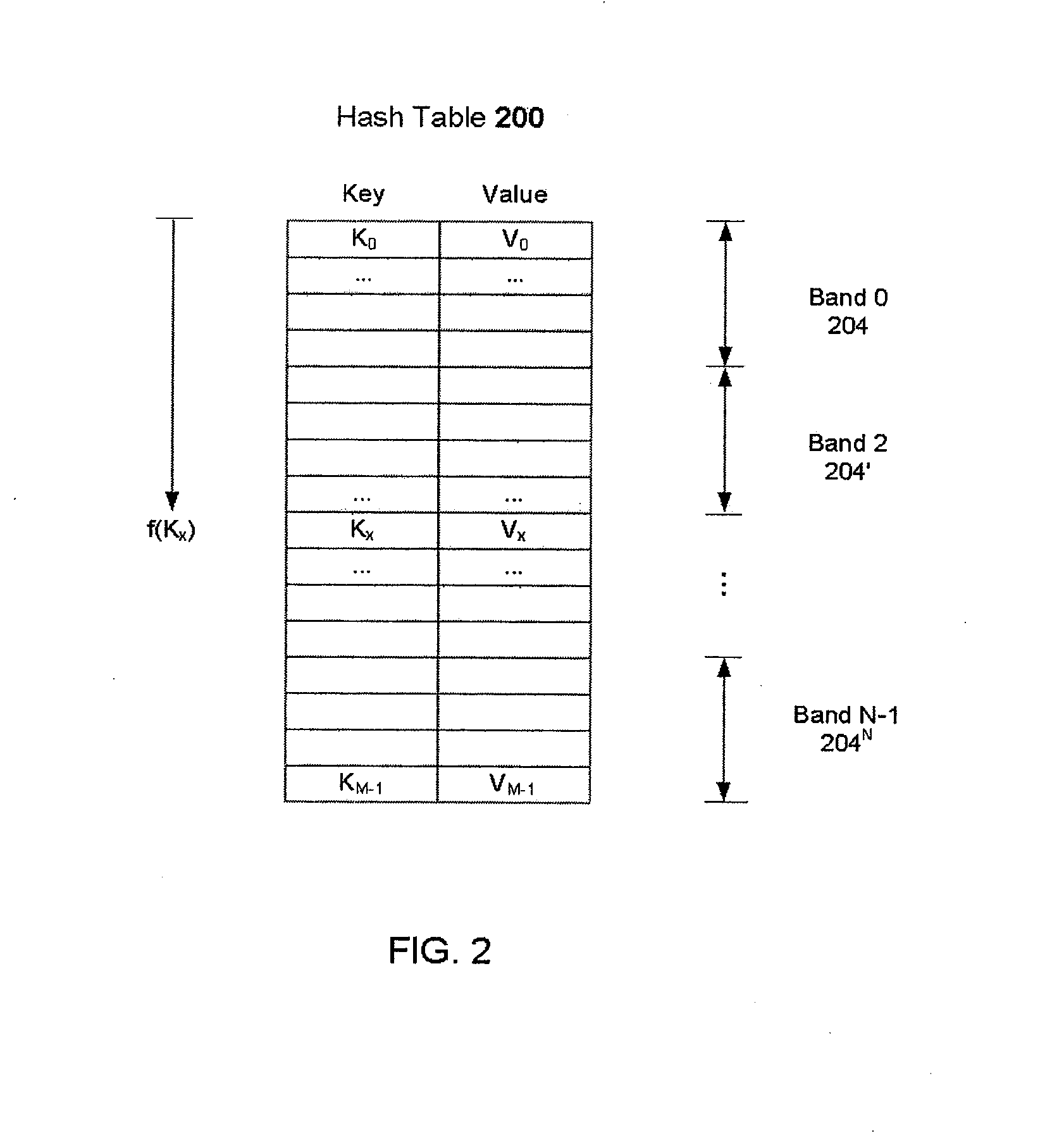

Hash table operations with improved cache utilization

Inactive | US20080215849A1 | Improve cache utilization; Improve locality; Digital data information retrieval; Special data processing applications; General purpose; False sharing

Method and apparatus for building large memory-resident hash tables on general purpose processors. The hash table is broken into bands that are small enough to fit within the processor cache. A log is associated with each band and updates to the hash table are written to the appropriate memory-resident log rather than being directly applied to the hash table. When a log is sufficiently full, updates from the log are applied to the hash table, ensuring good cache reuse by virtue of false sharing of cache lines. Despite the increased overhead in writing and reading the logs, overall performance is improved due to improved cache line reuse.

Owner:CERTEON
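
The band-and-log scheme can be sketched in Python to show the data flow only (Python cannot demonstrate the cache effects themselves). Updates are appended to the log of the band the key hashes to, and a band's log is replayed into that band in one pass once it reaches a threshold. The class name, the parameters `band_slots` and `log_limit`, separate chaining, and flushing a band before a lookup are all assumptions, not details from the abstract.

```python
class BandedHashTable:
    """Hash table split into bands, each with a deferred update log (sketch).

    band_slots would be chosen so one band fits in the processor cache;
    log_limit is the hypothetical "sufficiently full" threshold.
    """

    def __init__(self, num_bands=4, band_slots=1024, log_limit=256):
        self.num_bands = num_bands
        self.band_slots = band_slots
        self.log_limit = log_limit
        self.bands = [[[] for _ in range(band_slots)] for _ in range(num_bands)]
        self.logs = [[] for _ in range(num_bands)]

    def _locate(self, key):
        h = hash(key)
        return h % self.num_bands, (h // self.num_bands) % self.band_slots

    def put(self, key, value):
        band, _ = self._locate(key)
        self.logs[band].append((key, value))   # defer the random-access write
        if len(self.logs[band]) >= self.log_limit:
            self.flush(band)

    def flush(self, band):
        # Replay every logged update for one band while that band is cache-hot.
        table = self.bands[band]
        for key, value in self.logs[band]:
            _, slot = self._locate(key)
            bucket = table[slot]
            for i, (k, _) in enumerate(bucket):
                if k == key:
                    bucket[i] = (key, value)   # later updates overwrite earlier ones
                    break
            else:
                bucket.append((key, value))
        self.logs[band].clear()

    def get(self, key):
        band, slot = self._locate(key)
        self.flush(band)  # make pending updates visible before reading
        for k, v in self.bands[band][slot]:
            if k == key:
                return v
        return None
```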

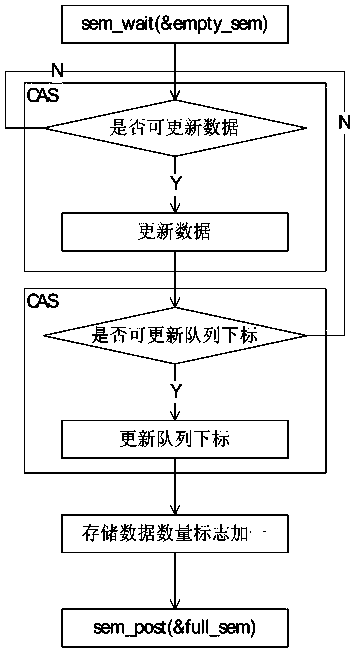

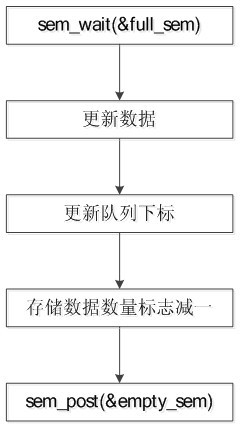

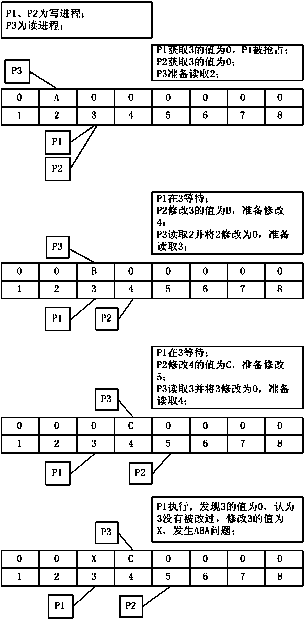

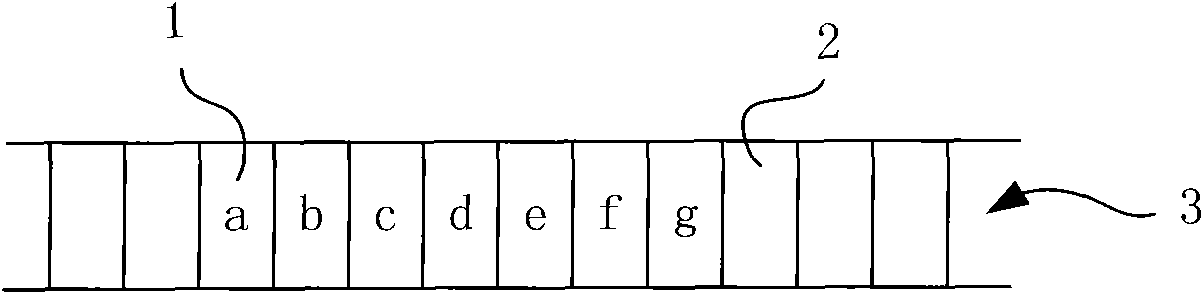

Method for implementing a lock-free concurrent message processing mechanism

Inactive | CN104077113A | Guaranteed atomicity; Improve efficiency; Concurrent instruction execution; Computer hardware; Message processing

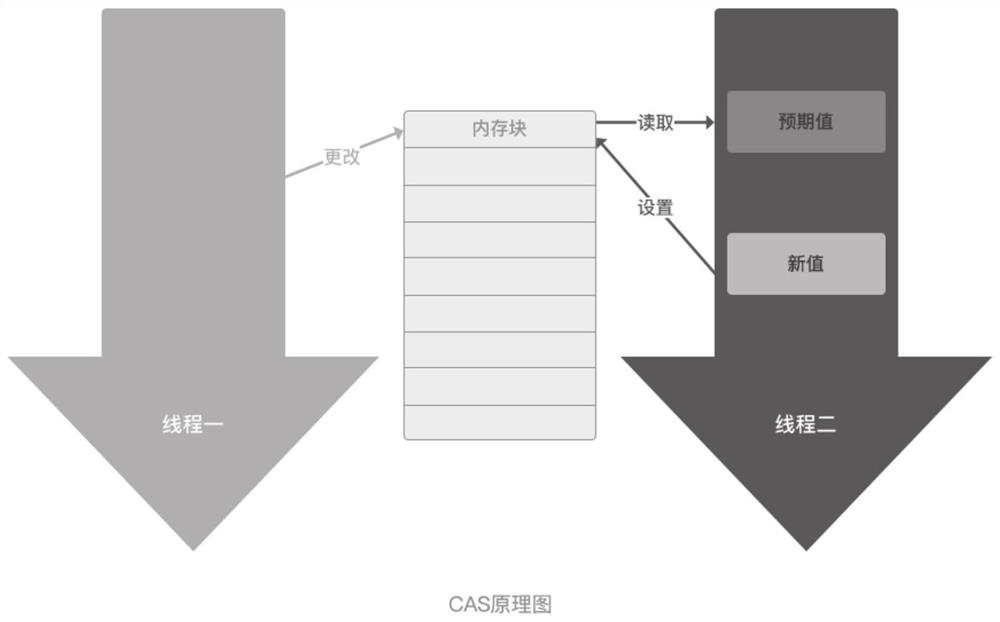

The invention discloses a method for implementing a lock-free concurrent message processing mechanism. A circular (ring) array is used as the data buffer, which facilitates caching and prefetching and avoids the linked-list drawback of allocating or freeing memory on every node operation, improving efficiency. To handle concurrency control in the multiple-producer, single-consumer case, CAS and memory barriers are used to guarantee mutual exclusion instead of locks, avoiding the performance degradation caused by inefficient locking. To address the ABA problem common in lock-free techniques, a "double-insurance" CAS technique is used to prevent ABA from occurring. To address false sharing, cache-line padding is inserted between the head pointer, the tail pointer, and the capacity field, which would otherwise sit in the same cache line. In addition, the array length is set to a power of two, so the array subscript can be obtained with a bitwise AND, further improving overall efficiency.

Owner:CSIC WUHAN LINCOM ELECTRONICS
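
The power-of-two indexing in this abstract can be shown with a minimal, single-threaded ring buffer. Python has no compare-and-swap or memory barriers, so this sketch deliberately omits the multiple-producer CAS protocol, the ABA protection, and the cache-line padding between `head`, `tail`, and capacity; it only shows why a power-of-two length lets `index & mask` replace `index % capacity`. The class and method names are hypothetical.

```python
class RingBuffer:
    """Fixed-size ring buffer indexed with a bit mask (single-threaded sketch).

    capacity must be a power of two, so `index & mask` equals
    `index % capacity`. head and tail only ever increase; they are masked
    when used as array subscripts.
    """

    def __init__(self, capacity):
        if capacity <= 0 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self.capacity = capacity
        self.mask = capacity - 1
        self.slots = [None] * capacity
        self.head = 0   # next position to read (consumer side)
        self.tail = 0   # next position to write (producer side)

    def offer(self, item):
        if self.tail - self.head == self.capacity:
            return False                        # full
        self.slots[self.tail & self.mask] = item
        self.tail += 1
        return True

    def poll(self):
        if self.head == self.tail:
            return None                         # empty
        index = self.head & self.mask
        item, self.slots[index] = self.slots[index], None
        self.head += 1
        return item
```

Keeping `head` and `tail` as ever-increasing counters, and masking only when indexing, lets `tail - head` distinguish a full buffer from an empty one without sacrificing a slot.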

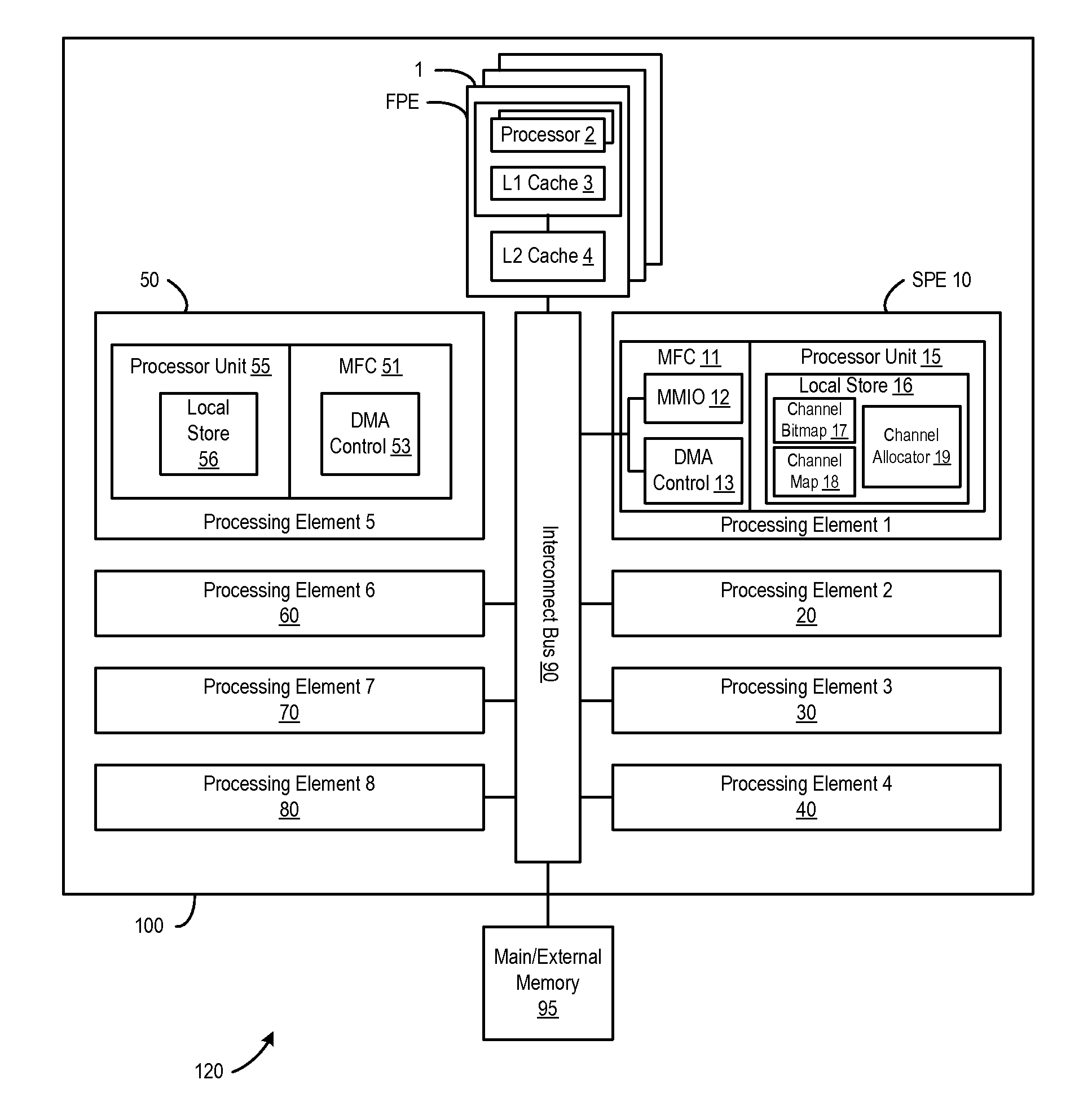

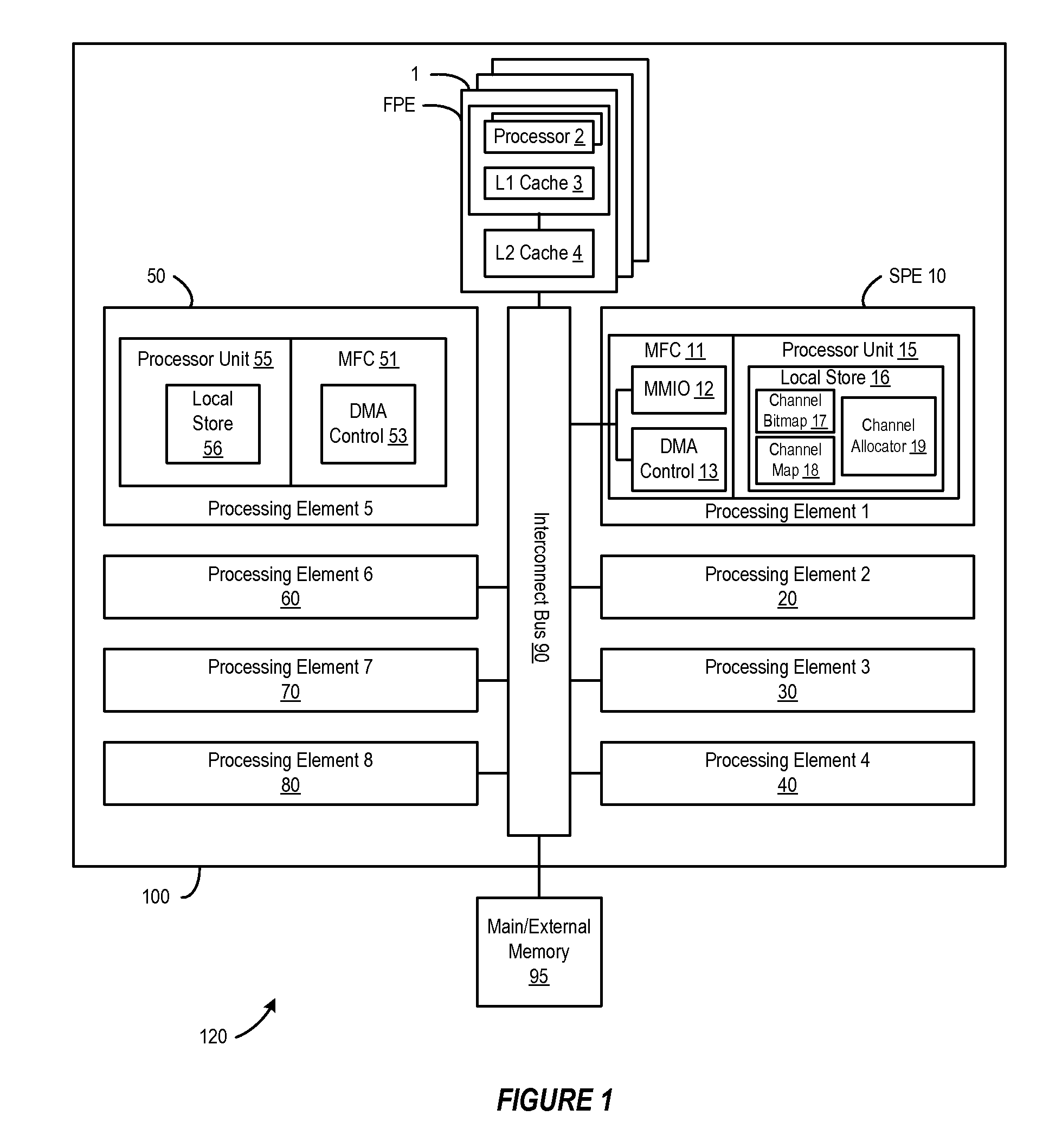

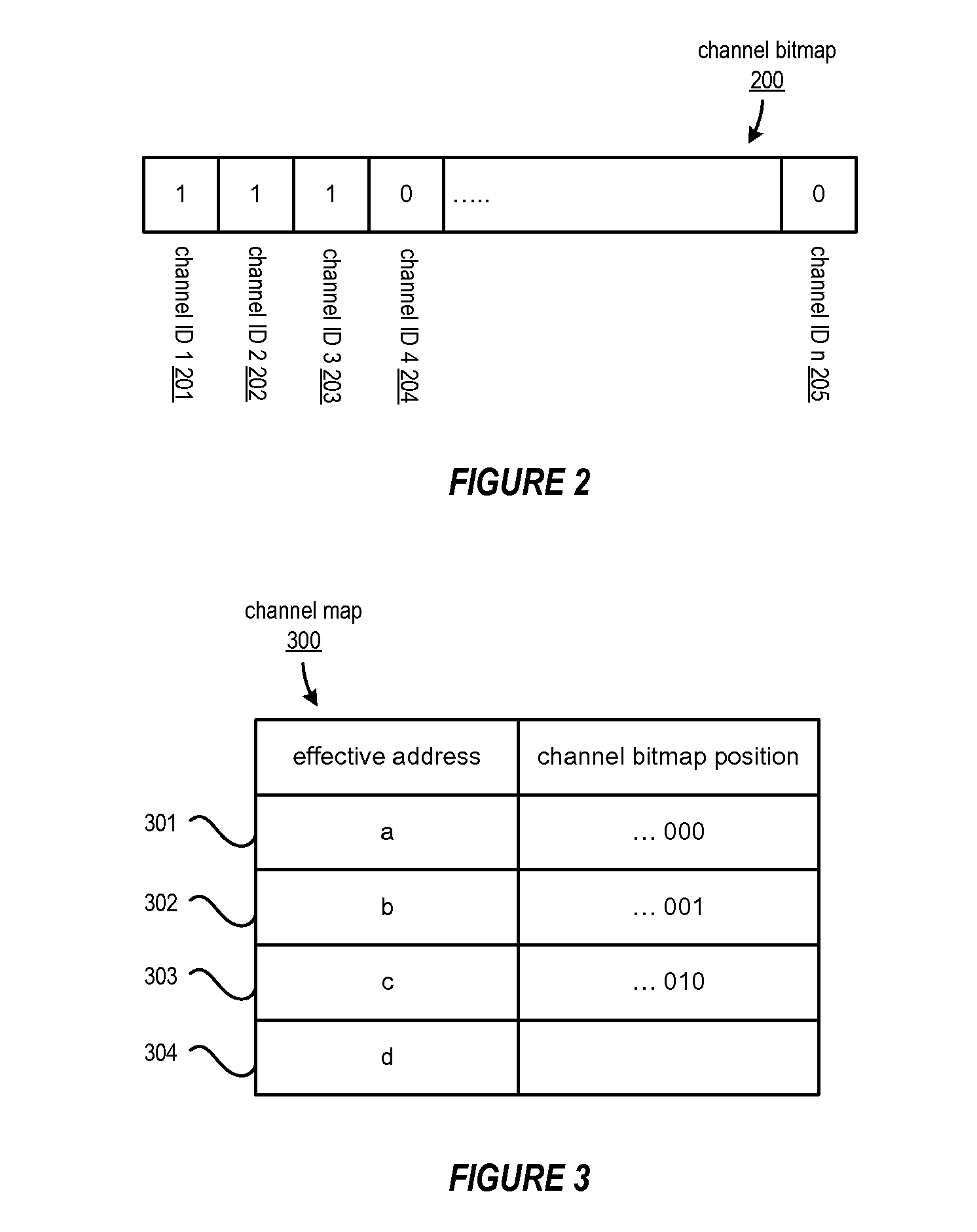

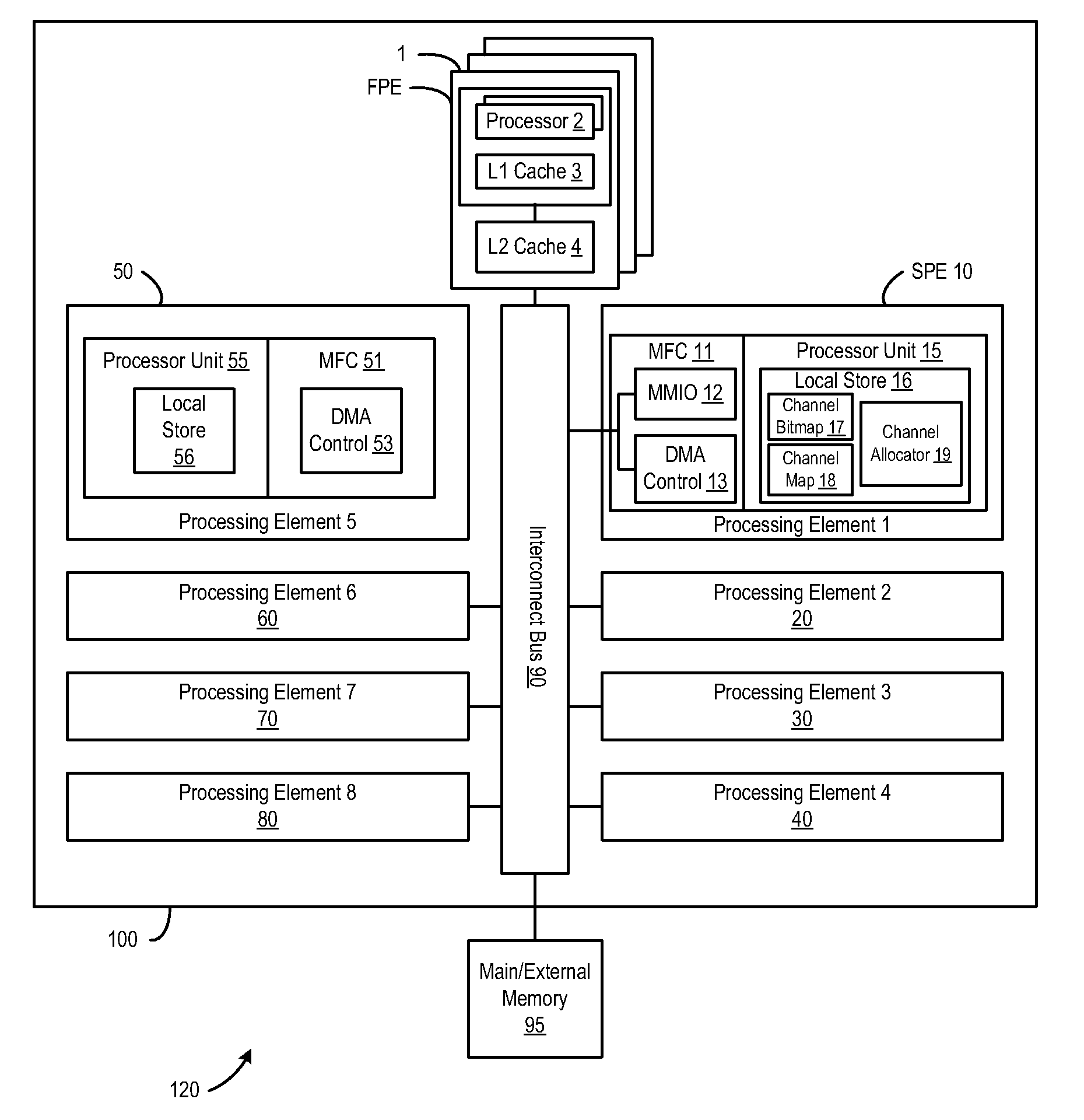

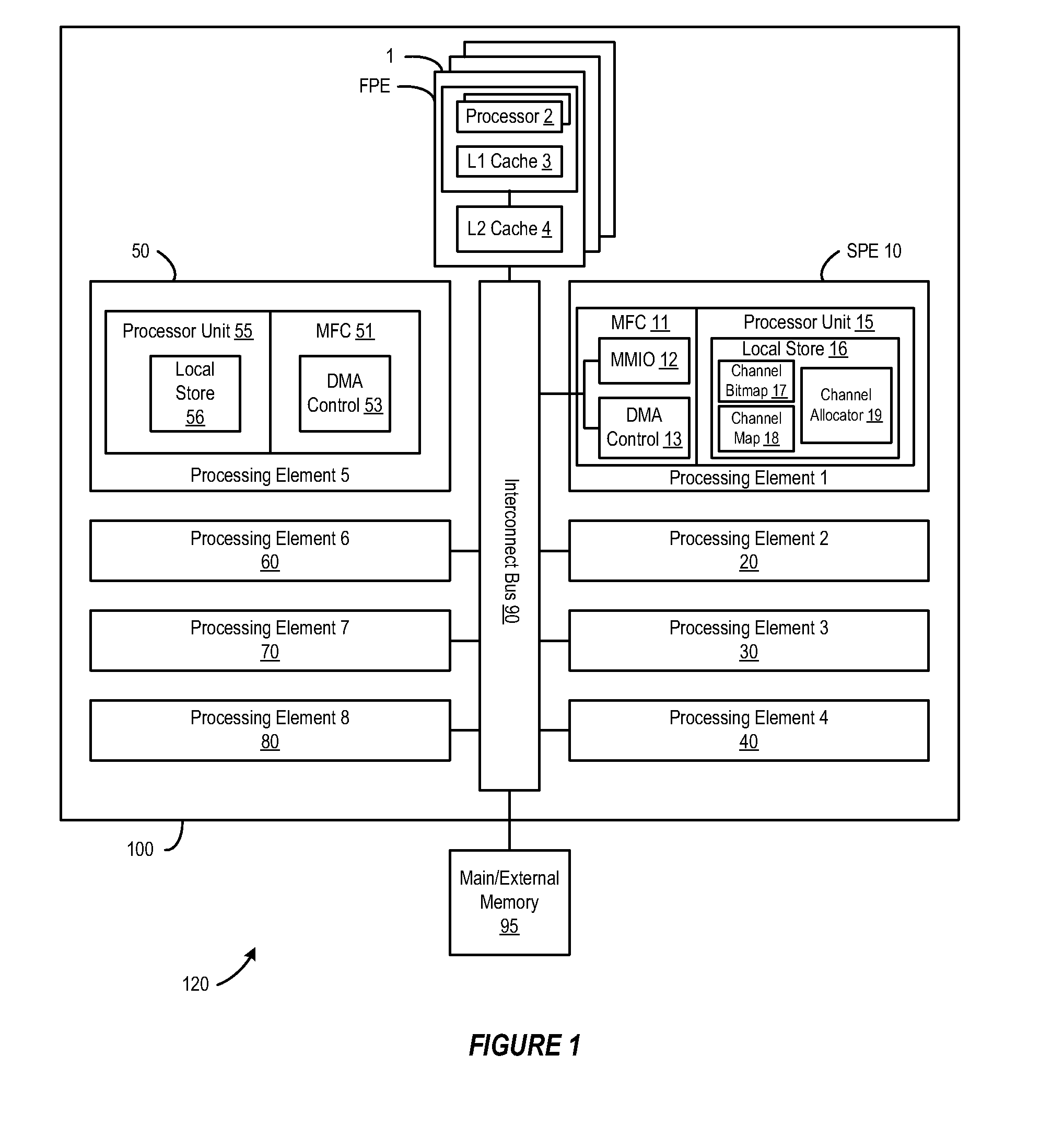

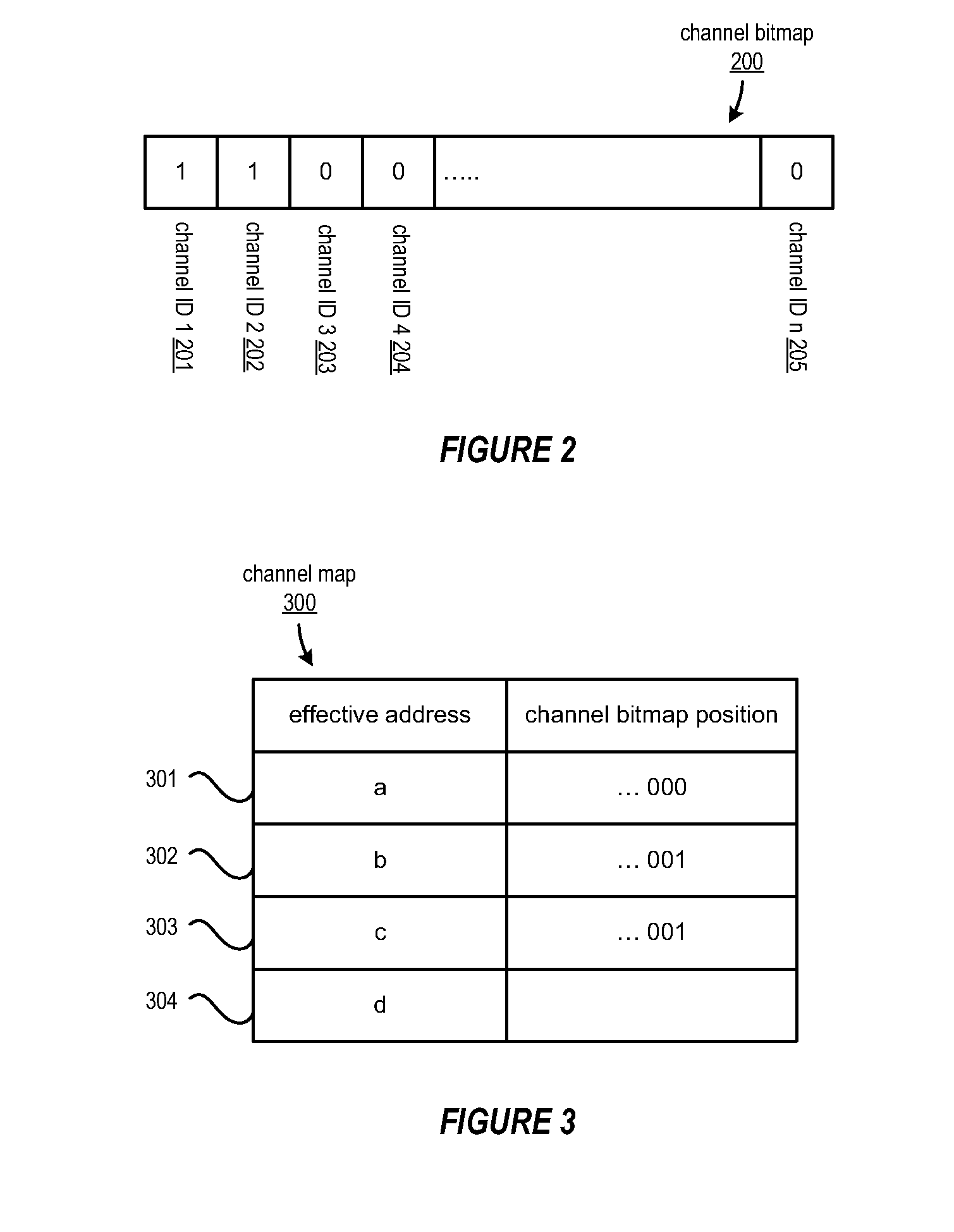

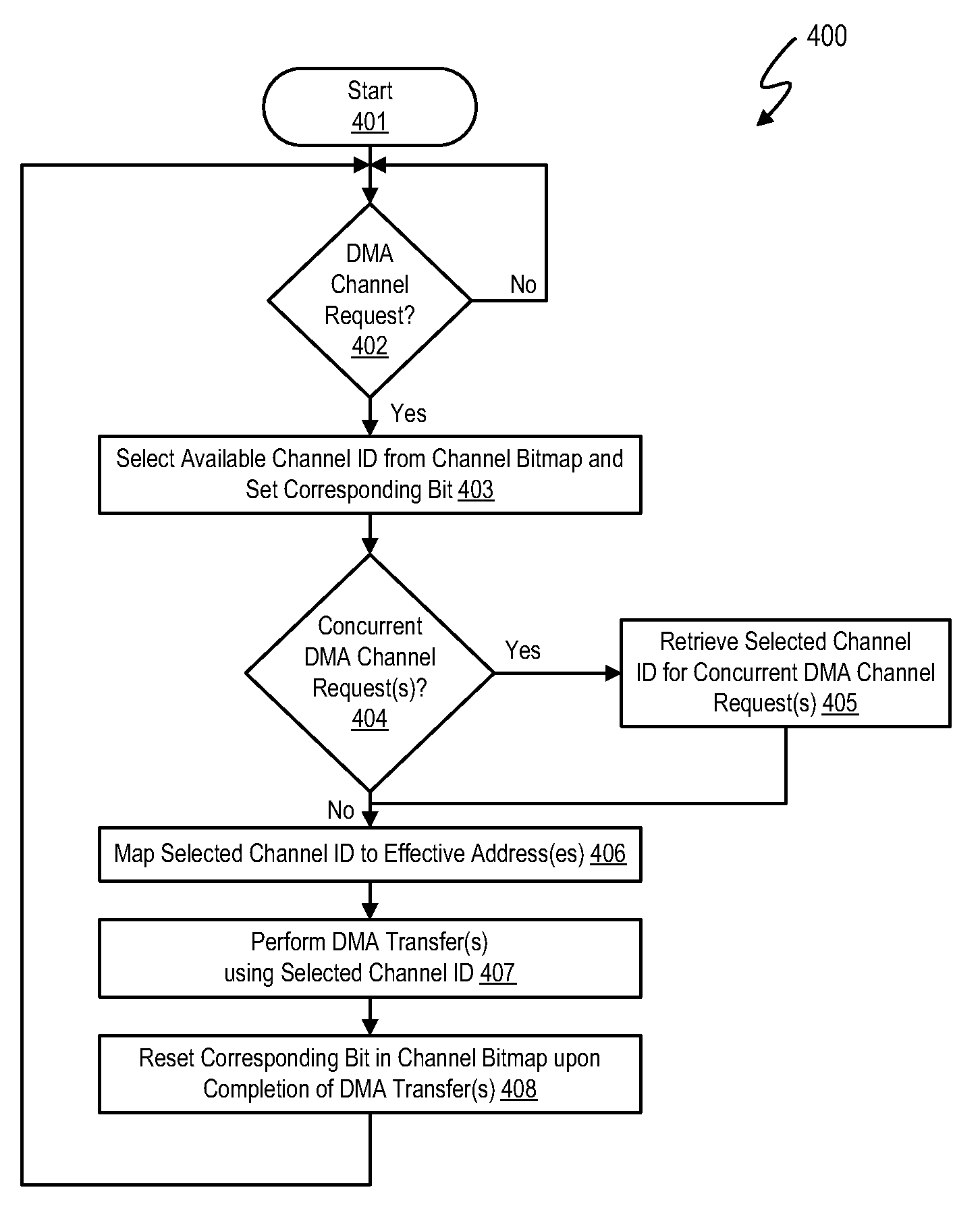

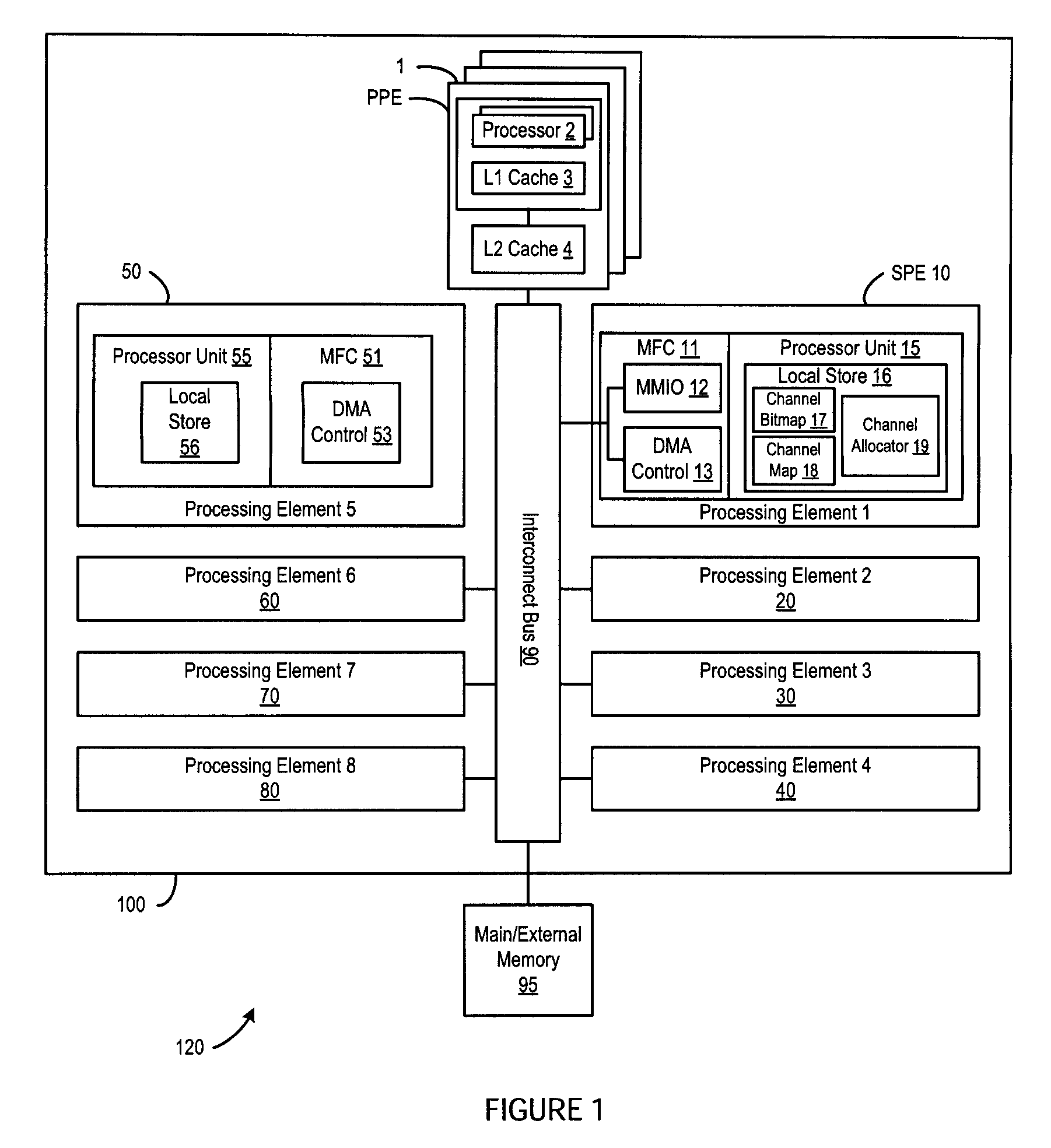

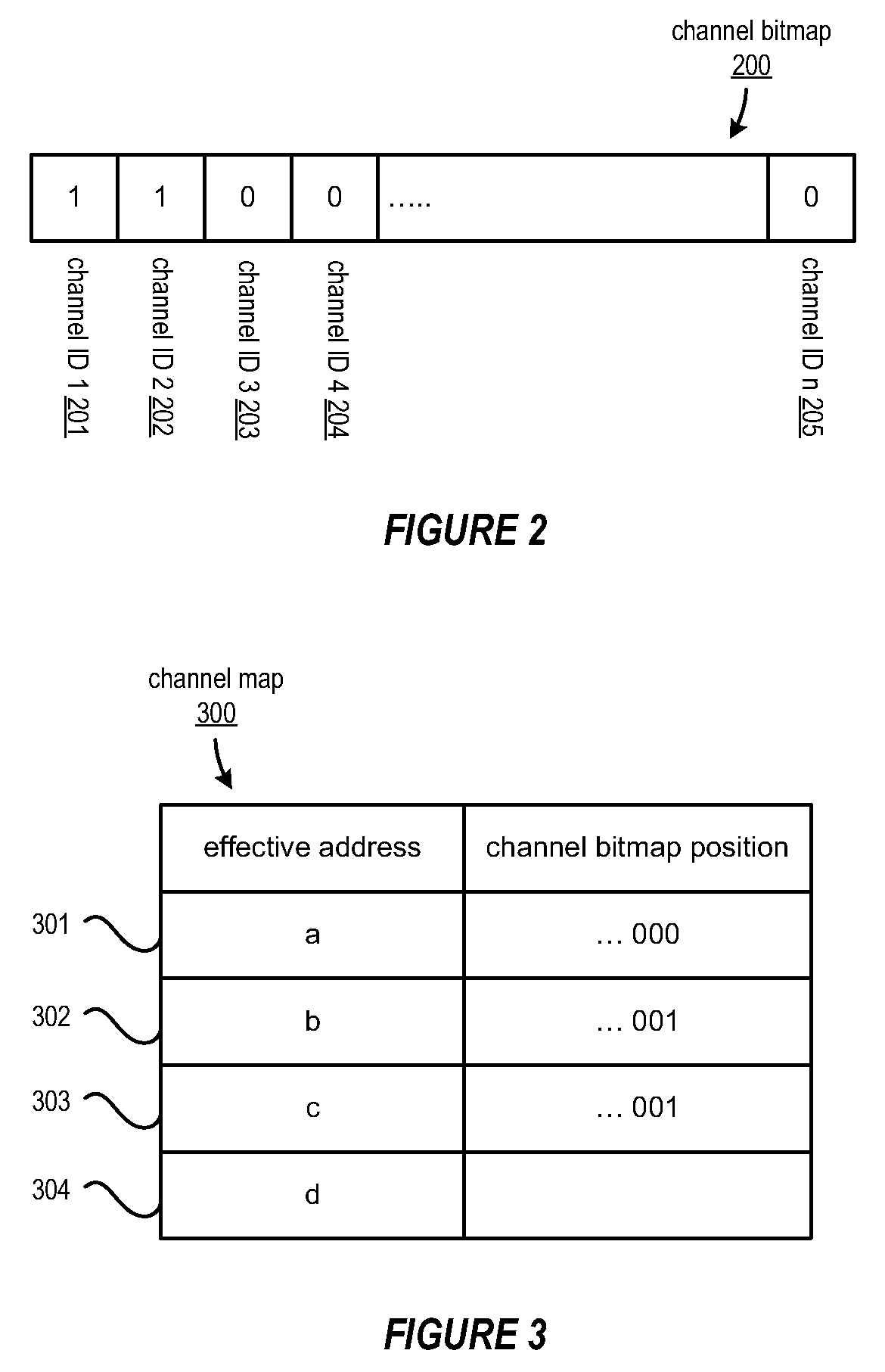

Dynamic logical data channel assignment using channel bitmap

Inactive | US20090150576A1 | Reducing false dependency; Improve performance; Electric digital data processing; Data transmission; False sharing

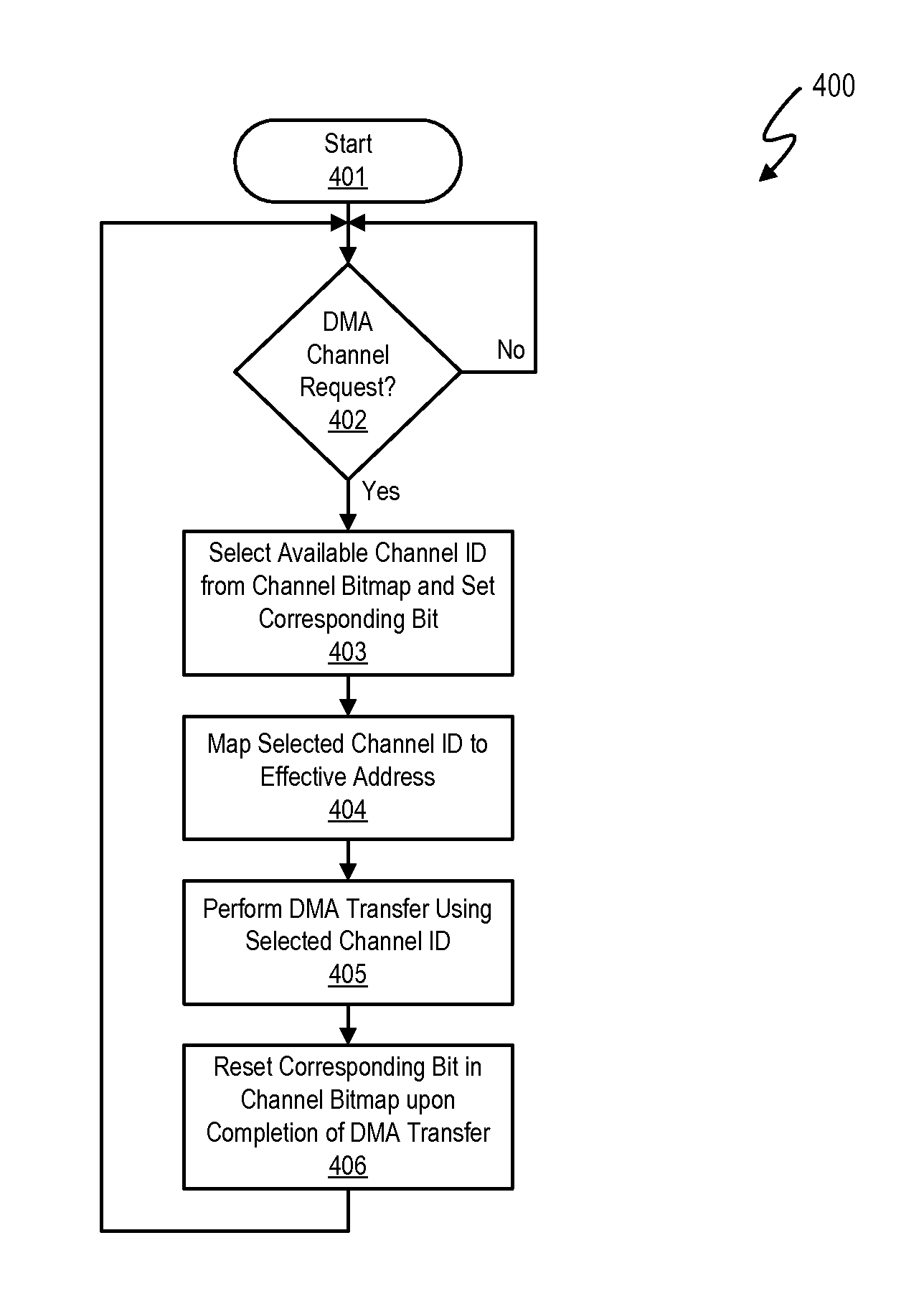

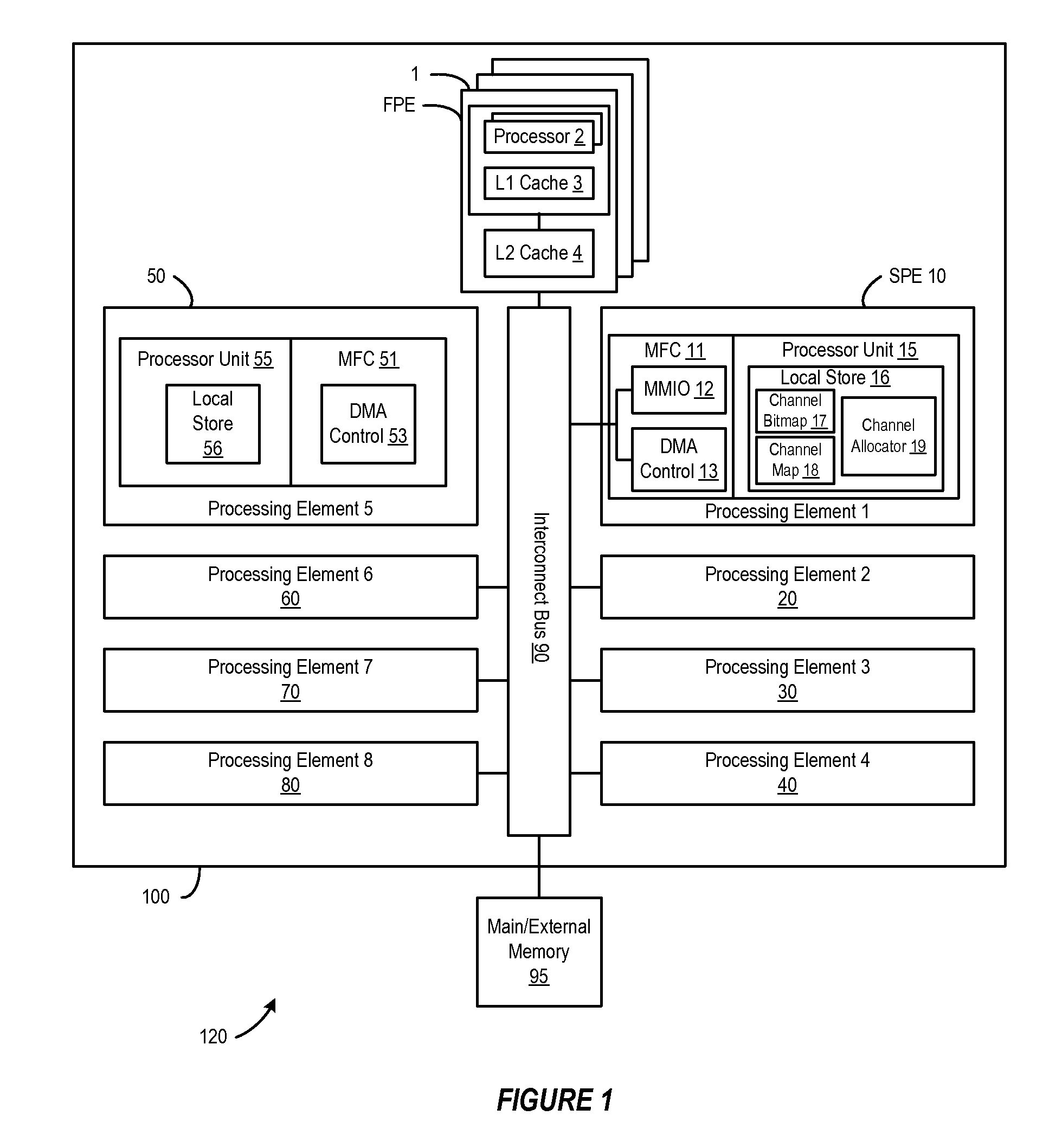

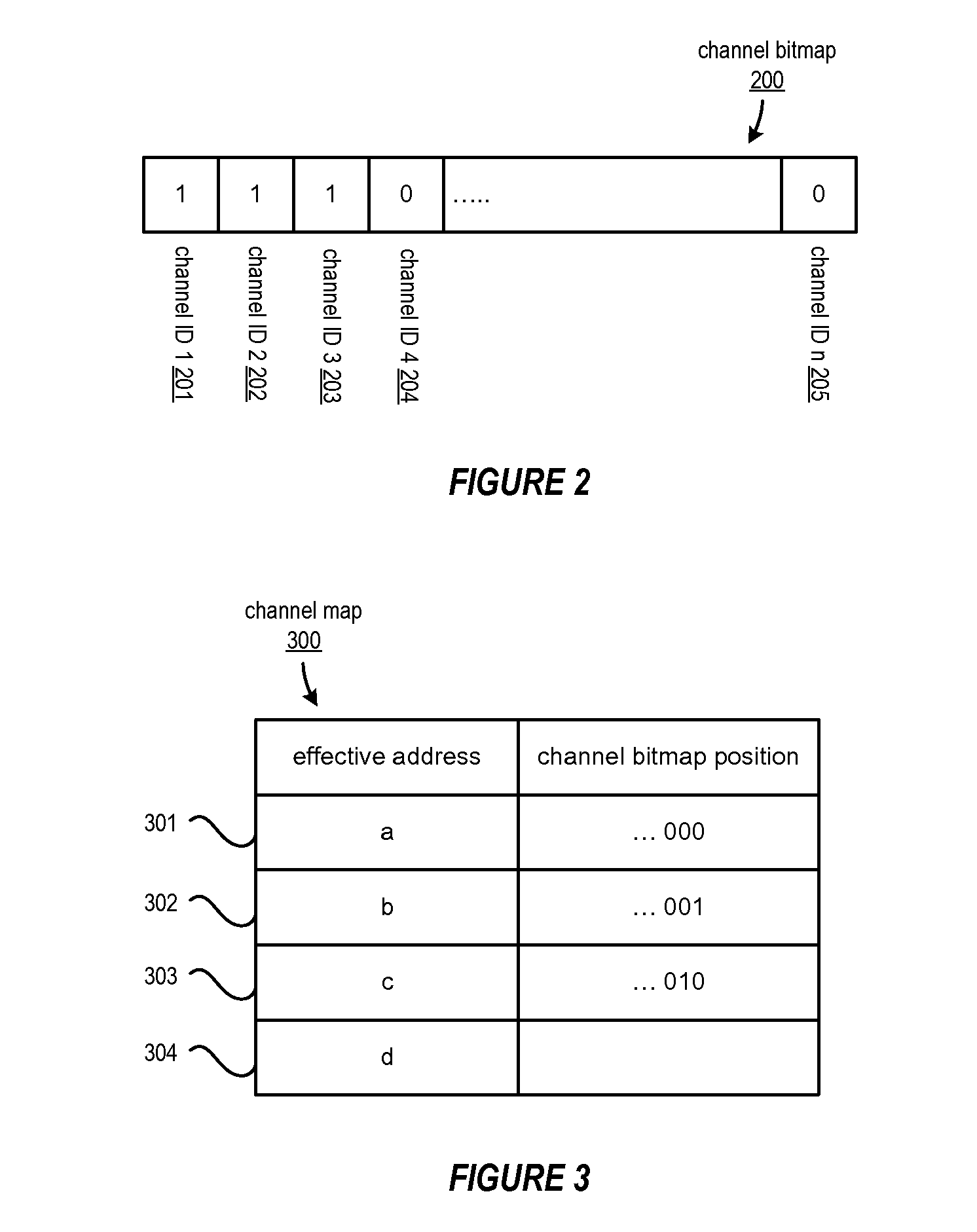

A method, system and program are provided for dynamically allocating DMA channel identifiers by virtualizing DMA transfer requests into available DMA channel identifiers using a channel bitmap listing of available DMA channels to select and set an allocated DMA channel identifier. Once an input value associated with the DMA transfer request is mapped to the selected DMA channel identifier, the DMA transfer is performed using the selected DMA channel identifier, which is then deallocated in the channel bitmap upon completion of the DMA transfer. When there is a request to wait for completion of the data transfer, the same input value is used with the mapping to wait on the appropriate logical channel. With this method, all available logical channels can be utilized with reduced instances of false-sharing.

Owner:IBM CORP
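
The bitmap bookkeeping described in the DMA channel abstracts can be sketched as follows: allocate the lowest free bit, remember which input value it was mapped to, look the channel up again by the same input value when waiting, and clear the bit on completion. The channel count, the dictionary mapping, and the method names are assumptions; real DMA controllers expose their own tag or channel numbering.

```python
class ChannelAllocator:
    """Map caller-supplied input values to free DMA channel IDs (sketch)."""

    def __init__(self, num_channels=32):
        self.num_channels = num_channels
        self.bitmap = 0      # bit i set => channel i is in use
        self.mapping = {}    # input value -> allocated channel

    def allocate(self, input_value):
        free = ~self.bitmap & ((1 << self.num_channels) - 1)
        if free == 0:
            raise RuntimeError("no free DMA channel")
        channel = (free & -free).bit_length() - 1   # lowest free channel
        self.bitmap |= 1 << channel
        self.mapping[input_value] = channel
        return channel

    def wait_channel(self, input_value):
        # The same input value identifies the logical channel to wait on.
        return self.mapping[input_value]

    def complete(self, input_value):
        # Deallocate the channel in the bitmap once the transfer finishes.
        channel = self.mapping.pop(input_value)
        self.bitmap &= ~(1 << channel)
        return channel
```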

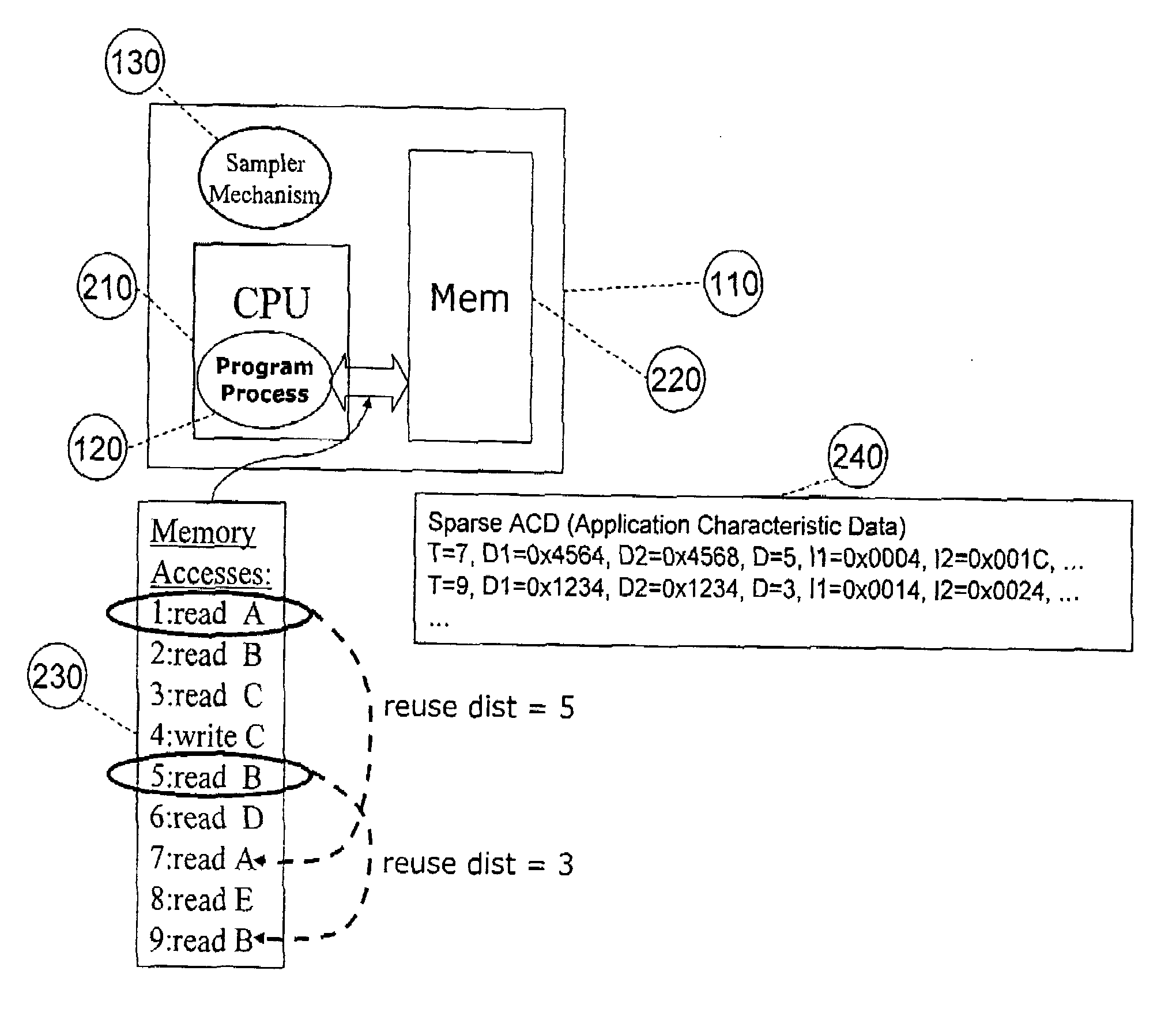

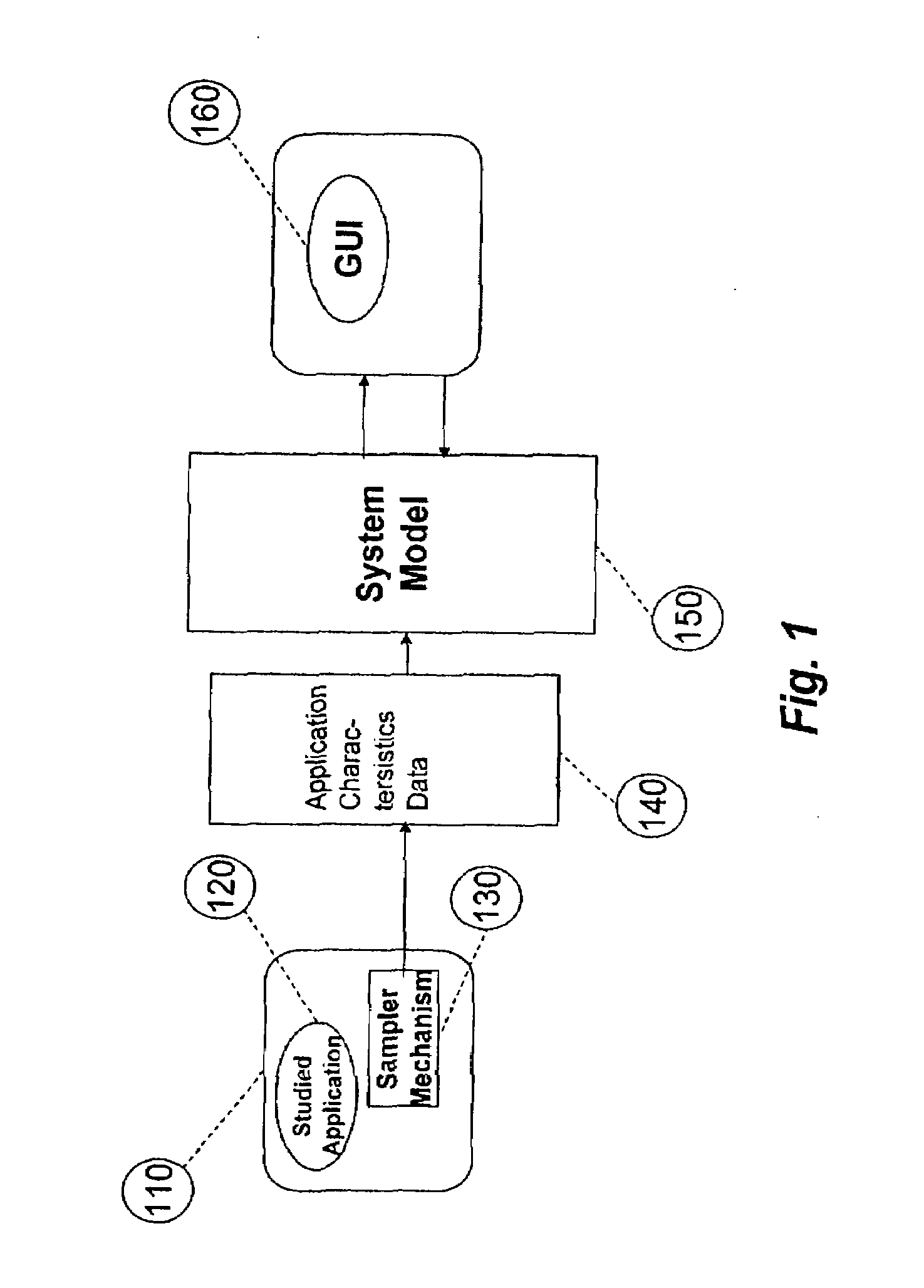

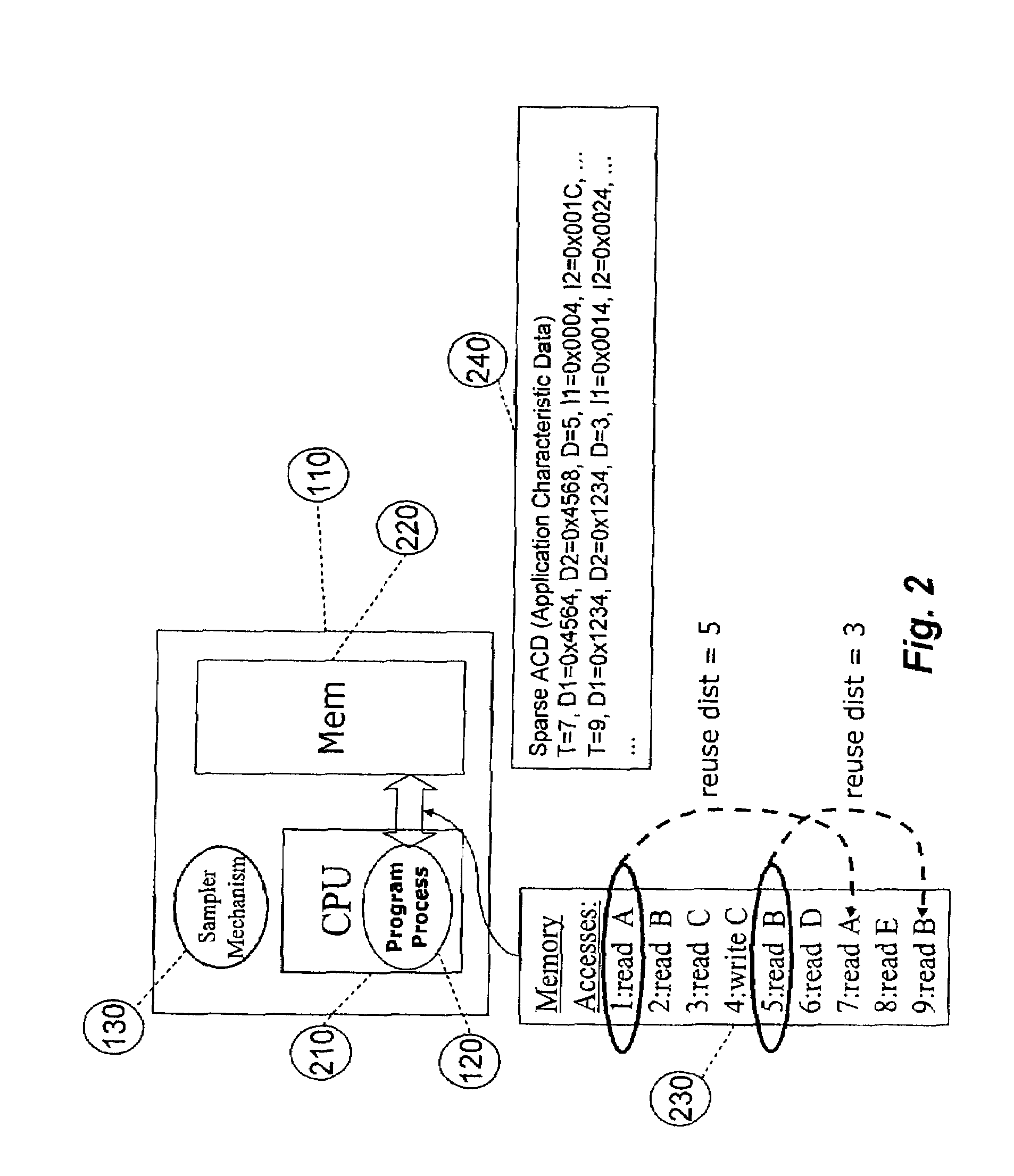

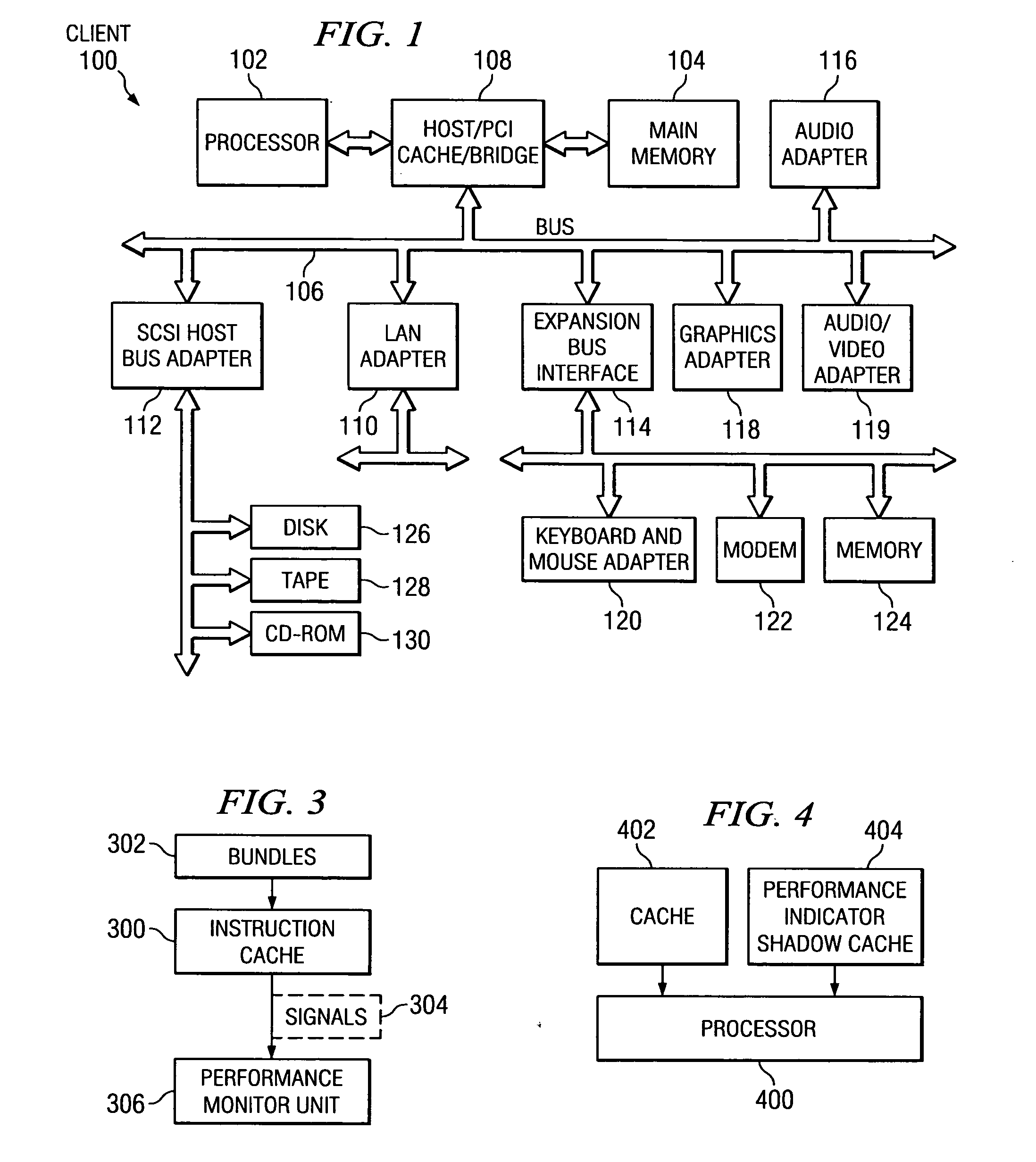

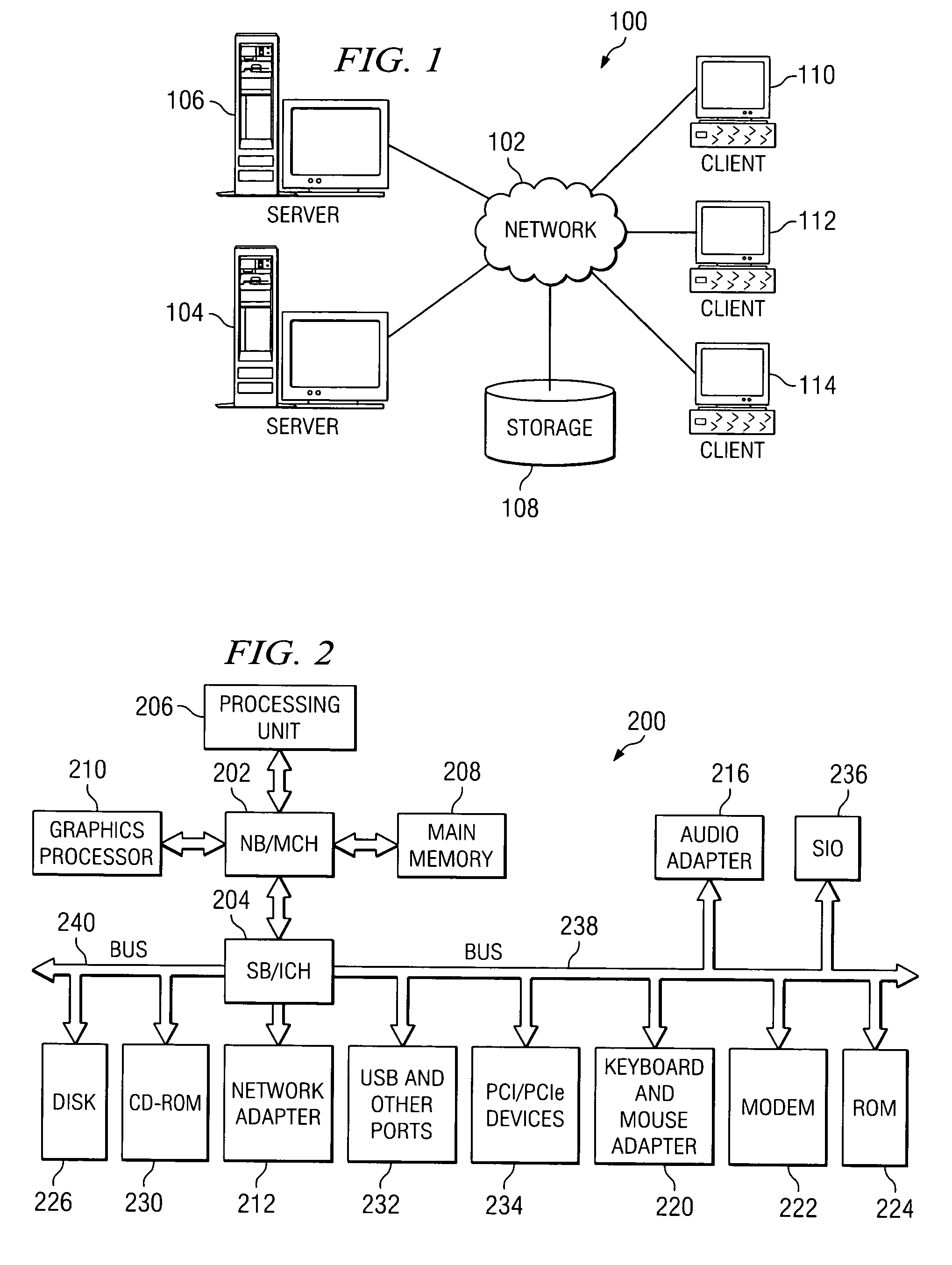

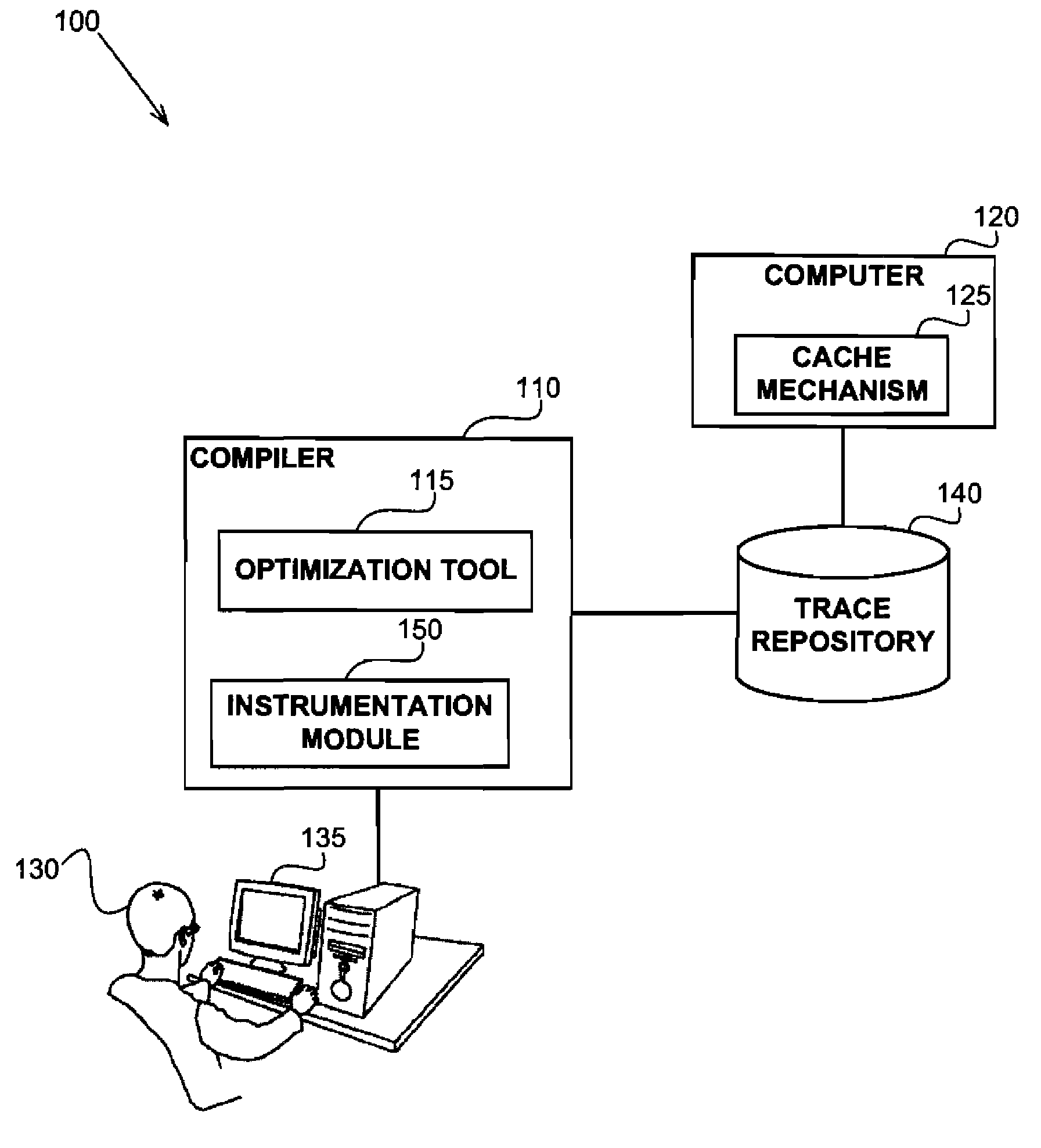

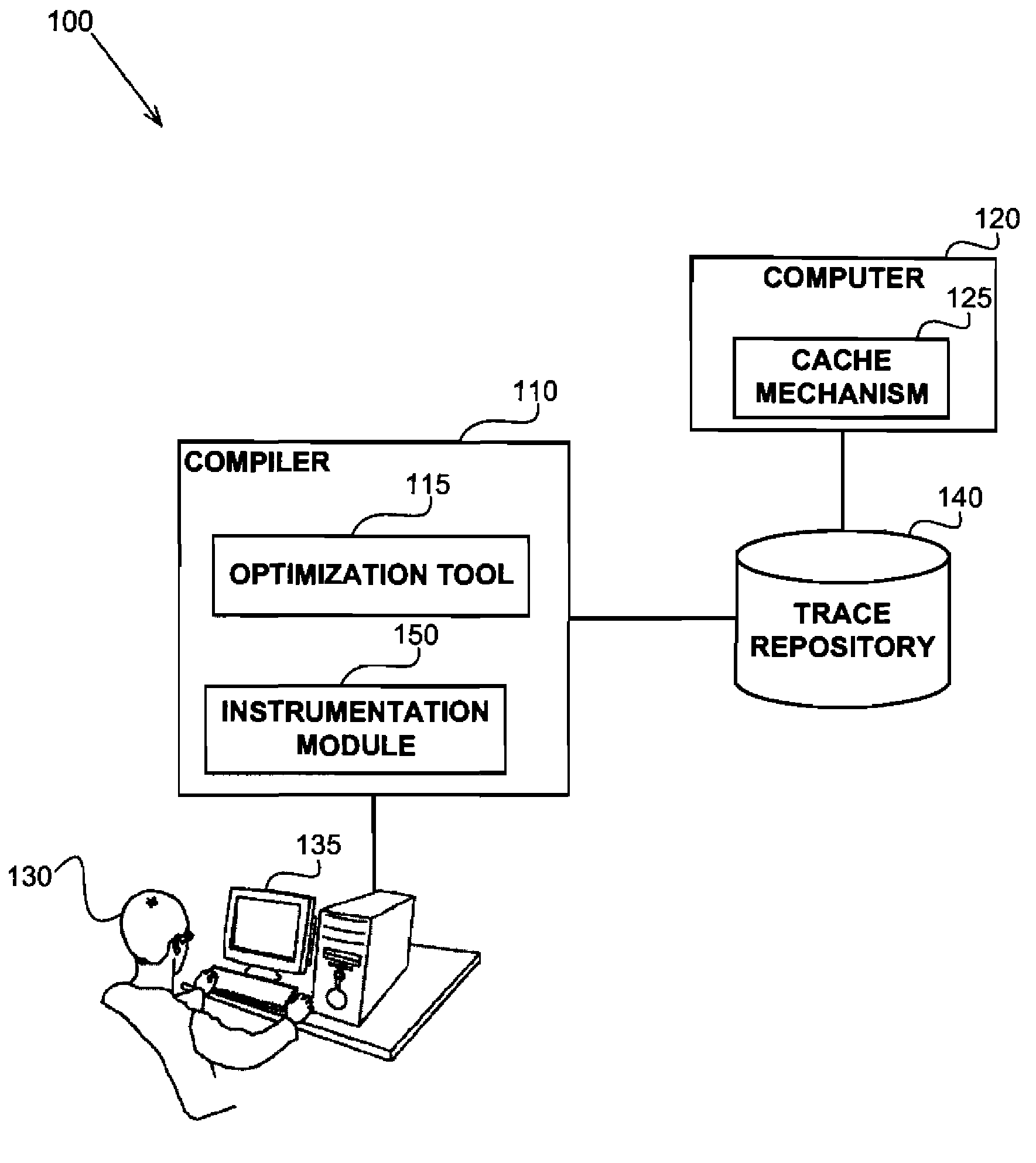

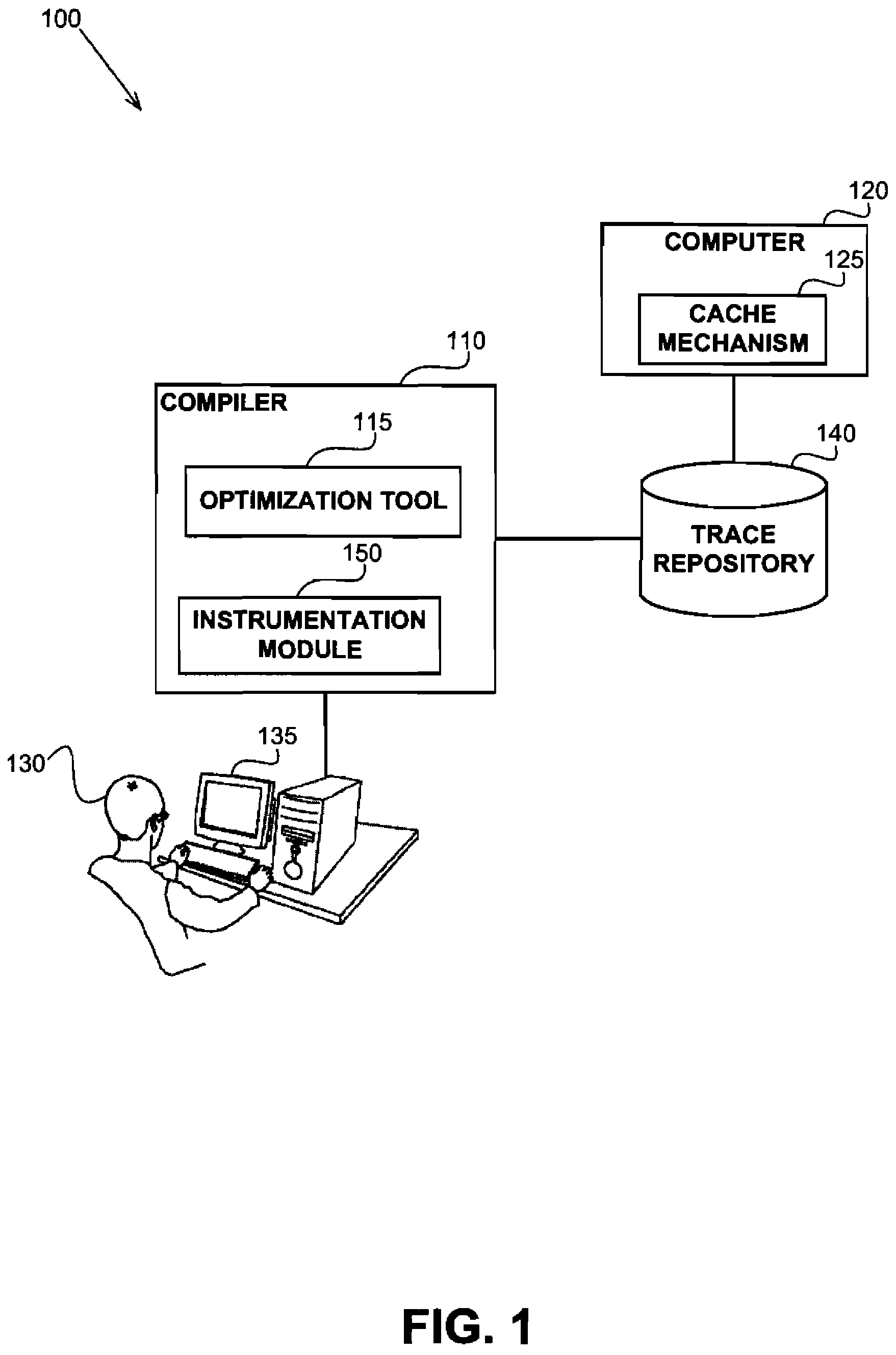

System for and method of capturing performance characteristics data from a computer system and modeling target system performance

Active | US8539455B2 | Satisfy speed; Simple and efficient user environment; Error detection/correction; Digital computer details; Data modeling; Random replacement

A system, method, and computer program product that captures performance-characteristic data from the execution of a program and models system performance based on that data. Performance-characterization data based on easily captured reuse distance metrics is targeted. Reuse distance for one memory operation may be measured as the number of memory operations that have been performed since the memory object it accesses was last accessed. Separate call stacks leading up to the same memory operation are identified and statistics are separated for the different call stacks. Methods for efficiently capturing this kind of metrics are described. These data can be refined into easily interpreted performance metrics, such as performance data related to caches with LRU replacement and random replacement strategies in combination with fully associative as well as limited associativity cache organizations. Methods for assessing cache utilization as well as parallel execution are covered. The method includes modeling multithreaded memory systems and detecting false sharing coherence misses.

Owner:ROGUE WAVE SOFTWARE
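
Following the abstract's definition, the reuse distance of an access counts the memory operations performed since the same object was last accessed. A minimal sketch, assuming a 64-byte cache line is the "memory object" and reporting `None` for first-time accesses; the function name is hypothetical.

```python
def reuse_distances(addresses, granularity=64):
    """Reuse distance of each access in an address trace.

    The distance is the number of memory operations performed between this
    access and the previous access to the same object (None on first touch).
    Treating one cache line (`granularity` bytes) as an object is an assumption.
    """
    last_seen = {}
    out = []
    for i, addr in enumerate(addresses):
        obj = addr // granularity
        prev = last_seen.get(obj)
        out.append(None if prev is None else i - prev - 1)
        last_seen[obj] = i
    return out

print(reuse_distances([0, 64, 8, 128, 64]))  # [None, None, 1, None, 2]
```

This counts all intervening operations. The related LRU stack distance instead counts distinct objects touched in between, which is the quantity that decides hits in a fully associative LRU cache; converting one to the other is the kind of modeling step the abstract refers to.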

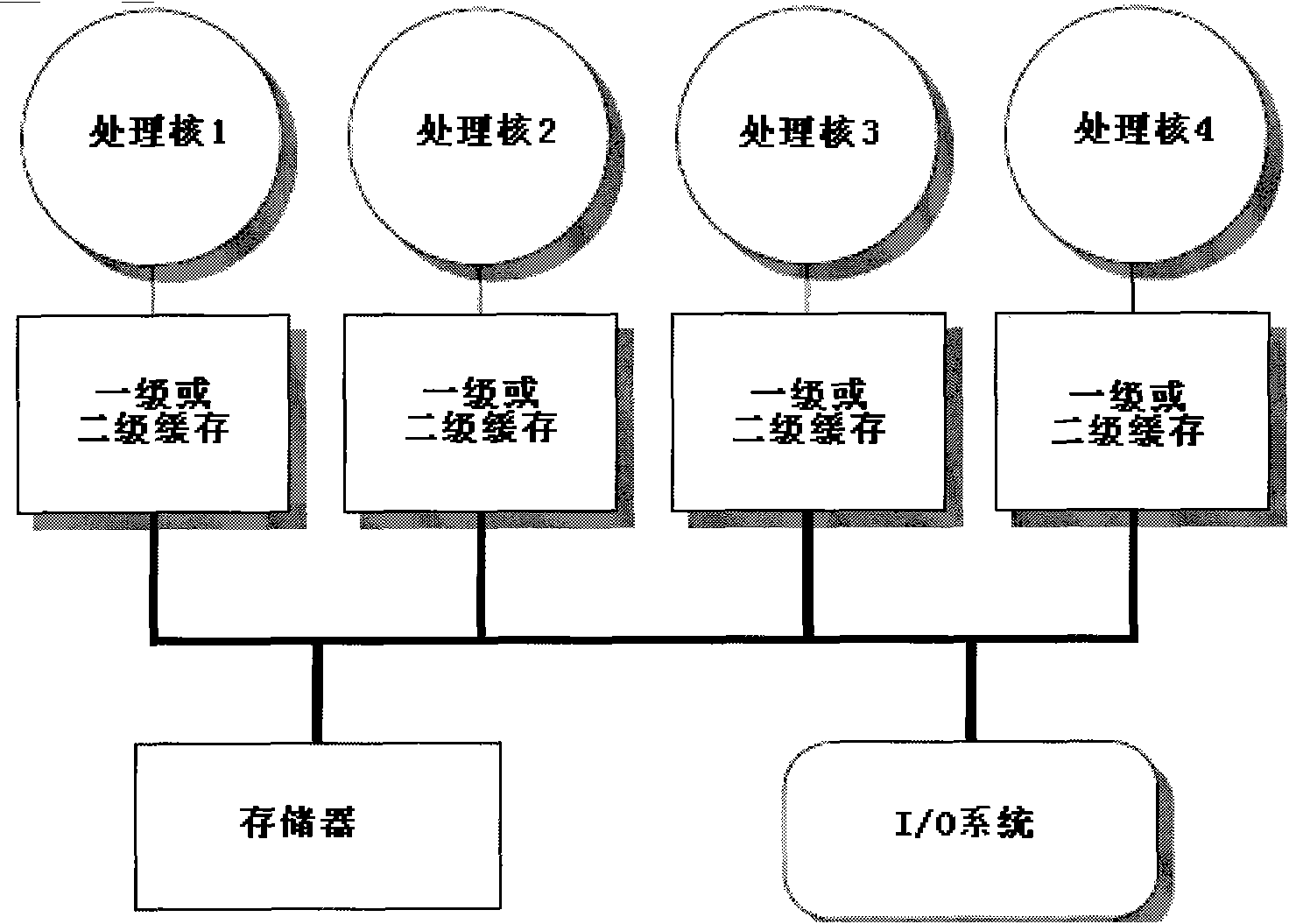

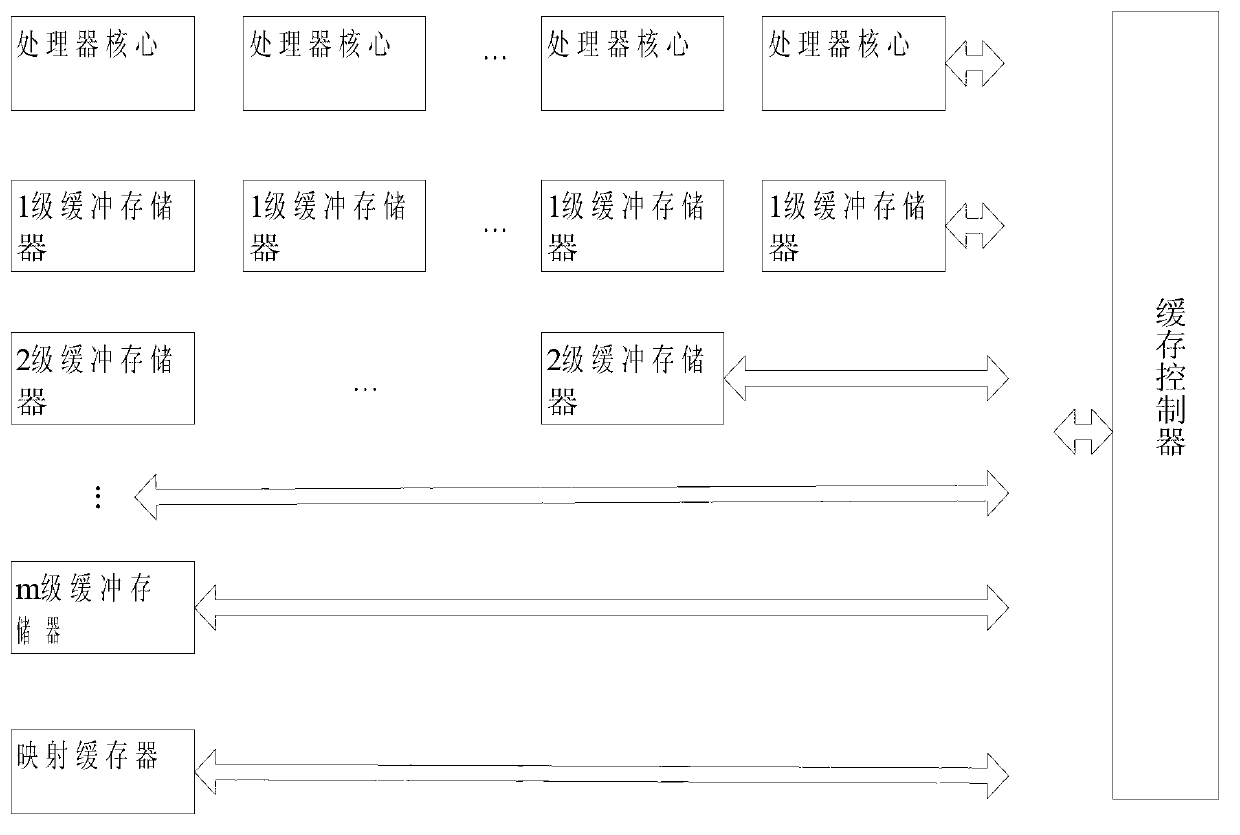

Method and device for processing a single-producer/single-consumer queue in a multi-core system

Inactive | CN101853149A | Improve efficiency; Reduce overhead; Memory addressing/allocation/relocation; Concurrent instruction execution; Cache consistency; Multicore systems

The invention relates to a method and a device for processing a single-producer/single-consumer queue in a multi-core system. A private buffer and a timer are provided at least on the producer side. When the producer produces new data, the data are written into the private buffer, and only when the private buffer is full or the timer expires does the producer write all of the buffered data into the queue at once. The invention also comprises an enqueuing device, a dequeuing device, and a timing device. One end of the queue is accessed by one processing entity, which writes data into the queue, and the other end is accessed by another processing entity, which reads data from it. This eliminates false-sharing cache misses and substantially reduces cache coherence protocol overhead in a multi-core processor system.

Owner:张力
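
A minimal sketch of the producer-side batching in this abstract, assuming a `collections.deque` stands in for the shared queue and an injectable clock so the timer can be tested. The batch size, delay, and names are illustrative assumptions. On real hardware the benefit comes from touching the shared queue's cache lines once per batch rather than once per item.

```python
import time
from collections import deque

class BatchingProducer:
    """Producer side of a single-producer/single-consumer queue (sketch).

    Items go into a private buffer that only the producer touches. The buffer
    is published to the shared queue in one bulk write when it is full or when
    its oldest item has waited max_delay seconds.
    """

    def __init__(self, shared_queue, batch_size=16, max_delay=0.001,
                 clock=time.monotonic):
        self.shared = shared_queue
        self.batch_size = batch_size
        self.max_delay = max_delay
        self.clock = clock
        self.private = []
        self.oldest = None   # arrival time of the oldest buffered item

    def produce(self, item):
        if not self.private:
            self.oldest = self.clock()
        self.private.append(item)
        if len(self.private) >= self.batch_size:
            self.flush()
        else:
            self.poll_timer()

    def poll_timer(self):
        # Also call this periodically so a partial batch is not held forever.
        if self.private and self.clock() - self.oldest >= self.max_delay:
            self.flush()

    def flush(self):
        # One bulk append per batch instead of one shared-queue write per item.
        self.shared.extend(self.private)
        self.private.clear()

queue = deque()
producer = BatchingProducer(queue, batch_size=3, max_delay=1.0)
for item in range(7):
    producer.produce(item)
producer.flush()  # e.g. at shutdown, publish whatever is left
```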

Method and apparatus for identifying false cache line sharing

Inactive | US20050154839A1 | Improve performance; Memory addressing/allocation/relocation; Processing Instruction; Data processing system

A method, apparatus, and computer instructions in a data processing system for processing instructions are provided. Instructions are received at a processor in the data processing system. If a selected indicator is associated with the instruction, counting of each event associated with the execution of the instruction is enabled. In some embodiments, the performance indicators may be utilized to obtain information regarding the nature of the cache hits and reloads of cache lines within the instruction or data cache. These embodiments may be used to determine whether processors of a multiprocessor system, such as a symmetric multiprocessor (SMP) system, are truly sharing a cache line or if there is false sharing of a cache line. This determination may then be used as a means for determining how to better store the instructions / data of the cache line to prevent false sharing of the cache line.

Owner:IBM CORP

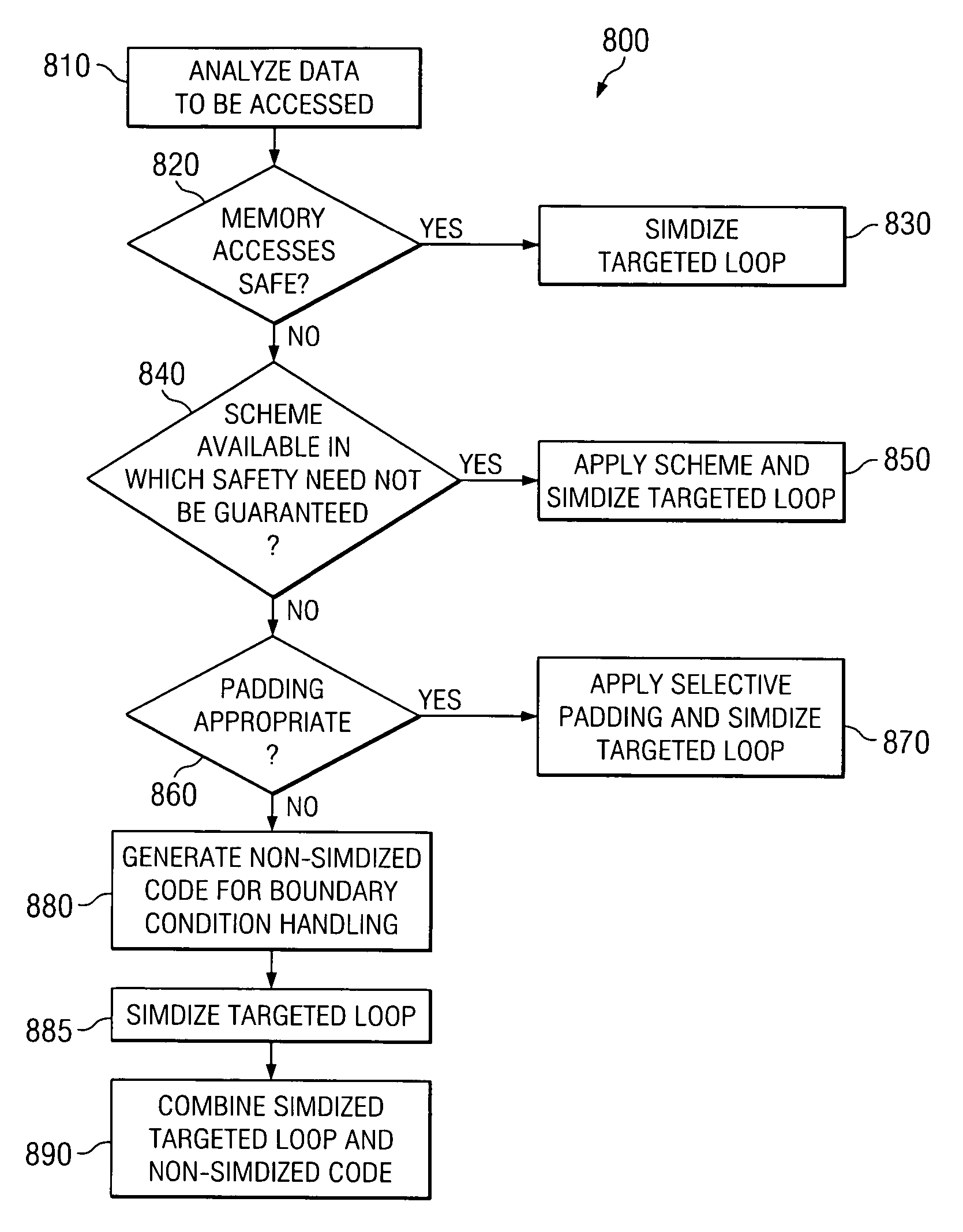

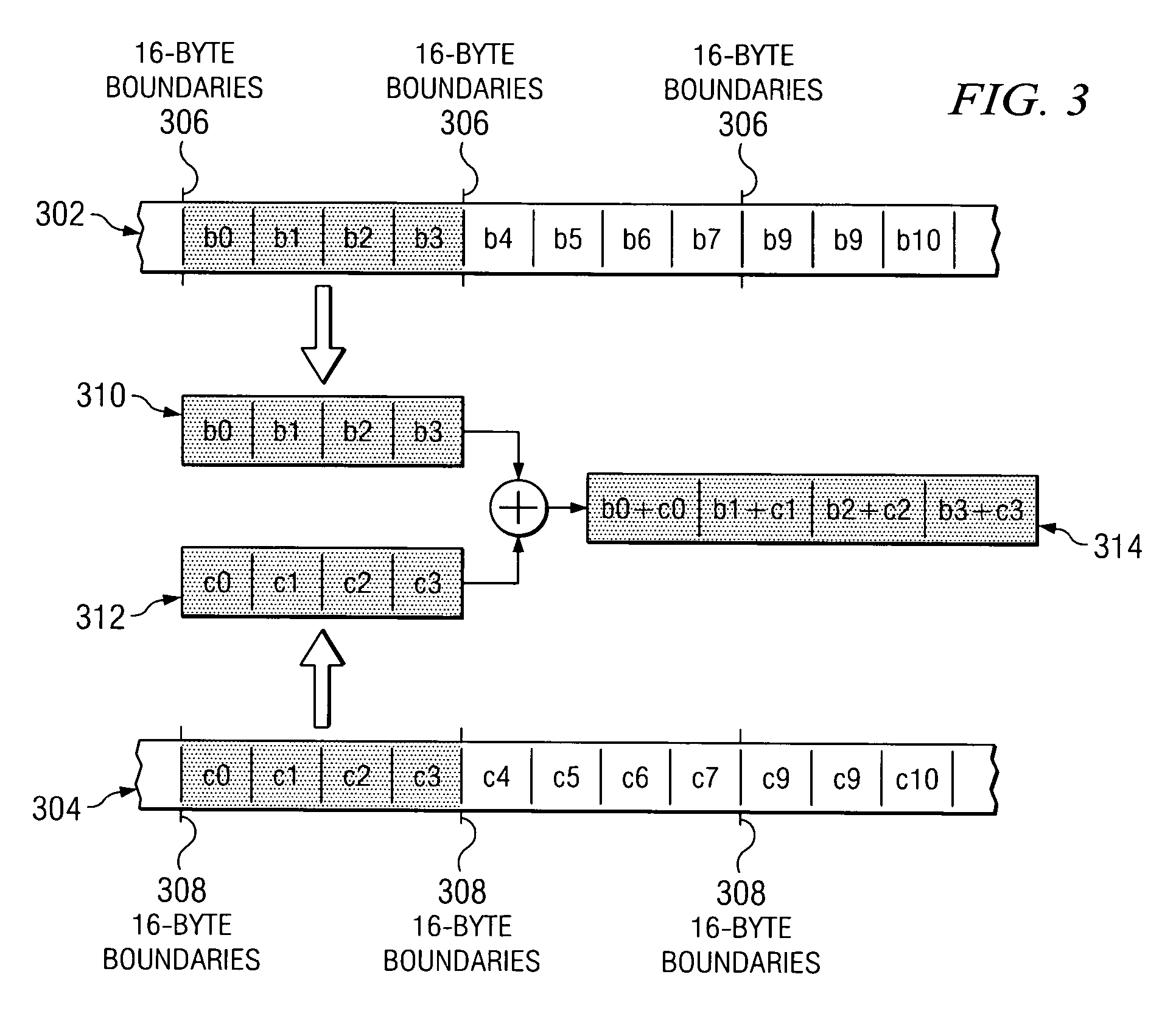

Efficient generation of SIMD code in presence of multi-threading and other false sharing conditions and in machines having memory protection support

Inactive | US20070226723A1 | General purpose stored program computer; Program control; Term memory; Protection system

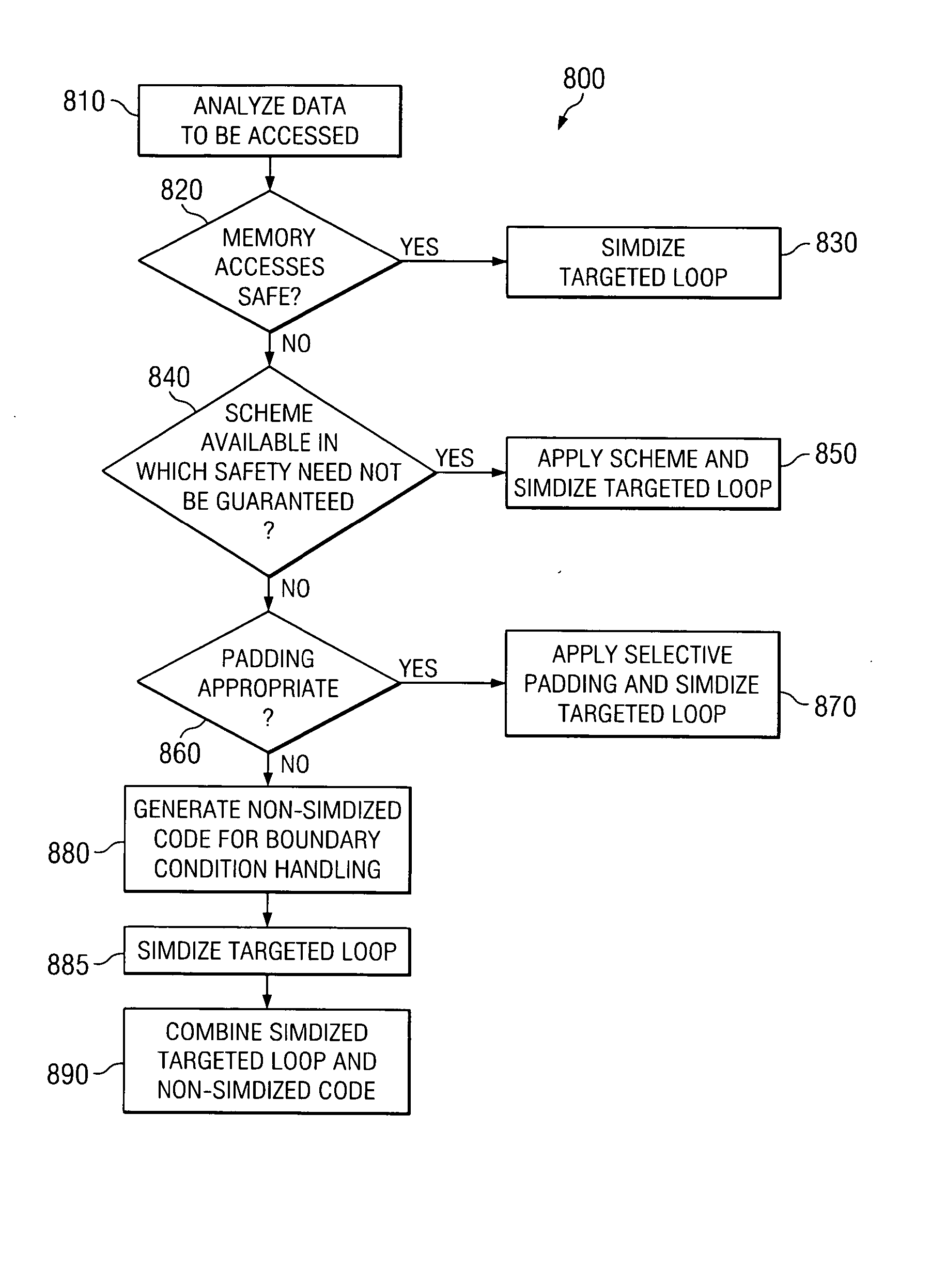

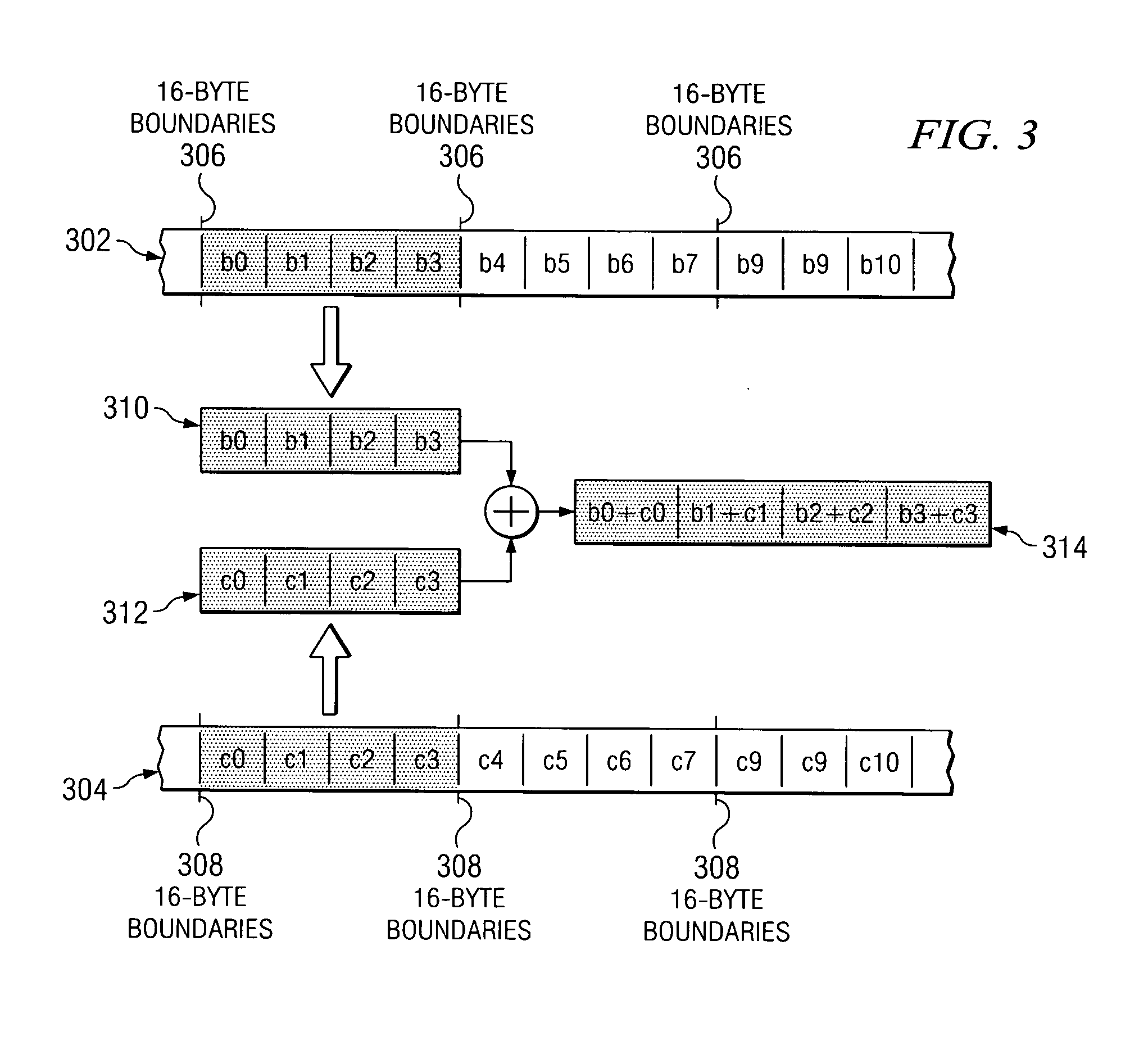

A computer implemented method, system and computer program product for automatically generating SIMD code, particularly in the presence of multi-threading and other false sharing conditions, and in machines having a segmented / virtual page memory protection system. The method begins by analyzing data to be accessed by a targeted loop including at least one statement, where each statement has at least one memory reference, to determine if memory accesses are safe. If memory accesses are safe, the targeted loop is simdized. If not safe, it is determined if a scheme can be applied in which safety need not be guaranteed. If such a scheme can be applied, the scheme is applied and the targeted loop is simdized according to the scheme. If such a scheme cannot be applied, it is determined if padding is appropriate. If padding is appropriate, the data is padded and the targeted loop is simdized. If padding is not appropriate, non-simdized code is generated based on the targeted loop for handling boundary conditions, the targeted loop is simdized, and the simdized targeted loop is combined with the non-simdized code.

Owner:IBM CORP

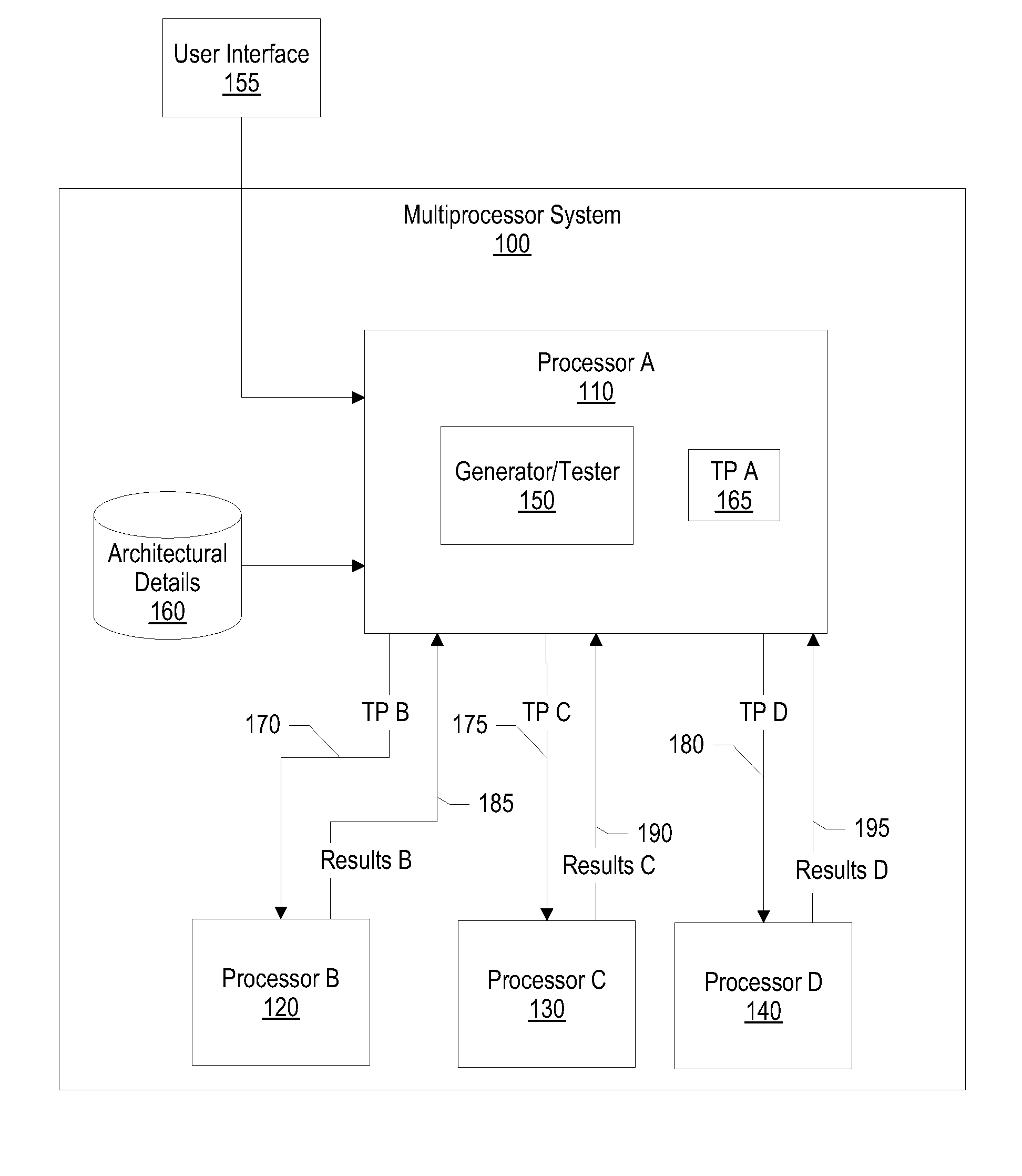

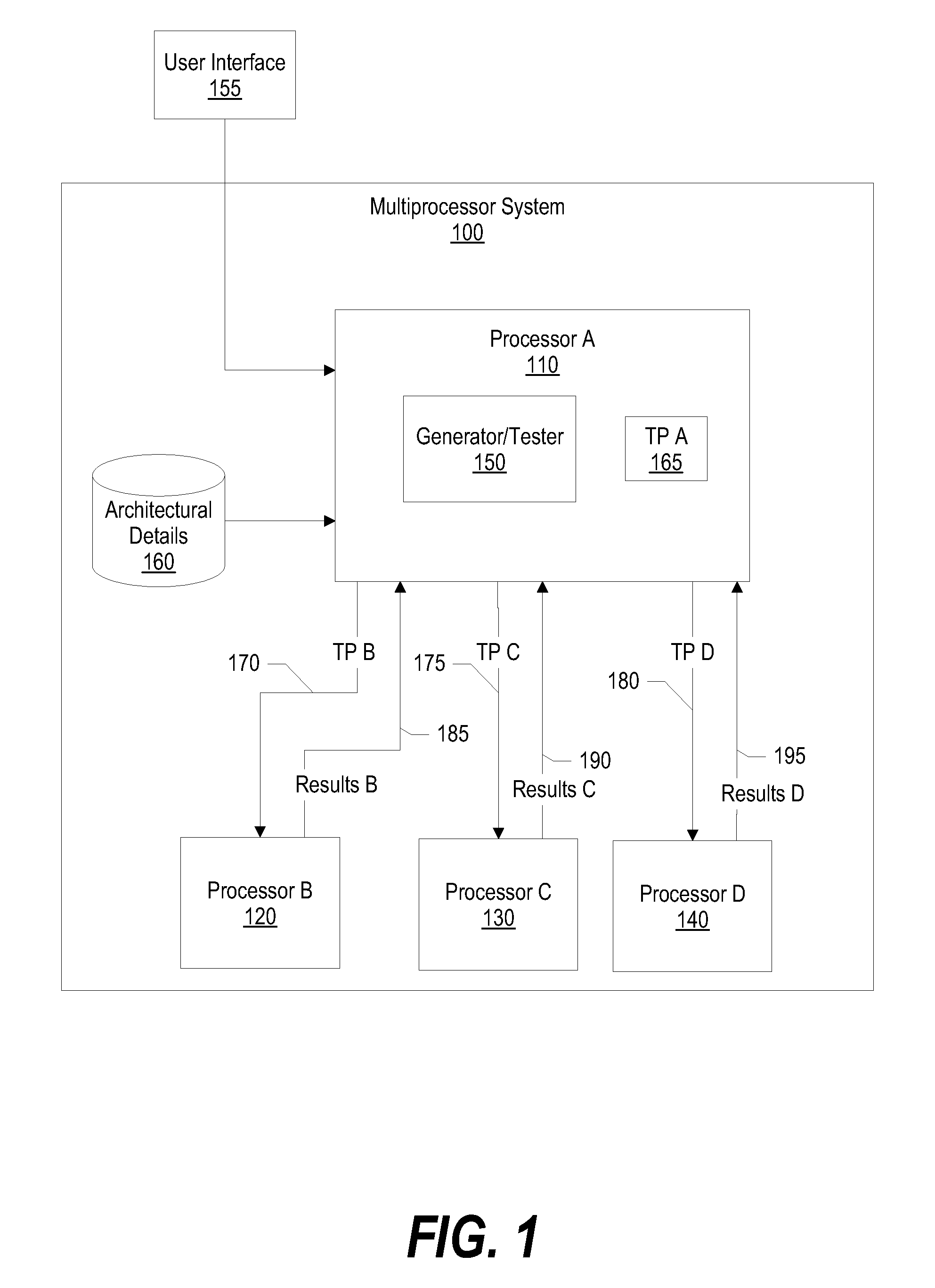

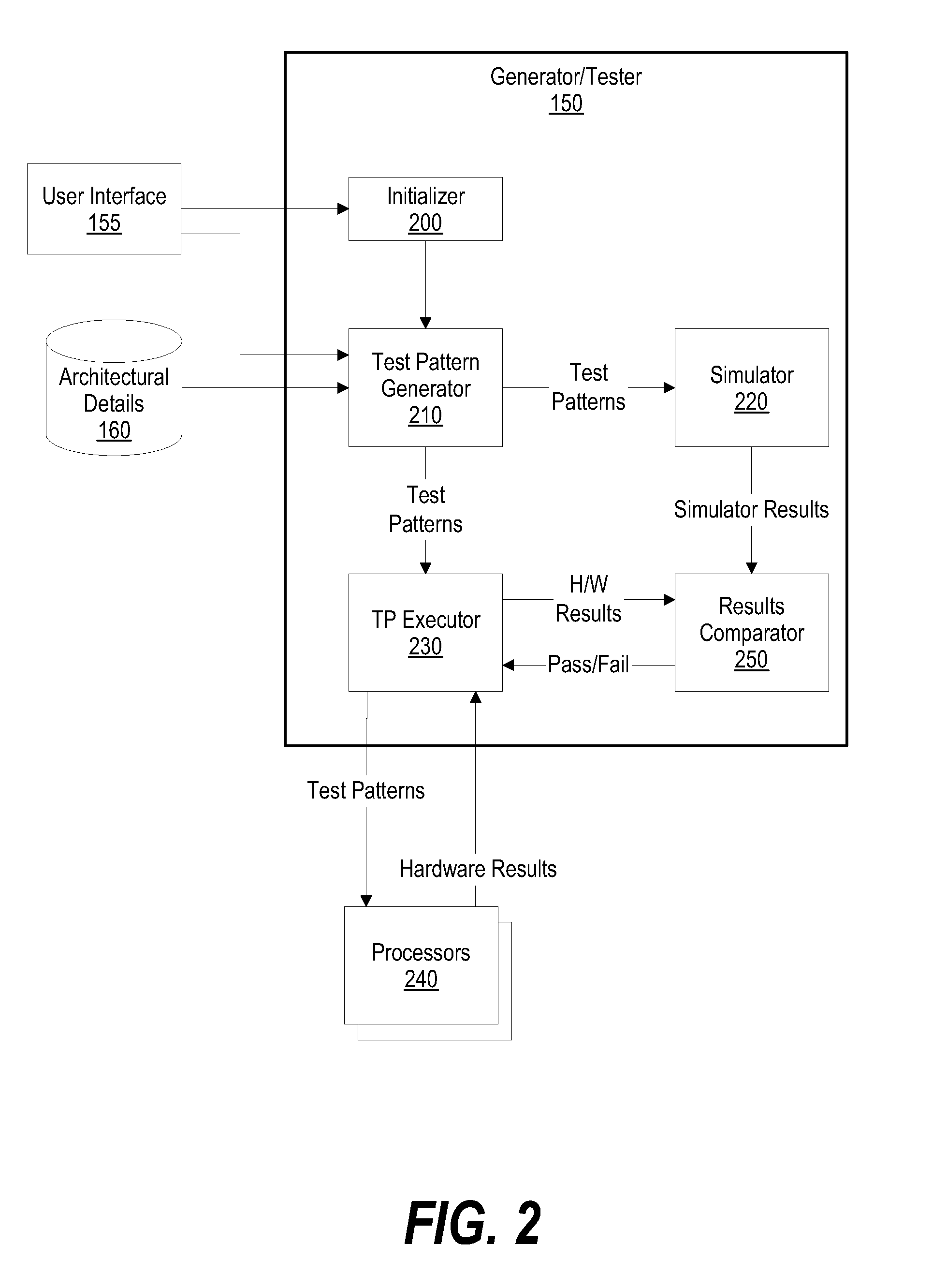

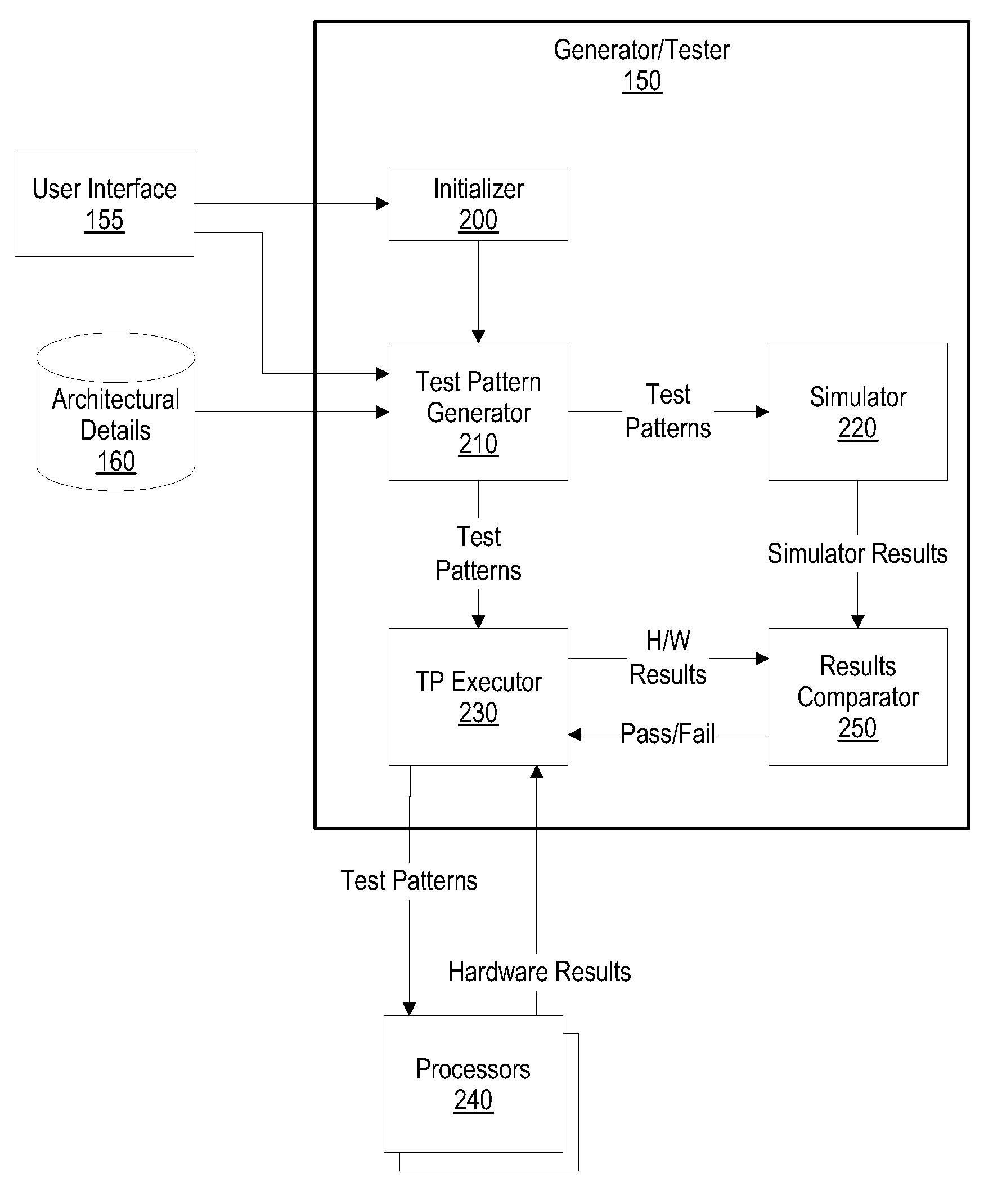

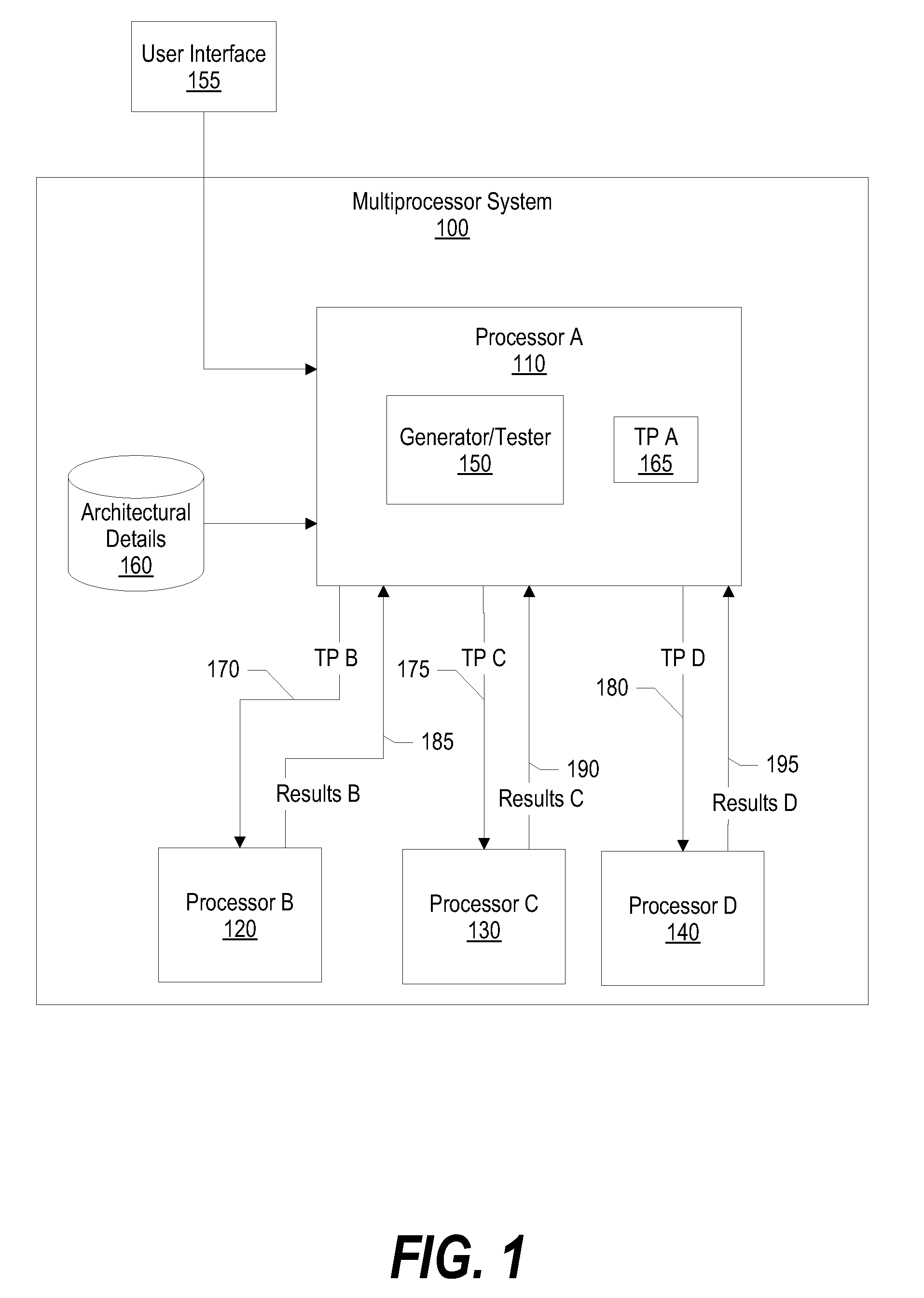

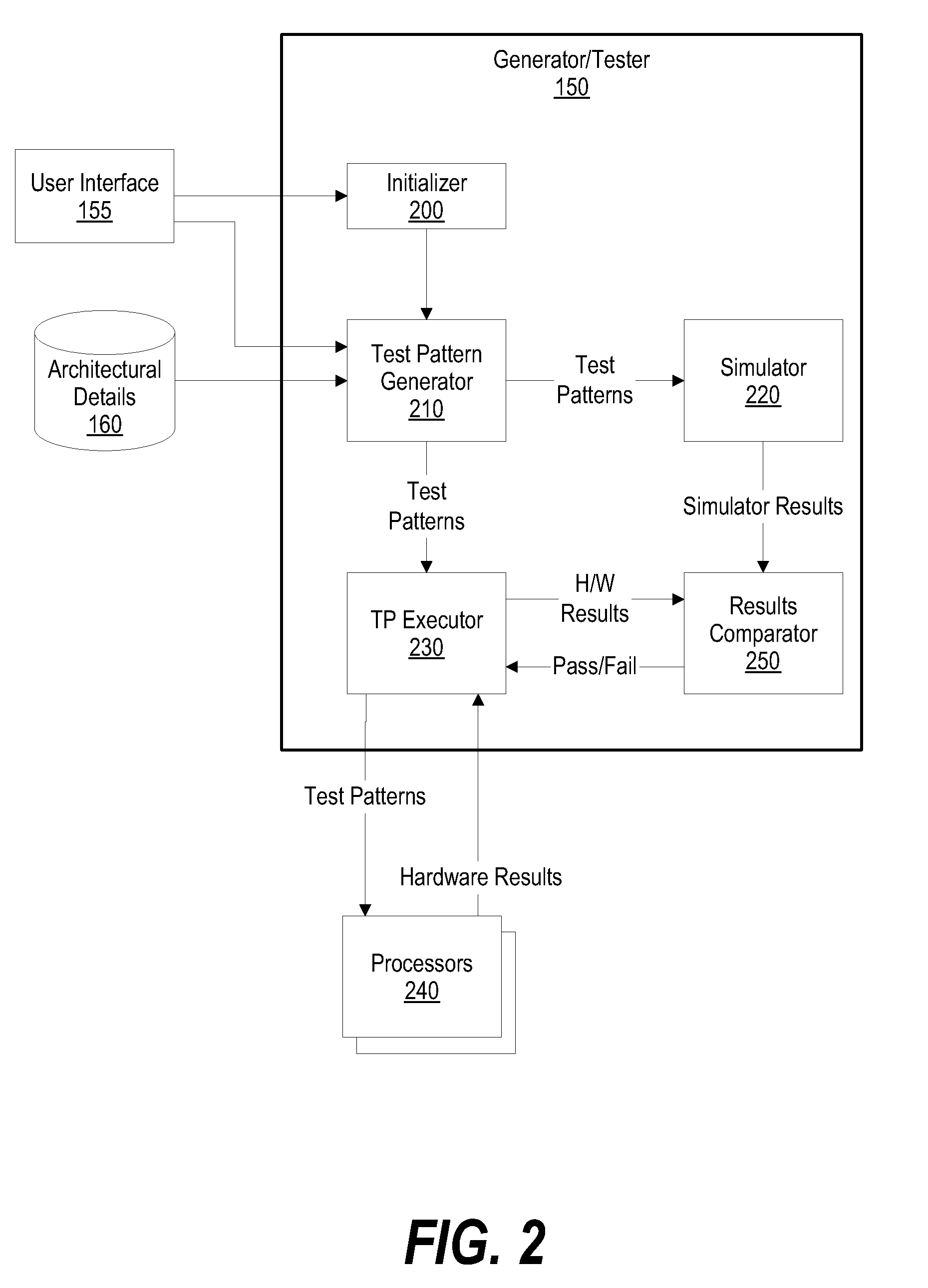

System and Method for Pseudo-Random Test Pattern Memory Allocation for Processor Design Verification and Validation

Inactive | US20090024891A1 | Efficient testing; Electronic circuit testing; Hardware monitoring; Page table; False sharing

A system and method for pseudo-randomly allocating page table memory for test pattern instructions to produce complex test scenarios during processor execution is presented. The invention described herein distributes page table memory across processors and across multiple test patterns, such as when a processor executes “n” test patterns. In addition, the page table memory is allocated using a “true” sharing mode or a “false” sharing mode. The false sharing mode provides flexibility of performing error detection checks on the test pattern results. In addition, since a processor comprises sub units such as a cache, a TLB (translation lookaside buffer), an SLB (segment lookaside buffer), an MMU (memory management unit), and data / instruction pre-fetch engines, the test patterns effectively use the page table memory to test each of the sub units.

Owner:IBM CORP

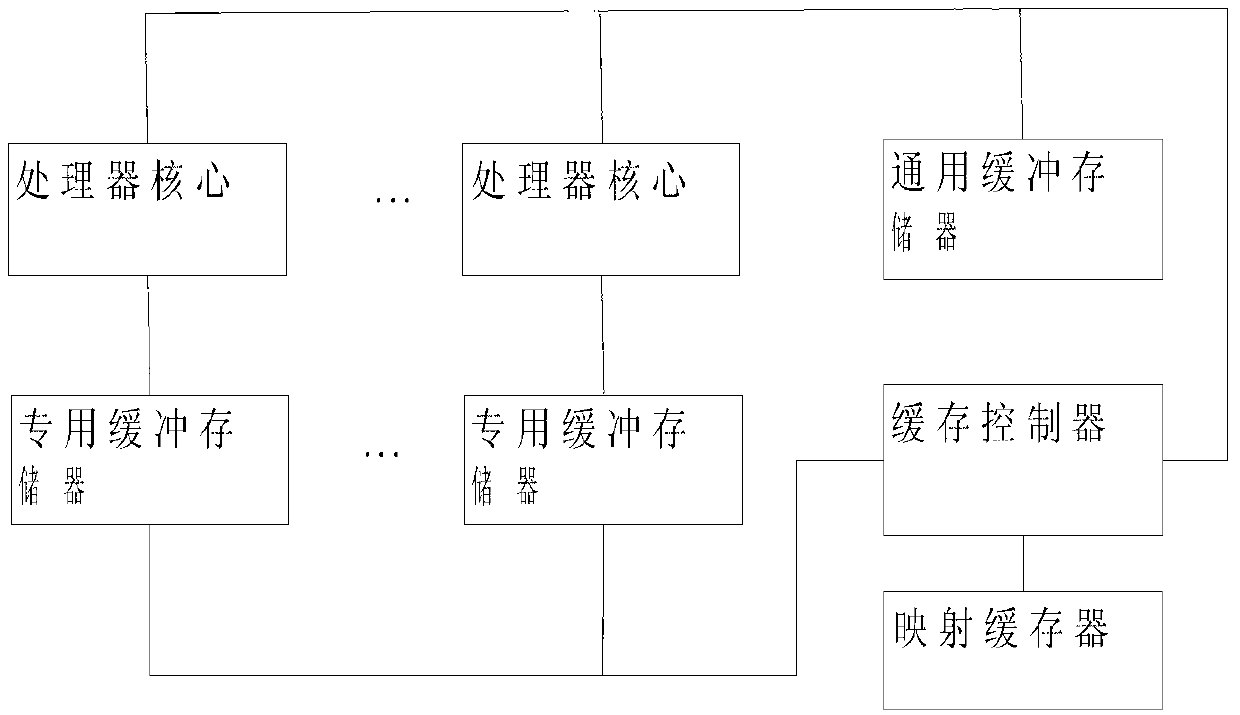

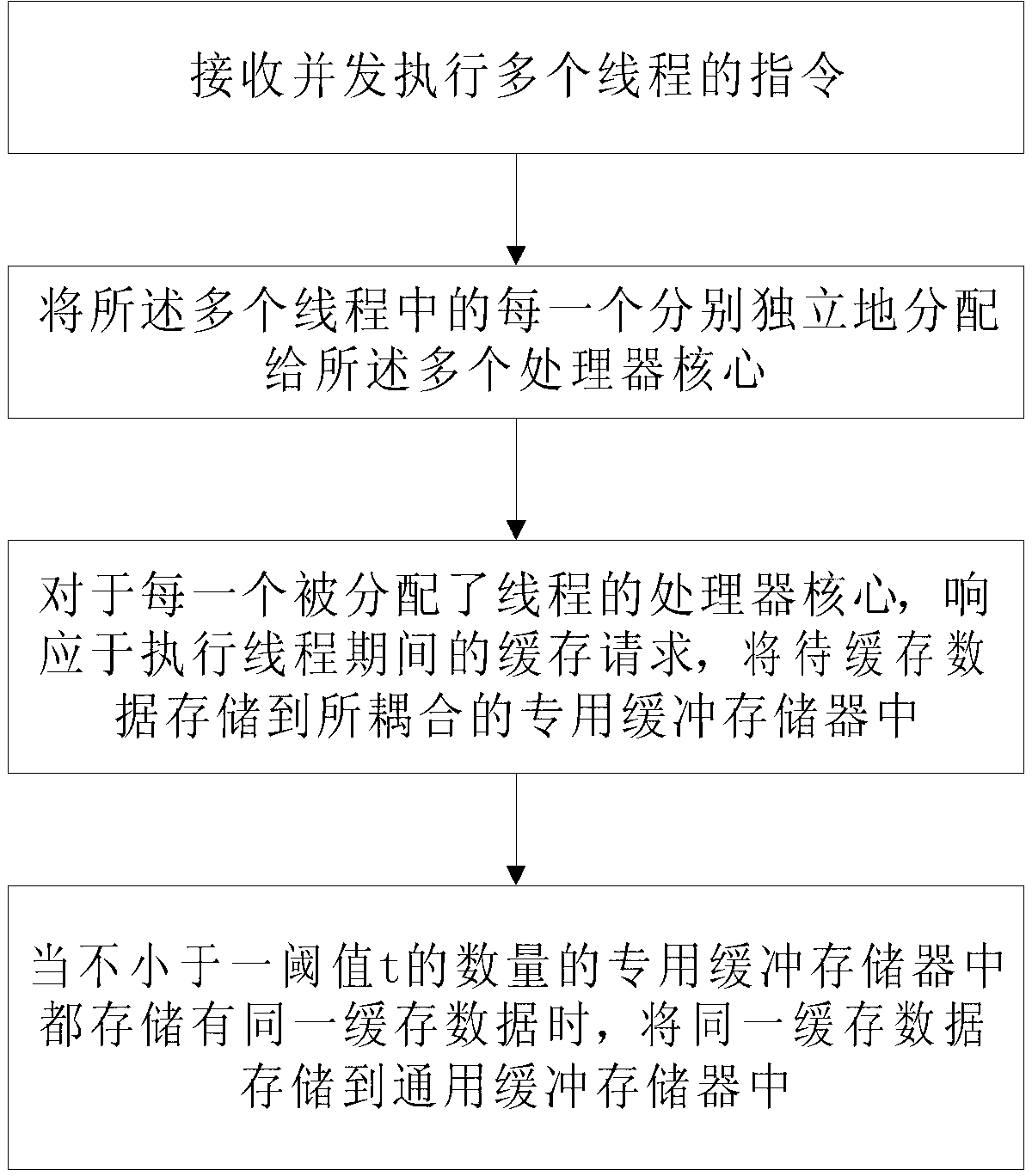

Data buffering method in multi-core processor

Active | CN103345451A | Reduce complexity; Improve efficiency; Memory addressing/allocation/relocation; False sharing; Multi-core processor

The invention provides a data buffering method for a multi-core processor. The method comprises: receiving a command to execute multiple threads concurrently; assigning the threads to separate cores of the processor, with at most one thread per core; while the threads execute, responding to each core's caching requests by storing the cached data in that core's coupled dedicated buffer; and, when at least a threshold number t of dedicated buffers hold the same cached data, storing that data in a general buffer. The method improves cache access and replacement speed and overcomes the problem of false sharing.

Owner:四川千行你我科技股份有限公司
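
The promotion rule in this abstract (store data in a general buffer once at least t dedicated buffers hold it) can be sketched as below. Dictionaries stand in for the per-core dedicated buffers and the general buffer; removing the entry from the dedicated buffers after promotion is an assumption, since the abstract only says the data is stored in the general buffer. The class and method names are hypothetical.

```python
class TwoLevelBuffer:
    """Per-core dedicated buffers plus one general buffer (sketch)."""

    def __init__(self, num_cores, threshold):
        self.dedicated = [{} for _ in range(num_cores)]  # one per core
        self.general = {}                                 # shared by all cores
        self.threshold = threshold                        # the abstract's t

    def cache(self, core, key, value):
        if key in self.general:                # already promoted
            self.general[key] = value
            return
        self.dedicated[core][key] = value
        holders = [d for d in self.dedicated if key in d]
        if len(holders) >= self.threshold:     # t or more cores hold it
            self.general[key] = value
            for d in holders:                  # assumption: drop private copies
                del d[key]

    def lookup(self, core, key):
        if key in self.dedicated[core]:
            return self.dedicated[core][key]
        return self.general.get(key)
```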

Efficient generation of SIMD code in presence of multi-threading and other false sharing conditions and in machines having memory protection support

Inactive | US7730463B2 | General purpose stored program computer; Program control; False sharing; Memory protection

Owner:INT BUSINESS MASCH CORP

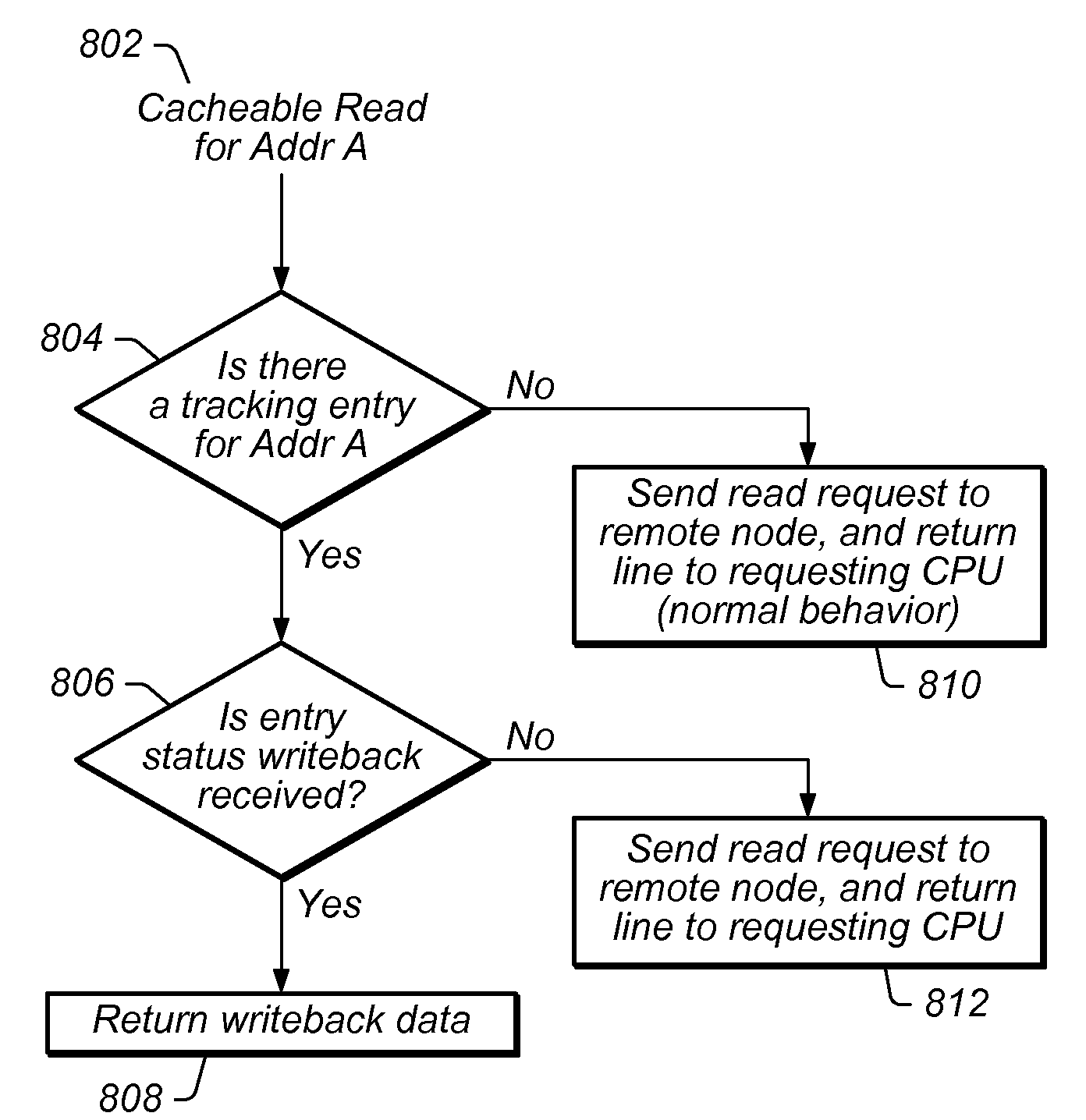

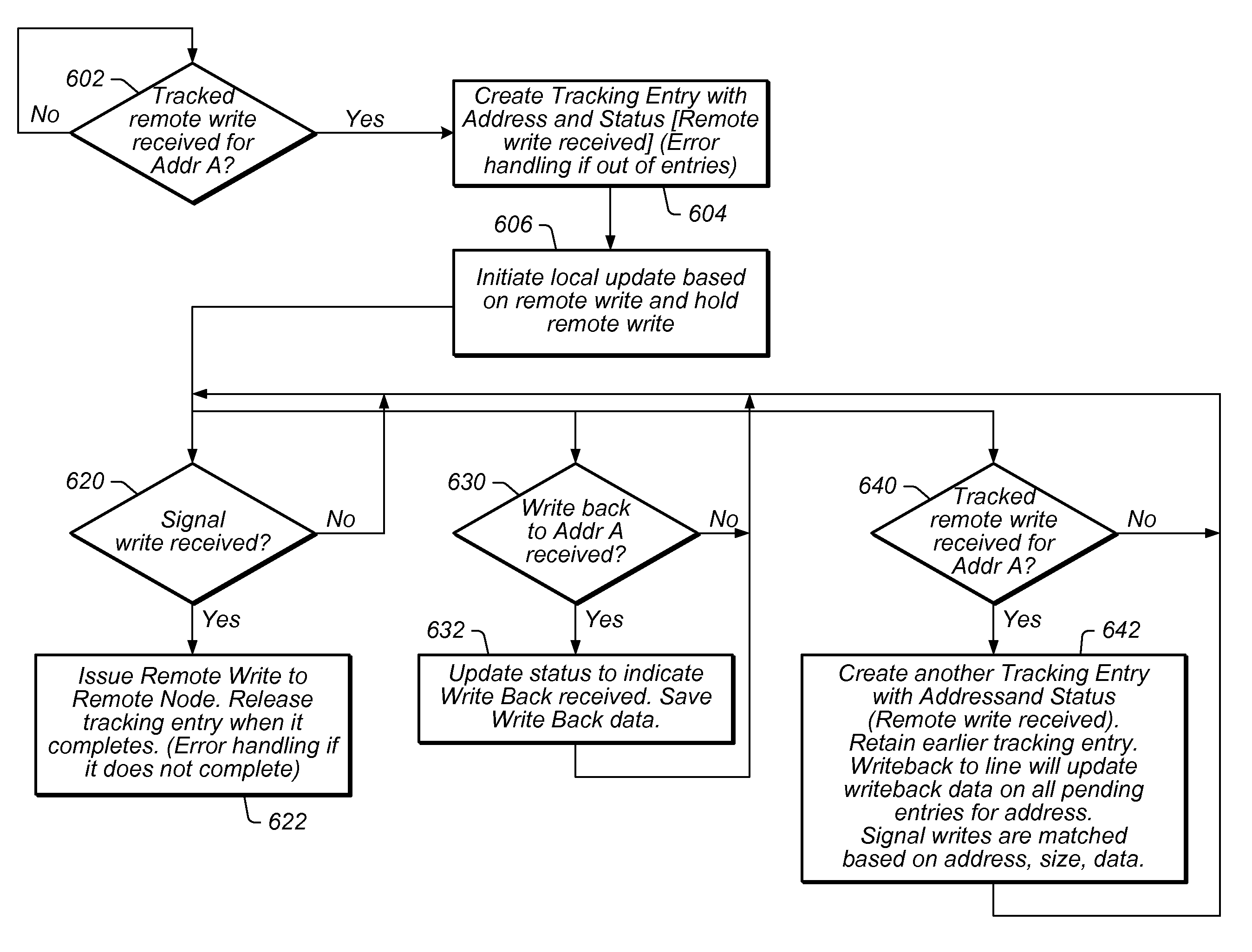

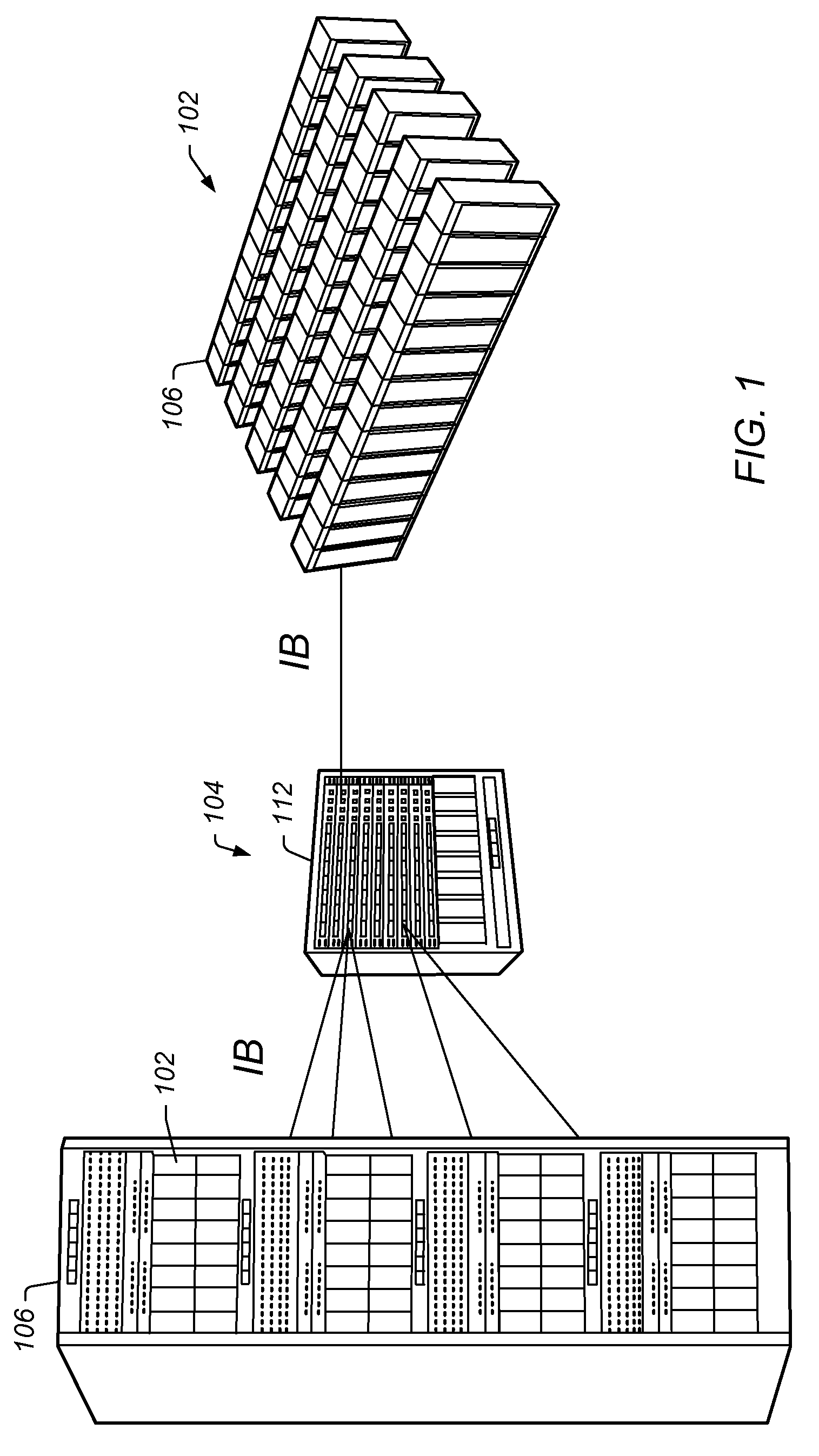

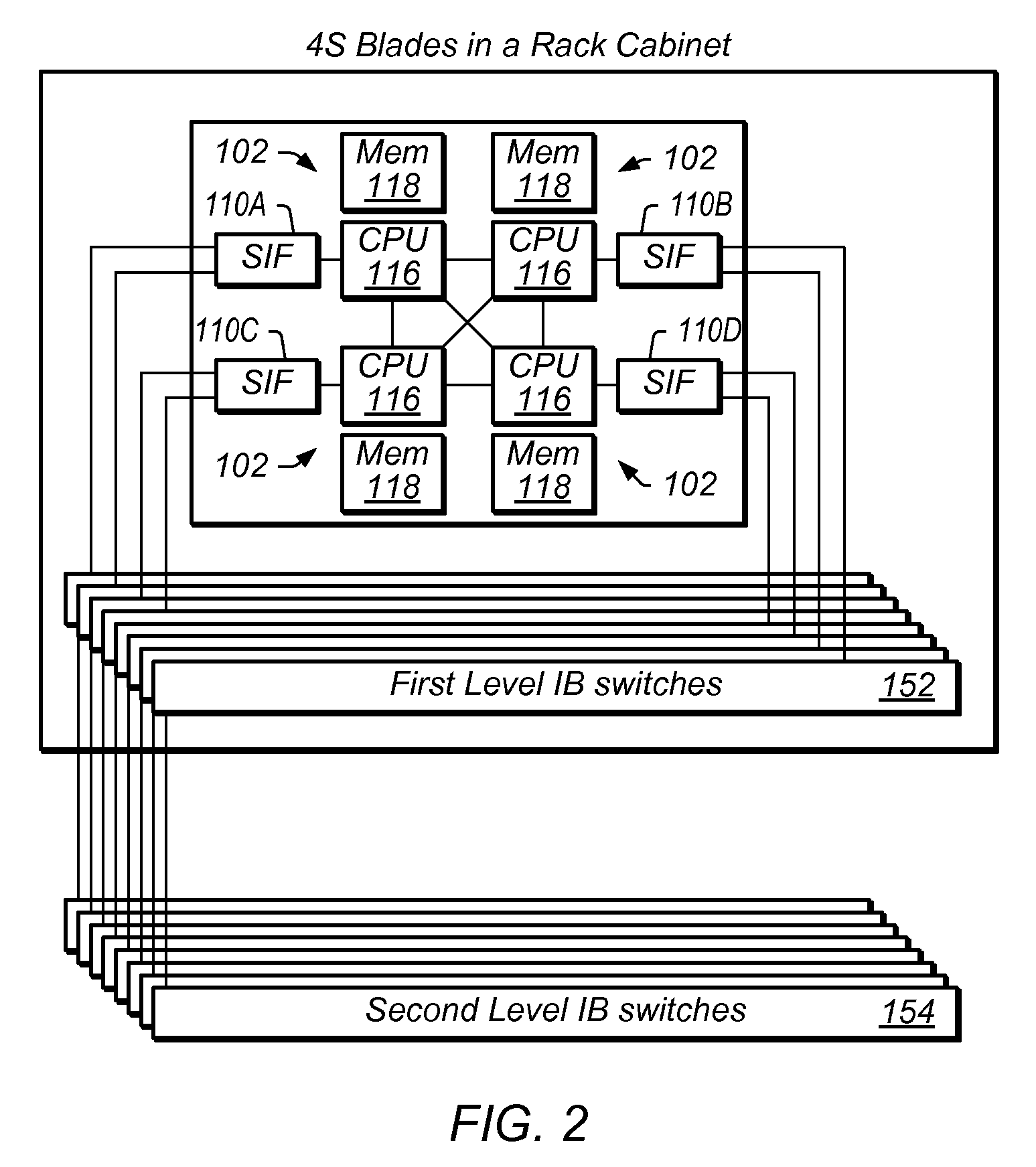

Caching Data in a Cluster Computing System Which Avoids False-Sharing Conflicts

Active | US20100332612A1 | Memory addressing/allocation/relocation; Multiple digital computer combinations; Computerized system; Remote computing

Managing operations in a first compute node of a multi-computer system. A remote write may be received to a first address of a remote compute node. A first data structure entry may be created in a data structure, which may include the first address and status information indicating that the remote write has been received. Upon determining that the local cache of the first compute node has been updated with the remote write, the remote write may be issued to the remote compute node. Accordingly, the first data structure entry may be released upon completion of the remote write.

Owner:ORACLE INT CORP

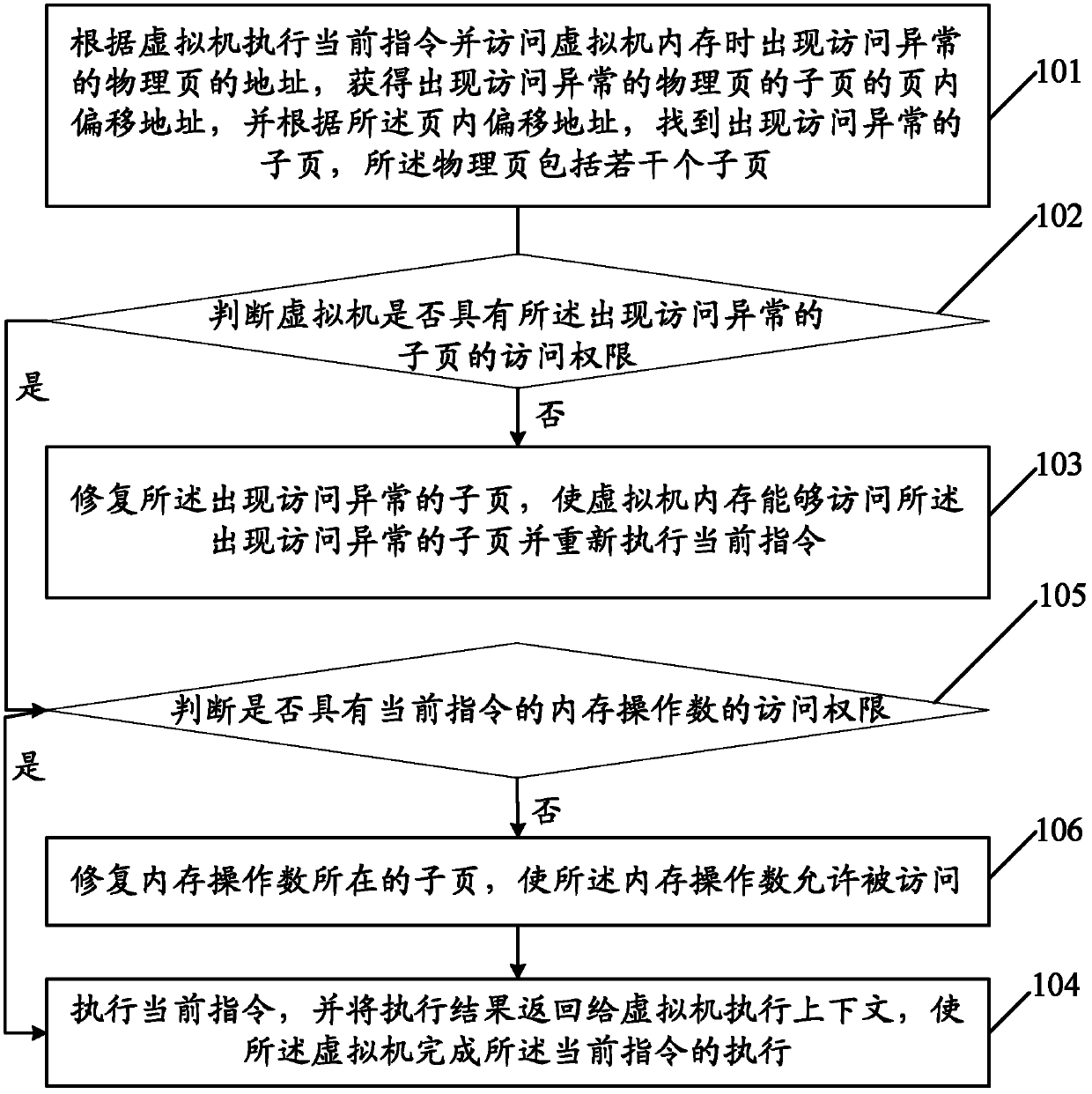

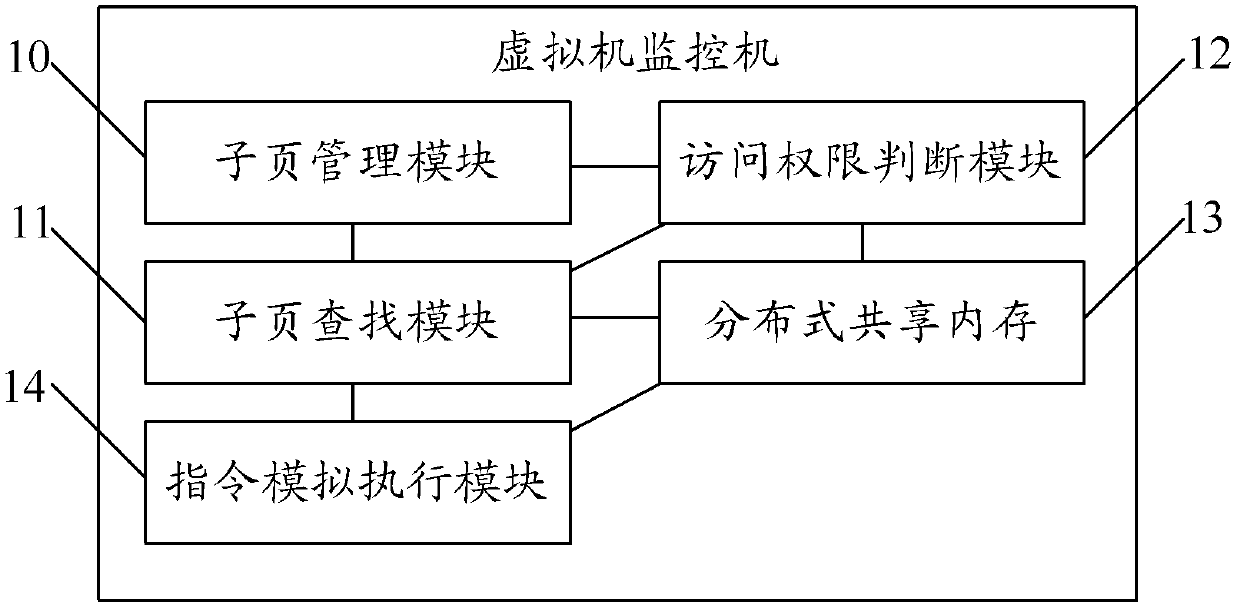

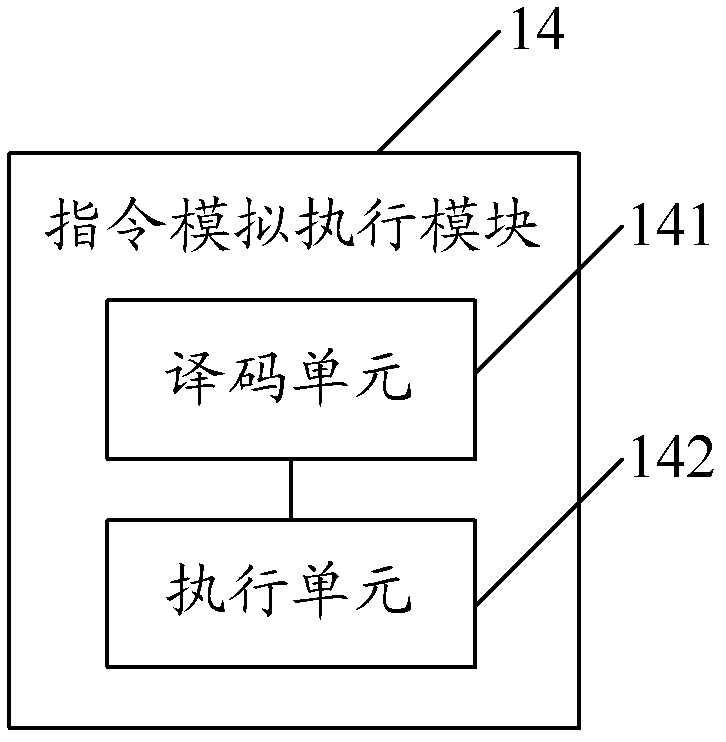

Method for handling access faults in a distributed virtual machine, and virtual machine monitor

Inactive | CN102439567A | Improve performance; Reduce false sharing; Memory architecture accessing/allocation; Input/output to record carriers; Computers technology; False sharing

The embodiment of the invention discloses a method for handling memory access faults in a distributed virtual machine, and a virtual machine monitor, in the field of computer technology. It can reduce the occurrence of false sharing and thereby improve the memory performance of a distributed virtual machine. The method comprises: locating the sub-page on which the access fault occurred, based on the instruction the virtual machine was executing when it accessed the faulting memory and the address of the physical page on which the fault occurred; determining whether the virtual machine holds access permission for that sub-page; if it does not, repairing the sub-page so that the virtual machine can access it, and then re-executing the current instruction; and if it does, executing the current instruction and returning the result to the virtual machine's execution context, so that the virtual machine completes the current instruction.

Owner:HUAWEI TECH CO LTD
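
The first steps of this fault-handling flow (locate the faulting sub-page, then branch on whether this node holds access rights to it) can be sketched as follows. The 4 KiB page size, the 512-byte sub-page size, the `fetch_subpage` callback, and the return labels are hypothetical. Tracking rights per sub-page rather than per page is what lets two nodes write different parts of one page without repeatedly taking it from each other.

```python
PAGE_SIZE = 4096     # assumed guest page size
SUBPAGE_SIZE = 512   # hypothetical sub-page granularity

def faulting_subpage(fault_addr, page_base):
    """Index of the sub-page, within the given page, that raised the fault."""
    offset = fault_addr - page_base
    if not 0 <= offset < PAGE_SIZE:
        raise ValueError("fault address is outside the given page")
    return offset // SUBPAGE_SIZE

def handle_fault(fault_addr, page_base, owned_subpages, fetch_subpage):
    """Decide how to resolve an access fault (sketch).

    owned_subpages: set of (page_base, index) pairs this node may access.
    fetch_subpage: hypothetical callback that obtains the sub-page contents
    and access rights from whichever node currently holds them.
    Returns "retry" when the sub-page was repaired and the instruction should
    be re-executed, or "emulate" when the monitor should execute it and hand
    the result back to the guest context.
    """
    index = faulting_subpage(fault_addr, page_base)
    if (page_base, index) not in owned_subpages:
        fetch_subpage(page_base, index)
        owned_subpages.add((page_base, index))
        return "retry"
    return "emulate"
```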

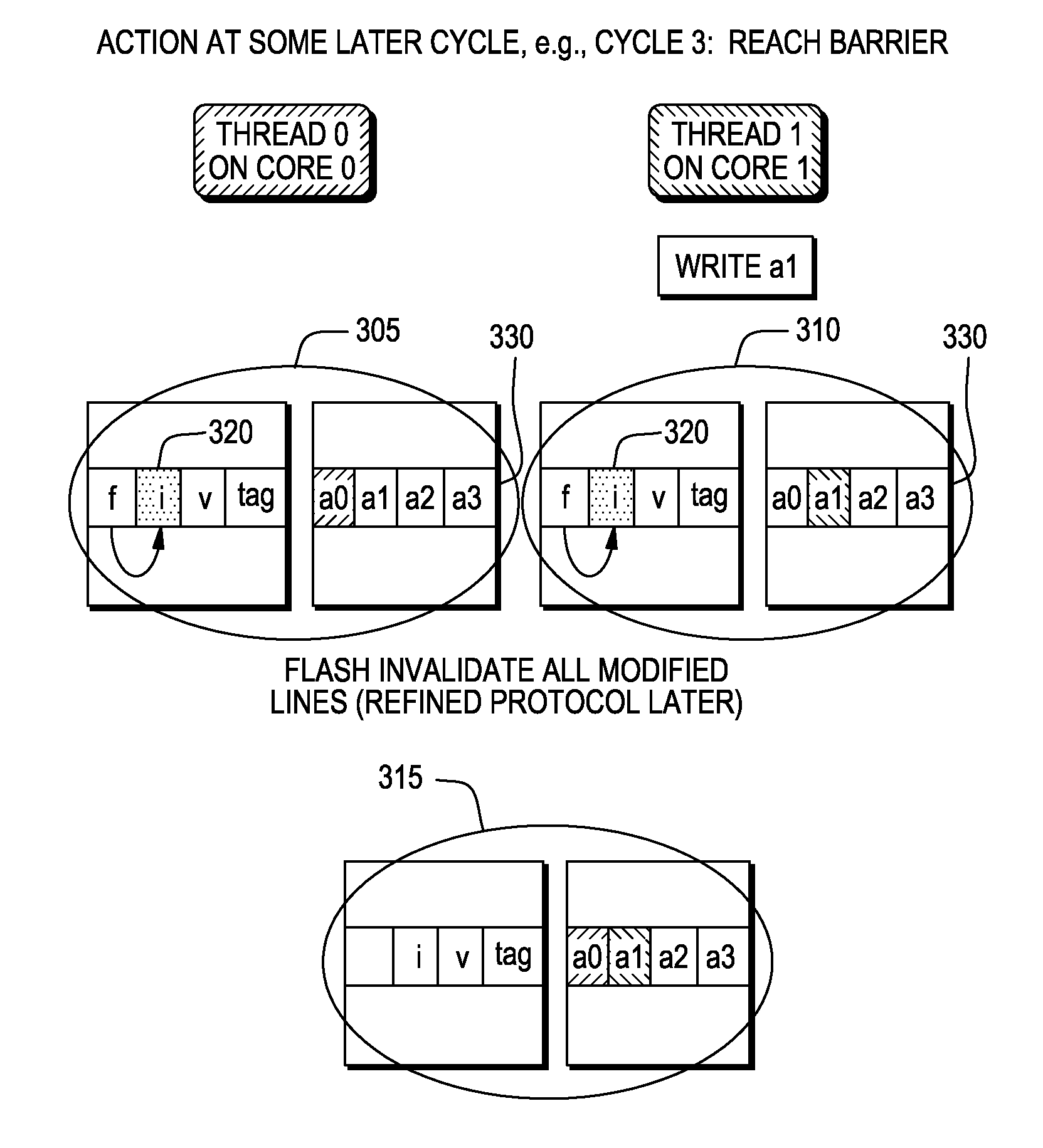

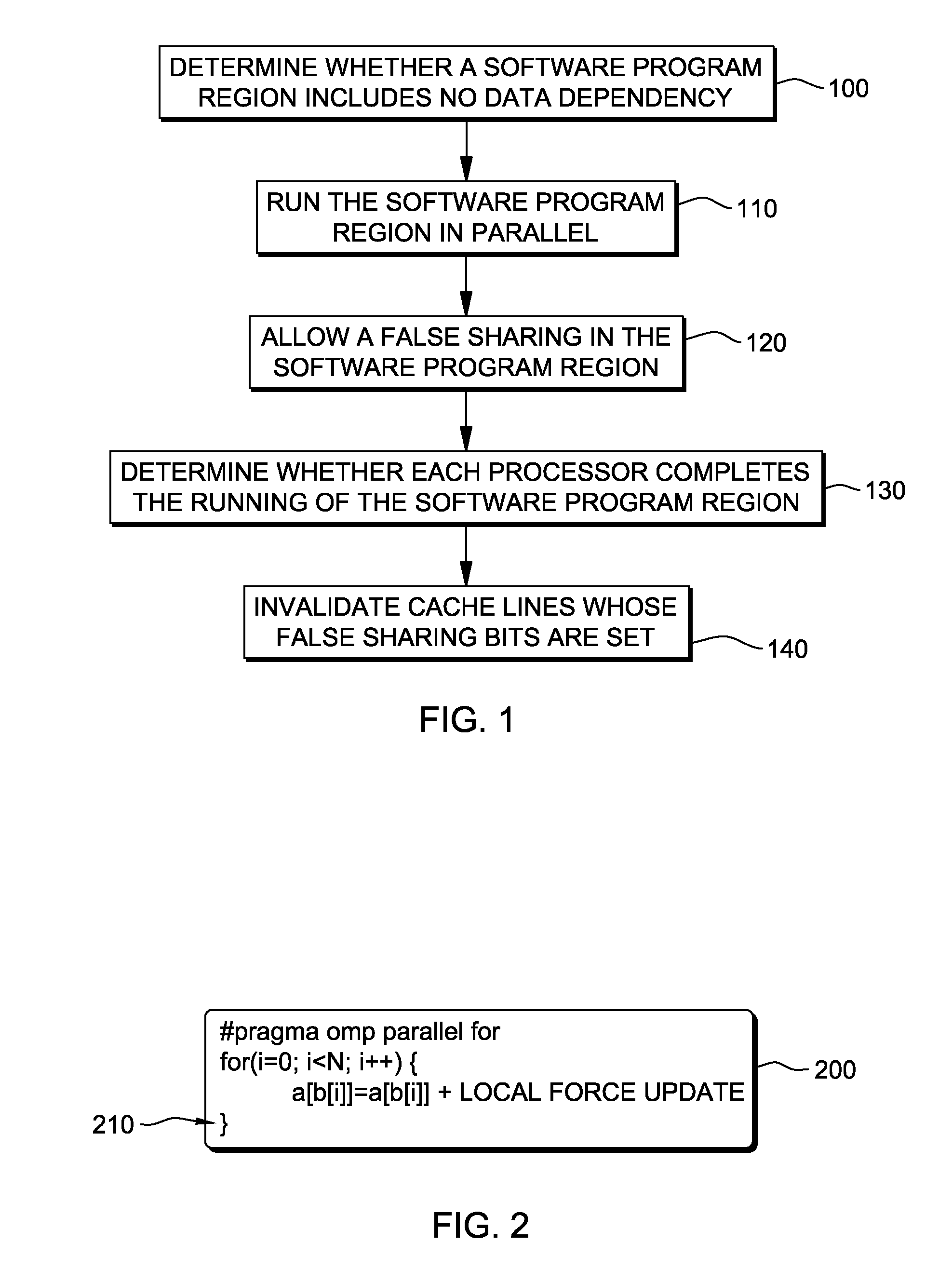

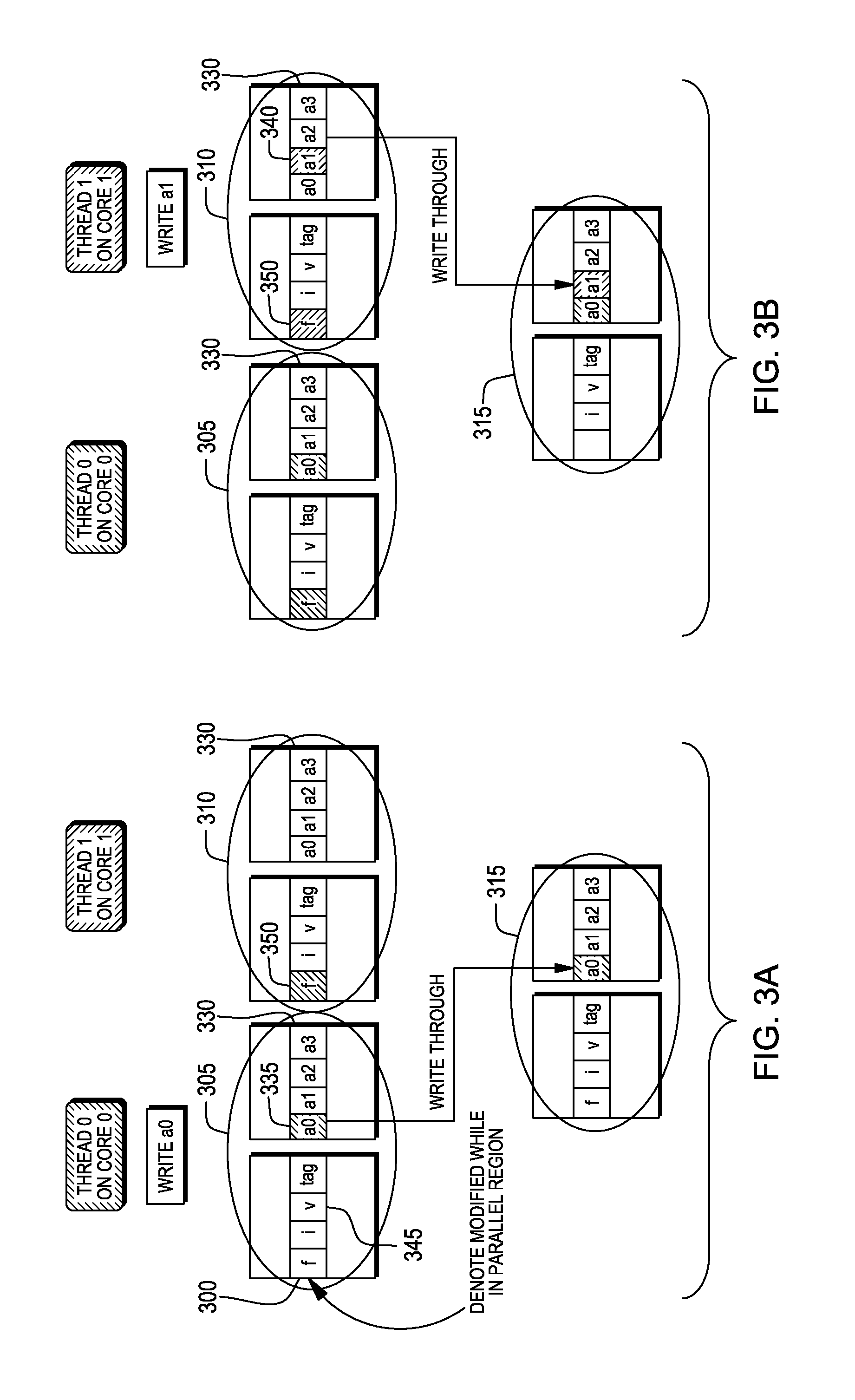

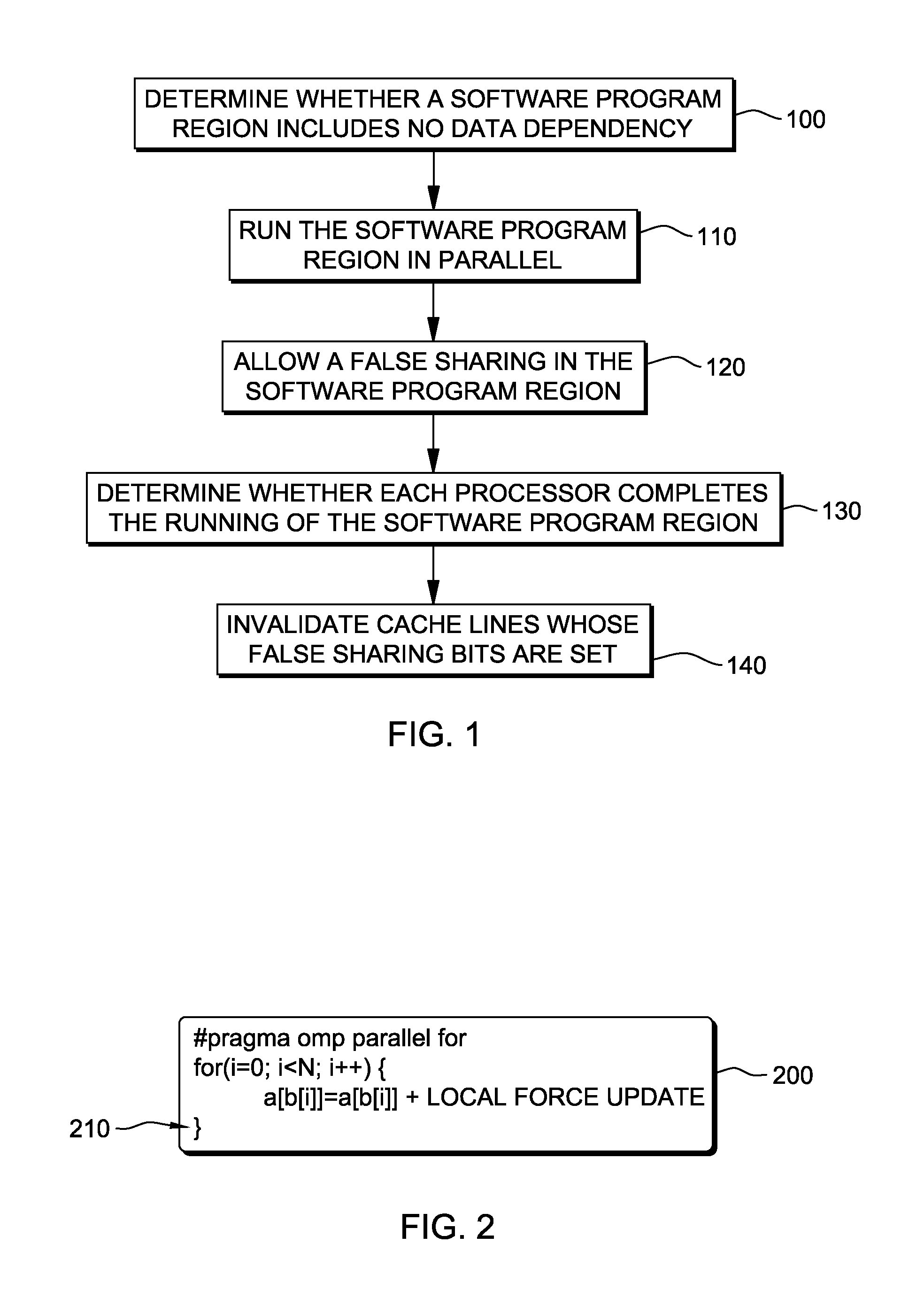

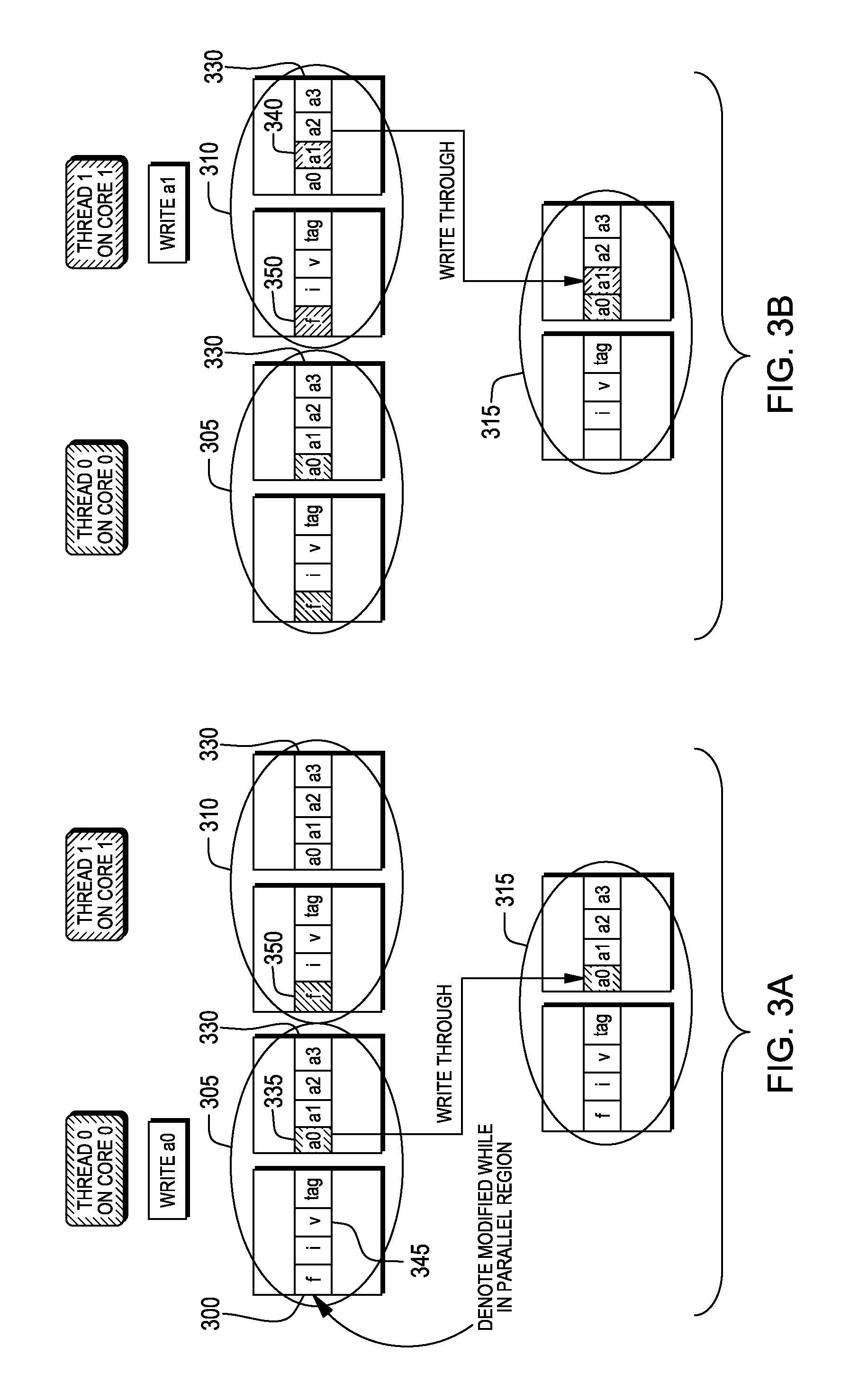

Write-through cache optimized for dependence-free parallel regions

Inactive | US20120210073A1 | Improve parallelism; Memory addressing/allocation/relocation; Parallel computing; Computing systems

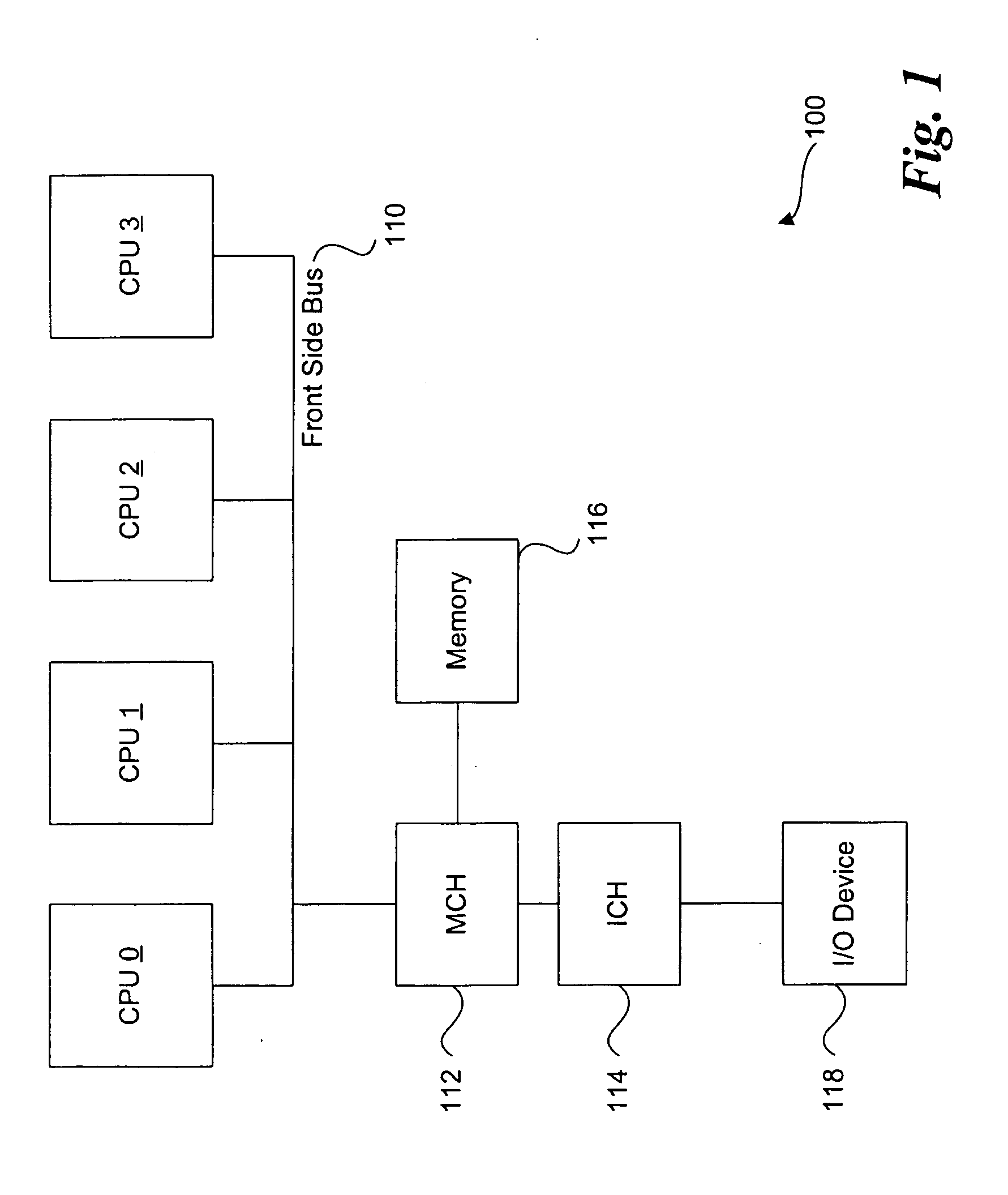

An apparatus, method and computer program product for improving performance of a parallel computing system. A first hardware local cache controller associated with a first local cache memory device of a first processor detects an occurrence of a false sharing of a first cache line by a second processor running the program code and allows the false sharing of the first cache line by the second processor. The false sharing of the first cache line occurs upon the updating of a first portion of the first cache line in the first local cache memory device by the first hardware local cache controller and the subsequent updating of a second portion of the first cache line in a second local cache memory device by a second hardware local cache controller.

Owner:IBM CORP
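
The abstract does not say how the two partial updates are reconciled when false sharing is allowed. One common technique, shown here as an assumption rather than as IBM's design, is a per-byte dirty mask: each private copy of the line writes back only the bytes it changed, so two caches that update disjoint parts of a falsely shared line do not overwrite each other. The 64-byte line and the class and method names are hypothetical.

```python
LINE = 64  # assumed cache-line size in bytes

class LocalLine:
    """One core's private copy of a cache line with a per-byte dirty mask."""

    def __init__(self, memory_line):
        self.data = bytearray(memory_line)   # local copy of the line
        self.dirty = [False] * LINE

    def write(self, offset, payload):
        self.data[offset:offset + len(payload)] = payload
        for i in range(offset, offset + len(payload)):
            self.dirty[i] = True

    def write_back_dirty(self, memory_line):
        # Copy back only the bytes this core changed, so another core's
        # update to other bytes of the same line is preserved.
        for i, is_dirty in enumerate(self.dirty):
            if is_dirty:
                memory_line[i] = self.data[i]
        self.dirty = [False] * LINE

memory = bytearray(LINE)
core0, core1 = LocalLine(memory), LocalLine(memory)
core0.write(0, b"\x01\x02")   # core 0 updates bytes 0-1
core1.write(32, b"\x09")      # core 1 updates byte 32 of the same line
core0.write_back_dirty(memory)
core1.write_back_dirty(memory)
print(memory[0], memory[1], memory[32])  # 1 2 9
```

Had each core written back its whole line instead, core 1's copy would have reset bytes 0 and 1 to zero.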

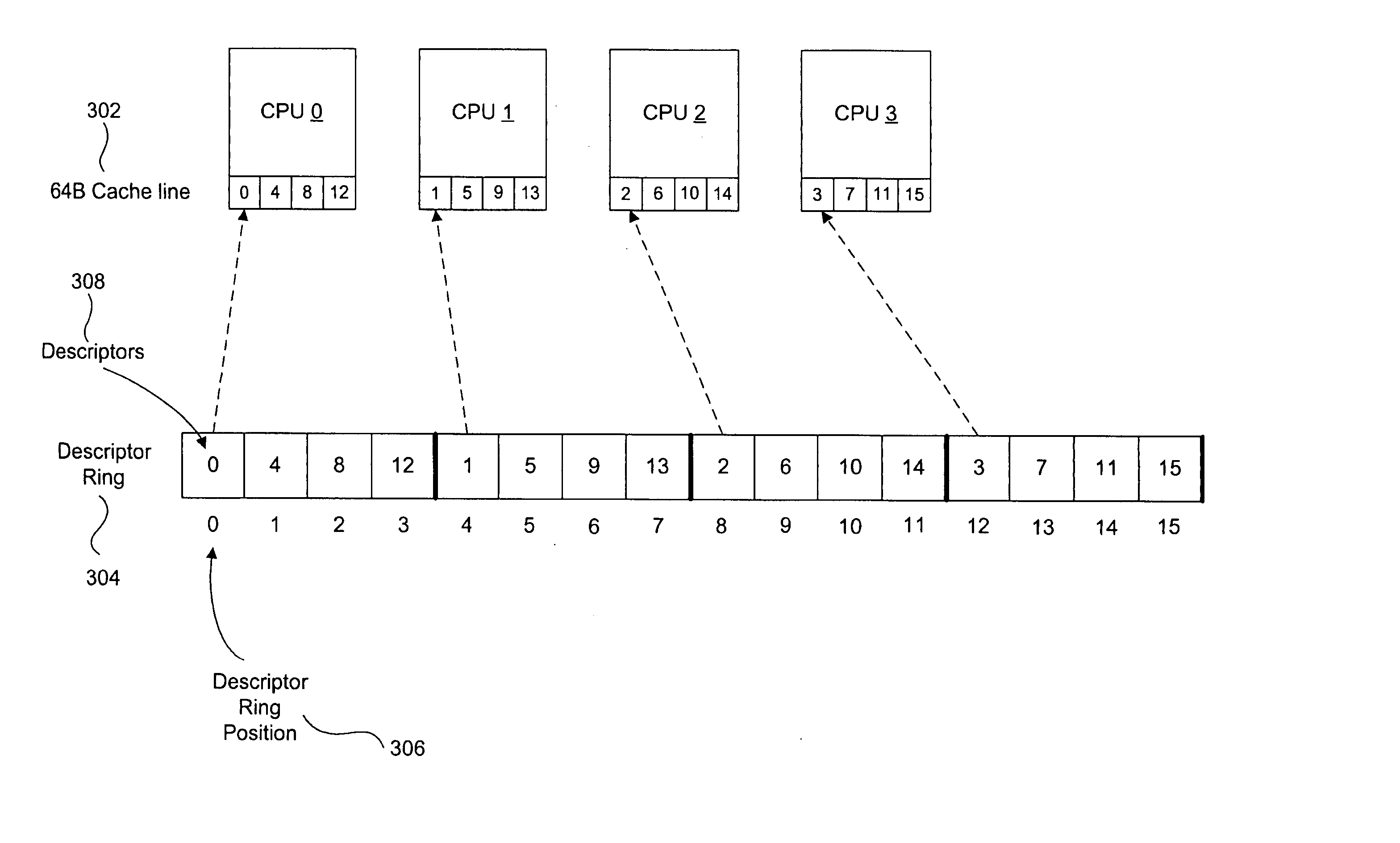

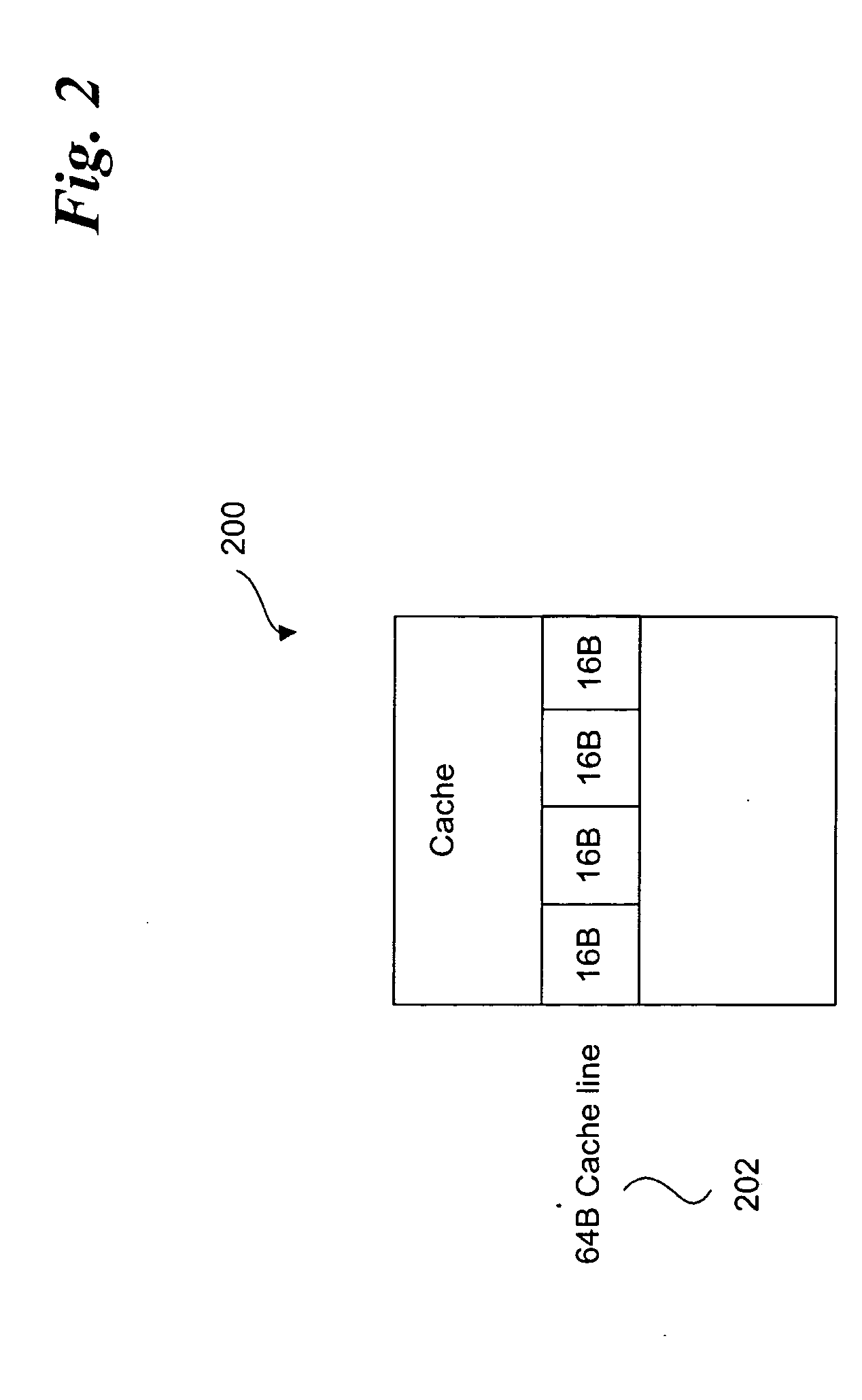

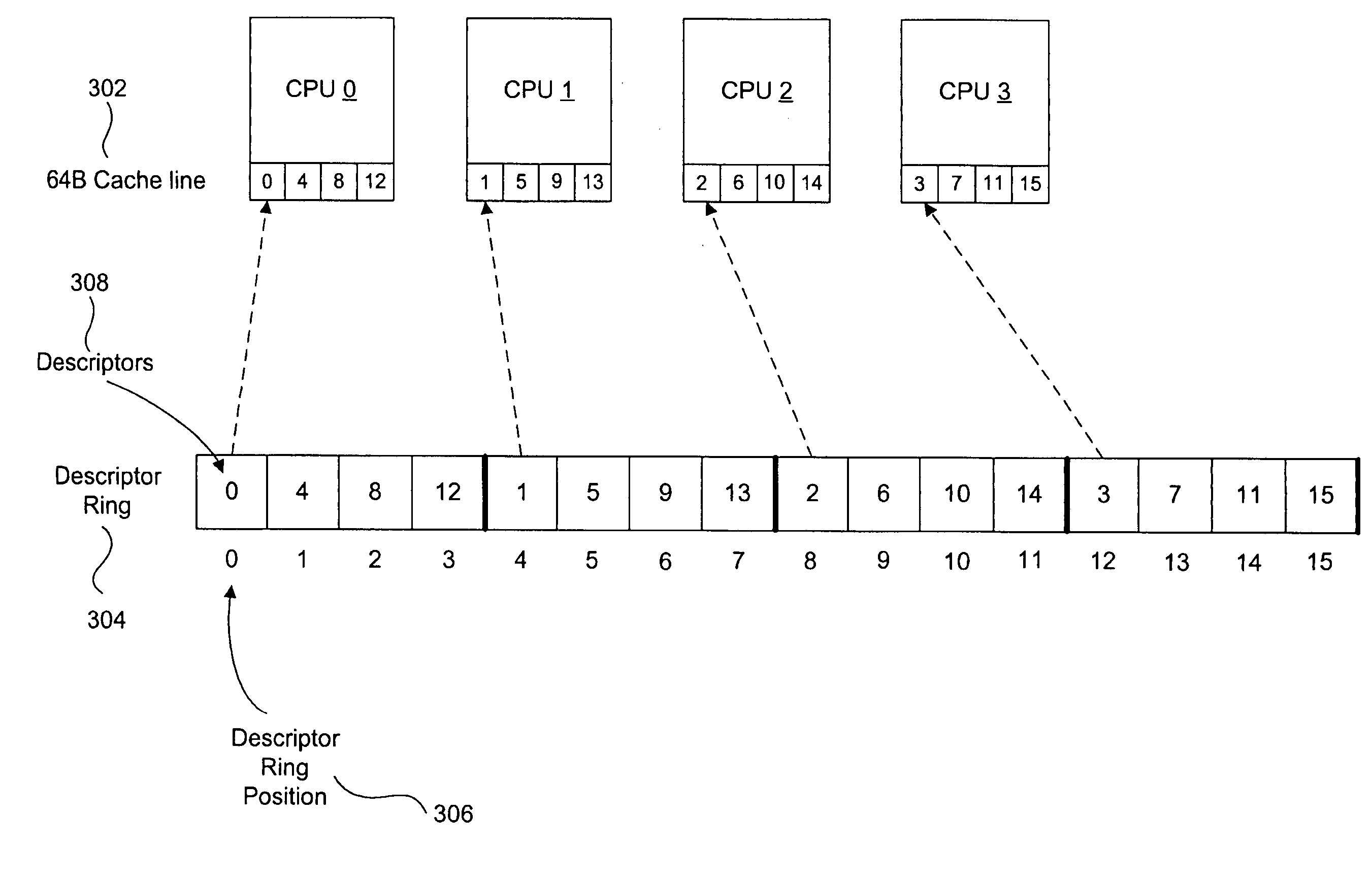

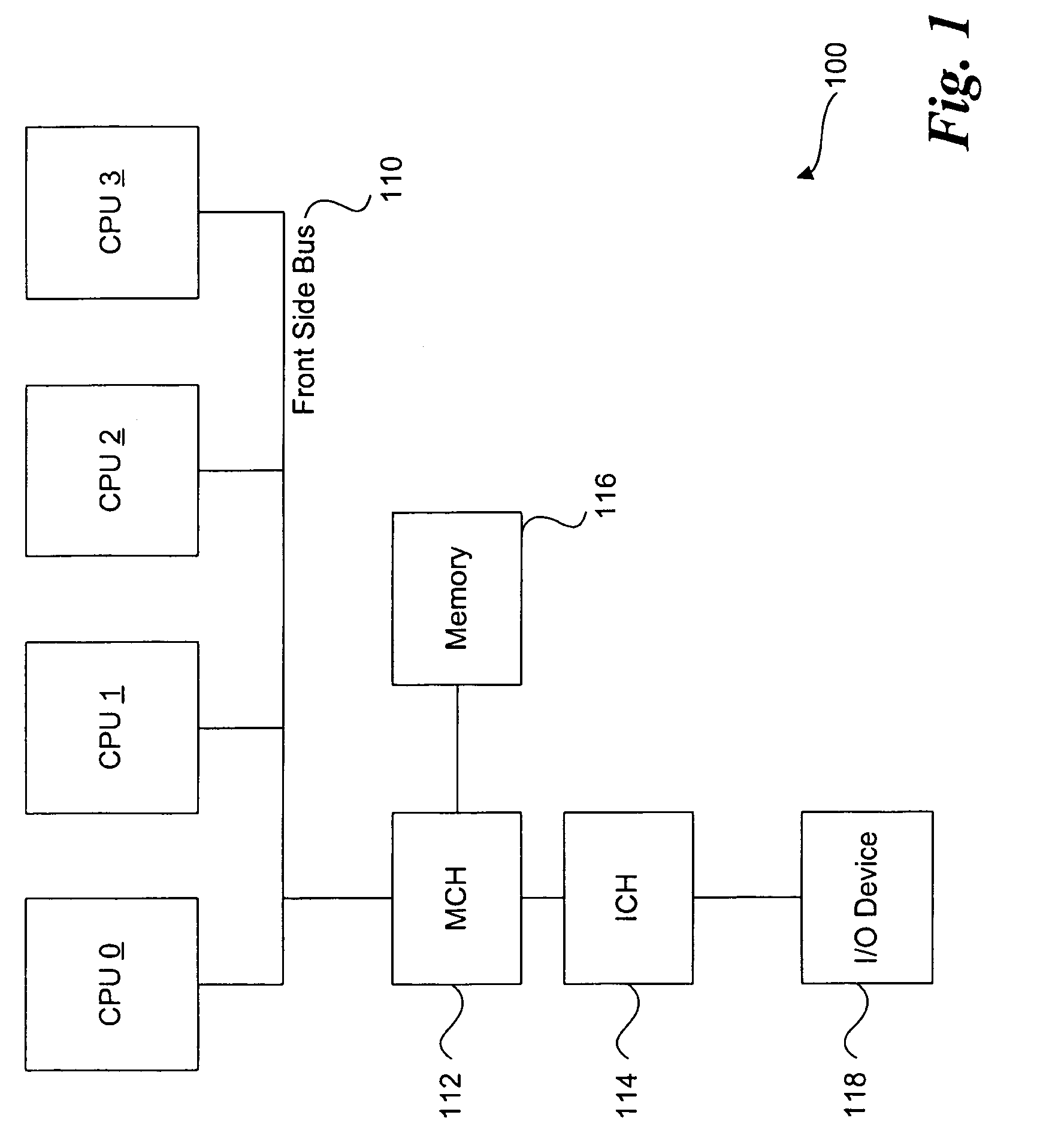

Striping across multiple cache lines to prevent false sharing

A method and system for striping across multiple cache lines to prevent false sharing. A first descriptor to correspond to a first data block is created. The first descriptor is placed in a descriptor ring according to a striping policy to prevent false sharing of a cache line of the computer system.

Owner:INTEL CORP
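
One way to realize a striping policy, sketched under assumptions (16-byte descriptors, 64-byte cache lines, and a ring size that is a multiple of the descriptors per line), is to place consecutive descriptors in different cache lines, so the descriptor a producer is filling and the one a consumer is reading rarely share a line. The function name and this particular interleaving are hypothetical; the abstract does not specify them.

```python
def striped_slot(seq, ring_size, desc_size=16, line_size=64):
    """Ring slot for the seq-th descriptor under a striping policy (sketch)."""
    per_line = line_size // desc_size        # descriptors that fit in one line
    if per_line == 0 or ring_size % per_line:
        raise ValueError("ring_size must be a multiple of descriptors per line")
    lines = ring_size // per_line            # cache lines spanned by the ring
    i = seq % ring_size                      # position in the logical sequence
    # Walk across lines first, then down the slots within each line.
    return (i % lines) * per_line + (i // lines)

print([striped_slot(s, 16) for s in range(8)])  # [0, 4, 8, 12, 1, 5, 9, 13]
```

The mapping is a permutation of the ring's slots, so every slot is still used exactly once per lap.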

Apparatus and method for detecting false sharing

Inactive | US20120089785A1 | Program synchronisation; Error detection/correction; Artificial intelligence; False sharing

A false sharing detecting apparatus for analyzing a multi-thread application, the false sharing detecting apparatus includes an operation set detecting unit configured to detect an operation set having a chance of causing performance degradation due to false sharing, and a probability calculation unit configured to calculate a first probability defined as a probability that the detected operation set is to be executed according to an execution pattern causing performance degradation due to false sharing, and calculate a second probability based on the calculated first probability. The second probability is defined as a probability that performance degradation due to false sharing occurs with respect to an operation included in the detected operation set.

Owner:SAMSUNG ELECTRONICS CO LTD

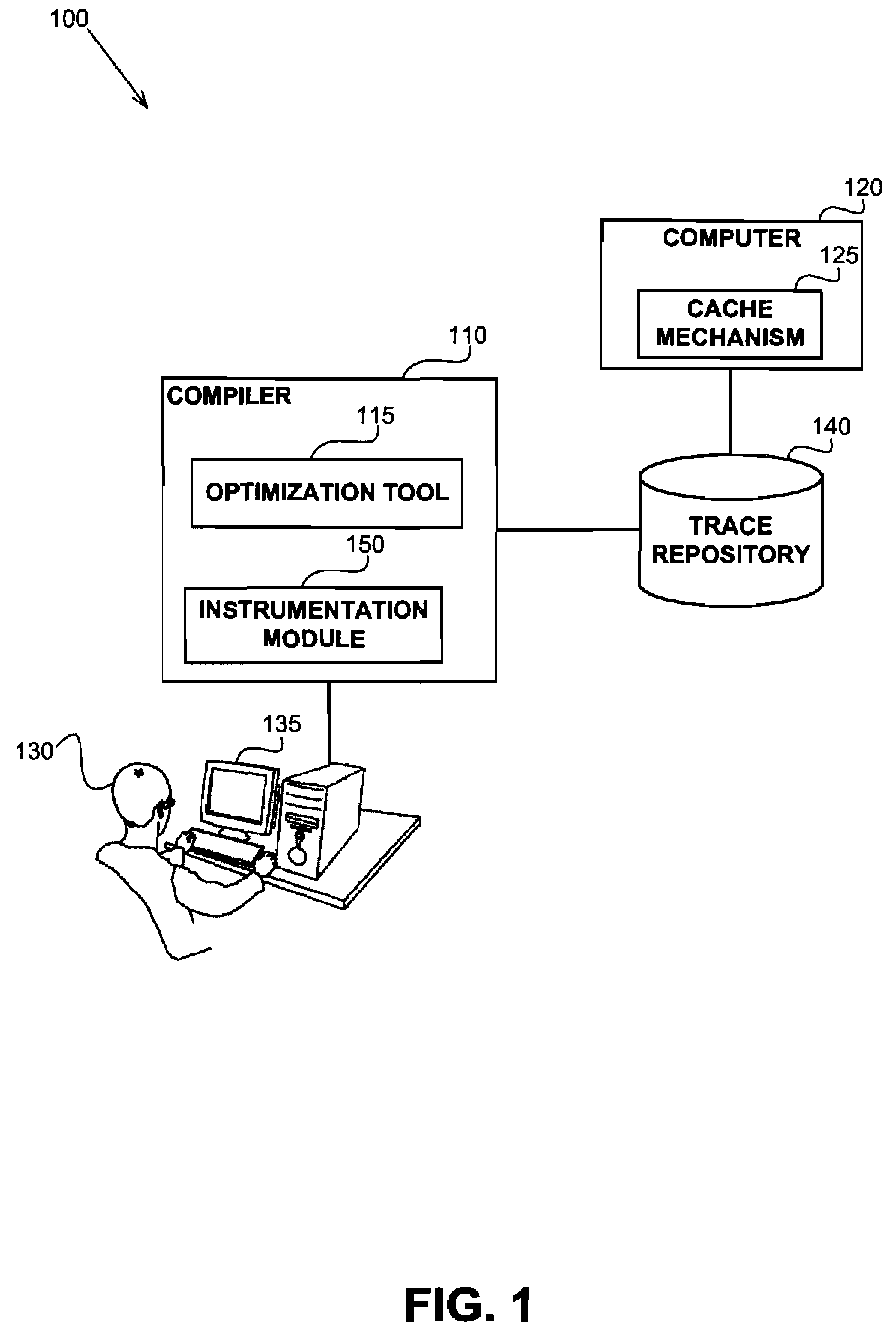

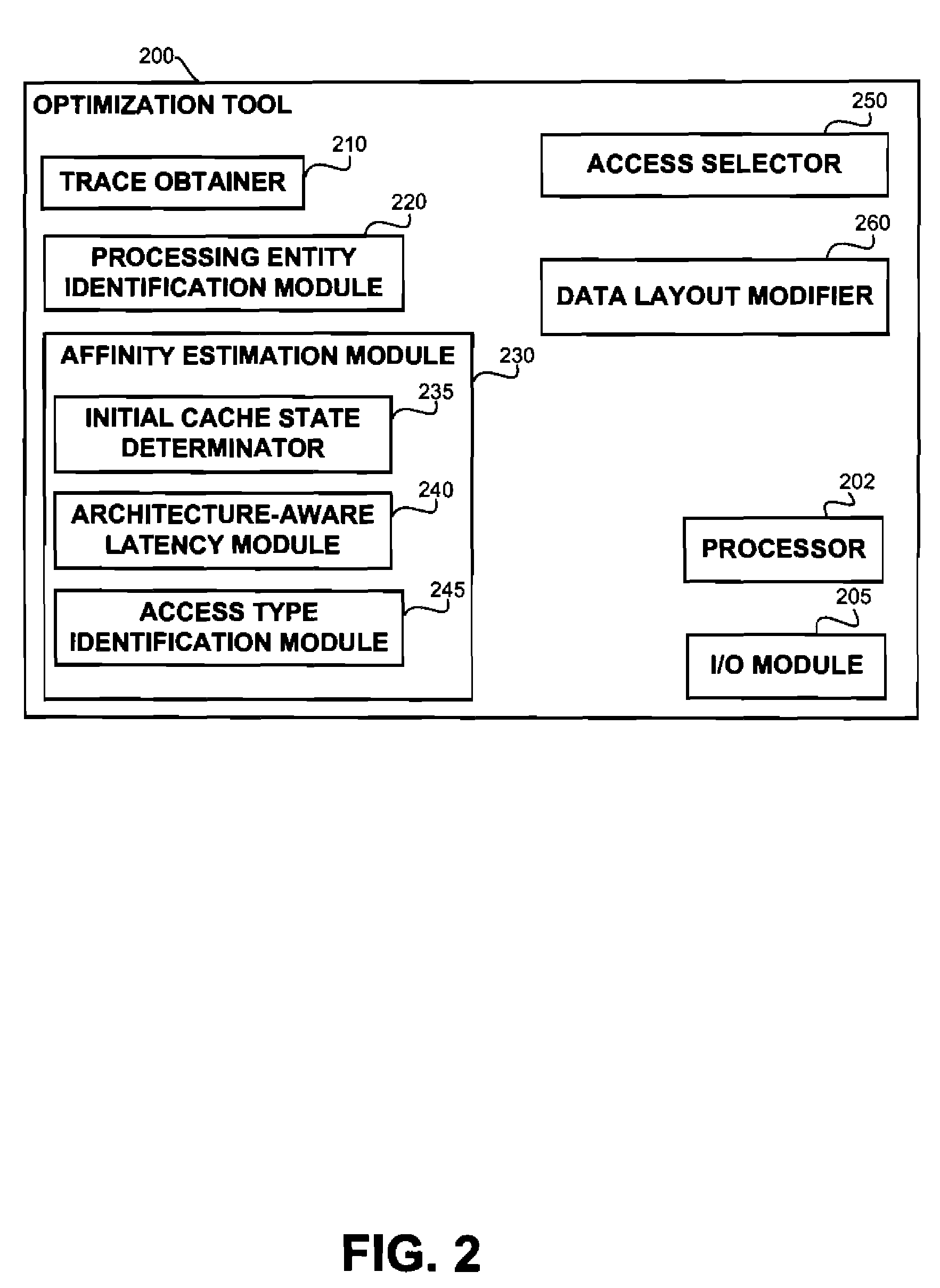

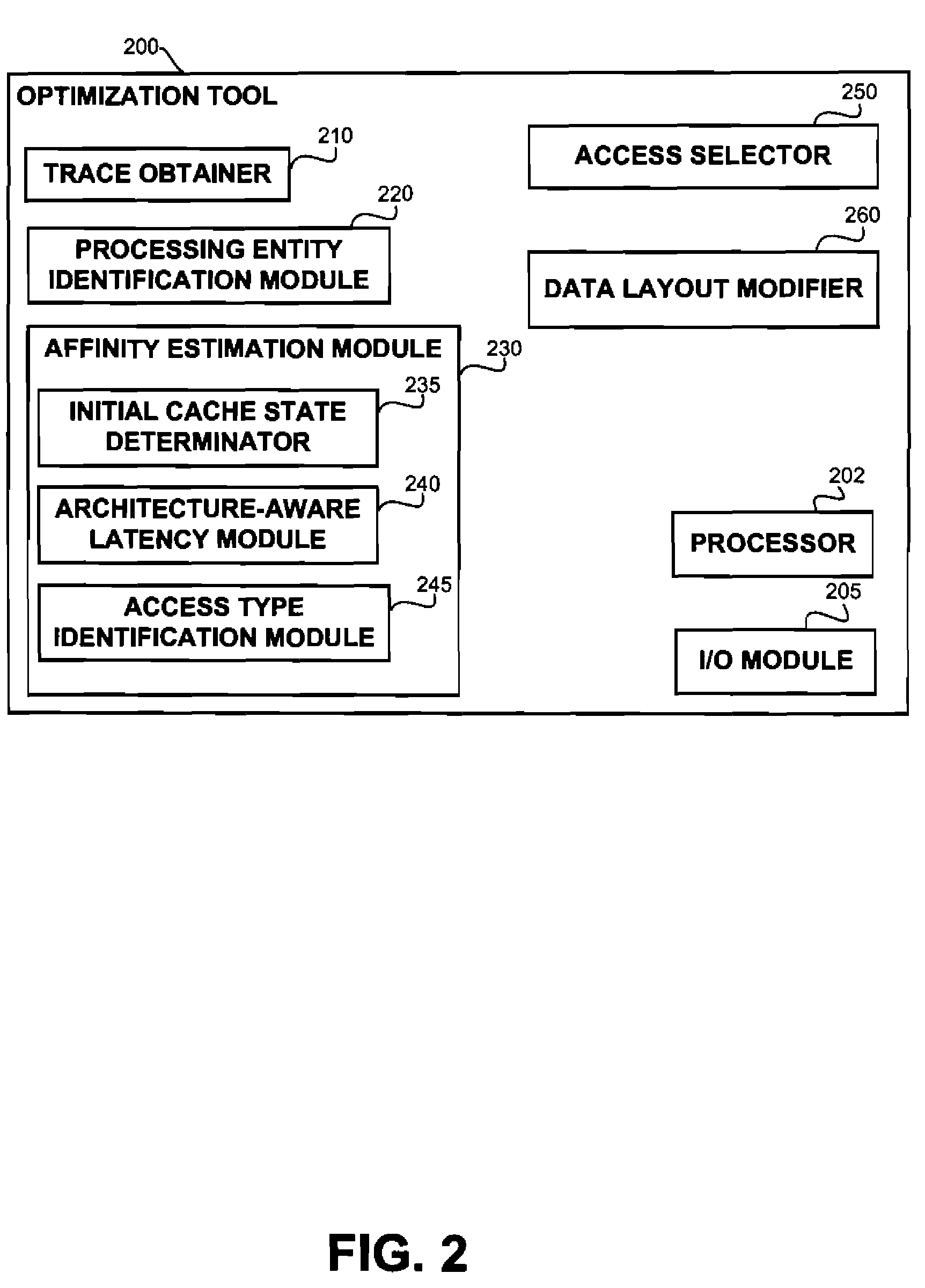

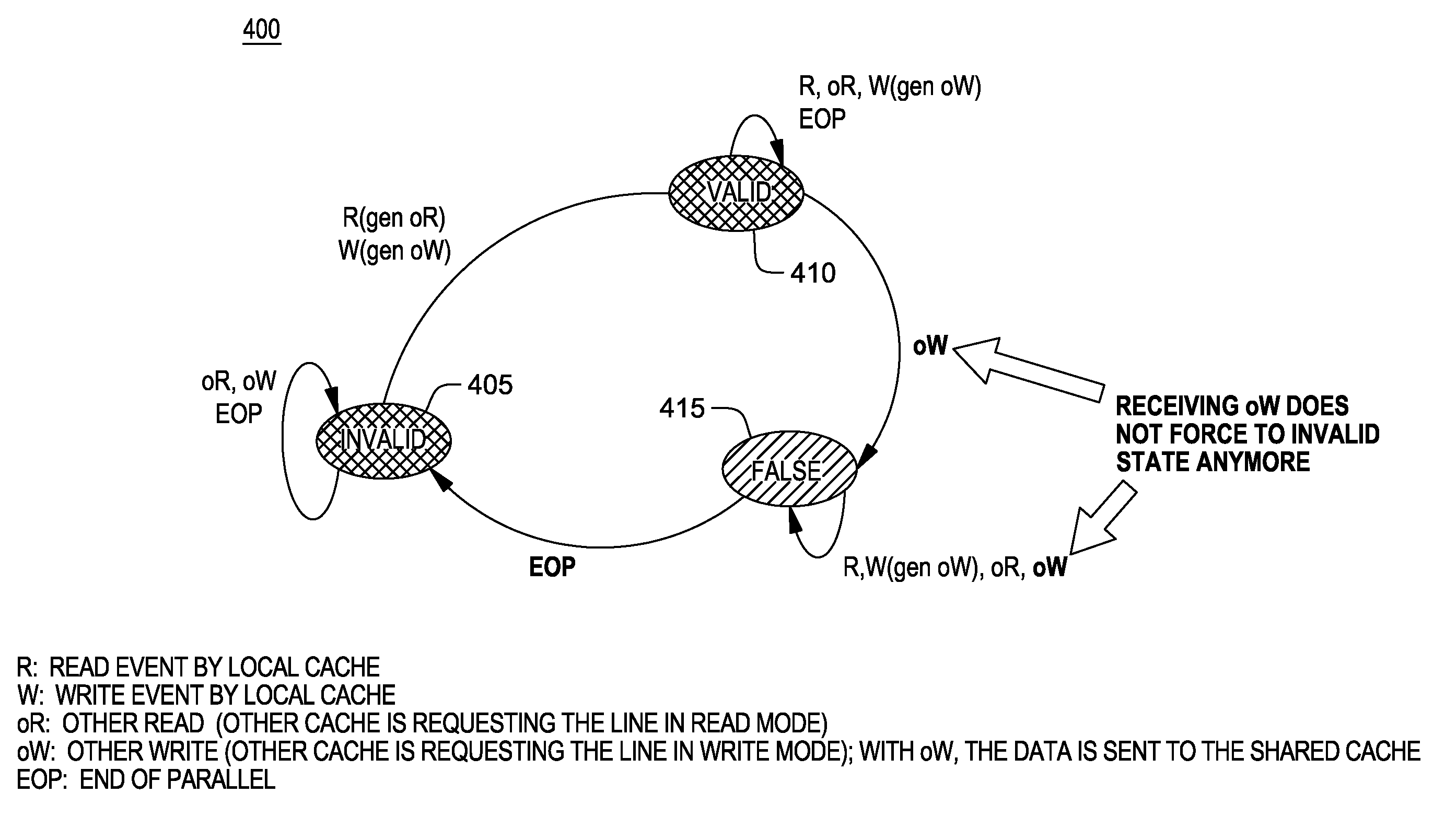

Architecture-aware field affinity estimation

Inactive | US20110302561A1 | Digital data processing details; Error detection/correction; Subject matter; Theoretical computer science

A data layout optimization may utilize affinity estimation between pairs of fields of a record in a computer program. The affinity estimation may be determined based on a trace of an execution and in view of actual processing entities performing each access to the fields. The disclosed subject matter may be configured to be aware of a specific architecture of a target computer having a plurality of processing entities, executing the program so as to provide an improved affinity estimation which may take into account both false sharing issues, spatial locality improvement and the like.

Owner:IBM CORP

System and method for pseudo-random test pattern memory allocation for processor design verification and validation

Inactive | US7584394B2 | Efficient testing; Electronic circuit testing; Error detection/correction; Page table; Verification and validation

A system and method for pseudo-randomly allocating page table memory for test pattern instructions to produce complex test scenarios during processor execution is presented. The invention described herein distributes page table memory across processors and across multiple test patterns, such as when a processor executes “n” test patterns. In addition, the page table memory is allocated using a “true” sharing mode or a “false” sharing mode. The false sharing mode provides flexibility of performing error detection checks on the test pattern results. In addition, since a processor comprises sub units such as a cache, a TLB (translation lookaside buffer), an SLB (segment lookaside buffer), an MMU (memory management unit), and data / instruction pre-fetch engines, the test patterns effectively use the page table memory to test each of the sub units.

Owner:IBM CORP

Dynamic logical data channel assignment using time-grouped allocations

Inactive | US20090150575A1 | Reducing false dependency; Improve performance; Electric digital data processing; Data transmission; Bitmap

A method, system and program are provided for dynamically allocating DMA channel identifiers to multiple DMA transfer requests that are grouped in time by virtualizing DMA transfer requests into an available DMA channel identifier using a channel bitmap listing of available DMA channels to select and set an allocated DMA channel identifier. Once the input values associated with the DMA transfer requests are mapped to the selected DMA channel identifier, the DMA transfers are performed using the selected DMA channel identifier, which is then deallocated in the channel bitmap upon completion of the DMA transfers. When there is a request to wait for completion of the data transfers, the same input values are used with the mapping to wait on the appropriate logical channel. With this method, all available logical channels can be utilized with reduced instances of false-sharing.

Owner:IBM CORP

Caching data in a cluster computing system which avoids false-sharing conflicts

Active | US8095617B2 | Memory addressing/allocation/relocation; Multiple digital computer combinations; Computerized system; False sharing

Owner:ORACLE INT CORP

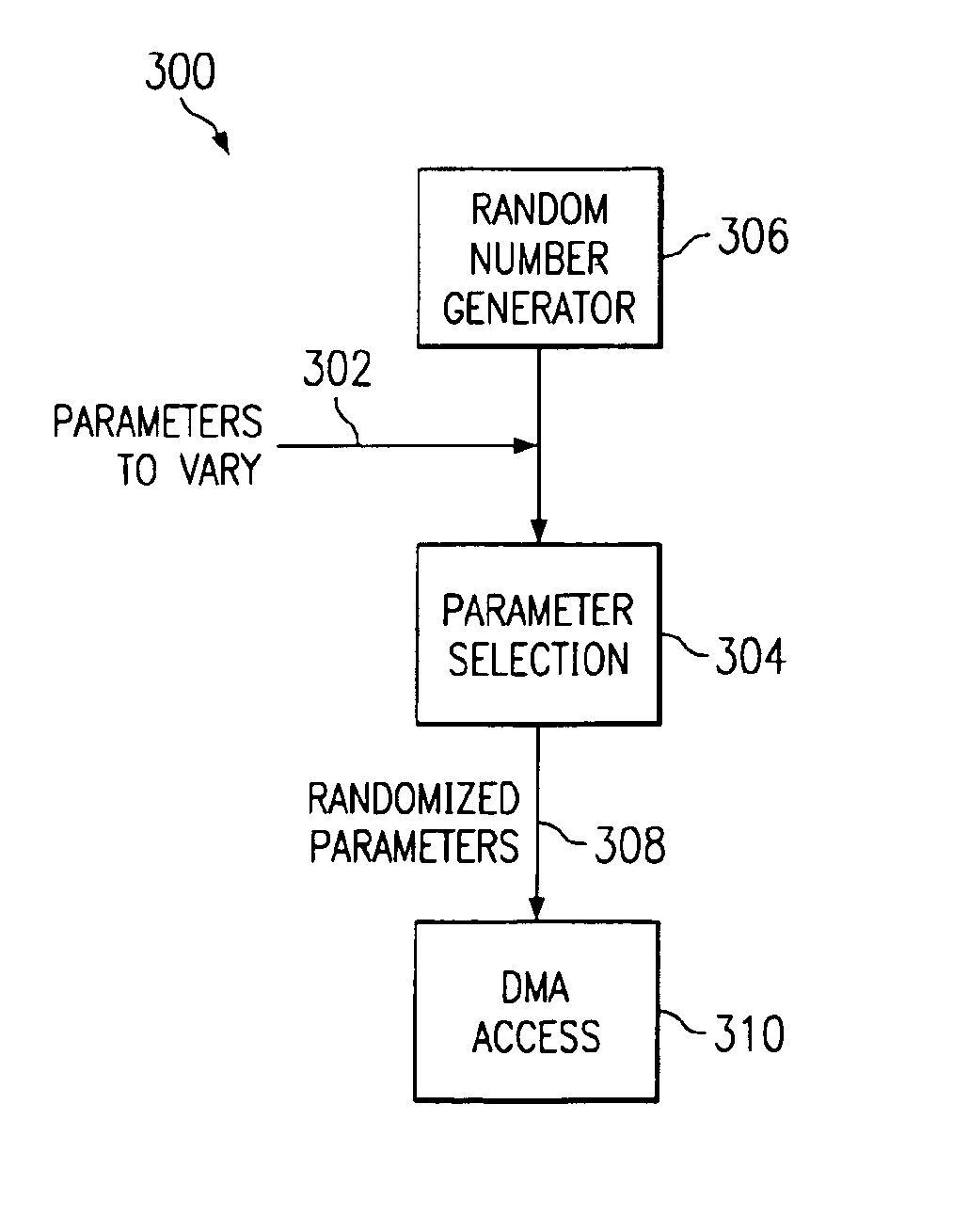

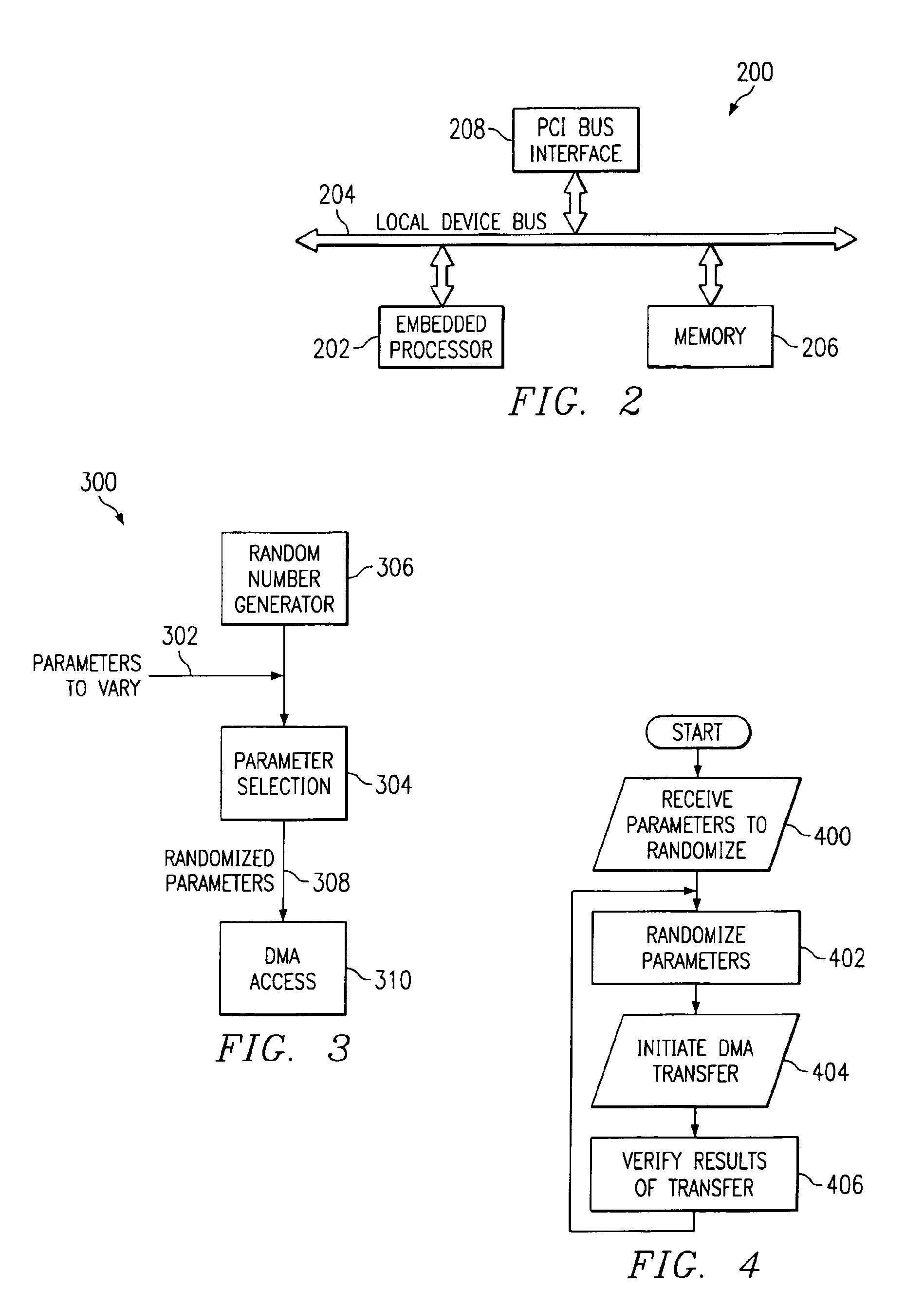

I/O stress test

The present invention provides a method, computer program product, input/output device, and computer system for stress testing the I/O subsystem of a computer system. An input/output device capable of performing repetitive direct memory access (DMA) transfers with pseudo-randomized transfer parameters is allowed to execute multiple DMA transfers with varying parameters. In this way, a single type of device may be used to simulate the effects of multiple types of devices. Multiple copies of the same I/O device may be used concurrently in a single computer system, along with processor software, to access the same portions of memory. In this way, both false sharing and true sharing may be produced.

Owner:IBM CORP

Dynamic logical data channel assignment using time-grouped allocations

Inactive | US7865631B2 | Reducing false dependency; Effective distribution; Electric digital data processing; Data transmission; Bitmap

A method, system and program are provided for dynamically allocating DMA channel identifiers to multiple DMA transfer requests that are grouped in time by virtualizing DMA transfer requests into an available DMA channel identifier using a channel bitmap listing of available DMA channels to select and set an allocated DMA channel identifier. Once the input values associated with the DMA transfer requests are mapped to the selected DMA channel identifier, the DMA transfers are performed using the selected DMA channel identifier, which is then deallocated in the channel bitmap upon completion of the DMA transfers. When there is a request to wait for completion of the data transfers, the same input values are used with the mapping to wait on the appropriate logical channel. With this method, all available logical channels can be utilized with reduced instances of false-sharing.

Owner:INT BUSINESS MASCH CORP

Dynamic logical data channel assignment using channel bitmap

Inactive | US8266337B2 | Reducing false dependency; Improve performance; Electric digital data processing; Data transmission; Computer science

A method, system and program are provided for dynamically allocating DMA channel identifiers by virtualizing DMA transfer requests into available DMA channel identifiers using a channel bitmap listing of available DMA channels to select and set an allocated DMA channel identifier. Once an input value associated with the DMA transfer request is mapped to the selected DMA channel identifier, the DMA transfer is performed using the selected DMA channel identifier, which is then deallocated in the channel bitmap upon completion of the DMA transfer. When there is a request to wait for completion of the data transfer, the same input value is used with the mapping to wait on the appropriate logical channel. With this method, all available logical channels can be utilized with reduced instances of false-sharing.

Owner:IBM CORP

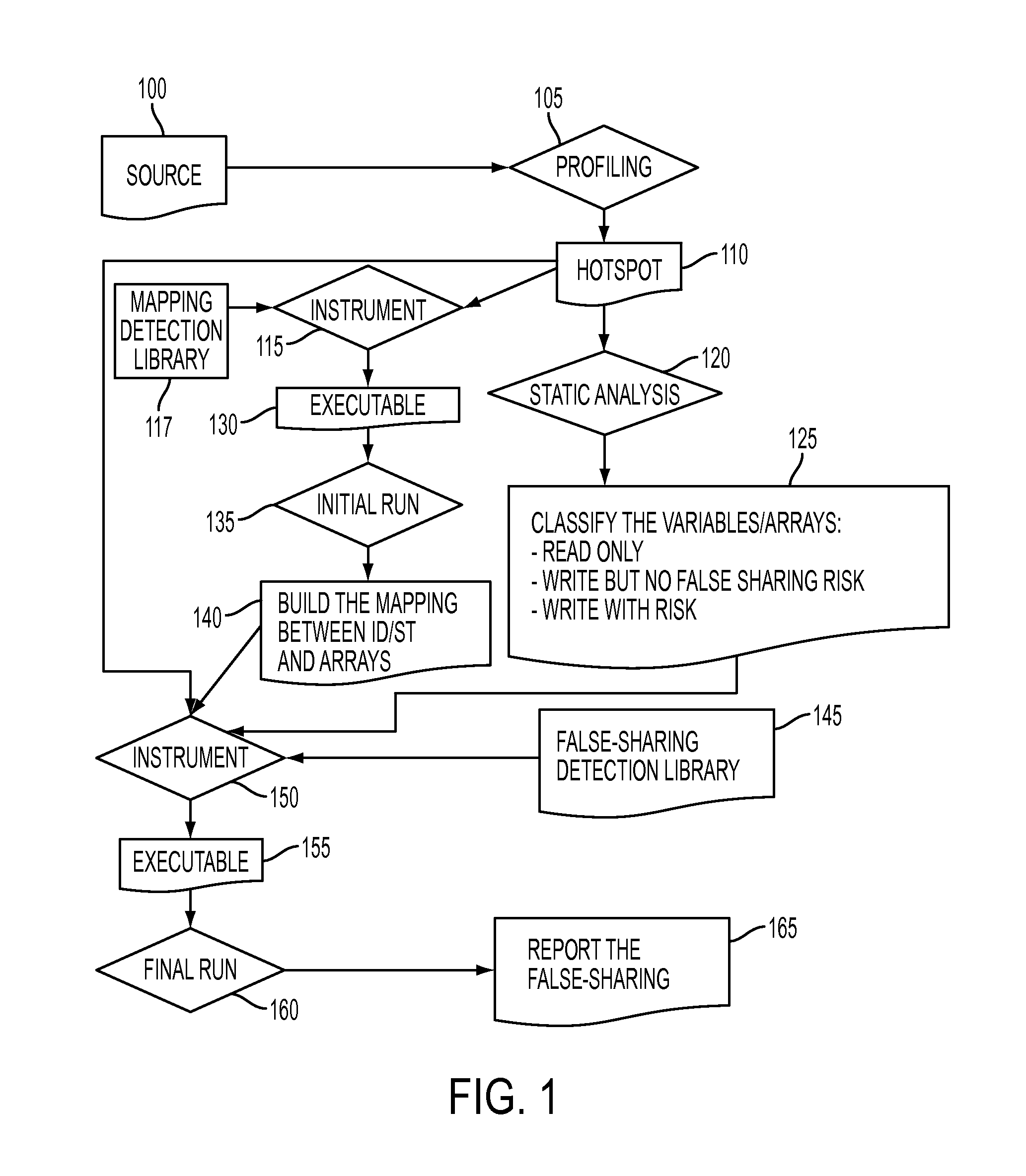

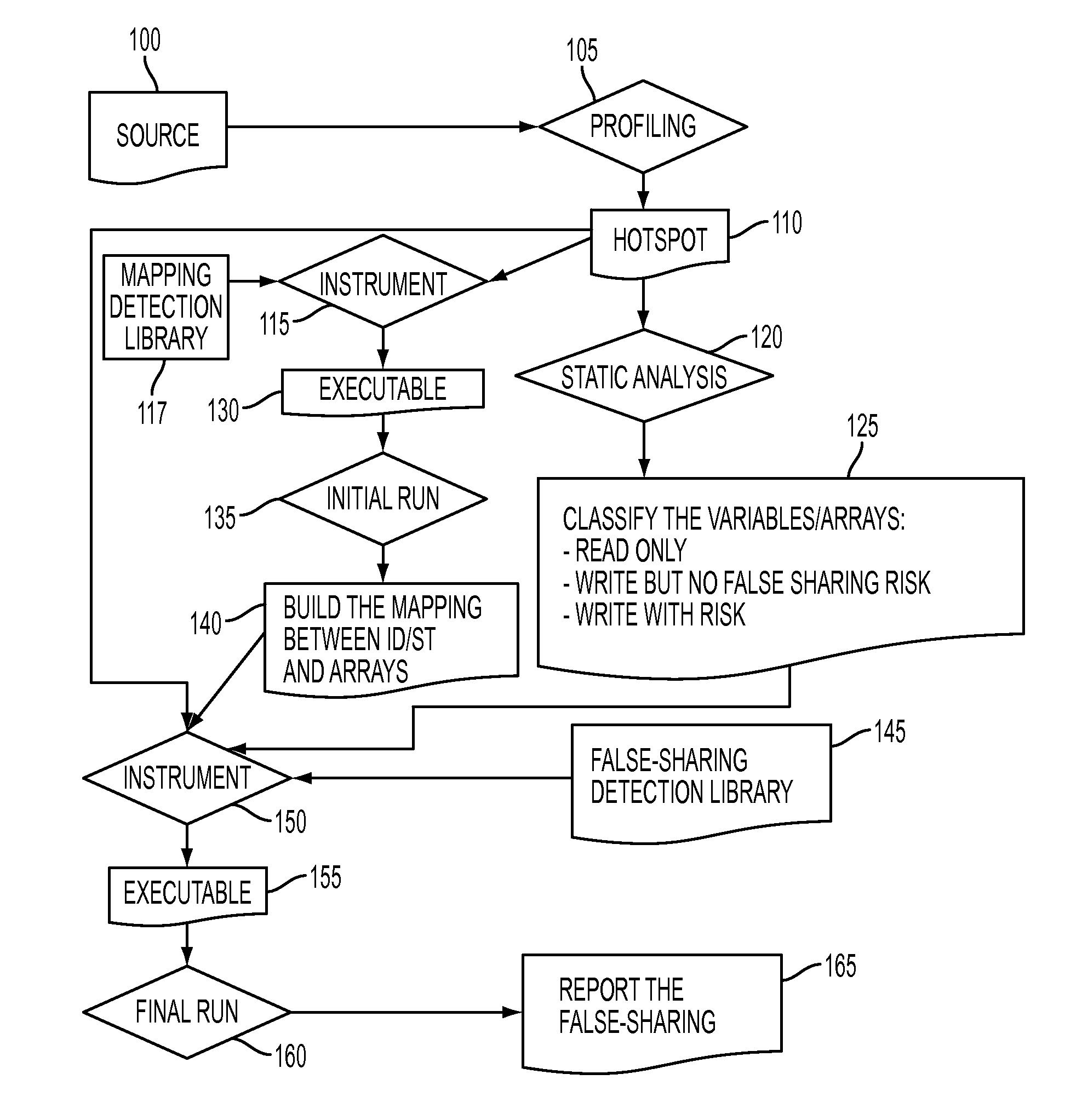

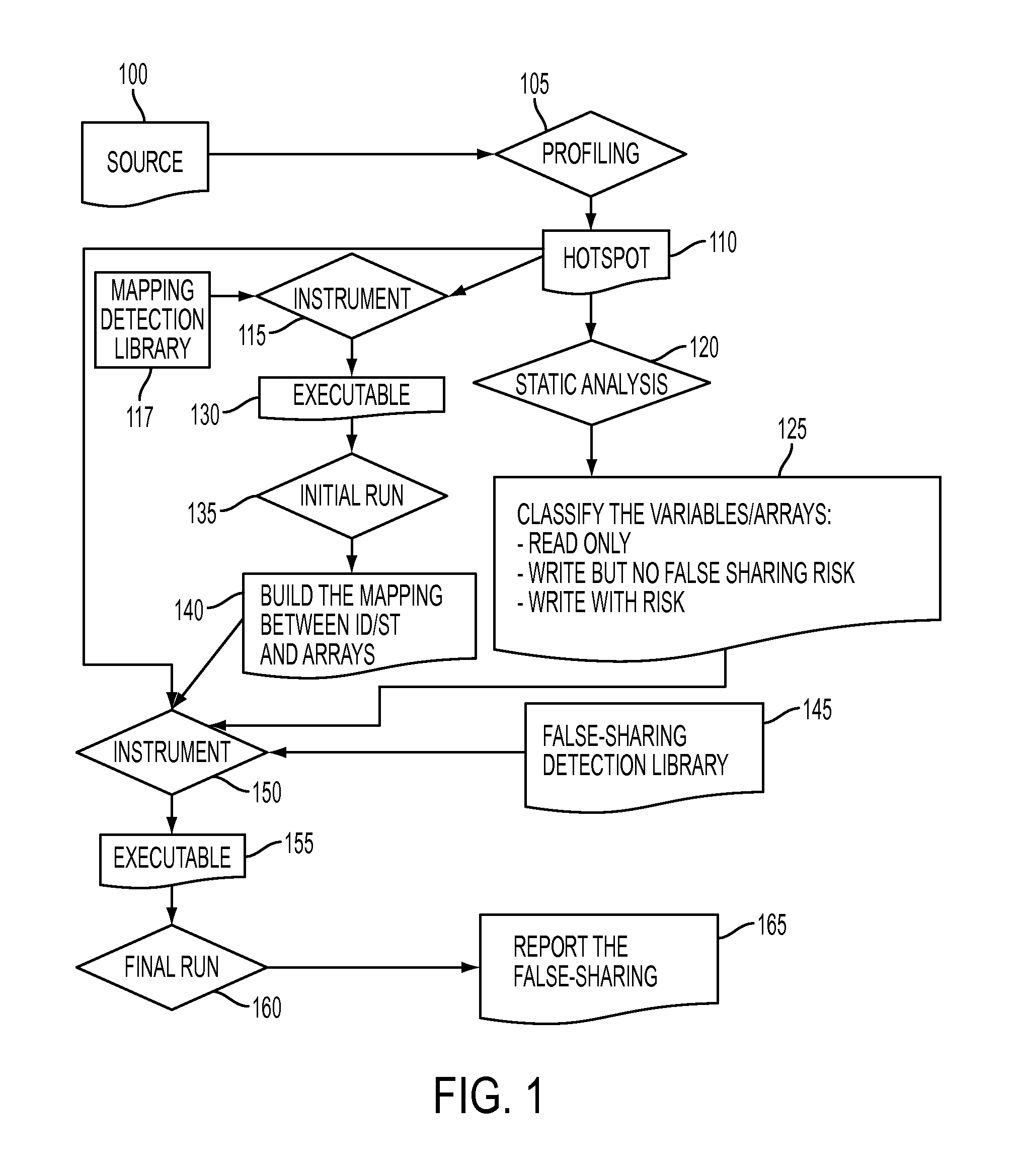

Methodology for fast detection of false sharing in threaded scientific codes

Inactive | US20140156939A1 | Error detection/correction; Memory addressing/allocation/relocation; Array data structure; Parallel computing

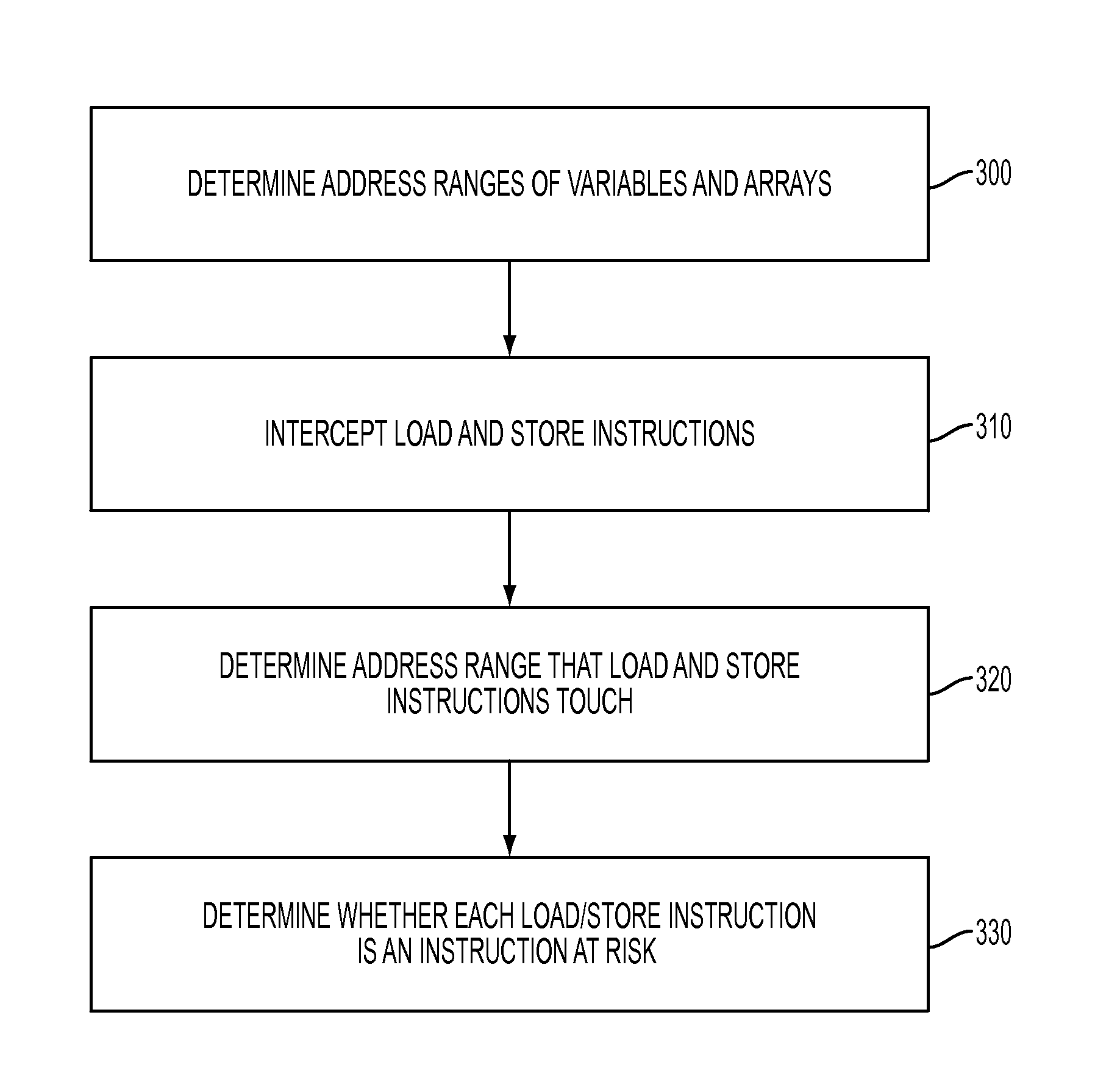

A profiling tool identifies a code region with a false sharing potential. A static analysis tool classifies variables and arrays in the identified code region. A mapping detection library correlates memory access instructions in the identified code region with variables and arrays in the identified code region while a processor is running the identified code region. The mapping detection library identifies one or more instructions at risk, in the identified code region, which are subject to an analysis by a false sharing detection library. A false sharing detection library performs a run-time analysis of the one or more instructions at risk while the processor is re-running the identified code region. The false sharing detection library determines, based on the performed run-time analysis, whether two different portions of the cache memory line are accessed by the generated binary code.

Owner:IBM CORP
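
The question the detection library answers (whether different threads touch different portions of the same cache line) can be estimated statically for the common case of a block-partitioned array loop. The sketch below assumes an OpenMP-style static schedule with contiguous chunks of ceiling-divided size and a 64-byte line; it is a rough stand-in for, not a reimplementation of, the run-time analysis in the abstract, and the function name is hypothetical.

```python
def boundary_lines(n_elems, elem_size, n_threads, line_size=64, base=0):
    """Cache lines written by more than one thread under block partitioning.

    Thread t writes a contiguous chunk of an array of n_elems elements that
    starts at byte address `base`. Any line touched by two or more threads
    is a false-sharing candidate.
    """
    chunk = -(-n_elems // n_threads)            # ceiling division
    owners = {}
    for t in range(n_threads):
        start, stop = t * chunk, min((t + 1) * chunk, n_elems)
        if start >= stop:
            continue                            # thread has no work
        first = (base + start * elem_size) // line_size
        last = (base + stop * elem_size - 1) // line_size
        for line in range(first, last + 1):
            owners.setdefault(line, set()).add(t)
    return {line: ts for line, ts in owners.items() if len(ts) > 1}
```

For example, 100 eight-byte doubles split across 4 threads gives 200-byte chunks, so `boundary_lines(100, 8, 4)` reports lines 3, 6, and 9, each shared by two neighbouring threads; with 128 doubles the 256-byte chunks align to line boundaries and no line is shared.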

Architecture-aware field affinity estimation

Inactive | US8359291B2 | Digital data processing details; Error detection/correction; Subject matter; False sharing

A data layout optimization may utilize affinity estimation between pairs of fields of a record in a computer program. The affinity estimation may be determined based on a trace of an execution and in view of actual processing entities performing each access to the fields. The disclosed subject matter may be configured to be aware of a specific architecture of a target computer having a plurality of processing entities, executing the program so as to provide an improved affinity estimation which may take into account both false sharing issues, spatial locality improvement and the like.

Owner:IBM CORP

Write-through cache optimized for dependence-free parallel regions

Inactive | US20120331232A1 | Improve parallelism; Memory addressing/allocation/relocation; Parallel computing; Computing systems

Owner:IBM CORP

Methodology for fast detection of false sharing in threaded scientific codes

Inactive | US8898648B2 | Error detection/correction; General purpose stored program computer; Array data structure; Parallel computing

A profiling tool identifies a code region with a false sharing potential. A static analysis tool classifies variables and arrays in the identified code region. A mapping detection library correlates memory access instructions in the identified code region with variables and arrays in the identified code region while a processor is running the identified code region. The mapping detection library identifies one or more instructions at risk, in the identified code region, which are subject to an analysis by a false sharing detection library. A false sharing detection library performs a run-time analysis of the one or more instructions at risk while the processor is re-running the identified code region. The false sharing detection library determines, based on the performed run-time analysis, whether two different portions of the cache memory line are accessed by the generated binary code.

Owner:INT BUSINESS MASCH CORP

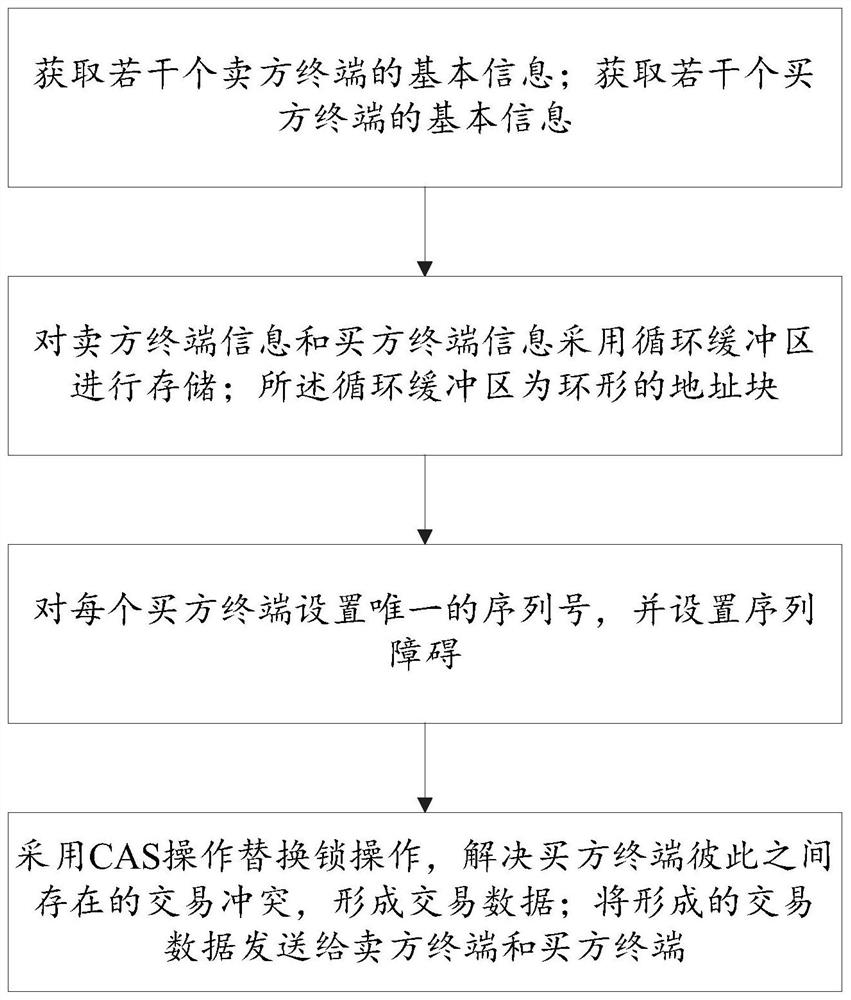

Large-scale concurrent request-oriented e-commerce transaction matching method and system

Active | CN112598517A | Solve the problem of inefficient data exchange; Finance; Interprogram communication; Transaction data; Financial transaction

The invention discloses an e-commerce transaction matching method and system for large-scale concurrent requests. The method comprises: obtaining basic information for multiple seller terminals and multiple buyer terminals; storing the seller and buyer terminal information in a circular buffer, which is a ring-shaped address block; assigning a unique sequence number to each buyer terminal and setting a sequence barrier; using CAS operations instead of locks to resolve transaction conflicts between buyer terminals and form transaction data; and sending the resulting transaction data to the seller and buyer terminals. This solves the problems of CPU false sharing, lock-based programming, and inefficient data exchange between independent threads in traditional queues. An e-commerce transaction matching method and engine are designed, and a single-threaded system built on the engine can support 5 million orders per second.

Owner:临沂市新商网络技术有限公司

© 2025 PatSnap. All rights reserved.