Information tracking method based on convolutional neural network

An information tracking method based on convolutional neural network technology, applied in neural learning methods, biological neural network models, neural architectures, etc. It addresses problems such as insufficient or uncertain tracking of the target frame's motion information and insufficient use of the temporal information in video, with the aim of tracking the target more accurately.

Embodiment Construction

[0022] The present invention will be further described below in conjunction with the accompanying drawings and embodiments.

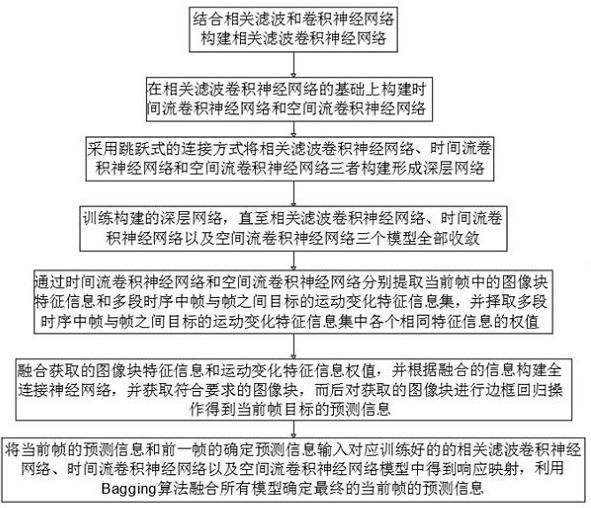

[0023] An information tracking method based on a convolutional neural network, as shown in Figure 1, includes the following steps:

[0024] Combine correlation filtering with a convolutional neural network to construct a correlation filtering convolutional neural network, in which the correlation filtering network is a single convolutional neural network layer formed from the correlation filtering algorithm;

[0025] Construct a temporal stream convolutional neural network and a spatial stream convolutional neural network on the basis of the correlation filtering convolutional neural network constructed in step 1;

[0026] Form a deep network by joining the correlation filtering convolutional neural network, the temporal stream convolutional neural network and the spatial stream convolutional neural network by means of skip connections;

[0027] Train the construct...
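
The correlation filtering step in [0024] can be illustrated with a minimal NumPy sketch. The text above does not give the filter formulation, so this example assumes the standard single-channel discriminative correlation filter (ridge regression over circular shifts, solved in closed form in the Fourier domain). The function names, the Gaussian label, and the parameters `sigma` and `lam` are hypothetical choices for illustration, not taken from the patent.

```python
import numpy as np


def gaussian_label(shape, sigma=2.0):
    """Desired response: a Gaussian peak at index (0, 0), with wrap-around."""
    h, w = shape
    ys = np.arange(h)
    xs = np.arange(w)
    ys = np.minimum(ys, h - ys)  # circular distance to row 0
    xs = np.minimum(xs, w - xs)  # circular distance to column 0
    yy, xx = np.meshgrid(ys, xs, indexing="ij")
    return np.exp(-(yy ** 2 + xx ** 2) / (2.0 * sigma ** 2))


def train_filter(x, y, lam=1e-2):
    """Closed-form ridge regression solution in the Fourier domain.

    x   : 2-D template patch (assumed single-channel features)
    y   : desired response, same shape as x
    lam : regularisation weight (assumed value)
    """
    X = np.fft.fft2(x)
    Y = np.fft.fft2(y)
    return (np.conj(X) * Y) / (np.conj(X) * X + lam)


def respond(w_hat, z):
    """Response map of the learned filter on a search patch z."""
    Z = np.fft.fft2(z)
    return np.real(np.fft.ifft2(w_hat * Z))


def locate_shift(w_hat, z):
    """Estimated circular displacement (rows, cols) of the target in z."""
    r = respond(w_hat, z)
    peak = np.unravel_index(np.argmax(r), r.shape)
    return tuple(int(i) for i in peak)
```

As a usage example, training on a patch `x` with `w_hat = train_filter(x, gaussian_label(x.shape))` and then calling `locate_shift(w_hat, np.roll(x, (3, 5), axis=(0, 1)))` should recover the circular shift applied to the patch. Because every operation here is differentiable, a filter of this kind can in principle be written as one network layer, which is the role [0024] assigns to the correlation filtering network. How the patent actually realises that layer is not shown in the excerpt.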
